If you're reading this, you've probably heard something about Kubernetes (and if not, how did you end up here?). But what exactly is Kubernetes? Is it “Production-Grade Container Orchestration”? A “Cloud-Native Operating System”? What does that even mean?

To be honest, I'm not 100% sure myself. But I think it's interesting to dig into the internals and see what actually happens in Kubernetes under its many layers of abstraction. So, just for fun, let's see what a minimal “Kubernetes cluster” actually looks like. (This will be much easier than Kubernetes The Hard Way.)

I assume you have basic knowledge of Kubernetes, Linux, and containers. Everything we're going to do here is for research and learning purposes only; do not run any of it in production!

Overview

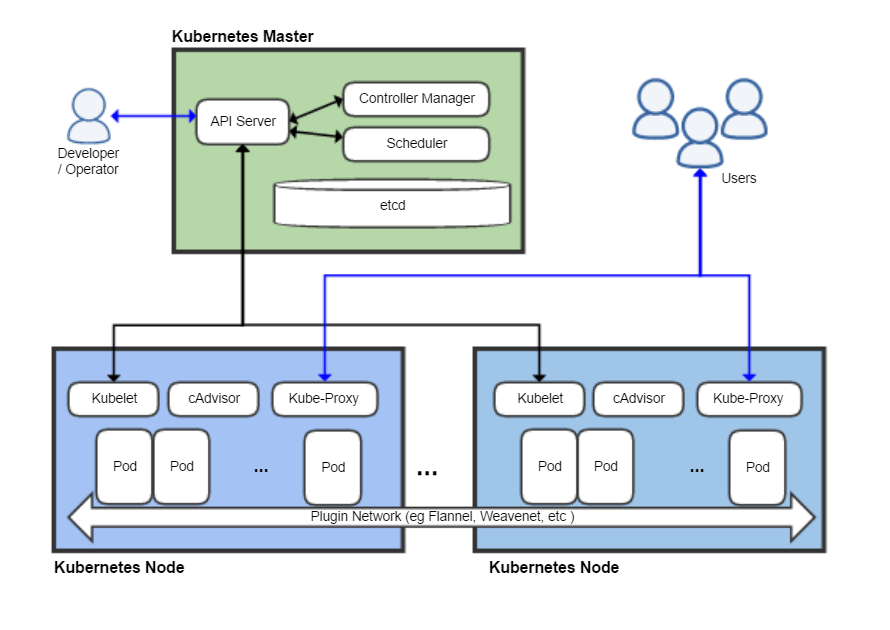

Kubernetes contains many components. According to wikipedia , the architecture looks like this:

There are at least eight components shown here, but we'll ignore most of them. I'd argue that the smallest thing that can reasonably be called Kubernetes consists of three main components:

- kubelet

- kube-apiserver (which depends on etcd, its database)

- container runtime (in this case Docker)

Let's see what the documentation (Russian, English) says about each of them. First, kubelet:

An agent that runs on every node in the cluster. It makes sure that containers are running in a pod.

Sounds simple enough. What about the container runtime?

The container runtime is a program designed to run containers.

Very informative. But if you're familiar with Docker, you should have a basic idea of what it does. (The details of how responsibilities are split between the container runtime and kubelet are actually quite subtle, and I won't go into them here.)

And the API server?

API server: a component of the Kubernetes control plane that exposes the Kubernetes API. The API server is the front end for the Kubernetes control plane.

Anyone who has ever done anything with Kubernetes has had to interact with the API, either directly or through kubectl. It is the heart of what makes Kubernetes Kubernetes: the brain that turns the mountains of YAML we all know and love (?) into working infrastructure. It seems obvious that the API should be part of our minimal configuration.

Prerequisites

- A Linux machine, virtual or physical, with root access (I'm using Ubuntu 18.04 in a virtual machine).

- That's it!

Boring installation

Docker needs to be installed on the machine we'll be using. (I'm not going to go into detail on how Docker and containers work; there are great articles out there if you're interested.) Let's just install it with apt:

```
$ sudo apt install docker.io
$ sudo systemctl start docker
```

After that, we need to get the Kubernetes binaries. In fact, to bring up our "cluster" initially we only need kubelet, since we can use kubelet to launch the other server components. To interact with our cluster once it is up and running, we will also use kubectl.

```
$ curl -L https://dl.k8s.io/v1.18.5/kubernetes-server-linux-amd64.tar.gz > server.tar.gz
$ tar xzvf server.tar.gz
$ cp kubernetes/server/bin/kubelet .
$ cp kubernetes/server/bin/kubectl .
$ ./kubelet --version
Kubernetes v1.18.5
```

What happens if we just run kubelet?

```
$ ./kubelet
F0609 04:03:29.105194    4583 server.go:254] mkdir /var/lib/kubelet: permission denied
```

kubelet has to run as root. That makes sense, since it needs to manage the entire node. Let's look at its options:

```
$ ./kubelet -h
<output omitted>
$ ./kubelet -h | wc -l
284
```

Wow, that's a lot of options! Fortunately, we only need a couple of them. Here is one of the ones we're interested in:

```
--pod-manifest-path string
    The path to the directory containing the files for the static pods, or the path to the file describing the static pods. Files starting with dots are ignored. (DEPRECATED: This parameter should be set in the configuration file passed to Kubelet via the --config option. For more information see kubernetes.io/docs/tasks/administer-cluster/kubelet-config-file)
```

This option lets us run static pods: pods that are not managed through the Kubernetes API. Static pods are rarely used, but they are very convenient for quickly bootstrapping a cluster, which is exactly what we need. We'll ignore that loud deprecation warning (again, don't run this in production!) and see if we can run a pod.

First, we'll create a directory for static pods and start kubelet:

```
$ mkdir pods
$ sudo ./kubelet --pod-manifest-path=pods
```

Then, in another terminal (or tmux window, or wherever), we'll create a pod manifest:

```
$ cat <<EOF > pods/hello.yaml
apiVersion: v1
kind: Pod
metadata:
  name: hello
spec:
  containers:
  - image: busybox
    name: hello
    command: ["echo", "hello world!"]
EOF
```

kubelet starts printing some warnings, and it looks like nothing is happening. But that's not the case! Let's take a look at Docker:

```
$ sudo docker ps -a
CONTAINER ID   IMAGE                  COMMAND                 CREATED          STATUS                      PORTS   NAMES
8c8a35e26663   busybox                "echo 'hello world!'"   36 seconds ago   Exited (0) 36 seconds ago           k8s_hello_hello-mink8s_default_ab61ef0307c6e0dee2ab05dc1ff94812_4
68f670c3c85f   k8s.gcr.io/pause:3.2   "/pause"                2 minutes ago    Up 2 minutes                        k8s_POD_hello-mink8s_default_ab61ef0307c6e0dee2ab05dc1ff94812_0
$ sudo docker logs k8s_hello_hello-mink8s_default_ab61ef0307c6e0dee2ab05dc1ff94812_4
hello world!
```

kubelet read the pod manifest and told Docker to start a couple of containers according to our spec. (If you're curious about the “pause” container, it's a bit of Kubernetes trickery; see this blog post for details.) kubelet will run our busybox container with the specified command and keep restarting it indefinitely until the static pod is removed.
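Since the static pod mechanism is nothing more than files in a directory, it is easy to drive from a script. The following is a minimal Python sketch, not part of the original setup, that writes an equivalent single-container manifest into the pods directory. It assumes kubelet accepts JSON manifests (JSON is a subset of YAML, so this should hold, but treat it as an assumption), and write_static_pod is a hypothetical helper name.

```python
import json
from pathlib import Path


def write_static_pod(manifest_dir, name, image, command):
    """Write a single-container static pod manifest into manifest_dir.

    kubelet watches the directory given by --pod-manifest-path and runs the
    pods it finds there. The manifest is written as JSON, assuming kubelet
    parses it like YAML (JSON is valid YAML).
    """
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {"name": name, "image": image, "command": list(command)},
            ],
        },
    }
    path = Path(manifest_dir) / f"{name}.json"
    # Write to a dot-prefixed temp file first, then rename it into place.
    # kubelet ignores files starting with a dot, so it never sees a
    # half-written manifest; the rename is atomic on the same filesystem.
    tmp = path.with_name("." + path.name + ".tmp")
    tmp.write_text(json.dumps(pod, indent=2))
    tmp.replace(path)
    return path
```

For example, write_static_pod("pods", "hello-json", "busybox", ["echo", "hello world!"]) should create pods/hello-json.json next to hello.yaml; deleting that file again should make kubelet stop the pod.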

Congratulations! We've just come up with one of the most convoluted ways to print text to a terminal!

Run etcd

Our ultimate goal is to run the Kubernetes API, but for that we first need to run etcd. Let's start a minimal etcd cluster by putting its manifest in the pods directory (for example, pods/etcd.yaml):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: etcd
  namespace: kube-system
spec:
  containers:
  - name: etcd
    command:
    - etcd
    - --data-dir=/var/lib/etcd
    image: k8s.gcr.io/etcd:3.4.3-0
    volumeMounts:
    - mountPath: /var/lib/etcd
      name: etcd-data
  hostNetwork: true
  volumes:
  - hostPath:
      path: /var/lib/etcd
      type: DirectoryOrCreate
    name: etcd-data
```

If you've ever worked with Kubernetes, these YAML files should look familiar. There are only two things to note here:

- We mounted the host directory /var/lib/etcd into the pod so that the etcd data survives a restart (otherwise the cluster state would be wiped every time the pod restarts, which would be bad even for a minimal Kubernetes installation).

- We set hostNetwork: true. Unsurprisingly, this makes etcd use the host's network instead of the pod network (which will make it easier for the API server to find the etcd cluster).
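Because etcd shares the host's network namespace, any process on the node can reach it on localhost:2379. As a hedged illustration, here is the same version check scripted in Python with only the standard library; etcd_version and parse_etcd_version are hypothetical helper names, and the endpoint is assumed to be plain HTTP without authentication, as in this setup.

```python
import json
import urllib.request


def parse_etcd_version(body):
    """Parse etcd's /version response into (server_version, cluster_version)."""
    info = json.loads(body)
    return info["etcdserver"], info["etcdcluster"]


def etcd_version(endpoint="http://127.0.0.1:2379", timeout=5):
    """Query etcd's /version endpoint over plain HTTP (no TLS, no auth)."""
    with urllib.request.urlopen(f"{endpoint}/version", timeout=timeout) as resp:
        return parse_etcd_version(resp.read().decode("utf-8"))
```

Splitting parsing from fetching keeps the JSON handling testable without a running etcd.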

A simple check shows that etcd is indeed running on localhost and saving its data to disk:

```
$ curl localhost:2379/version
{"etcdserver":"3.4.3","etcdcluster":"3.4.0"}
$ sudo tree /var/lib/etcd/
/var/lib/etcd/
└── member
    ├── snap
    │   └── db
    └── wal
        ├── 0.tmp
        └── 0000000000000000-0000000000000000.wal
```

Launching the API Server

Starting the Kubernetes API server is even easier. The only flag we need to pass, --etcd-servers, does what you'd expect:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - name: kube-apiserver
    command:
    - kube-apiserver
    - --etcd-servers=http://127.0.0.1:2379
    image: k8s.gcr.io/kube-apiserver:v1.18.5
  hostNetwork: true
```

Put this YAML file in the pods directory and the API server will start. A quick check with curl shows that the Kubernetes API is listening on port 8080 with completely open access; no authentication required!

```
$ curl localhost:8080/healthz
ok
$ curl localhost:8080/api/v1/pods
{
  "kind": "PodList",
  "apiVersion": "v1",
  "metadata": {
    "selfLink": "/api/v1/pods",
    "resourceVersion": "59"
  },
  "items": []
}
```

(Again, don't run this in production! I was a little surprised that the default setting is so insecure, but I'm guessing it's for ease of development and testing.)
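Since the API is just HTTP and JSON, anything that can make an HTTP request can talk to it, not only kubectl. A minimal Python sketch, assuming the unauthenticated endpoint at 127.0.0.1:8080 used in this setup (list_pods and pod_names are hypothetical names):

```python
import json
import urllib.request

# Insecure, unauthenticated endpoint from this setup; an assumption elsewhere.
API = "http://127.0.0.1:8080"


def pod_names(pod_list):
    """Return "namespace/name" for every item in a PodList document."""
    return [
        f'{item["metadata"].get("namespace", "default")}/{item["metadata"]["name"]}'
        for item in pod_list.get("items", [])
    ]


def list_pods(api=API, namespace=None, timeout=5):
    """GET the pod list, either cluster-wide or for a single namespace."""
    path = f"/api/v1/namespaces/{namespace}/pods" if namespace else "/api/v1/pods"
    with urllib.request.urlopen(api + path, timeout=timeout) as resp:
        return pod_names(json.load(resp))
```

Against the empty cluster above, list_pods() should return an empty list, mirroring the empty items array in the curl output.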

And, as a pleasant surprise, kubectl works out of the box without any extra configuration!

```
$ ./kubectl version
Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.5", GitCommit:"e6503f8d8f769ace2f338794c914a96fc335df0f", GitTreeState:"clean", BuildDate:"2020-06-26T03:47:41Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.5", GitCommit:"e6503f8d8f769ace2f338794c914a96fc335df0f", GitTreeState:"clean", BuildDate:"2020-06-26T03:39:24Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}
$ ./kubectl get pod
No resources found in default namespace.
```

Problem

But if you dig a little deeper, it looks like something is wrong:

```
$ ./kubectl get pod -n kube-system
No resources found in kube-system namespace.
```

The static pods we created are gone! In fact, our kubelet node doesn't show up at all:

```
$ ./kubectl get nodes
No resources found in default namespace.
```

What's going on? If you remember, a few paragraphs ago we started kubelet with an extremely simple set of command-line flags, so kubelet doesn't know how to contact the API server and report its state. After digging through the documentation, we find the relevant flag:

```
--kubeconfig string
    Path to a kubeconfig file, specifying how to connect to the API server. Providing --kubeconfig enables API server mode, omitting --kubeconfig enables standalone mode.
```

So all this time, without realizing it, we were running kubelet in standalone mode. (If we were being pedantic, we could call kubelet's standalone mode “minimum viable Kubernetes”, but that would be very boring.) For the "real" configuration to work, we need to pass a kubeconfig file to kubelet so that it knows how to talk to the API server. Fortunately, this is pretty straightforward (since we don't have to deal with authentication or certificates):

```yaml
apiVersion: v1
kind: Config
clusters:
- cluster:
    server: http://127.0.0.1:8080
  name: mink8s
contexts:
- context:
    cluster: mink8s
  name: mink8s
current-context: mink8s
```

Save this as kubeconfig.yaml, kill the kubelet process, and restart it with the required flags:

```
$ sudo ./kubelet --pod-manifest-path=pods --kubeconfig=kubeconfig.yaml
```

(By the way, if you try to reach the API with curl while kubelet is stopped, you'll find that it still works! kubelet is not a "parent" of its pods in the way Docker is; it's more like a "management daemon". Containers managed by kubelet keep running until kubelet stops them.)
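A kubeconfig is plain structured data, so it can also be generated instead of written by hand. The sketch below uses hypothetical helper names (nothing that kubectl or kubelet provides) to build the same single-cluster, single-context configuration in Python and write it as JSON, on the assumption that, JSON being valid YAML, kubelet and kubectl load it just like kubeconfig.yaml.

```python
import json


def minimal_kubeconfig(server="http://127.0.0.1:8080", name="mink8s"):
    """Build a kubeconfig with one cluster and one context and no credentials."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{"name": name, "cluster": {"server": server}}],
        "contexts": [{"name": name, "context": {"cluster": name}}],
        "current-context": name,
    }


def write_kubeconfig(path, **kwargs):
    """Serialize the config as JSON (assumed to be accepted as YAML)."""
    with open(path, "w") as f:
        json.dump(minimal_kubeconfig(**kwargs), f, indent=2)
```

Note that there is no users section at all: with an unauthenticated API server there are no credentials to record.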

After a few minutes, kubectl should show us the pods and nodes, as expected:

```
$ ./kubectl get pods -A
NAMESPACE     NAME                    READY   STATUS             RESTARTS   AGE
default       hello-mink8s            0/1     CrashLoopBackOff   261        21h
kube-system   etcd-mink8s             1/1     Running            0          21h
kube-system   kube-apiserver-mink8s   1/1     Running            0          21h
$ ./kubectl get nodes -owide
NAME     STATUS   ROLES    AGE   VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION       CONTAINER-RUNTIME
mink8s   Ready    <none>   21h   v1.18.5   10.70.10.228   <none>        Ubuntu 18.04.4 LTS   4.15.0-109-generic   docker://19.3.6
```

This time, let's really congratulate ourselves (I know I already did once): we have a minimal Kubernetes "cluster" running with a fully functional API!
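The node's STATUS column is derived from the Ready condition that kubelet now reports to the API server. A small Python sketch of reading that condition directly from /api/v1/nodes, again assuming the unauthenticated endpoint on port 8080 (node_ready and ready_nodes are hypothetical names):

```python
import json
import urllib.request


def node_ready(node):
    """True if the Node object's Ready condition has status "True"."""
    for cond in node.get("status", {}).get("conditions", []):
        if cond.get("type") == "Ready":
            return cond.get("status") == "True"
    return False  # no Ready condition reported (yet)


def ready_nodes(api="http://127.0.0.1:8080", timeout=5):
    """Names of all nodes that currently report Ready=True."""
    with urllib.request.urlopen(f"{api}/api/v1/nodes", timeout=timeout) as resp:
        node_list = json.load(resp)
    return [n["metadata"]["name"] for n in node_list.get("items", []) if node_ready(n)]
```

Before the kubelet restart with --kubeconfig, the node list was empty, so ready_nodes() would have returned an empty list as well.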

Running a pod

Now let's see what the API is capable of. Let's start with an nginx pod:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - image: nginx
    name: nginx
```

Here we get a rather interesting error:

```
$ ./kubectl apply -f nginx.yaml
Error from server (Forbidden): error when creating "nginx.yaml": pods "nginx" is forbidden: error looking up service account default/default: serviceaccount "default" not found
$ ./kubectl get serviceaccounts
No resources found in default namespace.
```

Here we see just how incomplete our Kubernetes environment is: there are no service accounts. Let's create a service account manually, try again, and see what happens:

```
$ cat <<EOS | ./kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: default
  namespace: default
EOS
serviceaccount/default created
$ ./kubectl apply -f nginx.yaml
Error from server (ServerTimeout): error when creating "nginx.yaml": No API token found for service account "default", retry after the token is automatically created and added to the service account
```

Even though we created the service account manually, no authentication token was generated. As we keep experimenting with our minimalist “cluster”, we'll find that most of the useful things that normally happen automatically are missing. The Kubernetes API server is fairly minimalistic; most of the heavy lifting and automation happens in various controllers and background jobs that aren't running yet.
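Under the hood, kubectl apply is just talking HTTP to the API server. As a rough sketch (kubectl apply actually does more than this, such as recording the last-applied configuration and patching objects that already exist), creating the same ServiceAccount directly amounts to a POST to the namespace's serviceaccounts collection. The helper name is hypothetical; its automount argument maps to the automountServiceAccountToken field.

```python
import json
import urllib.request


def service_account_request(api, namespace, name, automount=None):
    """Build (but do not send) a POST request that creates a ServiceAccount."""
    body = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": name, "namespace": namespace},
    }
    if automount is not None:
        # Only set the field when explicitly requested.
        body["automountServiceAccountToken"] = automount
    return urllib.request.Request(
        f"{api}/api/v1/namespaces/{namespace}/serviceaccounts",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending it with urllib.request.urlopen(service_account_request("http://127.0.0.1:8080", "default", "default")) should create the object; if it already exists, the API server answers with HTTP 409 Conflict, which urlopen raises as an HTTPError.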

We can work around this problem by setting automountServiceAccountToken to false on the service account (we won't be using it anyway):

```
$ cat <<EOS | ./kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: default
  namespace: default
automountServiceAccountToken: false
EOS
serviceaccount/default configured
$ ./kubectl apply -f nginx.yaml
pod/nginx created
$ ./kubectl get pods
NAME    READY   STATUS    RESTARTS   AGE
nginx   0/1     Pending   0          13m
```

Finally, the pod has appeared! But it won't actually start, because we don't have a scheduler, another important Kubernetes component. Again, we see that the Kubernetes API is surprisingly dumb: when you create a pod through the API, it registers the pod but makes no attempt to figure out which node to run it on.
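At its core, the missing scheduler's job is to fill in that node assignment. The real kube-scheduler does this by creating a Binding for the pod through the pods/binding subresource. Below is a deliberately naive Python sketch of that idea for our single-node setup: it binds every pod without a nodeName to one fixed node, with no filtering or scoring at all. The function names are hypothetical, and this is an illustration under those assumptions, not a replacement for kube-scheduler.

```python
import json
import urllib.request


def unscheduled(pod_list):
    """Pods the API server has accepted but nobody has assigned to a node."""
    return [p for p in pod_list.get("items", []) if not p.get("spec", {}).get("nodeName")]


def binding(pod, node_name):
    """A v1 Binding object that assigns `pod` to `node_name`."""
    meta = pod["metadata"]
    return {
        "apiVersion": "v1",
        "kind": "Binding",
        "metadata": {"name": meta["name"], "namespace": meta.get("namespace", "default")},
        "target": {"apiVersion": "v1", "kind": "Node", "name": node_name},
    }


def schedule_all(api, node_name, timeout=5):
    """Bind every unscheduled pod to the one node we have."""
    with urllib.request.urlopen(f"{api}/api/v1/pods", timeout=timeout) as resp:
        pods = json.load(resp)
    for pod in unscheduled(pods):
        b = binding(pod, node_name)
        ns, name = b["metadata"]["namespace"], b["metadata"]["name"]
        req = urllib.request.Request(
            f"{api}/api/v1/namespaces/{ns}/pods/{name}/binding",
            data=json.dumps(b).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        urllib.request.urlopen(req, timeout=timeout).close()
```

In principle, schedule_all("http://127.0.0.1:8080", "mink8s") would bind the pending nginx pod in place; the manual approach below reaches the same result by putting nodeName in the manifest.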

You don't actually need a scheduler to run a pod. You can assign the node manually in the manifest using the nodeName field:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - image: nginx
    name: nginx
  nodeName: mink8s
```

(Replace mink8s with your node's hostname.) After deleting and re-applying the pod, we can see that nginx has started and is listening on an internal IP address:

```
$ ./kubectl delete pod nginx
pod "nginx" deleted
$ ./kubectl apply -f nginx.yaml
pod/nginx created
$ ./kubectl get pods -owide
NAME    READY   STATUS    RESTARTS   AGE   IP           NODE     NOMINATED NODE   READINESS GATES
nginx   1/1     Running   0          30s   172.17.0.2   mink8s   <none>           <none>
$ curl -s 172.17.0.2 | head -4
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
```

To check that networking between pods works, we can run curl from another pod:

```
$ cat <<EOS | ./kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl
spec:
  containers:
  - image: curlimages/curl
    name: curl
    command: ["curl", "172.17.0.2"]
  nodeName: mink8s
EOS
pod/curl created
$ ./kubectl logs curl | head -6
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
```

It's pretty fun to poke around this environment and see what works and what doesn't. I found that ConfigMaps and Secrets work as expected, but Services and Deployments don't.

Success!

This post is getting long, so I'm going to declare victory and say that this is a viable configuration to call “Kubernetes”. To sum up: four binaries, five command-line flags, and “just” 45 lines of YAML (not much by Kubernetes standards), and we have quite a few things working:

- Pods are managed using the regular Kubernetes API (with a few hacks)

- You can pull and manage public container images

- Pods stay alive and restart automatically

- Networking between pods within a single node works pretty well

- ConfigMaps, Secrets, and simple storage mounts work as expected

But most of the things that make Kubernetes really useful are still missing, for example:

- Pod scheduling

- Authentication / Authorization

- Multiple nodes

- Service networking

- Cluster-internal DNS

- Controllers for service accounts, deployments, cloud provider integrations, and most of the other cool things Kubernetes brings

So what do we actually end up with? The Kubernetes API, running on its own, is really just a platform for container automation. It doesn't do much by itself (the real work is done by the various controllers and operators that use the API), but it provides a consistent environment for automation.
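Those controllers broadly follow the same pattern: observe the current state through the API, compare it with the desired state, and issue API calls to close the gap. A toy Python version of just the comparison step, loosely modeled on what a ReplicaSet-style controller has to compute, might look like the following. It is a hypothetical sketch: the real controller also deals with label selectors, owner references, and smarter choices of which pods to delete.

```python
def reconcile(desired, actual):
    """Compare a desired replica count with the names of existing pods.

    Returns (to_create, to_delete): how many new pods to create, and which
    existing pod names to delete, so that exactly `desired` pods remain.
    """
    actual = sorted(actual)  # deterministic choice of pods to keep
    if len(actual) < desired:
        return desired - len(actual), []
    return 0, actual[desired:]
```

For instance, reconcile(3, ["web-a"]) returns (2, []), meaning two pods are missing; a real controller would then POST those pods to the API and re-run the comparison whenever it observes a change.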
