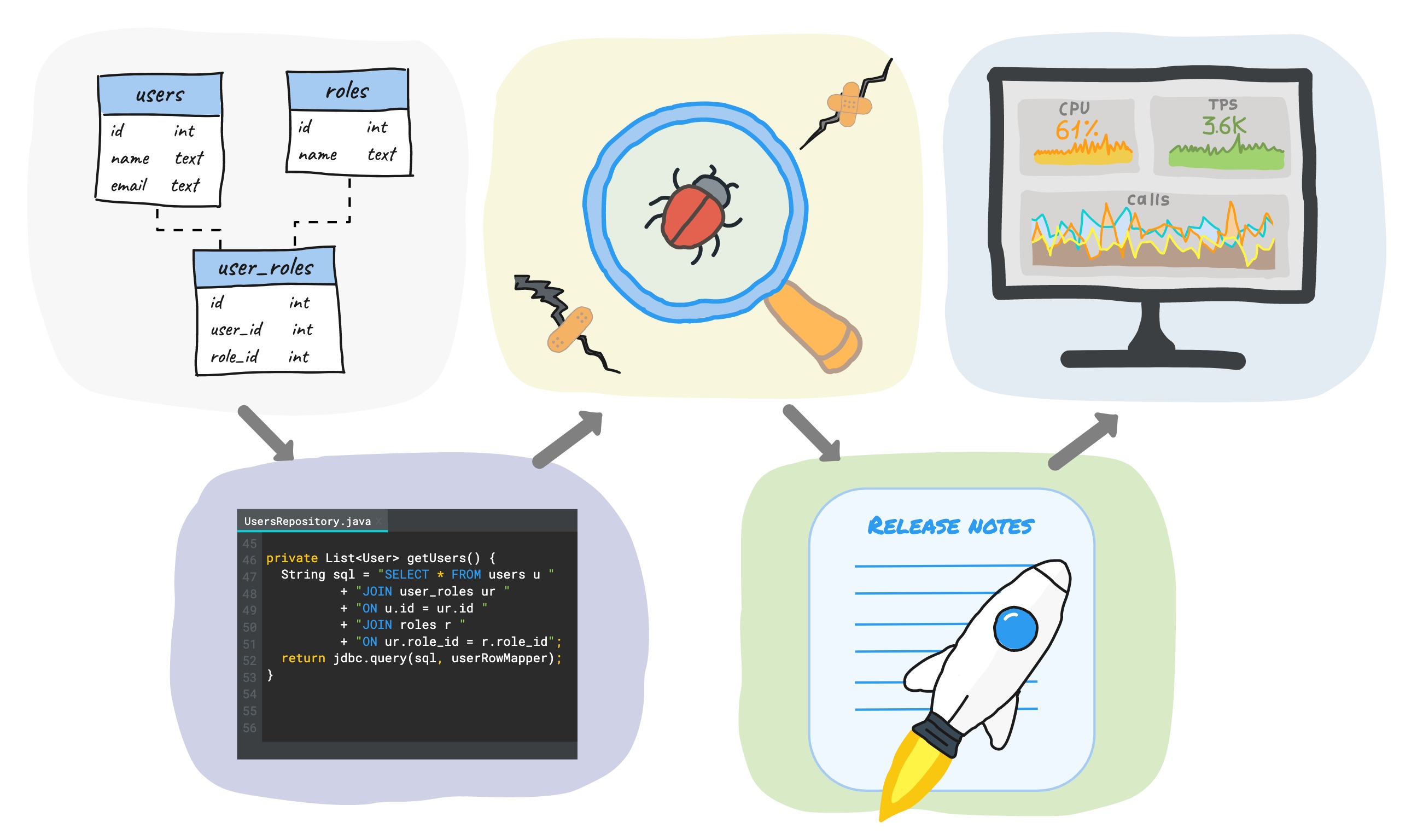

When you develop new functionality using a database, the development cycle usually includes (but is not limited to) the following stages:

Writing SQL migrations → writing code → testing → release → monitoring.

In this article, I want to share some practical advice on how you can reduce the time of this cycle at each stage, while not reducing the quality, but rather even increasing it.

Since we work with PostgreSQL at the company, and write the server code in Java, the examples will be based on this stack, although most of the ideas do not depend on the database and programming language used.

SQL migration

The first stage of development after design is writing the SQL migration. The main advice - do not make any manual changes to the data schema, but always do it through scripts and store them in one place.

In our company, developers write SQL migrations themselves, so all migrations are stored in a repository with the main code. In some companies, database administrators are involved in changing the schema, in which case the migration registry is somewhere with them. One way or another, this approach brings the following advantages:

- You can always easily create a new base from scratch or upgrade an existing one to the current version. This allows you to quickly deploy new test environments and local development environments.

- All bases have the same layout - no surprises in service.

- There is a history of all changes (versioning).

There are quite a few ready-made tools for automating this process, both commercial and free: flyway , liquibase , sqitch , etc. In this article I will not compare and choose the best tool - this is a separate large topic, and you can find many articles on it ...

We use flyway, so here's a little information about it:

- There are 2 types of migrations: sql-based and java-based

- SQL migrations are immutable (immutable). After the first execution, the SQL migration cannot be changed. Flyway calculates a checksum for the contents of the migration file and verifies it on every run. Additional manual manipulations are required to make Java migrations immutable .

- flyway_schema_history ( schema_version). , , , .

According to our internal agreements, all data schema changes are made only through SQL migrations. Their immutability ensures that we can always get an actual schema that is completely identical to all environments.

Java migrations are used only for DML , when it is impossible to write in pure SQL. For us, a typical example of such a situation is migrations to transfer data to Postgres from another database (we are moving from Redis to Postgres, but this is a completely different story). Another example is updating the data of a large table, which is performed in several transactions to minimize the table lock time. It is worth saying that from the 11th version of Postgres this can be done using SQL procedures on plpgsql.

When the Java code is outdated, the migration can be removed in order not to produce legacy (the Java migration class itself remains, but inside it is empty). In our country, this can happen no earlier than a month after the migration to production - we believe that this is enough time for all test environments and local development environments to be updated. It should be noted that since Java migrations are used only for DML, deleting them does not affect the creation of new databases from scratch in any way.

An important nuance for those using pg_bouncer

Flyway applies a lock during migration to prevent simultaneous execution of multiple migrations. Simplified, it works like this:

- lock capture occurs

- performing migrations in separate transactions

- unblocking.

For Postgres, it uses advisory locks in session mode, which means that for it to work correctly, it is necessary that the application server be running on the same connection during the lock capture and release. If you use pg_bouncer in transactional mode (which is the most common) or in single request mode, then for each transaction it may return a new connection and flyway will not be able to release an established lock.

To solve this problem, we use a separate small connection pool on pg_bouncer in session mode, which is intended only for migrations. From the side of the application, there is also a separate pool that contains 1 connection and it is closed by timeout after the migration, so as not to waste resources.

Coding

The migration has been created, now we are writing the code.

There are 3 approaches to working with the database from the application side:

- Using ORM (if we talk about Java, then hibernate is de facto the standard)

- Using plain sql + jdbcTemplate etc.

- Using DSL libraries.

Using ORM allows you to reduce the requirements for knowledge of SQL - a lot is generated automatically:

- data schema can be created from xml-description or Java-entity available in the code

- object relationships are defined using a declarative description - ORM will make joins for you

- when using Spring Data JPA, even more tricky queries can also be generated automatically by the repository method signature .

Another "bonus" is the presence of data caching out of the box (for hibernate, these are 3 levels of caches).

However, it is important to note that ORM, like any other powerful tool, requires certain qualifications when using it. Without proper configuration, the code will most likely work, but far from optimal.

The opposite is to write the SQL by hand. This allows you to have complete control over the requests - exactly what you wrote is executed, no surprises. But, obviously, this increases the amount of manual labor and increases the requirements for the qualifications of developers.

DSL libraries

Approximately in the middle between these approaches there is another one, which consists in using DSL libraries ( jOOQ , Querydsl , etc.). They are usually much lighter than ORMs, but more convenient than completely manual database manipulation. The use of DSLs is not that common, so this article will take a quick look at this approach.

We will talk about one of the libraries - jOOQ . What does she offer:

- database inspection and auto-generation of classes

- fluent API for writing requests.

jOOQ is not an ORM - there is no auto-generation of queries, no caching, but at the same time, some of the problems of a completely manual approach are closed:

- classes for tables, views, functions, etc. database objects are generated automatically

- requests are written in Java, this guarantees type safe - a syntactically incorrect request or a request with a parameter of the wrong type will not compile - your IDE will immediately prompt you for an error, and you will not have to spend time launching the application to check the correctness of the request. This speeds up the development process and reduces the likelihood of errors.

In the code, the requests look something like this :

BookRecord book = dslContext.selectFrom(BOOK)

.where(BOOK.LANGUAGE.eq("DE"))

.orderBy(BOOK.TITLE)

.fetchAny();

You can use plain sql if you want:

Result<Record> records = dslContext.fetch("SELECT * FROM BOOK WHERE LANGUAGE = ? ORDER BY TITLE LIMIT 1", "DE");

Obviously, in this case, the correctness of the query and the analysis of the results are completely on your shoulders.

jOOQ Record and POJO

The BookRecord in the example above is a wrapper over a row in the book table and implements the active record pattern . Since this class is part of the data access layer (besides its specific implementation), then you might not want to transfer it to other layers of the application, but use some kind of pojo object of your own. For the convenience of converting record <–> pojo jooq offers several mechanisms: automatic and manual . The documentation for the links above has a variety of read-use examples, but no examples for inserting new data and updating. Let's fill this gap:

private static final RecordUnmapper<Book, BookRecord> unmapper =

book -> new BookRecord(book.getTitle(), ...); // -

public void create(Book book) {

context.insertInto(BOOK)

.set(unmapper.unmap(book))

.execute();

}

As you can see, everything is quite simple.

This approach allows you to hide implementation details inside the data access layer class and avoid "leakage" into other layers of the application.

Also jooq can generate DAO classes with a set of basic methods to simplify working with table data and reduce the amount of manual code (this is very similar to Spring Data JPA):

public interface DAO<R extends TableRecord<R>, P, T> {

void insert(P object) throws DataAccessException;

void update(P object) throws DataAccessException;

void delete(P... objects) throws DataAccessException;

void deleteById(T... ids) throws DataAccessException;

boolean exists(P object) throws DataAccessException;

...

}

In the company we do not use auto-generation of DAO classes - we only generate wrappers over database objects, and write queries ourselves. Wrappers are generated every time a separate maven module is rebuilt, in which migrations are stored. A little later, there will be details on how this is implemented.

Testing

Writing tests is an important part of the development process - good tests guarantee the quality of your code and save time while maintaining it. That said, it's fair to say that the converse is also true - bad tests can create the illusion of high-quality code, hide errors, and slow down the development process. Thus, it is not enough just to decide that you will write tests, you need to do it right . At the same time, the concept of the correctness of tests is very vague and everyone has a little bit of their own.

The same goes for the question of test classification. This article suggests using the following splitting option:

- unit testing (unit testing)

- integration testing

- end-to-end testing (end-to-end).

Unit testing involves checking the functionality of individual modules in isolation from each other. The size of the module is again an undefined thing, for someone it is a separate method, for someone a class. Isolation means that all other modules are mocks or stubs (in Russian these are imitations or stubs, but somehow they don't sound very good). Follow this link to read Martin Fowler's article on the difference between the two. Unit tests are small, fast, but can only guarantee the correctness of the logic of an individual unit.

Integration testsunlike unit tests, they check the interaction of several modules with each other. Working with a database is a good example when integration tests make sense, because it is very difficult to "lock up" a database with high quality, taking into account all its nuances. Integration tests in most cases are a good compromise between execution speed and quality assurance when testing a database in comparison with other types of testing. Therefore, in this article we will talk in more detail about this type of testing.

End-to-end testing is the most extensive. To carry it out, it is necessary to raise the entire environment. It guarantees the highest level of confidence in product quality, but is the slowest and most expensive.

Integration testing

When it comes to integration testing of code that works with a database, most developers ask themselves questions: how to start the database, how to initialize its state with initial data, and how to do it as quickly as possible?

Some time ago, the use of h2 was a fairly common practice in integration testing . It is an in-memory database written in Java that has compatibility modes with most popular databases. The absence of the need to install a database and the versatility of h2 made it a very convenient replacement for real databases, especially if the application does not depend on a specific database and uses only what is included in the SQL standard (which is not always the case).

But problems begin at the moment when you use some tricky database functionality (or a completely new one from a fresh version), the support for which is not implemented in h2. In general, since this is a “simulation” of a specific DBMS, there can always be some differences in behavior.

Another option is to use embedded postgres . This is real Postgres, shipped as an archive and does not require installation. It allows you to work like a regular Postgres version.

There are several implementations, the most popular from Yandex and openTable... We in the company used the version from Yandex. Of the minuses - it is quite slow at startup (every time the archive is unpacked and the database is launched - it takes 2-5 seconds, depending on the power of the computer), there is also a problem with the lag behind the official release version. We also faced the problem that after an attempt to stop from the code, some error occurred and the Postgres process remained hanging in the OS - you had to kill it manually.

testcontainers

The third option is using docker. For Java, there is a testcontainers library that provides an api for working with docker containers from code. Thus, any dependency in your application that has a docker image can be replaced in tests using testcontainers. Also, for many popular technologies, there are separate ready-made classes that provide a more convenient api, depending on the image used:

- databases (Postgres, Oracle, Cassandra, MongoDB, etc.),

- nginx

- kafka, etc.

By the way, when the tescontainers project became quite popular, the yandex developers officially announced that they were stopping the development of the embedded postgres project and advised to switch to testcontainers.

What are the pros:

- testcontainers are fast (starting empty Postgres takes less than a second)

- postgres community releases official docker images for each new version

- testcontainers has a special process that kills dangling containers after shutting down jvm, unless you have done it programmatically

- with testcontainers, you can use a uniform approach to test external dependencies of your application, which obviously makes things easier.

Example test using Postgres:

@Test

public void testSimple() throws SQLException {

try (PostgreSQLContainer<?> postgres = new PostgreSQLContainer<>()) {

postgres.start();

ResultSet resultSet = performQuery(postgres, "SELECT 1");

int resultSetInt = resultSet.getInt(1);

assertEquals("A basic SELECT query succeeds", 1, resultSetInt);

}

}

If there is no separate class for the image in testcontainers, then creating a container looks like this :

public static GenericContainer redis = new GenericContainer("redis:3.0.2")

.withExposedPorts(6379);

If you are using JUnit4, JUnit5, or Spock, then testcontainers has extra. support for these frameworks, which makes writing tests easier.

Speeding up tests with testcontainers

Even though switching from embedded postgres to testcontainers made our tests faster by running Postgres faster, over time the tests began to slow down again. This is due to the increased number of SQL migrations that flyway performs at startup. When the number of migrations exceeded a hundred, the execution time was about 7-8 seconds, which significantly slowed down the tests. It worked something like this:

- before the next test class, a "clean" container with Postgres was launched

- flyway performed migrations

- tests of this class were executed

- the container was stopped and removed

- repeat from item 1 for the next test class.

Obviously, over time the 2nd step took more and more time.

Trying to solve this problem, we realized that it is enough to perform migrations only once before all tests, save the state of the container and then use this container in all tests. So the algorithm has changed:

- a "clean" container with Postgres is launched before all tests

- flyway performs migrations

- container state persists

- before the next test class, a previously prepared container is launched

- tests of this class are executed

- the container stops and is removed

- repeat from step 4 for the next test class.

Now the execution time of an individual test does not depend on the number of migrations, and with the current number of migrations (200+), the new scheme saves several minutes on each run of all tests.

Here are some technical details on how to implement this.

Docker has a built-in mechanism for creating a new image from a running container using the commit command . It allows you to customize the images, for example, by changing any settings.

An important caveat is that the command does not save the data of the mounted partitions. But if you take the official Postgres docker image, then the PGDATA directory, in which the data is stored, is located in a separate section (so that after restarting the container, the data is not lost), therefore, when the commit is executed, the state of the database itself is not saved.

The solution is simple - do not use the section for PGDATA, but keep the data in memory, which is quite normal for tests. There are 2 ways how to achieve this - use your dockerfile (something like this) without creating a section, or override the PGDATA variable when starting the official container (the section will remain, but will not be used). The second way looks much simpler:

PostgreSQLContainer<?> container = ...

container.addEnv("PGDATA", "/var/lib/postgresql/data-no-mounted");

container.start();

Before committing, it is recommended to checkpoint postgres to flush changes from shared buffers to "disk" (which corresponds to the overridden PGDATA variable):

container.execInContainer("psql", "-c", "checkpoint");

The commit itself goes something like this:

CommitCmd cmd = container.getDockerClient().commitCmd(container.getContainerId())

.withMessage("Container for integration tests. ...")

.withRepository(imageName)

.withTag(tag);

String imageId = cmd.exec();

It is worth noting that this approach using prepared images can be applied to many other images, which will also save time when running integration tests.

A few more words about optimizing build time

As mentioned earlier, when assembling a separate maven module with migrations, among other things, java wrappers are generated over the database objects. For this, a self-written maven plugin is used, which is launched before compiling the main code and performs 3 actions:

- Runs a "clean" docker container with postgres

- Launches Flyway, which performs sql migrations for all databases, thereby checking their validity

- Runs Jooq, which inspects the database schema and generates java classes for tables, views, functions and other schema objects.

As you can easily see, the first 2 steps are identical to those performed when the tests are run. To save time on starting the container and running migrations before tests, we moved the saving of the container state to a plugin. Thus, now, immediately after rebuilding the module, ready-made images for integration tests of all databases used in the code appear in the local repository of docker images.

More detailed code example

( «start»):

save-state stop .

:

@ThreadSafe

public class PostgresContainerAdapter implements PostgresExecutable {

private static final String ORIGINAL_IMAGE = "postgres:11.6-alpine";

@GuardedBy("this")

@Nullable

private PostgreSQLContainer<?> container; // not null if it is running

@Override

public synchronized String start(int port, String db, String user, String password)

{

Preconditions.checkState(container == null, "postgres is already running");

PostgreSQLContainer<?> newContainer = new PostgreSQLContainer<>(ORIGINAL_IMAGE)

.withDatabaseName(db)

.withUsername(user)

.withPassword(password);

newContainer.addEnv("PGDATA", "/var/lib/postgresql/data-no-mounted");

// workaround for using fixed port instead of random one chosen by docker

List<String> portBindings = new ArrayList<>(newContainer.getPortBindings());

portBindings.add(String.format("%d:%d", port, POSTGRESQL_PORT));

newContainer.setPortBindings(portBindings);

newContainer.start();

container = newContainer;

return container.getJdbcUrl();

}

@Override

public synchronized void saveState(String name) {

try {

Preconditions.checkState(container != null, "postgres isn't started yet");

// flush all changes

doCheckpoint(container);

commitContainer(container, name);

} catch (Exception e) {

stop();

throw new RuntimeException("Saving postgres container state failed", e);

}

}

@Override

public synchronized void stop() {

Preconditions.checkState(container != null, "postgres isn't started yet");

container.stop();

container = null;

}

private static void doCheckpoint(PostgreSQLContainer<?> container) {

try {

container.execInContainer("psql", "-c", "checkpoint");

} catch (IOException | InterruptedException e) {

throw new RuntimeException(e);

}

}

private static void commitContainer(PostgreSQLContainer<?> container, String image)

{

String tag = "latest";

container.getDockerClient().commitCmd(container.getContainerId())

.withMessage("Container for integration tests. It uses non default location for PGDATA which is not mounted to a volume")

.withRepository(image)

.withTag(tag)

.exec();

}

// ...

}

( «start»):

@Mojo(name = "start")

public class PostgresPluginStartMojo extends AbstractMojo {

private static final Logger logger = LoggerFactory.getLogger(PostgresPluginStartMojo.class);

@Nullable

static PostgresExecutable postgres;

@Parameter(defaultValue = "5432")

private int port;

@Parameter(defaultValue = "dbName")

private String db;

@Parameter(defaultValue = "userName")

private String user;

@Parameter(defaultValue = "password")

private String password;

@Override

public void execute() throws MojoExecutionException {

if (postgres != null) {

logger.warn("Postgres already started");

return;

}

logger.info("Starting Postgres");

if (!isDockerInstalled()) {

throw new IllegalStateException("Docker is not installed");

}

String url = start();

testConnection(url, user, password);

logger.info("Postgres started at " + url);

}

private String start() {

postgres = new PostgresContainerAdapter();

return postgres.start(port, db, user, password);

}

private static void testConnection(String url, String user, String password) throws MojoExecutionException {

try (Connection conn = DriverManager.getConnection(url, user, password)) {

conn.createStatement().execute("SELECT 1");

} catch (SQLException e) {

throw new MojoExecutionException("Exception occurred while testing sql connection", e);

}

}

private static boolean isDockerInstalled() {

if (CommandLine.executableExists("docker")) {

return true;

}

if (CommandLine.executableExists("docker.exe")) {

return true;

}

if (CommandLine.executableExists("docker-machine")) {

return true;

}

if (CommandLine.executableExists("docker-machine.exe")) {

return true;

}

return false;

}

}

save-state stop .

:

<build>

<plugins>

<plugin>

<groupId>com.miro.maven</groupId>

<artifactId>PostgresPlugin</artifactId>

<executions>

<!-- running a postgres container -->

<execution>

<id>start-postgres</id>

<phase>generate-sources</phase>

<goals>

<goal>start</goal>

</goals>

<configuration>

<db>${db}</db>

<user>${dbUser}</user>

<password>${dbPassword}</password>

<port>${dbPort}</port>

</configuration>

</execution>

<!-- applying migrations and generation java-classes -->

<execution>

<id>flyway-and-jooq</id>

<phase>generate-sources</phase>

<goals>

<goal>execute-mojo</goal>

</goals>

<configuration>

<plugins>

<!-- applying migrations -->

<plugin>

<groupId>org.flywaydb</groupId>

<artifactId>flyway-maven-plugin</artifactId>

<version>${flyway.version}</version>

<executions>

<execution>

<id>migration</id>

<goals>

<goal>migrate</goal>

</goals>

<configuration>

<url>${dbUrl}</url>

<user>${dbUser}</user>

<password>${dbPassword}</password>

<locations>

<location>filesystem:src/main/resources/migrations</location>

</locations>

</configuration>

</execution>

</executions>

</plugin>

<!-- generation java-classes -->

<plugin>

<groupId>org.jooq</groupId>

<artifactId>jooq-codegen-maven</artifactId>

<version>${jooq.version}</version>

<executions>

<execution>

<id>jooq-generate-sources</id>

<goals>

<goal>generate</goal>

</goals>

<configuration>

<jdbc>

<url>${dbUrl}</url>

<user>${dbUser}</user>

<password>${dbPassword}</password>

</jdbc>

<generator>

<database>

<name>org.jooq.meta.postgres.PostgresDatabase</name>

<includes>.*</includes>

<excludes>

#exclude flyway tables

schema_version | flyway_schema_history

# other excludes

</excludes>

<includePrimaryKeys>true</includePrimaryKeys>

<includeUniqueKeys>true</includeUniqueKeys>

<includeForeignKeys>true</includeForeignKeys>

<includeExcludeColumns>true</includeExcludeColumns>

</database>

<generate>

<interfaces>false</interfaces>

<deprecated>false</deprecated>

<jpaAnnotations>false</jpaAnnotations>

<validationAnnotations>false</validationAnnotations>

</generate>

<target>

<packageName>com.miro.persistence</packageName>

<directory>src/main/java</directory>

</target>

</generator>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</configuration>

</execution>

<!-- creation an image for integration tests -->

<execution>

<id>save-state-postgres</id>

<phase>generate-sources</phase>

<goals>

<goal>save-state</goal>

</goals>

<configuration>

<name>postgres-it</name>

</configuration>

</execution>

<!-- stopping the container -->

<execution>

<id>stop-postgres</id>

<phase>generate-sources</phase>

<goals>

<goal>stop</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

Release

The code is written and tested - it's time to release. In general, the complexity of a release depends on the following factors:

- on the number of databases (one or more)

- on the size of the database

- on the number of application servers (one or more)

- seamless release or not (whether application downtime is allowed).

Items 1 and 3 impose a backward compatibility requirement on the code, since in most cases it is impossible to simultaneously update all databases and all application servers - there will always be a point in time when the databases will have different schemas, and the servers will have different versions of the code.

The size of the database affects the migration time - the larger the database, the more likely you will need to carry out a long migration.

Seamlessness is partly a resultant factor - if the release is carried out with shutdown (downtime), then the first 3 points are not so important and only affect the time the application is unavailable.

If we talk about our service, then these are:

- about 30 database clusters

- size of one base 200 - 400 GB

- ( 100),

- .

We use canary releases : a new version of the application is first displayed on a small number of servers (we call it a pre-release), and after a while, if no errors are found in the pre-release, it is released to other servers. Thus, production servers can run on different versions.

Each application server, when launched, checks the database version with the versions of the scripts that are in the source code (in flyway terms, this is called validation ). If they are different, the server will not start. This ensures code and database compatibility . A situation cannot arise when, for example, the code works with a table that has not yet been created, because the migration is in a different version of the server.

But this of course does not solve the problem when, for example, in the new version of the application there is a migration that deletes a column in the table that can be used in the old version of the server. Now we check such situations only at the review stage (it is mandatory), but in an amicable way it is necessary to introduce additional. stage with such a check in the CI / CD cycle.

Sometimes migrations can take a long time (for example, when updating the data of a large table) and in order not to slow down the releases, we use the combined migrations technique... The combination consists in manually running the migration on a running server (through the administration panel, without flyway and, accordingly, without recording in the migration history), and then "regular" output of the same migration in the next version of the server. These migrations are subject to the following requirements:

- Firstly, it must be written in such a way as not to block the application during a long execution (the main point here is not to acquire long-term locks at the DB level). To do this, we have internal guidelines for developers on how to write migrations. In the future, I may also share them on Habré.

- Secondly, the migration at a "regular" start should determine that it has already been performed in manual mode and do nothing in this case - just commit a new record in history. For SQL migrations, such a check is performed by executing some SQL query for changes. Another approach for Java migrations is to use stored boolean flags, which are set after a manual run.

This approach solves 2 problems:

- the release is fast (albeit with manual actions)

- ( ) - .

After release, the development cycle does not end. To understand whether the new functionality works (and how it works), it is necessary to “enclose” with metrics. They can be divided into 2 groups: business and system.

The first group strongly depends on the subject area: for a mail server, it is useful to know the number of letters sent, for a news resource - the number of unique users per day, etc.

The metrics of the second group are approximately the same for everyone - they determine the technical state of the server: cpu, memory, network, database, etc.

What exactly needs to be monitored and how to do it - this is a topic of a huge number of separate articles and it will not be touched upon here. I would like to remind only the most basic (even captain's) things:

define metrics in advance

It is necessary to define a list of basic metrics. And it should be done in advance , before the release, and not after the first incident, when you do not understand what is happening with the system.

set up automatic alerts

This will speed up your reaction time and save time on manual monitoring. Ideally, you should know about problems before users feel them and write to you.

collect metrics from all nodes

Metrics, like logs, are never too many. The presence of data from each node of your system (application server, database, connection puller, balancer, etc.) allows you to have a complete picture of its state, and if necessary, you can quickly localize the problem.

A simple example: loading the data of a web page started to slow down. There can be many reasons:

- the web server is overloaded and takes a long time to respond to requests

- SQL query takes longer to execute

- a queue has accumulated on the connection pool and the application server cannot receive a connection for a long time

- network problems

- something else

Without metrics, finding the cause of a problem will not be easy.

Instead of completion

I would like to say a very banal phrase about the fact that there is no silver bullet and the choice of one or another approach depends on the requirements of a specific task, and what works well for others may not be applicable for you. But the more different approaches you know, the more thoroughly and qualitatively you can make this choice. I hope that from this article you have learned something new for yourself that will help you in the future. I would be happy to comment on what approaches you use to improve the process of working with the database.