In recent years, artificial intelligence has become increasingly popular. Just ask, and Siri will tell you the result of the soccer match you missed last night. Spotify's recommendations will tell you what to add to your music library, and Amazon will try to predict which product you will buy next, before even you know it.

AI has not passed the gaming industry by, either. While gamers compare the graphics of the PC release of Death Stranding with and without DLSS, it was recently reported that NVIDIA's Ampere GPUs will support DLSS 3.0, which is expected to work with any game that uses TAA (Temporal Anti-Aliasing) and has a Game Ready driver. Developers will still need to fine-tune the technology for their games, but the process should be much easier than it is now.

In this article, we'll take a look at how NVIDIA uses machine learning to improve our gaming experience.

What is DLSS?

The quality of graphics in modern games keeps increasing, and so does the computational cost of rendering them. Much of this is due to ray tracing, which simulates lighting in real time and is turning pre-baked reflections into a relic of the past. Ray tracing is especially expensive because its cost grows with the number of pixels, and modern games render at resolutions well beyond the good old 1080p. Hence the need to speed up rendering.

Deep Learning Super Sampling (DLSS) is an NVIDIA technology that uses deep learning to raise frame rates in graphics-intensive games. With DLSS, gamers can use higher settings and resolutions without worrying about unstable fps.

In particular, DLSS solves the super-resolution problem. With it, an image rendered at, say, 1080p can be upscaled to 4K with minimal loss in quality. This removes the need to render the game in native 4K (and probably melt your PC in the process). The game still renders at 1080p, which allows higher frame rates, but thanks to DLSS upscaling you will hardly notice a difference from native 4K.
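To put the "melt your PC" remark into numbers: 4K has four times as many pixels as 1080p, so any work done per pixel (shading, primary rays) costs roughly four times as much. A minimal sketch, assuming one shaded sample per pixel at 60 fps; the names `RESOLUTIONS`, `pixel_count` and `samples_per_second` are illustrative, not from any SDK.

```python
# Common 16:9 resolutions as (width, height).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Number of pixels in one frame at the given resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def samples_per_second(name: str, fps: int = 60, samples_per_pixel: int = 1) -> int:
    """Per-pixel work per second; one sample per pixel is an assumption."""
    return pixel_count(name) * samples_per_pixel * fps


if __name__ == "__main__":
    print("4K / 1080p pixel ratio:", pixel_count("4K") / pixel_count("1080p"))
    for name in RESOLUTIONS:
        print(f"{name}: {samples_per_second(name):,} samples/s at 60 fps")
```

Rendering at 1080p and letting DLSS reconstruct the rest means paying for roughly a quarter of the 4K per-pixel work.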

DLSS 2.0 architecture

Essentially, DLSS is a neural network trained on NVIDIA supercomputers. During training, the network's output is compared with a 16K reference image, and the error between them is fed back into the network to adjust it. To run fast enough for real-time use, DLSS relies on Tensor Cores, the specialized units at the heart of RTX 20-series GPUs (and the upcoming RTX 30-series). Tensor Cores significantly accelerate tensor operations and improve the efficiency of AI training and high-performance computing tasks.
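The "compare with a reference, feed the error back" loop is ordinary supervised training. The sketch below is a deliberately tiny stand-in, not NVIDIA's network: a hypothetical two-weight linear upsampler that predicts each missing high-resolution sample from its two low-resolution neighbours, trained by gradient descent on the mean squared error against the full-resolution reference. The reference signals, learning rate and step count are assumptions chosen for illustration.

```python
def make_samples(references):
    """Split each high-res reference into (left, right, target) triples.

    The low-res input keeps the even-indexed samples; every odd-indexed
    sample is a pixel the upsampler has to reconstruct.
    """
    samples = []
    for hi in references:
        for k in range(1, len(hi) - 1, 2):
            samples.append((hi[k - 1], hi[k + 1], hi[k]))
    return samples


def train_upsampler(references, lr=0.5, steps=200):
    """Fit pred = a*left + b*right by gradient descent on the MSE.

    Returns the weights and the MSE measured during the final step.
    """
    samples = make_samples(references)
    n = len(samples)
    a = b = 0.0
    loss = 0.0
    for _ in range(steps):
        grad_a = grad_b = loss = 0.0
        for left, right, target in samples:
            err = a * left + b * right - target  # output minus reference
            loss += err * err / n
            grad_a += 2 * err * left / n  # d(loss)/da
            grad_b += 2 * err * right / n  # d(loss)/db
        # Feedback step: move the weights against the error gradient.
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b, loss


if __name__ == "__main__":
    # Hypothetical high-res "reference images": two 1D ramps.
    refs = [[0.0, 0.25, 0.5, 0.75, 1.0], [1.0, 0.75, 0.5, 0.25, 0.0]]
    a, b, loss = train_upsampler(refs)
    print(f"a={a:.4f} b={b:.4f} loss={loss:.2e}")
```

On these linear ramps the optimum is plain averaging (a = b = 0.5). A real network learns far richer, content-dependent filters, but the feedback principle is the same.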

DLSS evolution: from 1.0 to 2.0

DLSS 1.0 had to be trained for each game separately, so training took a very long time. It also could not upscale by 4x in pixel count (for example, from 1080p to 4K), and it had a number of other image-quality flaws that were not worth the frame-rate gain.

DLSS 2.0 is a more general algorithm: it does not need per-game training, lifts the upscaling restrictions, and has lower latency thanks to more efficient use of Tensor Cores, on the order of 1.5 ms at 4K on an RTX 2080 Ti. In some cases it even produces a better result than the original picture.

DLSS images (final resolution: 1080p)

DLSS 1.0 could upscale at most from 720p to 1080p, while DLSS 2.0 can upscale to 1080p from as low as 540p. As the example shows, the 540p image looks completely washed out. Yet the DLSS 2.0 result is better than the DLSS 1.0 one, and even slightly better than the original picture. In other words, DLSS 2.0 fills in missing pixels more effectively than DLSS 1.0, even though DLSS 1.0 has to bridge a smaller resolution gap.
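The resolution gaps in this comparison are easier to judge as numbers. A short sketch (the helper name `upscale_stats` is illustrative) computing, for each input, the per-axis scale factor, the pixel-count factor, and the share of output pixels that are actually rendered:

```python
from fractions import Fraction

RESOLUTIONS = {"540p": (960, 540), "720p": (1280, 720), "1080p": (1920, 1080)}


def upscale_stats(src: str, dst: str):
    """Return (per-axis scale, pixel-count factor, rendered share of output)."""
    sw, sh = RESOLUTIONS[src]
    dw, dh = RESOLUTIONS[dst]
    axis = Fraction(dh, sh)
    pixels = Fraction(dw * dh, sw * sh)
    return axis, pixels, 1 / pixels


if __name__ == "__main__":
    for src in ("720p", "540p"):
        axis, pixels, share = upscale_stats(src, "1080p")
        print(f"{src} -> 1080p: {float(axis)}x per axis, {float(pixels)}x pixels, "
              f"{float(share):.0%} of output pixels rendered")
```

So at 540p DLSS 2.0 renders only a quarter of the final pixels, versus about 44% for DLSS 1.0's 720p input.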

DLSS 2.0's ability to upscale from as low as 540p, combined with the low latency of the method itself, gives it a significant performance advantage over its predecessor.

Rendering time with and without DLSS 2.0 (in ms)

A closer look at how DLSS works

When a game renders scene geometry (for example, a triangle), the number of samples used (the sampling rate, defined by the sub-pixel sampling mask) determines how the image will look.

When the triangle is rendered with a 4x4 sampling grid, the result leaves a lot to be desired.

Quadrupling the number of samples, to an 8x8 grid, makes the image look much more like the intended triangle. This is the essence of DLSS: turning a low-resolution image into a higher-resolution one.

The essence of DLSS
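The effect of the sampling grid can be reproduced in a few lines. This toy rasterizer (a sketch, not engine code) tests each pixel centre against a right triangle covering exactly half of the unit square, using the standard edge-function inside test; the triangle and the function names are illustrative assumptions.

```python
Point = tuple[float, float]

# Counter-clockwise right triangle covering half of the unit square (area 0.5).
TRIANGLE: tuple[Point, Point, Point] = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))


def edge(a: Point, b: Point, p: Point) -> float:
    """Edge function: positive when p lies to the left of the edge a->b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def inside(tri, p: Point) -> bool:
    """A point is inside a CCW triangle if it is left of all three edges."""
    a, b, c = tri
    return edge(a, b, p) > 0 and edge(b, c, p) > 0 and edge(c, a, p) > 0


def rasterize(tri, n: int) -> list[str]:
    """One sample per pixel centre on an n x n grid; rows listed top to bottom."""
    rows = []
    for j in reversed(range(n)):
        y = (j + 0.5) / n
        rows.append("".join("#" if inside(tri, ((i + 0.5) / n, y)) else "."
                            for i in range(n)))
    return rows


def coverage(tri, n: int) -> float:
    """Fraction of the grid's samples that landed inside the triangle."""
    return sum(row.count("#") for row in rasterize(tri, n)) / (n * n)


if __name__ == "__main__":
    for n in (4, 8):
        print("\n".join(rasterize(TRIANGLE, n)))
        print(f"{n}x{n} coverage: {coverage(TRIANGLE, n)} (true area 0.5)\n")
```

Going from 16 to 64 samples halves the coverage error here (from 0.125 to 0.0625) and makes the staircase edge finer. DLSS aims for the 8x8-style result while paying only for the 4x4-style samples.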

As a result, for the same rendering cost as a low-quality image, you get a higher resolution image.

Purpose of DLSS

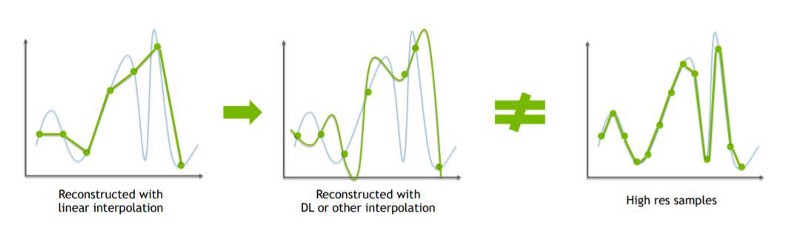

In effect, this addresses the long-standing super-resolution problem.

Let's take a quick look at how AI-assisted resolution enhancement techniques evolved.

Single Image Super-Resolution

This technique produces a high-resolution image from a single lower-resolution one using interpolation filters such as bilinear, bicubic, and Lanczos. It can also be implemented with deep neural networks, but then the new pixels are hallucinated from the training data: the image looks plausible but is not faithful to the original. The approach also produces an overly smooth, detail-poor picture and is temporally unstable, which leads to inconsistency and flickering between frames.

Single Image Super-Resolution
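For reference, the classic interpolation route looks like this: a minimal bilinear upscaler in pure Python for a grayscale image stored as a list of rows. The align-corners sampling convention and the name `bilinear_upscale` are assumptions; other conventions shift the sample grid slightly. Every output value is a weighted average of existing pixels, which is why interpolation cannot recover detail that was never sampled and tends to look soft.

```python
def bilinear_upscale(img, out_h, out_w):
    """Upscale a grayscale image (list of rows) with bilinear interpolation."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for i in range(out_h):
        # Map the output row to a fractional input row (align-corners convention).
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            # Blend horizontally on the two neighbouring rows, then vertically.
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


if __name__ == "__main__":
    for row in bilinear_upscale([[0, 10], [20, 30]], 3, 3):
        print(row)
```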

Let's compare the results of some of these single-image super-resolution techniques with what DLSS 2.0 offers. Target resolution: 1080p.

DLSS 2.0 clearly outperforms both bicubic interpolation and ESRGAN, a neural network architecture that uses a generative adversarial network (GAN) to achieve super-resolution. With DLSS 2.0, the ferns even look more detailed than in the original image.

Multi-frame Super-Resolution

This method combines multiple low-resolution images into one high-resolution image, which recovers details better than the single-image approach. However, it was mainly designed for video and burst photography, so it does not use rendering-specific information. For example, it aligns frames using optical flow instead of the geometric motion vectors that a game engine already produces, even though motion vectors would be cheaper to obtain and more accurate. Still, this approach is more promising than the previous one and brings us to the next technique.

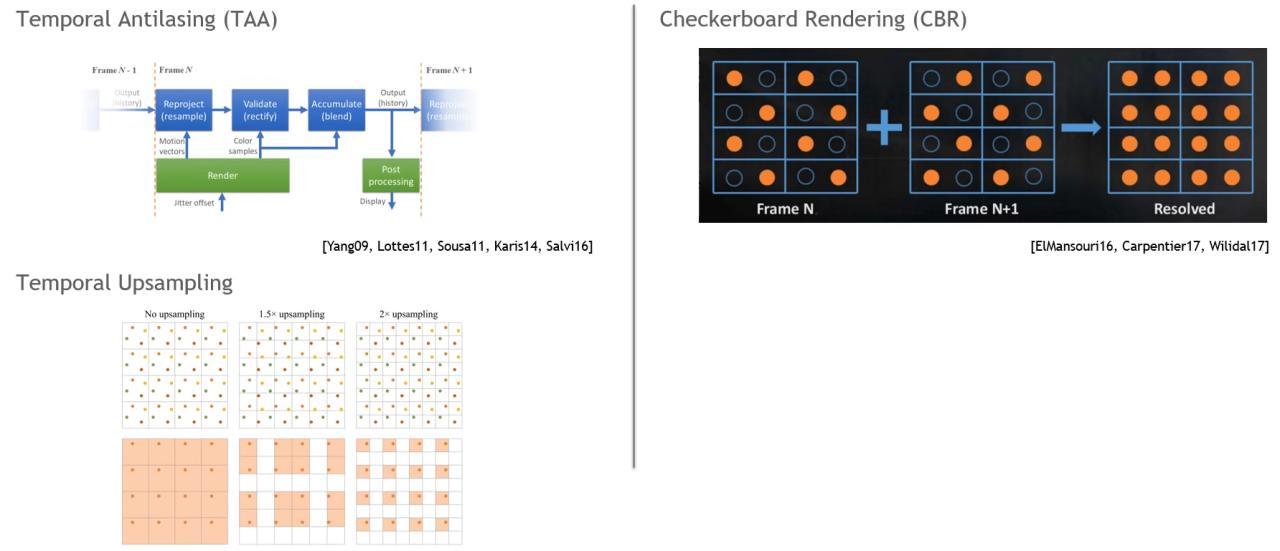
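The difference between estimated optical flow and engine-provided motion vectors matters most in reprojection, the step that aligns the previous frame with the current one. A 1D sketch, assuming integer per-pixel motion vectors (real ones are sub-pixel and need filtering): each current pixel fetches the history pixel it came from, and pixels with no valid source (disocclusions) are marked `None`. The function name `reproject` is hypothetical.

```python
def reproject(history, motion):
    """For every current pixel i, fetch the history sample at i - motion[i].

    motion[i] is an assumed integer motion vector: how many pixels the
    content now at i moved since the previous frame.
    """
    out = []
    for i, mv in enumerate(motion):
        src = i - mv
        # No valid source pixel means the content was just revealed (disocclusion).
        out.append(history[src] if 0 <= src < len(history) else None)
    return out


if __name__ == "__main__":
    previous = [0, 0, 1, 0, 0, 0]  # a bright object at x = 2
    current = [0, 0, 0, 1, 0, 0]   # it moved one pixel to the right
    motion = [1] * len(current)    # assumed: the whole row moved by +1 px
    print(reproject(previous, motion))
```

With correct motion vectors the aligned history matches the current frame everywhere except the disoccluded first pixel. With zero motion vectors the history would still show the object at x = 2, which is exactly what causes ghosting.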

Spatial-Temporal Super Sampling

This method uses multiple frames to super-sample images.

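Take the current frame, rendered at a low sampling rate. The previous frame looks almost the same, but its samples were taken at slightly different sub-pixel positions. Each individual frame uses a low sampling rate, yet combining them increases the total number of samples available to reconstruct the image.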

Spatial-Temporal Super Sampling Histogram

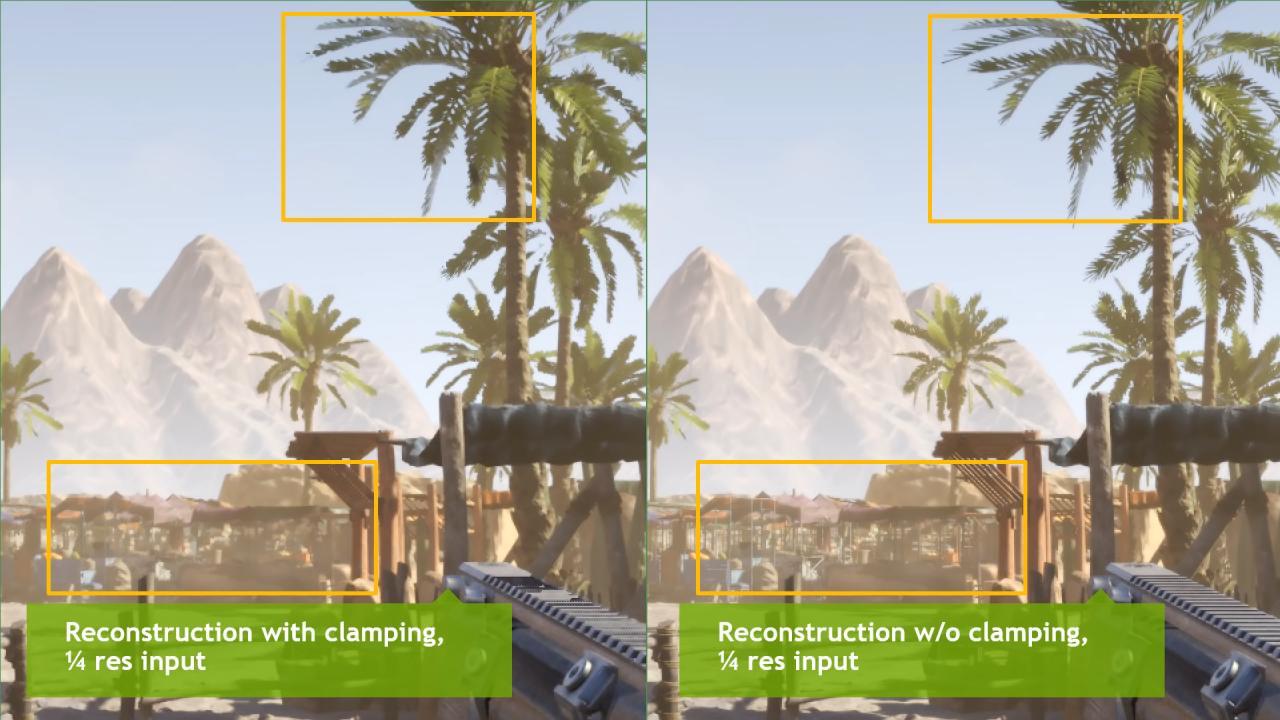

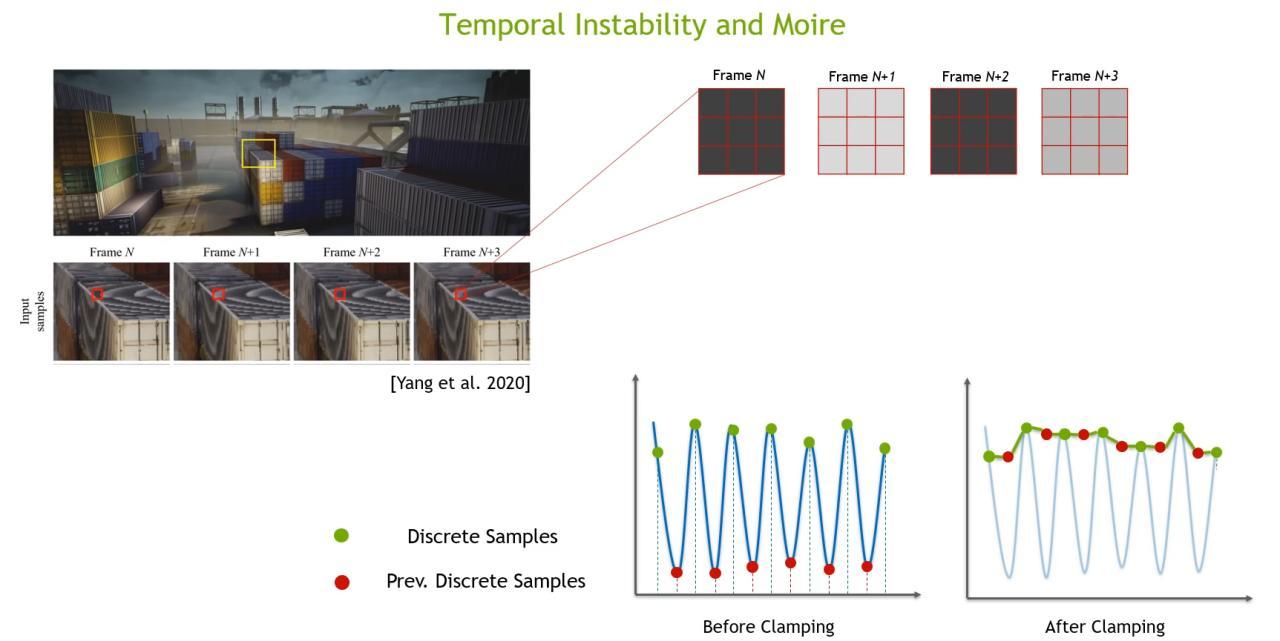
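Collecting more samples over time can be sketched with sub-pixel jitter: each frame shifts its single sample per pixel to a different position, and accumulating the frames approximates a much denser sampling grid. This 1D sketch uses the base-2 Halton (van der Corput) sequence, a common jitter pattern in temporal anti-aliasing; the edge position `EDGE = 0.3`, the static scene, and the function names are assumptions for illustration.

```python
def halton(index: int, base: int = 2) -> float:
    """The index-th (1-based) element of the Halton sequence in the given base."""
    result, f = 0.0, 1.0
    while index > 0:
        f /= base
        result += f * (index % base)
        index //= base
    return result


# Assumed scene: an edge inside one pixel; x >= EDGE is covered (true coverage 0.7).
EDGE = 0.3


def accumulated_coverage(frames: int) -> float:
    """Average one jittered sample per frame over several frames (static scene)."""
    hits = sum(1 for k in range(1, frames + 1) if halton(k) >= EDGE)
    return hits / frames


if __name__ == "__main__":
    for frames in (1, 4, 8, 16):
        print(frames, accumulated_coverage(frames))
```

The first Halton point is the pixel centre (0.5), so a single frame is ordinary non-jittered sampling and reports full coverage. After 16 frames the estimate is 0.6875, close to the true 0.7.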

The problem is that in games, everything is constantly in motion. To rectify the frame history, this supersampling method therefore has to rely on heuristics such as neighborhood clamping. These heuristics introduce blur, temporal instability, moiré, lag, and ghosting.

Adverse effects of neighborhood clamping

Temporal flicker and moiré in images with neighborhood clamping
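Neighborhood clamping itself is simple: before blending, the reprojected history value is clamped to the range of values found around the same pixel in the current frame. This 1D grayscale sketch is an assumption-laden simplification (real TAA works on 3x3 neighbourhoods, often in a colour space such as YCoCg, and the blend factor `alpha` here is arbitrary). It shows both sides of the trade-off: clamping removes stale history, but it also discards legitimate detail that only the history had.

```python
def clamp_history(current, history, radius=1):
    """Clamp each history value to the min/max of its neighbourhood in `current`."""
    clamped = []
    for i, h in enumerate(history):
        window = current[max(0, i - radius): i + radius + 1]
        lo, hi = min(window), max(window)
        clamped.append(min(max(h, lo), hi))
    return clamped


def taa_resolve(current, history, alpha=0.1):
    """Exponential blend: mostly clamped history, a little of the new frame.

    alpha = 0.1 is an assumed blend factor, not a value from any engine.
    """
    return [alpha * c + (1 - alpha) * h
            for c, h in zip(current, clamp_history(current, history))]


if __name__ == "__main__":
    # Stale history: the object has left pixels 0-1. The ghost is removed at
    # pixel 0 but survives at pixel 1, whose neighbourhood includes the edge.
    print(clamp_history([0, 0, 1, 1], [1, 1, 1, 1]))
    # A thin detail present only in the history is clamped away entirely.
    print(clamp_history([0.2, 0.2, 0.2], [0.2, 0.9, 0.2]))
```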

DLSS 2.0: Deep Learning-based multi-frame reconstruction

The DLSS neural network is trained on tens of thousands of images to reconstruct frames better than hand-tuned heuristics can, which eliminates the artifacts those heuristics cause. As a result, it makes much better use of data from multiple frames.

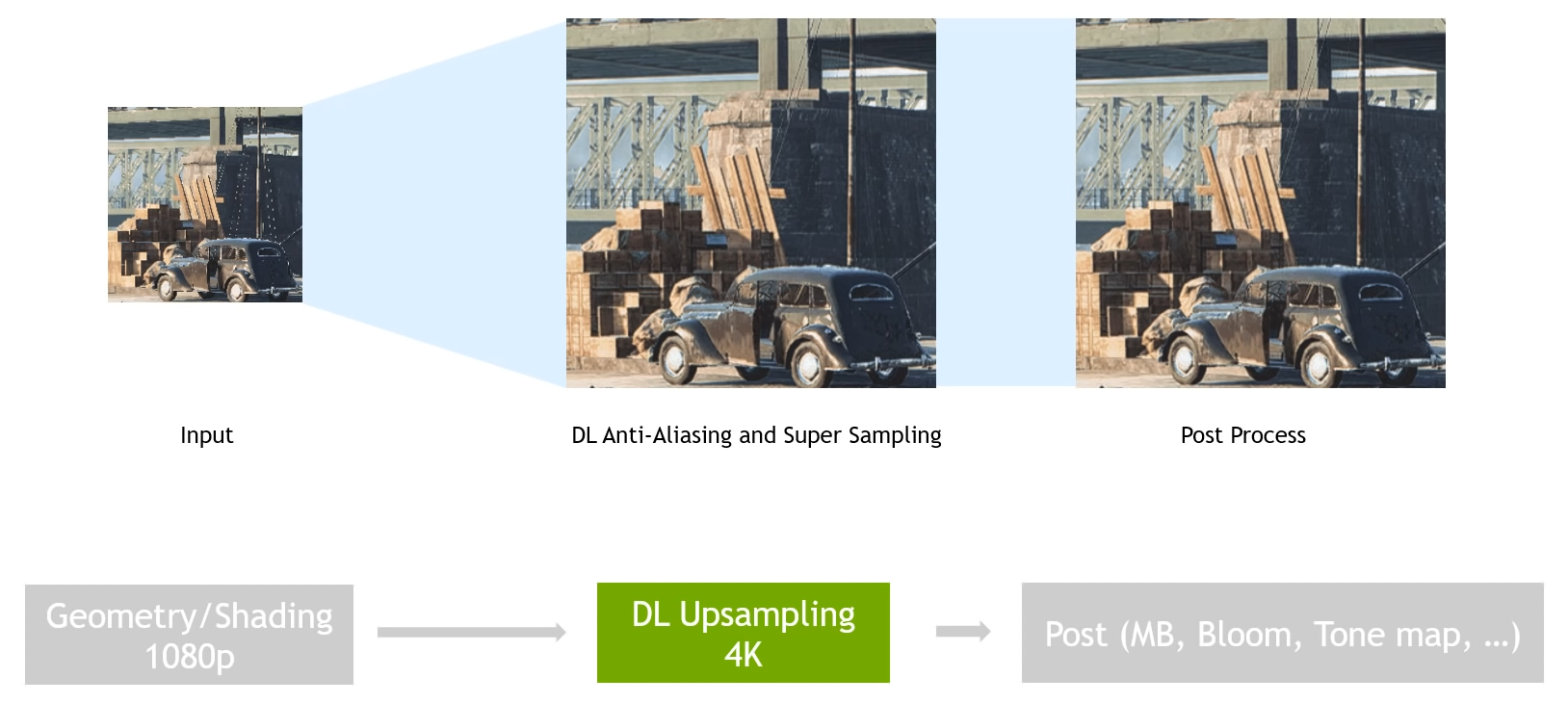

To integrate DLSS into an engine, you first render the scene at low resolution: all of its geometry, dynamic lighting, screen-space effects, and ray tracing. DLSS is then applied at the stage where anti-aliasing normally happens, since the technology performs the same function, only with super-sampling on top. After that comes post-processing, including motion blur, bloom, chromatic aberration, tone mapping, and other effects.
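The frame order described above can be summarized as a short pipeline sketch. All stage names are hypothetical placeholders, not a real engine or NVIDIA SDK API; the point is only where the upscale sits and at which resolution each stage runs (the 0.5 per-axis render scale is an assumption).

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    width: int
    height: int
    passes: list[str] = field(default_factory=list)


def render_scene(width: int, height: int) -> Frame:
    """Everything before anti-aliasing runs at the low internal resolution."""
    return Frame(width, height,
                 ["geometry", "dynamic lighting", "screen-space effects", "ray tracing"])


def dlss_upscale(frame: Frame, out_w: int, out_h: int) -> Frame:
    """Hypothetical stand-in for the upscaler, placed where TAA would run."""
    return Frame(out_w, out_h, frame.passes + ["DLSS upscale (replaces TAA)"])


def post_process(frame: Frame) -> Frame:
    """Post effects run at the full output resolution."""
    return Frame(frame.width, frame.height,
                 frame.passes + ["motion blur", "bloom", "chromatic aberration", "tone mapping"])


def render_frame(out_w: int = 3840, out_h: int = 2160, scale: float = 0.5) -> Frame:
    low = render_scene(int(out_w * scale), int(out_h * scale))
    return post_process(dlss_upscale(low, out_w, out_h))


if __name__ == "__main__":
    frame = render_frame()
    print(frame.width, frame.height)
    print(" -> ".join(frame.passes))
```

Running post-processing after the upscale means effects such as bloom and tone mapping operate on the full-resolution image instead of being upscaled themselves.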

DLSS is not just an image-processing algorithm. It works hand in hand with rendering, so the rendering pipeline has to be adapted as well. With DLSS 2.0, however, these changes are much easier to implement than before.

Performance tests

Remedy's latest title, Control, supports both ray tracing and DLSS. As the graph shows, DLSS raised the RTX 2060's frame rate from 8 fps to about 36.8 fps, making the game far more playable. This result even beats the RTX 2080 Ti without DLSS, which further demonstrates how effective the technology is.
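Converting the quoted frame rates into frame times shows the size of the jump. A quick calculation using only the two numbers above (the helper name `frame_time_ms` is illustrative):

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    before, after = 8.0, 36.8  # RTX 2060 in Control, without and with DLSS
    print(f"{frame_time_ms(before):.1f} ms -> {frame_time_ms(after):.1f} ms per frame, "
          f"{after / before:.1f}x speedup")
    # prints "125.0 ms -> 27.2 ms per frame, 4.6x speedup"
```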

Digital Foundry compared the image quality of DLSS 1.9 and 2.0 in a video.

Left: Control with DLSS 1.9; right: with DLSS 2.0. Images captured on an RTX 2060 at 1080p and then upscaled to 4K.

In the comparison above, DLSS 2.0 renders hair strands cleanly, whereas DLSS 1.9 distorts them.

Conclusion

DLSS is only available on Turing-based RTX 20-series GPUs (and the upcoming Ampere-based RTX 30-series) and currently supports only a handful of games. DLSS 2.0 is supported by even fewer titles, but its level of detail can surpass even the original image while the frame rate stays high. This is a really cool achievement for NVIDIA, and the technology definitely has a promising future.