What has changed in consumer banking

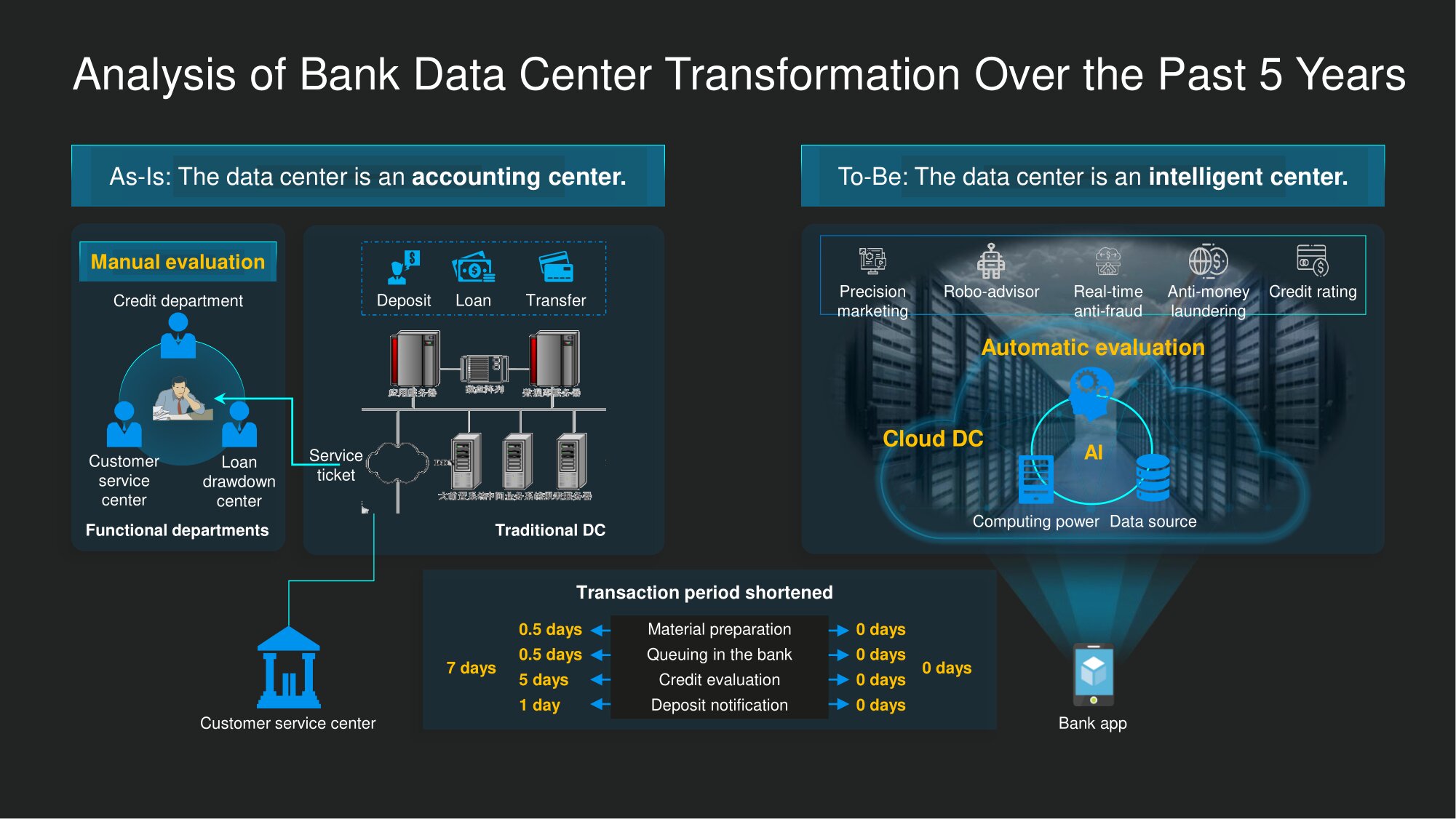

In China, as recently as five years ago, getting a loan was not a quick matter, at least not for an ordinary person. You had to fill out a pile of paperwork, mail it or take it to a bank branch, perhaps stand in line, and then go home and wait for a decision. How long? It depended: anywhere from a week to several months.

By 2020, this procedure had been simplified dramatically. I recently ran a small experiment: I tried to get a loan through my bank's mobile app. A few taps on the smartphone screen, and the system promised an answer within a quarter of an hour at the latest. In fact, less than five minutes later I received a push notification telling me what loan size I could count on. You will agree that this is impressive progress compared with five years ago, when the same process took days or weeks.

Back then, most of the time went into data verification and manual scoring. All the information from questionnaires and other papers had to be entered into the bank's IT system. And that was only the beginning of the ordeal: bank employees personally checked your credit history and only then made the final decision. They left the office at 17:00 or 18:00 and took weekends off, so the process could drag on for a long time.

It's different these days. In many digital banking tasks, the human factor has largely been taken out of the equation. Assessment, including anti-fraud and AML checks, is performed automatically by smart algorithms. Machines do not need rest, so they operate around the clock, seven days a week. In addition, a fair amount of the information needed for decision-making is already stored in bank databases. This means that a verdict is delivered far faster than in the "old days."

Broadly speaking, the banking data center used to serve mainly "registration-type" tasks. For a long time it remained merely an accounting center and produced nothing by itself. Today there are more and more "smart" data centers where the product itself is created. They are used for complex calculations and help derive intelligence from raw data, which is in effect knowledge with high added value. In addition, continuous data mining, if it is set up correctly, of course, ultimately increases process efficiency further.

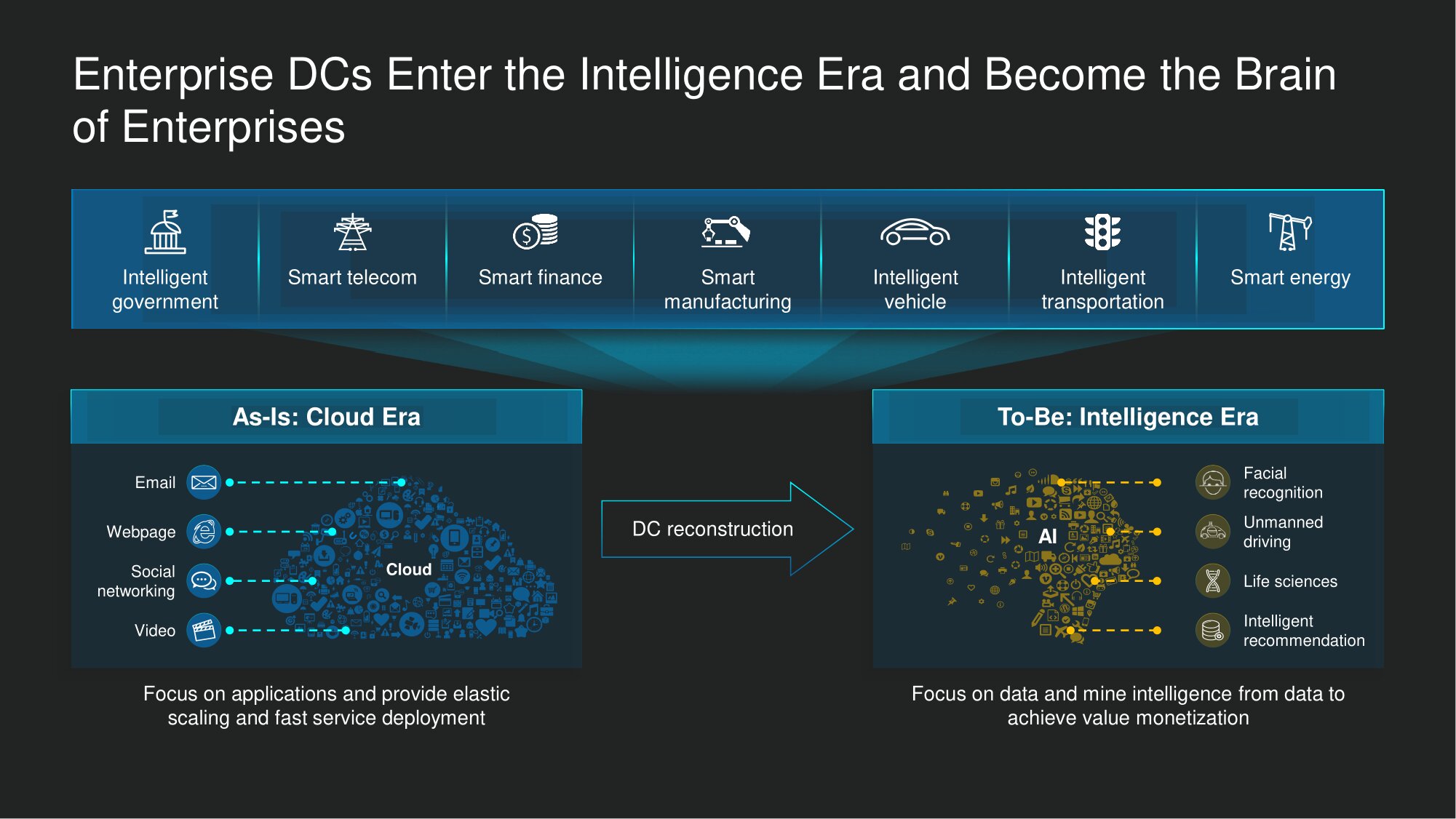

These transformations are taking place not only in finance but in virtually all business verticals. For companies of every profile (and for us, as a solution vendor), data centers are now the main foundation in a world where competition between intelligent solutions is fiercer than ever. Five years ago, the mainstream view was that the data center belonged to the world of cloud technologies, which implied the ability to flexibly scale a shared, distributed pool of computing and storage resources. Now, in the era of smart solutions, we can perform data mining in the data center on an ongoing basis and convert the results into extraordinary performance gains. In the financial sector, these changes mean, among many other things, that loan requests are assessed dramatically faster and that the most suitable financial products can be recommended to a particular bank client instantly.

In the public sector, telecom, and the energy industry, intelligent work with data now contributes to digital transformation and a dramatic increase in organizational productivity. Naturally, these new circumstances will create new demand, not only for computing resources and storage systems but also for data center network solutions.

What a "smart data center" should be

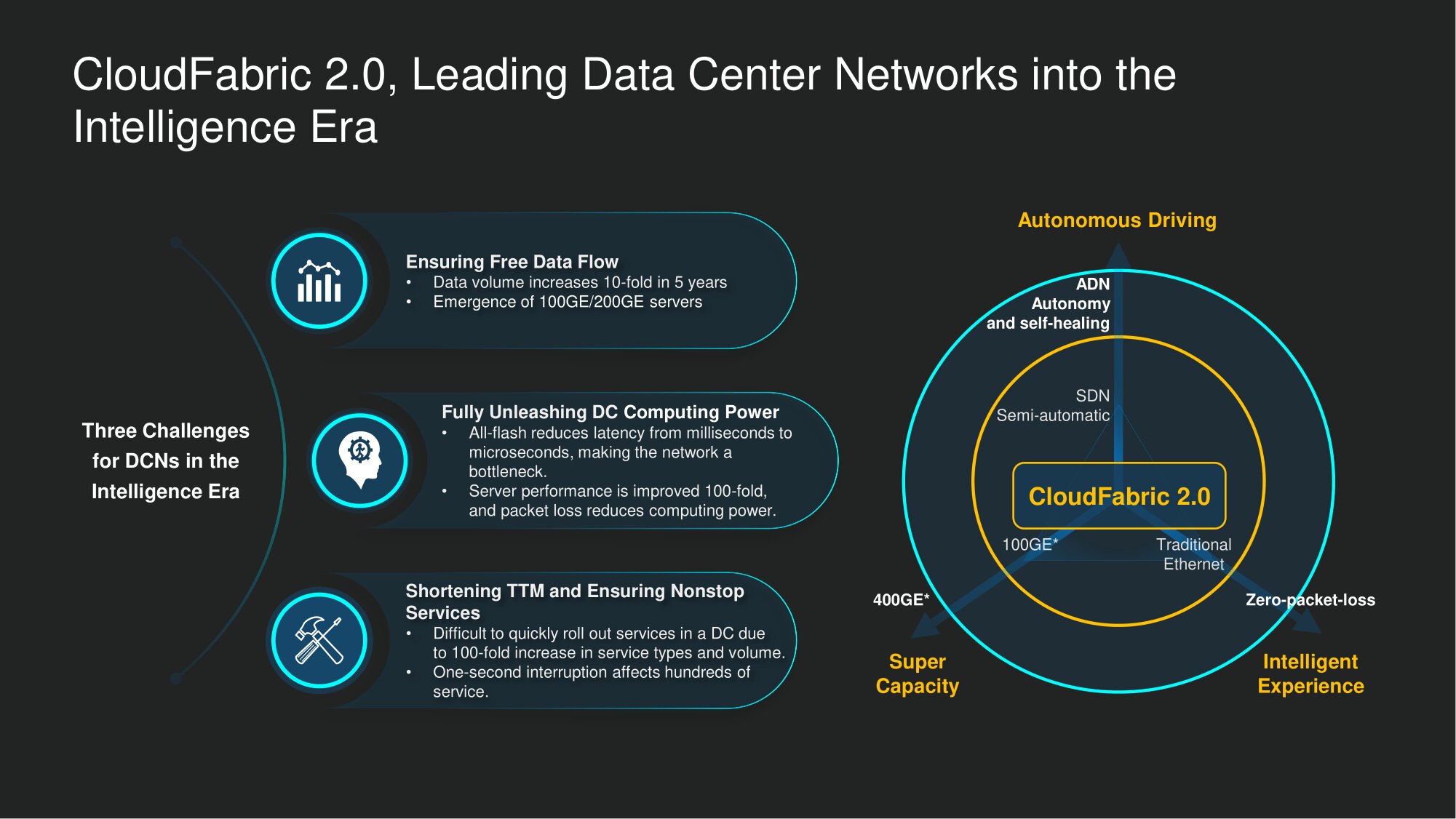

At Huawei, we have identified three major data center challenges in the smart data center era.

First, extraordinary bandwidth is required to handle the never-ending streams of new data. By our observations, the volume of data stored in data centers has grown tenfold over the past five years. Even more impressive is how much traffic is generated when that data is accessed. In "registration-type" data centers, this information was used for accounting tasks and often lay as dead weight; in the new type of data center it "works", because constant data mining is required. As a result, 10 to 1,000 times more iterations are performed per unit of stored data than before. When AI models are trained, for example, computation runs almost non-stop in the background, with neural network algorithms working constantly to increase the "intelligence" of the system. So not only is the volume of stored data growing, but so is the traffic generated by accessing it. It is therefore no whim of telecom vendors that new storage server models carry more and more 100- and 200-gigabit ports.

Second, zero packet loss is an absolute must in 2020, at least from our point of view. Previously, such losses were not a headache for engineers in banking data centers: the bottlenecks were processing power and storage efficiency. But the industry-average values of both have risen significantly worldwide over the past five years, and the network infrastructure has naturally become the bottleneck in data center operation. Working with one of our top clients, we found that every percentage point added to the packet loss rate threatens to halve the training efficiency of AI models. Hence the huge impact on the productivity and utilization of computing resources and storage systems. This is what must be overcome to transform a simple data center into a data center for the smart age.
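To get a feel for what "halving per percentage point" would imply, here is a minimal sketch. It assumes, purely for illustration, that the observation compounds exponentially, so that relative efficiency is 0.5 raised to the loss rate in percent. The function name and the exponential form are assumptions, not a model published by Huawei or measured in the project mentioned above.

```python
def relative_training_efficiency(packet_loss_pct: float) -> float:
    """Illustrative model only: efficiency halves for every added
    percentage point of packet loss (assumed exponential form)."""
    if packet_loss_pct < 0:
        raise ValueError("packet loss cannot be negative")
    return 0.5 ** packet_loss_pct


# Print the assumed relative efficiency for a few loss rates.
for loss in (0.0, 0.5, 1.0, 2.0):
    eff = relative_training_efficiency(loss)
    print(f"{loss:.1f}% loss -> {eff:.0%} of loss-free efficiency")
```

Under this assumption, even half a percent of loss already costs roughly 30% of training efficiency, which is why loss rates close to zero matter so much.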

Third, it is important to deliver services seamlessly and without interruption. Modern digital banking has taught people, quite rightly, that the services of financial institutions can, or rather must, be available 24/7. A common situation: a worn-out entrepreneur with a chaotic daily routine, in dire need of additional funds, wakes up close to midnight and wants to find out what line of credit he can count on. There is no way back: the bank can no longer suspend the data center's operation in order to fix or upgrade something.

Our CloudFabric 2.0 solution is designed precisely to cope with these challenges. It supports the highest throughput, intelligent data center network management, and the smooth operation of autonomous driving networks (ADN).

What's in CloudFabric 2.0 for Smart Data Centers

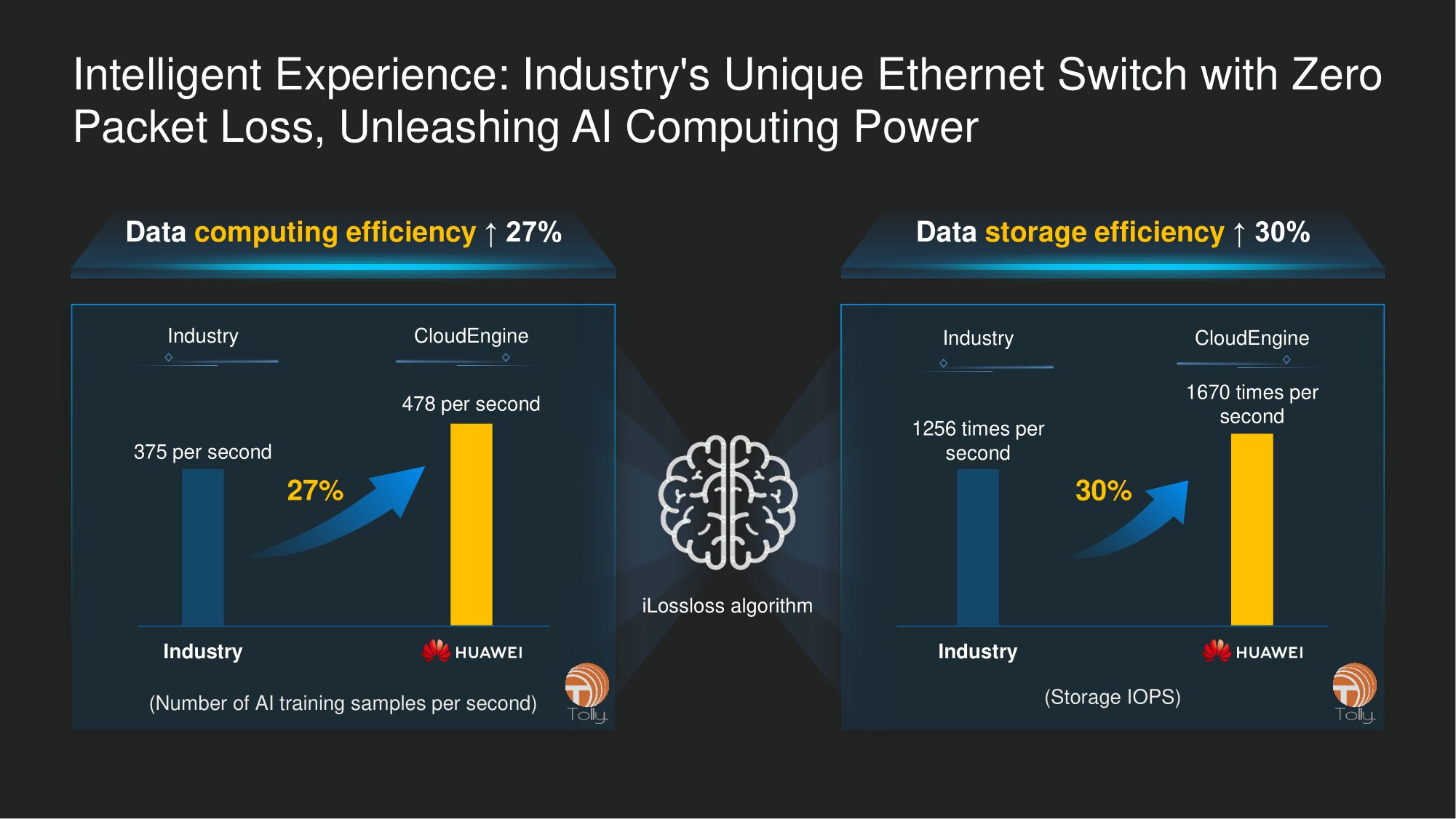

When it comes to high throughput, we rely not only on the scalability of our network solutions but also on the flexibility they offer. For example, Huawei's CloudEngine data center switches were the first devices of their class in the industry with an embedded processor for real-time neural network computing, which helps, among other things, to resolve problems within the network infrastructure and prevent packet loss (this is achieved with the iLossless algorithm, including in the iNOF RoCE scenario). But, of course, raw bandwidth also matters: support for 400 Gbps interfaces is important, as is backward compatibility with the 10-, 40-, and 100-gigabit connections that are widespread today.

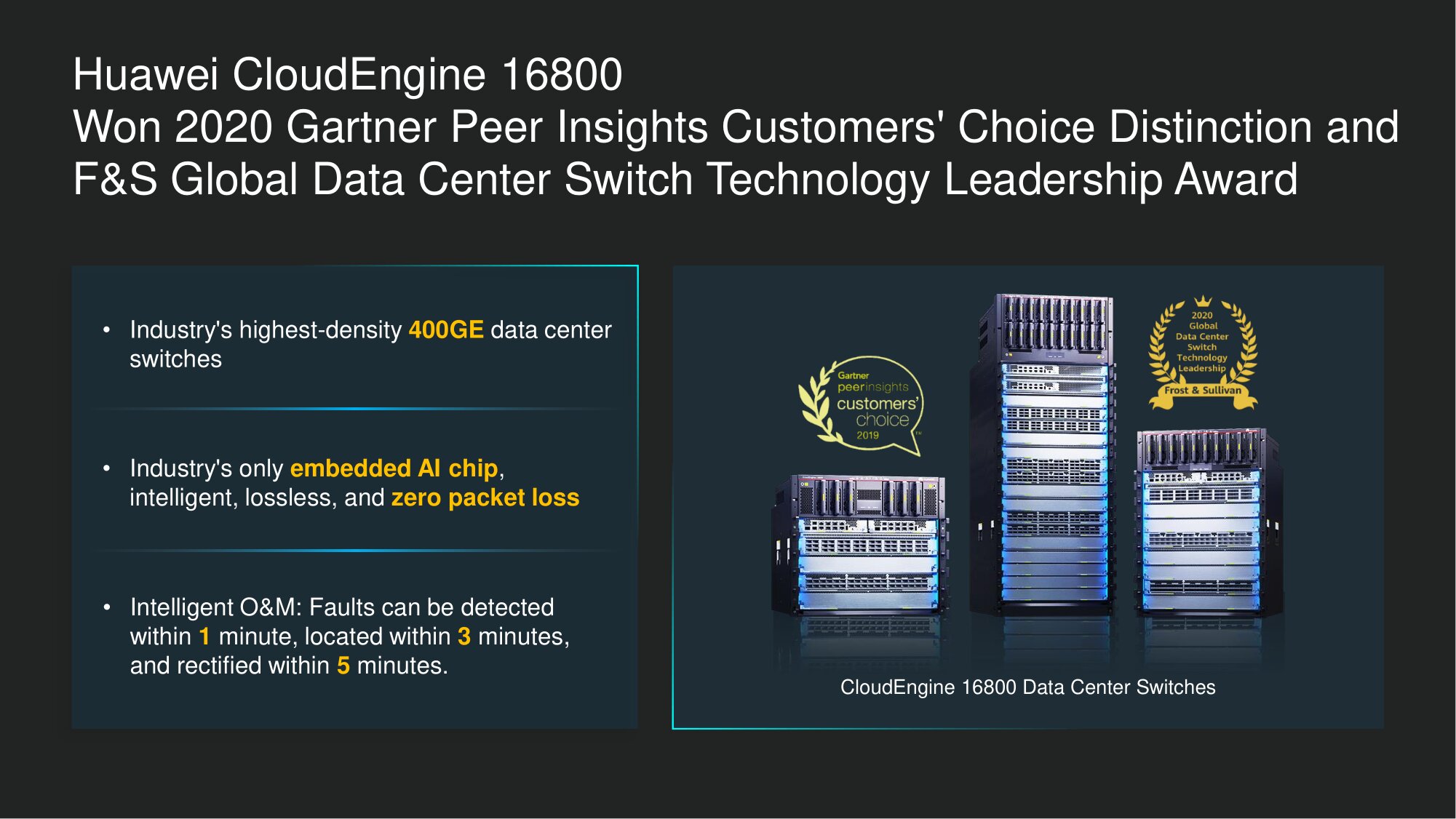

The core nodes of the infrastructure must also handle a high density of connections (so-called high-density scenarios) and allow the solution to scale significantly. Our flagship data center switch, the CloudEngine 16800, supports up to 48 ports at 400 Gbps per slot, three times more than its closest competitor.

For the system as a whole, the per-chassis scalability is also impressive: 768 ports of 400 Gbps per chassis, or six times more than other vendors' solutions allow. This gives us reason to call the CloudEngine 16800 the most powerful data center switch of the AI era.
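The port counts quoted above translate into aggregate port bandwidth with simple arithmetic. The sketch below only multiplies the figures from the text; the constant and function names are illustrative, and the result is the sum of one-direction port rates, not an official switching-capacity specification.

```python
# Figures taken from the text above.
PORT_SPEED_GBPS = 400
PORTS_PER_SLOT = 48
PORTS_PER_CHASSIS = 768


def aggregate_tbps(ports: int, speed_gbps: int = PORT_SPEED_GBPS) -> float:
    """Sum of port rates in Tbps (one direction, no oversubscription assumed)."""
    return ports * speed_gbps / 1000


slot_tbps = aggregate_tbps(PORTS_PER_SLOT)            # 48 x 400 Gbps
chassis_tbps = aggregate_tbps(PORTS_PER_CHASSIS)      # 768 x 400 Gbps
implied_slots = PORTS_PER_CHASSIS // PORTS_PER_SLOT   # slots implied by the two figures

print(f"Per slot:    {slot_tbps:.1f} Tbps")
print(f"Per chassis: {chassis_tbps:.1f} Tbps")
print(f"Implied fully populated slots: {implied_slots}")
```

The two figures are consistent with each other: 768 ports at 48 ports per slot implies 16 fully populated slots.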

The intelligence of the network solution also comes to the fore. In particular, it is needed to ensure zero packet loss. To achieve this, we use our most advanced technologies, including an integrated AI processor for neural network computing and the iLossless algorithm mentioned earlier. Working on projects for our leading customers, we have seen that these solutions can significantly improve system performance in at least two common scenarios.

The first is training AI models. It requires constant access to data and calculations on huge matrices or "heavy" TensorFlow operations. iLossless can increase the productivity of AI model training by 27%, a figure proven in real deployments and verified by a Tolly Group lab test. The second scenario is improving the efficiency of storage systems, which our technologies can raise by about 30%.

In addition, together with our customers, we are keen to try out the new opportunities that our technologies open up. We are confident that by improving the Ethernet-based switching fabric for the data center, we can converge the high-performance data center fabric and the storage network into a single, coherent Ethernet-based infrastructure. The aim is not only to increase the productivity of AI model training and improve access to software-defined storage, but also to significantly reduce the total cost of ownership of a data center by integrating and merging vertical networks that are independent at the physical level.

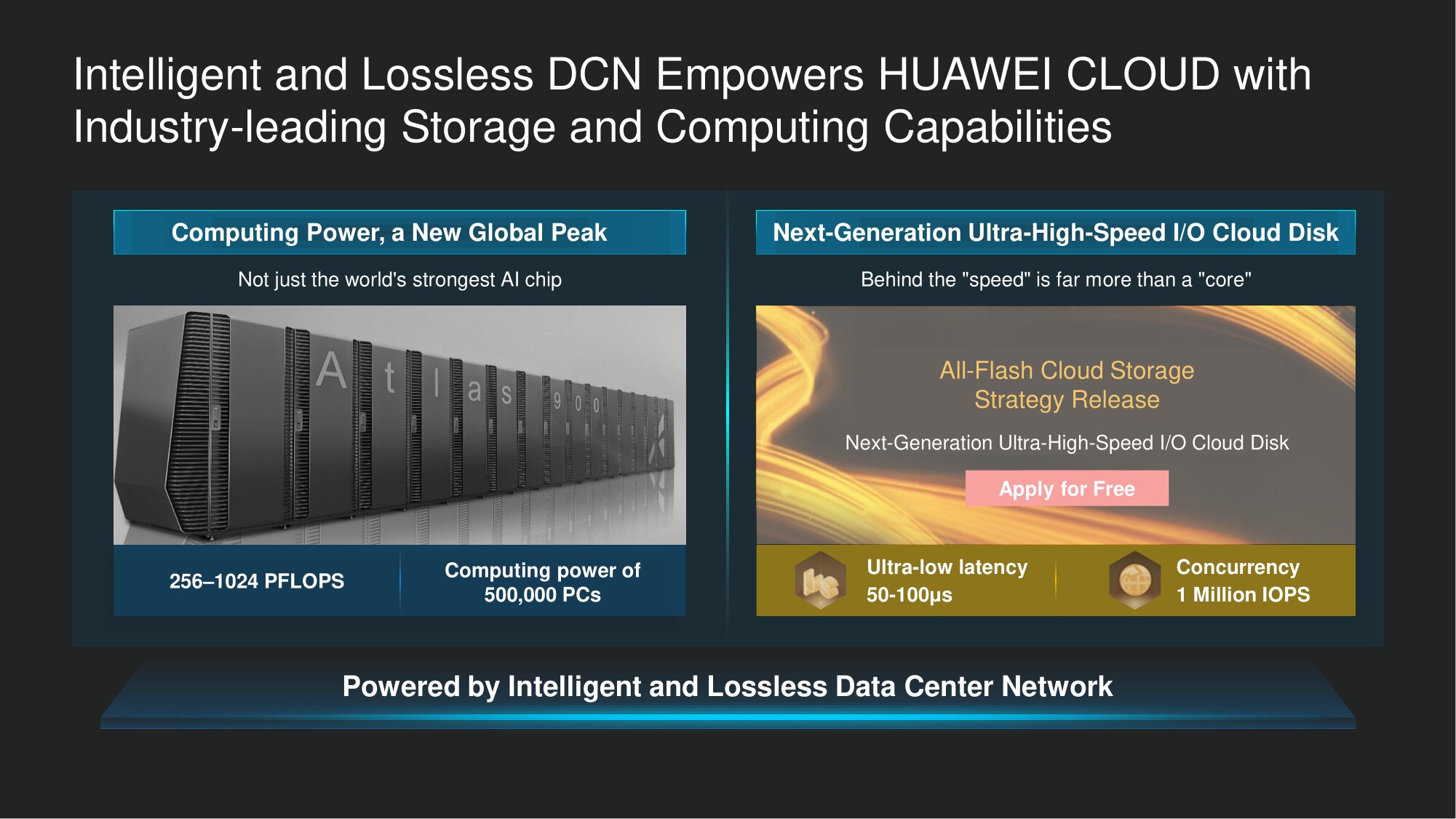

Many of our clients enjoy rolling out these new features. One of those clients is Huawei itself, in particular Huawei Cloud, a member of our group of companies. Working closely with our colleagues in that division, we saw that guaranteeing them zero packet loss gave a noticeable boost to their business processes. Finally, among our "internal" achievements, we note that in Atlas 900, the largest AI cluster in the world, we provide computing power for training artificial intelligence of around 1,000 petaflops, the highest figure in the computer industry today.

Another highly relevant scenario is cloud data storage using All-Flash systems. This is a very “trending” service by industry standards. Increasing computing resources and expanding storage facilities naturally require advanced technologies from the field of data center networking solutions. So we continue to work with Huawei Cloud and implement more and more application scenarios using our network solutions.

What ADN Networks Can Do Today

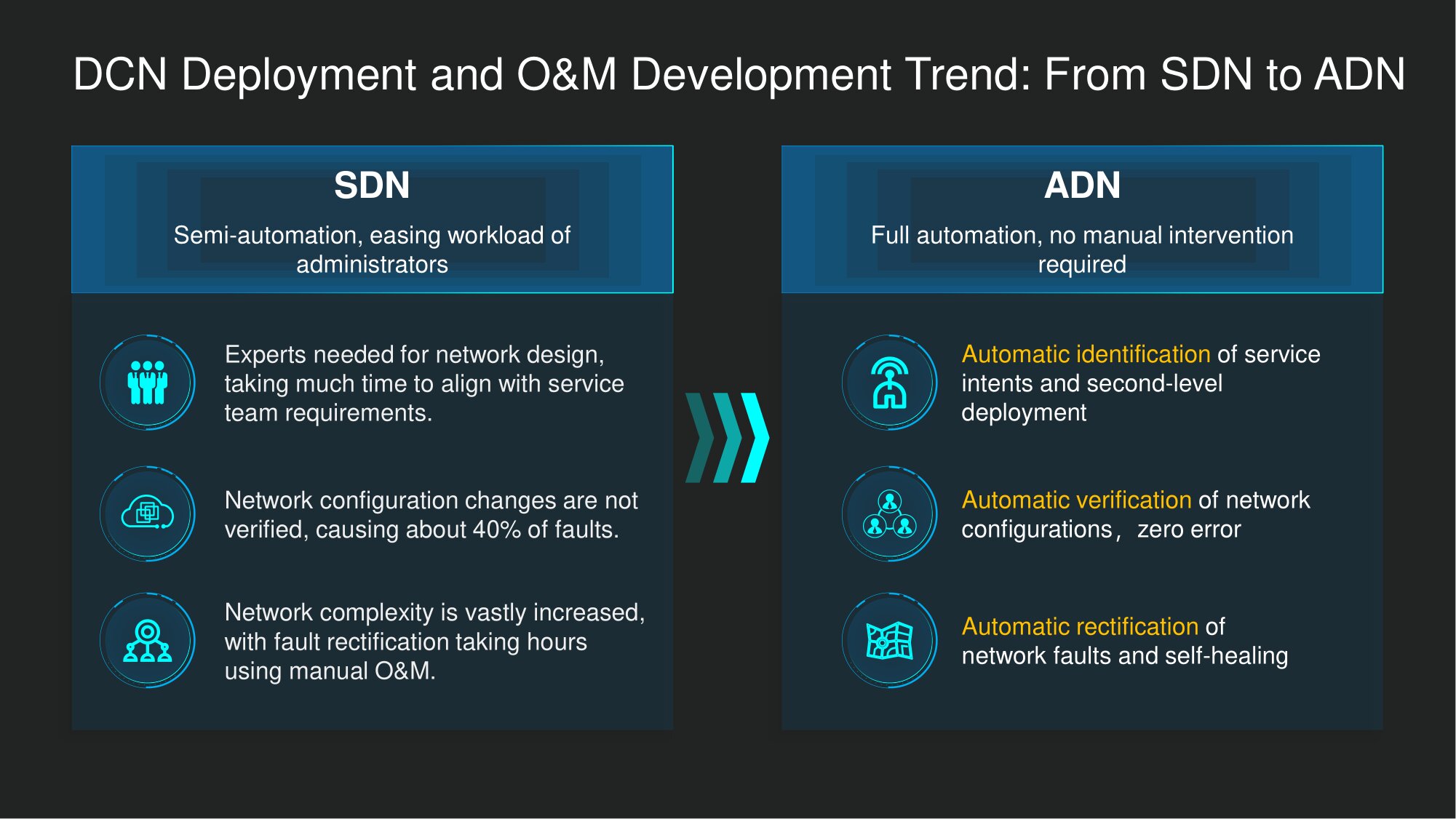

Let's turn to autonomous driving networks (ADN). There is no doubt that, technologically, software-defined networking (SDN) is a confident step forward in managing the network component of the data center. Applying the SDN concept in practice significantly speeds up the initialization and configuration of the data center network layer. But the capabilities it provides are, of course, not enough to fully automate data center O&M. To go further, three primary challenges need to be addressed.

First, data center network infrastructure offers more and more capabilities related to provisioning services and configuring how they operate, especially in the financial sector. It is important to be able to translate service-level intent to the network layer automatically.

Second, such incremental provisioning commands need to be verified. Understandably, many data center networks were configured long ago, using well-established or even outdated approaches. How do you ensure that additional customization does not break procedures that have already been debugged? Automatic verification of the new settings is indispensable, and it has to be automatic, since the set of existing settings in a data center is usually prohibitively large. Coping with it manually is practically impossible.
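As a toy illustration of what automatic verification of an incremental change might look like, the sketch below checks proposed VLAN-to-subnet bindings against the existing ones before anything is applied. The data model (a mapping from VLAN ID to CIDR string) and the function name `verify_change` are hypothetical; this is not how CloudFabric 2.0 performs verification, only a minimal example of the idea.

```python
from ipaddress import ip_network
from typing import Dict, List


def verify_change(existing: Dict[int, str], proposed: Dict[int, str]) -> List[str]:
    """Return a list of conflicts between proposed and existing bindings.

    An empty list means the incremental change looks safe under this
    (deliberately simplistic) rule set.
    """
    problems = []
    for vlan, cidr in proposed.items():
        new_net = ip_network(cidr)
        # Rule 1: a VLAN must not be silently rebound to a different subnet.
        if vlan in existing and ip_network(existing[vlan]) != new_net:
            problems.append(f"VLAN {vlan} is already bound to {existing[vlan]}")
        # Rule 2: a new subnet must not overlap a subnet of another VLAN.
        for other_vlan, other_cidr in existing.items():
            if other_vlan != vlan and new_net.overlaps(ip_network(other_cidr)):
                problems.append(
                    f"{cidr} (VLAN {vlan}) overlaps {other_cidr} (VLAN {other_vlan})"
                )
    return problems


existing = {10: "10.0.10.0/24", 20: "10.0.20.0/24"}
print(verify_change(existing, {30: "10.0.30.0/24"}))    # no conflicts expected
print(verify_change(existing, {30: "10.0.20.128/25"}))  # overlaps VLAN 20
```

A real verifier would cover far more rule types (routing, ACLs, reachability), but the pattern is the same: evaluate the proposed change against the full existing state and refuse to apply it if any rule is violated.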

Third, there is the question of resolving problems in the network infrastructure quickly and effectively. When automation reaches a high level, data center administrators and service engineers can no longer track in real time what is happening on the network. They need tooling that keeps a network undergoing thousands of changes a day consistently transparent to them, and that builds knowledge-graph-based databases for dealing with problems quickly.

ADNs can help us meet these challenges on the way to truly smart data centers. The philosophy of autonomously managed networks (which migrated into the data center world from a neighboring field, the junction of IoT and V2X in particular) lets us rethink approaches to automation at different levels of the data center network.

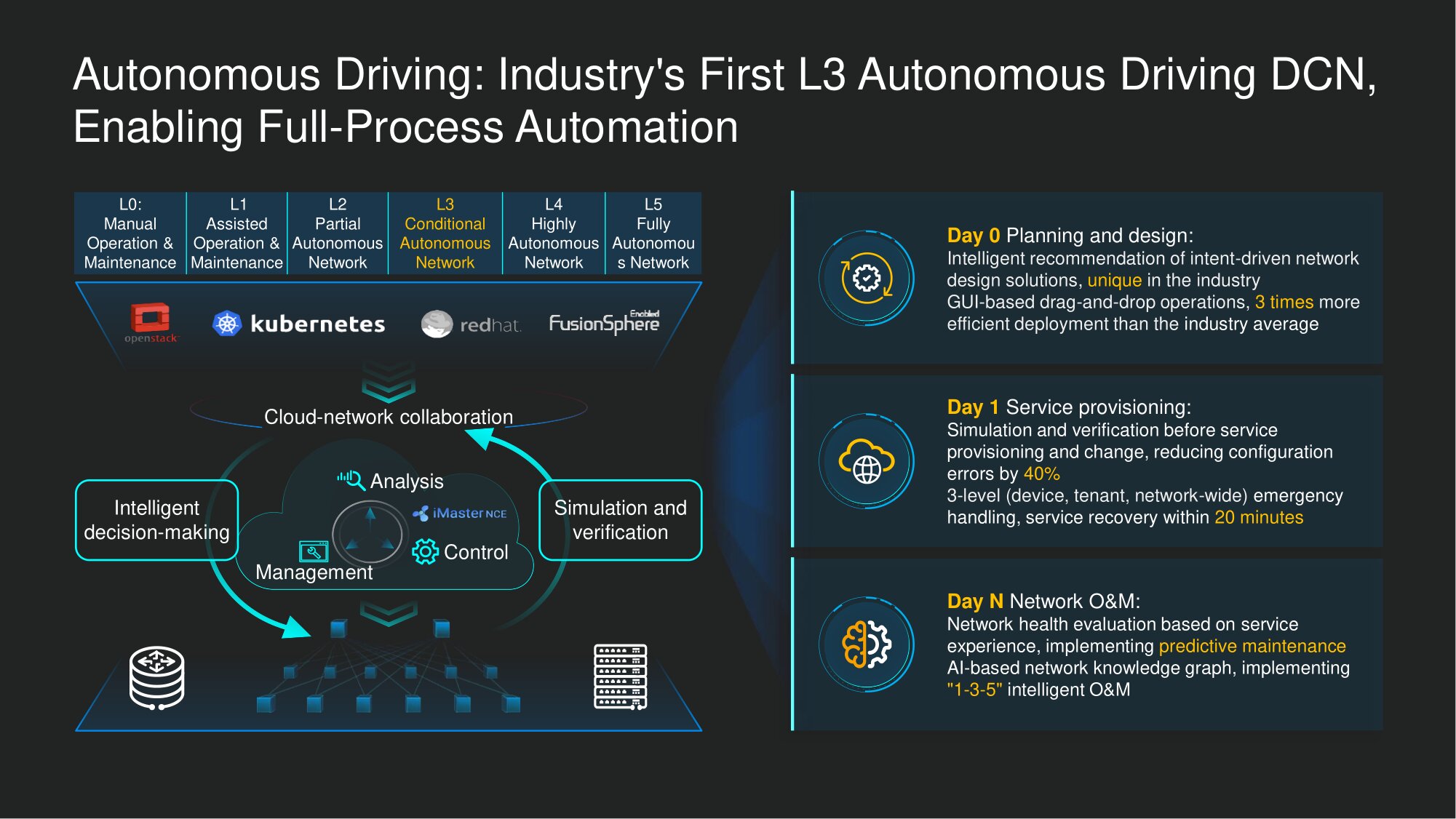

At present, we have reached level L3 (conditional automation) in the autonomous management of data center networks. This means a high degree of data center automation, in which human intervention is required only occasionally and only under certain conditions.

Meanwhile, full automation is already possible in a number of scenarios. We are working with our clients in a joint innovation program on comprehensive automation of data center networks in line with the ADN concept, primarily in the context of network troubleshooting. For the most pressing and time-consuming problems we have already had success: for example, our intelligent technologies automatically resolve about 85% of the most frequently occurring failure scenarios in data center networks.

This functionality is implemented within our O&M 1-3-5 concept: one minute to establish that a failure has occurred or to detect the risk of one, three minutes to determine its root cause, and five minutes to suggest how to eliminate it. Of course, for the time being, human participation is still needed for final decisions, in particular for choosing one of the possible remedies and giving the command to execute it. Someone has to take responsibility for the choice. However, based on our practical experience, we believe that the system, even in its current implementation, offers well-founded and appropriate solutions.
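The 1-3-5 targets can be expressed as a simple check on an incident timeline. The sketch below assumes that each figure is a separate budget for its own stage (the text does not say whether the times are per stage or cumulative), and the `Incident` class and its field names are hypothetical, not part of any Huawei tool.

```python
from dataclasses import dataclass
from typing import List

# Per-stage budgets in minutes, taken from the 1-3-5 description above.
BUDGET_MINUTES = {"detect": 1, "diagnose": 3, "propose_fix": 5}


@dataclass
class Incident:
    """Time spent on each stage of handling one failure, in minutes."""
    detect: float
    diagnose: float
    propose_fix: float


def stages_over_budget(incident: Incident) -> List[str]:
    """Return the stages that took longer than their 1-3-5 budget."""
    return [
        stage
        for stage, limit in BUDGET_MINUTES.items()
        if getattr(incident, stage) > limit
    ]


print(stages_over_budget(Incident(detect=0.5, diagnose=2.0, propose_fix=4.0)))
print(stages_over_budget(Incident(detect=0.5, diagnose=4.5, propose_fix=4.0)))
```

The first incident meets every budget; the second exceeds only the root-cause stage. The final step, choosing and executing a remedy, is left out on purpose, because the text assigns it to a human operator.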

These are some of the toughest challenges facing smart data center architects in 2020, and we have in fact dealt with them. For example, functionality for translating requests from the service layer to the network layer and for automatic verification of settings is already included in CloudFabric 2.0.

We are pleased that our accomplishments have been recognized: this year we received the Gartner Peer Insights Customer Choice Award, as well as the F&S Global Data Center Switch Technology Leadership Award for the CloudEngine 16800 switch, which was recognized for its outstanding throughput, the highest density of 400-gigabit interfaces, the overall scalability of the system, and intelligent technologies that make it possible, in particular, to reduce packet loss to zero.