Translator's note: The author of this article (Luc Perkins) is a developer advocate at the CNCF, home to open source projects such as Linkerd, SMI (Service Mesh Interface) and Kuma (by the way, have you also wondered why Istio is not on this list?). In another attempt to give the DevOps community a better understanding of the service mesh hype, he lists 16 characteristic capabilities that such solutions provide.

Service mesh is one of the hottest topics in software engineering today (and rightfully so!). I find this technology incredibly promising and dream of seeing it widely adopted (where it makes sense, of course). However, for most people it is still surrounded by an aura of mystery. Moreover, even those who are well acquainted with it (your humble servant included) often find it difficult to articulate what exactly it is and what its advantages are. In this article, I will try to remedy the situation by listing various use cases for "service grids" *.

* Translator's note: here and below, "service grid" is used as the translation of the still-new term "service mesh".

But first I want to make a few comments:

- , . , service mesh Twitter 2015 ( « ») Linkerd, - .

- — . , , .

- At the same time, not every existing service mesh implementation supports all of these use cases. Therefore, statements of mine like "a service mesh can ..." should be read as "some, and possibly all, popular service mesh implementations can ...".

- The order of the examples does not matter.

Short list:

- service discovery;

- encryption;

- authentication and authorization;

- load balancing;

- circuit breaking;

- autoscaling;

- canary deployments;

- blue-green deployments;

- health check;

- load shedding;

- traffic mirroring;

- isolation;

- rate limiting, retries and timeouts;

- telemetry;

- audit;

- visualization.

1. Service Discovery

TL;DR: Connect to other services on the network using simple names.

Services should be able to automatically "discover" each other by an appropriate name, for example `service.api.production`, `pets/staging` or `cassandra`. Cloud environments are elastic, and a single name can hide multiple instances of a service. Clearly, in such a situation it is physically impossible to hardcode all the IP addresses.

Plus, when one service finds another, it should be able to send requests to it without fear that they will land on a dead instance. In other words, the service mesh must monitor the health of all service instances and keep the list of hosts up to date.

Each service mesh implements service discovery differently. At the moment, the most common way is to delegate to external mechanisms such as Kubernetes DNS. In the past, at Twitter, we used the Finagle naming system for this purpose. In addition, service mesh technology makes custom naming mechanisms possible (although I have yet to come across a service mesh implementation with this functionality).
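To make the idea concrete, here is a minimal sketch of what "a name that hides a changing set of healthy instances" could look like. It is not how any particular mesh does it: the `Registry` class, its methods and the addresses are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Registry:
    """Toy in-memory registry: logical service name -> instance addresses."""

    # name -> {address: is the instance currently believed healthy?}
    instances: dict[str, dict[str, bool]] = field(default_factory=dict)

    def register(self, name: str, address: str) -> None:
        self.instances.setdefault(name, {})[address] = True

    def mark(self, name: str, address: str, healthy: bool) -> None:
        # Called by whatever component performs health checks.
        self.instances[name][address] = healthy

    def resolve(self, name: str) -> list[str]:
        # Callers only ever see instances currently believed healthy.
        return sorted(a for a, ok in self.instances.get(name, {}).items() if ok)


registry = Registry()
registry.register("cassandra", "10.0.0.5:9042")
registry.register("cassandra", "10.0.0.6:9042")
registry.mark("cassandra", "10.0.0.6:9042", healthy=False)
print(registry.resolve("cassandra"))  # ['10.0.0.5:9042']
```

The caller asks for `cassandra` and never sees IP addresses in its own code; the list behind the name can change at any time.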

2. Encryption

TL;DR: Get rid of unencrypted traffic between services, and make the process automated and scalable.

It's nice to know that attackers cannot penetrate your internal network, and firewalls do an excellent job of keeping them out. But what happens if an attacker does get inside? Can they do whatever they want with inter-service traffic? Let's hope not. To prevent this scenario, you should implement a zero-trust network in which all traffic between services is encrypted. Most modern service meshes achieve this with mutual TLS (mTLS). In some cases, mTLS works across entire clouds and clusters (I think that interplanetary communications will someday be arranged in a similar way).

Of course, you don't need a service mesh for mTLS. Each service can take care of its own TLS, but that means finding a way to generate certificates, distribute them among service hosts, and add code to the application that loads these certificates from files. And don't forget to renew the certificates at regular intervals. Service meshes automate mTLS with systems like SPIFFE, which in turn automate the issuing and rotation of certificates.

3. Authentication and authorization

TL;DR: Determine who initiates the request and what they are allowed to do before the request reaches the service.

Services often want to know who is making the request (authentication) and, using this information, decide what the subject is allowed to do (authorization). In this case, the pronoun "who" can hide:

- Other services. This is called "peer authentication". For example, a service `web` wants to access a service `db`. Service meshes usually solve this with mTLS: the certificates act as the necessary identifier.

- -. « ». ,

haxor69. , , JSON Web Tokens.

. ,users, ,permissions.. service mesh , .

After we have established who the request came from, we need to determine what this subject is allowed to do. Some service meshes let you define basic policies (who can do what) as YAML files or on the command line, while others offer integration with frameworks like the Open Policy Agent. The ultimate goal is for your services to be able to accept any request, safely assuming that it comes from a trusted source and that the action is allowed.
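As a rough illustration of a "who can do what" policy, the sketch below checks an already authenticated caller identity against a default-deny allow list. The `Rule` type, the `POLICY` entries and the paths are invented for the example; real meshes express this in their own policy formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    source: str   # authenticated identity of the caller (e.g. from an mTLS certificate)
    method: str   # HTTP method
    prefix: str   # allowed path prefix


# Hypothetical policy for one service: default deny, explicit allow rules.
POLICY = [
    Rule(source="web", method="GET", prefix="/users"),
    Rule(source="web", method="POST", prefix="/orders"),
]


def is_allowed(source: str, method: str, path: str, policy=POLICY) -> bool:
    """Return True only if some rule explicitly allows this request."""
    return any(
        r.source == source and r.method == method and path.startswith(r.prefix)
        for r in policy
    )


print(is_allowed("web", "GET", "/users/42"))     # True
print(is_allowed("web", "DELETE", "/users/42"))  # False
print(is_allowed("batch", "GET", "/users/42"))   # False
```

Because the check runs before the request reaches the service, the service code itself contains no authorization logic.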

4. Load balancing

TL;DR: Distribute load across service instances according to a specific pattern.

A "service" within a service mesh very often consists of many identical instances. For example, today the service `cache` consists of 5 copies, and tomorrow their number may grow to 11. Requests directed to `cache` should be distributed with a specific goal in mind, for example to minimize latency or to maximize the likelihood of reaching a healthy instance. The most commonly used algorithm is round-robin, but there are many others: for example, weighted requests (you can mark preferred targets), ring hashing (consistent hashing across upstream hosts) or least request (the instance with the fewest active requests is preferred).

Classic load balancers have other features, such as HTTP caching and DDoS protection, but they are not very relevant for east-west traffic (i.e., traffic flowing within a data center; translator's note), which is the typical use of a service mesh. Of course, you don't need a service mesh for load balancing, but it allows you to define and control balancing policies for each service from a centralized management layer, eliminating the need to run and configure separate load balancers in the network stack.
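The three strategies named above can be sketched in a few lines each. This is a simplified illustration, not the implementation used by any specific proxy; the host names and the `replicas` parameter (virtual nodes per host) are assumptions made for the example.

```python
import bisect
import hashlib
import itertools


def round_robin(hosts):
    """Cycle through hosts in order, forever."""
    return itertools.cycle(hosts)


def least_request(in_flight):
    """Pick the host with the fewest in-flight requests."""
    return min(in_flight, key=in_flight.get)


class HashRing:
    """Consistent hashing: a key maps to the first host point clockwise on the ring."""

    def __init__(self, hosts, replicas=100):
        # Each host gets `replicas` points on the ring to even out the distribution.
        self._ring = sorted(
            (self._hash(f"{host}#{i}"), host)
            for host in hosts
            for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    @staticmethod
    def _hash(value):
        return int(hashlib.sha256(value.encode()).hexdigest(), 16)

    def pick(self, key):
        index = bisect.bisect(self._points, self._hash(key)) % len(self._ring)
        return self._ring[index][1]


hosts = ["cache-1", "cache-2", "cache-3"]
rr = round_robin(hosts)
print([next(rr) for _ in range(4)])  # ['cache-1', 'cache-2', 'cache-3', 'cache-1']
print(least_request({"cache-1": 7, "cache-2": 2, "cache-3": 5}))  # cache-2
ring = HashRing(hosts)
print(ring.pick("user:42") == ring.pick("user:42"))  # True: same key, same host
```

Ring hashing is useful when the same key should keep landing on the same instance (for example, for cache locality): when a host is removed, only the keys that lived on that host move.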

5. Circuit breaking

TL;DR: Stop traffic to a problematic service and limit the damage in worst-case scenarios.

If, for some reason, a service is unable to handle its traffic, the service mesh provides several options for dealing with the problem (the others are discussed in their own sections). Circuit breaking is the harshest one: it cuts the service off from traffic entirely. However, it makes no sense on its own; you need a backup plan. You can apply backpressure to the services making the requests (just don't forget to configure your service mesh for that!), or, for example, turn the status page red and redirect users to another version of the page with the "fail whale" ("Twitter is down").

Service meshes let you define not only when the circuit breaks but also what happens next. Here, "when" can be any combination of parameters: the total number of requests over a certain period, the number of concurrent connections, pending requests, active retries, and so on.

You might not want to abuse circuit breaking, but it is nice to know that there is a contingency plan for emergencies.
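For readers who have not met the pattern, here is a minimal circuit breaker sketch. It uses just one of the possible "when" conditions (consecutive failures) and a caller-supplied fallback as the "what happens next"; the class, its parameter names and the defaults are assumptions for illustration only.

```python
import time


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures; probes again after `reset_after` seconds."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()      # open: do not touch the service at all
            self.opened_at = None      # half-open: let one trial request through
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0              # success closes the circuit again
        return result


breaker = CircuitBreaker(max_failures=2, reset_after=30.0)


def flaky():
    raise ConnectionError("service is down")


for _ in range(3):
    print(breaker.call(flaky, fallback=lambda: "fail whale"))  # fail whale (each time)
print(breaker.opened_at is not None)  # True: the third call never reached flaky()
```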

6. Autoscaling

TL;DR: Increase or decrease the number of service instances based on specified criteria.

Service meshes are not schedulers, so they do not scale anything on their own. However, they can provide the information on which schedulers base their decisions. Since service meshes have access to all traffic between services, they have a wealth of information about what is happening: which services are struggling, which ones are barely loaded (so the capacity allocated to them is wasted), and so on.

For example, Kubernetes scales services depending on the CPU and memory usage of the pods (see our talk "Autoscaling and resource management in Kubernetes"; translator's note), but if you decide to scale on any other metric (in our case, one related to traffic), you will need a custom metric. A tutorial like this shows how to do it with Envoy, Istio and Prometheus, but the process itself is quite complex. We would like the service mesh to simplify it by allowing conditions such as "increase the number of instances of the `auth` service if the number of pending requests stays above the threshold for a minute".
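The wished-for rule at the end of the previous paragraph could be evaluated roughly as follows. This is only a sketch of the decision logic over traffic samples a mesh might expose; the function name, the sample format and the numbers are assumptions, and actually adding instances would still be the scheduler's job.

```python
def should_scale_up(samples, threshold, window=60.0):
    """samples: list of (timestamp_seconds, pending_requests), oldest first.

    True if there is at least `window` seconds of history and every sample
    within the last `window` seconds is above `threshold`.
    """
    if not samples:
        return False
    latest = samples[-1][0]
    if latest - samples[0][0] < window:
        return False  # not enough history yet
    recent = [pending for ts, pending in samples if latest - ts <= window]
    return all(pending > threshold for pending in recent)


samples = [(t, 120) for t in range(0, 61, 10)]  # 0..60 s, always 120 pending
print(should_scale_up(samples, threshold=100))  # True
samples[3] = (30, 80)                           # one dip below the threshold
print(should_scale_up(samples, threshold=100))  # False
```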

7. Canary deployments

TL;DR: Try out new features or service versions on a subset of users.

Let's say you're developing a SaaS product and intend to roll out a cool new version of it. You tested it in staging and it worked great. Still, there are some concerns about how it will behave in real conditions. In other words, you need to test the new version on real workloads without risking users' trust. Canary deployments are great for this. They let you expose a new feature to a subset of users. This subset might be your most loyal users, or those on the free tier of the product, or those who have volunteered to be guinea pigs.

Service meshes do this by letting you specify criteria that determine who sees which version of your application, and by routing traffic accordingly. Nothing changes for the services themselves: version 1.0 of the service believes that all requests come from users who should see it, and version 1.1 assumes the same about its users. Meanwhile, you can change the split of traffic between the old and the new version, redirecting a growing number of users to the new one as long as it works stably and your "test subjects" give the go-ahead.
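One simple routing criterion is a percentage split that is sticky per user. The sketch below shows that idea only; the hashing scheme and the `pick_version` helper are assumptions for illustration, and meshes that split by weight may do so per request rather than per user.

```python
import hashlib


def pick_version(user_id: str, canary_percent: int) -> str:
    """Deterministically route a stable slice of users to the canary (version 1.1)."""
    digest = hashlib.sha256(user_id.encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # 0..99, stable per user
    return "1.1" if bucket < canary_percent else "1.0"


users = [f"user-{i}" for i in range(1000)]
share = sum(pick_version(u, 10) == "1.1" for u in users) / len(users)
print(f"canary share at 10%: {share:.1%}")
```

Because the bucket depends only on the user ID, raising `canary_percent` from 10 to 50 keeps every existing canary user on the new version and simply adds more users to the group.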

8. Blue-green deployments

TL;DR: Roll out the cool new feature, but be prepared to roll it back immediately.

The point of blue-green deployments is to roll out a new "blue" service by running it in parallel with the old "green" one. If everything goes smoothly and the new service proves itself, the old one can be gradually turned off. (Alas, someday this new "blue" service will share the fate of the "green" one and disappear...) Blue-green deployments differ from canary deployments in that the new feature reaches all users at once (not just some of them); the point here is to have a "safe harbor" ready in case something goes wrong.

Service meshes provide a very convenient way to test the "blue" service and instantly switch back to the working "green" one if there is a problem. Not to mention that along the way they provide a lot of information (see "Telemetry" below) about how the "blue" service is doing, which helps you understand whether it is ready for full production use.

Translator's note: Read more about different Kubernetes deployment strategies (including the canary and blue-green strategies mentioned here, and others) in this article.

9. Health check

TL;DR: Keep track of which service instances are up and react to those that no longer are.

A health check helps you decide whether service instances are ready to receive and process traffic. For example, for HTTP services, a health check might be a GET request to a `/health` endpoint. A `200 OK` response means the instance is healthy; any other response means it is not ready to receive traffic. Service meshes let you specify both how health is checked and how often. This information can then be used for other purposes, such as load balancing and circuit breaking.

Thus, the health check is not a use case in its own right, but is usually a means to other ends. Also, depending on the results of health checks, actions outside the service mesh's other concerns may be required: for example, updating the status page, opening an issue on GitHub, or filing a JIRA ticket. The service mesh offers a handy mechanism for automating all of this.
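A health check along the lines described above can be sketched as a probe plus a small amount of state. The `/health` convention comes from the text; the `HealthTracker` class and its `unhealthy_after` threshold are assumptions added so that a single dropped probe does not immediately eject an instance.

```python
import urllib.error
import urllib.request


def http_probe(url: str, timeout: float = 1.0) -> bool:
    """Healthy only if a GET to `url` returns 200 OK within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        return False


class HealthTracker:
    """Marks an instance unhealthy after `unhealthy_after` consecutive failed probes."""

    def __init__(self, probe, unhealthy_after=3):
        self.probe = probe  # callable returning True (healthy) or False
        self.unhealthy_after = unhealthy_after
        self.failures = 0
        self.healthy = True

    def check(self) -> bool:
        if self.probe():
            self.failures = 0
            self.healthy = True
        else:
            self.failures += 1
            if self.failures >= self.unhealthy_after:
                self.healthy = False
        return self.healthy
```

In use, something like `HealthTracker(lambda: http_probe("http://10.0.0.5:8080/health"))` (a made-up address) would be called on a fixed interval, and its result fed into load balancing or circuit breaking decisions.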

10. Load shedding

TL;DR: Redirect traffic in response to temporary spikes in usage.

If a service is overloaded with traffic, you can temporarily redirect some of that traffic elsewhere (that is, "shed" it): for example, to a backup service or data center, or to a persistent Pulsar topic. As a result, the service keeps processing part of the requests instead of crashing and processing nothing at all. Load shedding is preferable to circuit breaking, but it is still best not to overuse it. It helps prevent cascading failures that bring down downstream services.
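The mechanics can be illustrated with an admission gate that caps concurrent work and diverts the overflow. This is a single-threaded sketch with invented names (`AdmissionGate`, `route`); the two lists stand in for the real service and for a backup destination such as a queue or topic.

```python
class AdmissionGate:
    """Admits at most `max_in_flight` requests at a time."""

    def __init__(self, max_in_flight):
        self.max_in_flight = max_in_flight
        self.in_flight = 0

    def try_admit(self) -> bool:
        if self.in_flight >= self.max_in_flight:
            return False
        self.in_flight += 1
        return True

    def done(self) -> None:
        # Called when an admitted request has finished.
        self.in_flight -= 1


def route(gate, request, primary, backlog):
    """Send the request to the primary if there is capacity, otherwise shed it."""
    if gate.try_admit():
        primary.append(request)  # stands in for dispatching to the service
        return "served"
    backlog.append(request)      # stands in for a backup service or topic
    return "shed"


gate = AdmissionGate(max_in_flight=2)
primary, backlog = [], []
# Five requests arrive before any of them completes.
print([route(gate, f"req-{i}", primary, backlog) for i in range(5)])
# ['served', 'served', 'shed', 'shed', 'shed']
gate.done()  # one request finishes, freeing a slot
print(route(gate, "req-5", primary, backlog))  # served
```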

11. Traffic parallelization / mirroring

TL;DR: Send one request to multiple destinations at once.

Sometimes you need to send a request (or some sample of requests) to several services at once. A typical example is sending part of production traffic to a staging service. The main production web server sends a request to the downstream service `products.production`, and only to it. The service mesh then intelligently copies this request and sends it to `products.staging`, without the web server even being aware of it.

A related service mesh use case that can be built on top of traffic mirroring is regression testing. It involves sending the same requests to different versions of a service and checking whether all versions behave the same way. I have not yet come across a service mesh implementation with an integrated regression testing system like Diffy, but the idea itself seems promising.
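The essential property of mirroring is that the copy can never affect the real response. The sketch below shows that property with plain functions standing in for services; `mirrored` is a hypothetical helper, and a real proxy would send the copy asynchronously rather than inline.

```python
def mirrored(primary, shadow):
    """Wrap `primary` so every request is also sent to `shadow`.

    Only the primary's response is returned; shadow errors never reach the caller.
    """
    def handle(request):
        response = primary(request)
        try:
            shadow(request)  # fire-and-forget copy; its result is discarded
        except Exception:
            pass             # a broken staging service must not affect production
        return response
    return handle


seen_by_staging = []


def production(request):
    return f"prod handled {request}"


def staging(request):
    seen_by_staging.append(request)
    raise RuntimeError("staging is broken")


handler = mirrored(production, staging)
print(handler("GET /products/1"))  # prod handled GET /products/1
print(seen_by_staging)             # ['GET /products/1']
```

For regression testing, the shadow's response would be kept and compared with the primary's instead of being discarded.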

12. Isolation

TL;DR: Break your service mesh into mini networks.

Also known as segmentation, isolation is the art of dividing a service mesh into logically distinct segments that know nothing about each other. Isolation is a bit like creating virtual private networks. The fundamental difference is that you still get the full benefits of the service mesh (such as service discovery), but with added security. For example, if an attacker manages to penetrate a service in one of the subnets, they will not be able to see which services are running in other subnets or intercept their traffic.

In addition, the benefits can be organizational. You may want to subnet your services based on your organization's structure and relieve developers of the cognitive burden of keeping the entire service mesh in mind.

13. Rate limiting, retries and timeouts

TL;DR: You no longer need to build request-management chores into your codebase.

All of these could be viewed as separate use cases, but I decided to combine them because they have one thing in common: they take over request lifecycle management tasks that are usually handled by application libraries. If you are developing a Ruby on Rails web server (not integrated with a service mesh) that makes requests to backend services via gRPC, the application has to decide what to do if N requests fail. You also have to find out how much traffic those services can handle and hardcode these parameters using a special library. Plus, the application has to decide when to give up and let a request fail (time out). And to change any of these parameters, the web server has to be stopped, reconfigured and restarted.

Handing these tasks over to the service mesh means not only that service developers don't need to think about them, but also that they can be considered more globally. If you have a complex chain of services, say A -> B -> C -> D -> E, the entire lifecycle of the request must be taken into account. If the task is to extend timeouts in service C, it makes sense to do it in one go through the mesh configuration rather than piecemeal: updating the service code, waiting for the pull request to be accepted and for the CI system to deploy the updated service.
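To show the kind of logic that otherwise ends up hardcoded in each application, here is a sketch of a token-bucket rate limiter and a retry loop bounded by an overall deadline. All names and default values are assumptions; note that `deadline` here is a budget for the whole retry sequence, not a per-request network timeout.

```python
import time


class TokenBucket:
    """Rate limiter: `rate` tokens per second, bursts of up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def call_with_retries(func, attempts=3, deadline=2.0, backoff=0.1,
                      clock=time.monotonic, sleep=time.sleep):
    """Retry `func` up to `attempts` times, but never past the overall deadline."""
    start = clock()
    for attempt in range(attempts):
        try:
            return func()
        except Exception:
            delay = backoff * 2 ** attempt  # exponential backoff
            last_attempt = attempt == attempts - 1
            if last_attempt or clock() - start + delay > deadline:
                raise                       # give up: out of attempts or time
            sleep(delay)


attempts_made = []


def flaky():
    attempts_made.append(1)
    if len(attempts_made) < 3:
        raise TimeoutError("backend too slow")
    return "ok"


print(call_with_retries(flaky, sleep=lambda seconds: None))  # ok
```

With a mesh, the equivalents of `rate`, `attempts` and `deadline` live in configuration outside the application, so changing them does not require a code change or a redeploy.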

14. Telemetry

TL;DR: Collect all the necessary (and not so necessary) information from services.

Telemetry is a general term that includes metrics, distributed tracing, and logging. Service meshes offer mechanisms for collecting and processing all three types of data. This is where things get a little fuzzy, as there are so many options available. For collecting metrics there are Prometheus and other tools; for collecting logs you can use fluentd, Loki, Vector, etc. (for example, ClickHouse with our loghouse for K8s; translator's note); for distributed tracing there is Jaeger, and so on. Each service mesh may support some tools and not others. It will be interesting to see whether the OpenTelemetry project can bring some convergence.

In this case, the advantage of service mesh technology is that sidecar containers can, in principle, collect all of the above data from their services. In other words, you get a unified telemetry collection system at your disposal, and the service mesh can process all this information in various ways. For instance:

- tail logs from a certain service in the CLI;

- track the volume of requests from the service mesh dashboard;

- collect distributed traces and redirect them to a system like Jaeger.

Warning, subjective opinion: Generally speaking, telemetry is an area where heavy service mesh involvement is undesirable. Gathering basic information and tracking some of the "golden metrics" on the fly, such as success rates and latencies, is fine, but let's hope we don't see the emergence of Frankenstein stacks that try to replace specialized systems, some of which have already proven themselves and are well understood.
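As an example of the "basic information" meant here, the sketch below computes a success rate and latency percentiles from request records that a sidecar could observe. The record format, the nearest-rank percentile method and the choice to count any status below 500 as a success are all assumptions made for illustration; the input is assumed to be non-empty.

```python
import math


def golden_metrics(requests):
    """requests: non-empty list of (status_code, latency_ms) observed by a proxy."""
    latencies = sorted(latency for _, latency in requests)
    successes = sum(1 for status, _ in requests if status < 500)

    def percentile(p):
        # Nearest-rank percentile over the sorted latencies.
        return latencies[math.ceil(p * len(latencies)) - 1]

    return {
        "success_rate": successes / len(requests),
        "p50_ms": percentile(0.50),
        "p99_ms": percentile(0.99),
    }


sample = [(200, 12), (200, 15), (500, 230), (200, 11), (200, 14)]
print(golden_metrics(sample))
# {'success_rate': 0.8, 'p50_ms': 14, 'p99_ms': 230}
```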

15. Audit

TL;DR: Those who forget the lessons of history are doomed to repeat them.

Auditing is the art of observing important events in the system. In the case of a service mesh, this could mean keeping track of who made requests to specific endpoints of certain services, or how many times a security event occurred in the last month.

Clearly, auditing is very closely related to telemetry. The difference is that telemetry is usually associated with things like performance and technical correctness, while auditing can relate to legal and other issues that go beyond the strictly technical domain (for example, compliance with the GDPR, the EU's General Data Protection Regulation).

16. Visualization

TL;DR: Long live React.js, an inexhaustible source of fancy interfaces.

Perhaps there is a better term, but I don’t know it. I just mean a graphical representation of the service mesh or some of its components. These visualizations can include indicators such as average latencies, sidecar configuration information, health check results, and alerts.

Working in a service-oriented environment carries a much higher cognitive load than working with His Majesty the Monolith. Therefore, cognitive pressure should be reduced wherever possible. Even a plain graphical interface for a service mesh, where you can click a button and get the desired result, could be critical to the growing popularity of this technology.

Not included in the list

I originally intended to include a few more use cases on the list, but then decided not to. Here they are, along with the reasons for my decision:

- Multi-data center. In my opinion, this is not so much a use case as a narrow and specific application area for service meshes, or a set of functions such as service discovery.

- Ingress and egress. This is a related area, but I have limited myself (perhaps artificially) to the east-west traffic scenario. Ingress and egress deserve a separate article.

Conclusion

That's all for now! Again, this list is highly tentative and most likely incomplete. If you think I am missing something or am mistaken about something, please contact me on Twitter (@lucperkins). Please keep it civil.

P.S. from the translator

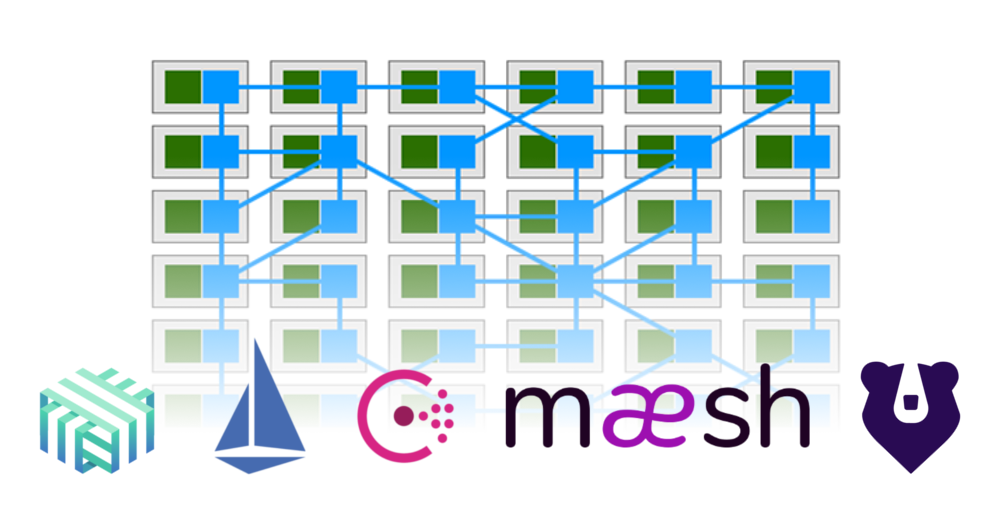

An image from the article "What is a Service Mesh (and when to use one)?" (by Gregory MacKinnon). It shows how some of the functionality from applications (in green) has moved to the service mesh, which provides the interconnections between them (in blue).
