We recently needed to integrate Rosplatforma (R-Virtualization and R-Storage) with an HPE 3PAR hardware storage system. This kind of integration can be useful in a number of scenarios.

Synergy configuration

We had previously tested this kind of integration on Huawei CH121 V5 blades with a Huawei Dorado 5000 V3 storage system. There, Rosplatforma's R-Storage SDS (software-defined storage) running on top of the storage system performed quite well thanks to its all-flash configuration. With Synergy, however, the JBOD disks available from the D3940 disk module make things much more interesting and flexible.

Three server blades (Synergy 480 Gen10, 871940-B21):

- Each has two Intel® Xeon® Platinum 8270 CPUs.

- Network: two 20 Gb/s ports.

- RAM: 256 GB.

- No drives inside the blades.

- The Synergy disk module provides two 900 GB HDDs in RAID1 per server for the OS/hypervisor, three HPE VK0960GFDKK 960 GB SSDs for the metadata and cache roles (one per server), and nine 900 GB EG0900JFCKB HDDs.

The OS boots through a RAID controller from the Synergy disk module.

The local SDS was deployed on disks presented as JBOD from the same Synergy disk module.

3PAR configuration

Synergy is connected to 3PAR over 16 Gb/s Fibre Channel:

- One 200 GB RAID6 LUN on SSDs, presented one-to-many (to all blades).

- Nine 150 GB RAID6 LUNs on HDDs, three per blade.
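The LUN layout above can be tallied with shell arithmetic as a quick sanity check. This is a minimal sketch: the sizes come from the list above, while the replication factor used for the usable-capacity estimate is an assumption (replica 3, the value the article mentions for the SDS holding VM configuration files), and SDS metadata and free-space reserves are ignored.

```bash
#!/usr/bin/env bash
# Tally the 3PAR capacity presented to the Synergy blades (sizes in GB,
# taken from the test bench description).

ssd_lun_gb=200          # one RAID6 SSD LUN, presented one-to-many
hdd_lun_gb=150          # size of each RAID6 HDD LUN
hdd_luns_per_blade=3
blades=3

# Assumption: replication factor of an SDS tier built on the HDD LUNs.
replicas=${REPLICAS:-3}
if (( replicas < 1 )); then
  echo "REPLICAS must be >= 1" >&2
  exit 1
fi

per_blade_gb=$(( hdd_lun_gb * hdd_luns_per_blade ))
hdd_total_gb=$(( per_blade_gb * blades ))
# The shared SSD LUN is counted once: every blade sees the same LUN.
presented_total_gb=$(( hdd_total_gb + ssd_lun_gb ))
# Rough usable space when every chunk is stored $replicas times
# (integer division; metadata and reserves ignored).
sds_usable_gb=$(( hdd_total_gb / replicas ))

echo "HDD LUNs per blade:   ${per_blade_gb} GB"
echo "HDD LUNs in total:    ${hdd_total_gb} GB"
echo "Presented in total:   ${presented_total_gb} GB"
echo "SDS usable (x${replicas}):   ${sds_usable_gb} GB"
```

With replica 3, the nine HDD LUNs (1350 GB) give roughly 450 GB of usable SDS space. Because each LUN is already RAID6-protected inside 3PAR, the SDS replicas come on top of the array's own redundancy.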

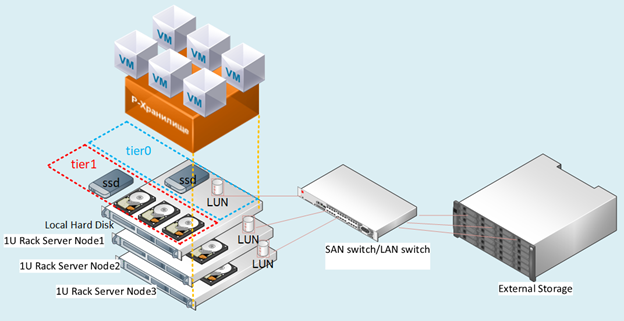

Figure 1. Test bench layout.

Description of scenarios

We tested several options for integrating with 3PAR. They can also be combined in a single mixed configuration.

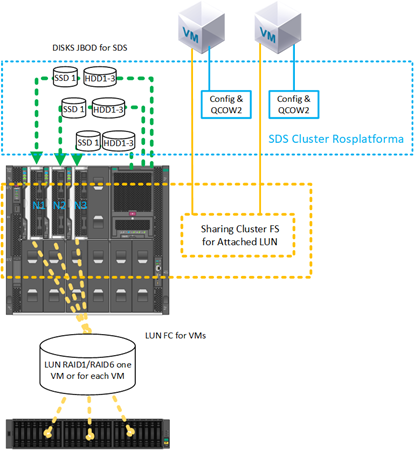

1) Scenario with Rosplatforma SDS deployed on separate 150 GB LUNs from 3PAR:

Figure 2. Scenario with Rosplatforma SDS deployed on separate LUNs.

Each of the three nodes was presented three 150 GB LUNs from the storage system over FC.

On the storage side, the LUNs were configured as RAID6 on HDDs. Figure 2 schematically shows 10 Gb links and a switch, but the actual setup used the Synergy switch with 20 Gb ports: one interface carries management and virtualization traffic, and the other carries SDS storage traffic.

This scenario was tested to verify that the following options work:

- Rosplatforma SDS running on top of 3PAR.

- Accelerating Rosplatforma SDS over 3PAR with a local SSD cache.

- A small Rosplatforma SDS that stores only VM configuration files, while the VMs themselves reside on 3PAR LUNs.

- Rosplatforma SDS on top of 3PAR used as a slightly slower tier than the tier built on local disks.

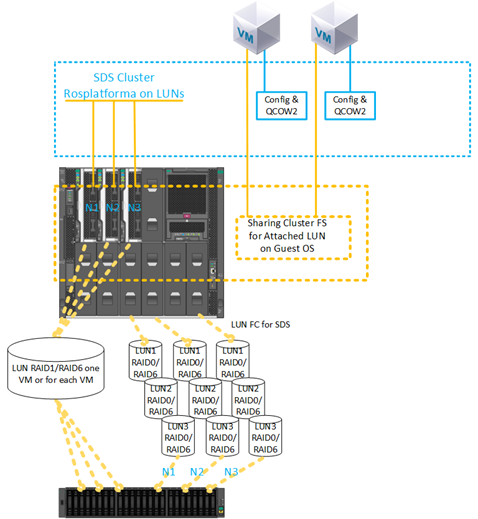

2) Scenario with a VM created on a LUN from 3PAR:

Figure 3. Scenario with a VM on a LUN from 3PAR.

A 200 GB RAID6 LUN on SSDs was presented from the storage system for the VMs, in a one-to-many configuration.

This scenario was tested to verify the following capabilities:

- Attaching a 3PAR LUN directly to a VM.

- Using the attached LUN as the VM's primary disk, without a qcow2 image.

- Attaching the same 200 GB LUN to several VMs in order to run a guest cluster file system on it.

- Live-migrating a VM with the 200 GB 3PAR LUN, and restarting that VM on the remaining nodes after a node failure.

- Using SDS on 3PAR LUNs to store VM configuration files, with the guest OS installed directly on a 3PAR LUN.

- Using SDS on the local disks of the Synergy module to store VM configuration files, with the guest OS installed directly on the 200 GB 3PAR LUN.

Description of settings

For all scenarios, the same settings were applied on each node immediately after installing the three nodes from the Rosplatforma images and before the usual cluster deployment. Brief instructions for installing and configuring the cluster itself are available in the previous article.

1. Enable the multipath service at boot:

```bash
chkconfig multipathd on
```

2. Load the kernel modules:

```bash
modprobe dm-multipath
modprobe dm-round-robin
```

3. Copy the configuration template to the working location:

```bash
cp /usr/share/doc/device-mapper-multipath-0.4.9/multipath.conf /etc/
```

4. Make sure the template contains the following parameters:

```
defaults {
    user_friendly_names yes
    find_multipaths yes
}
```

5. Add an alias for the LUN shared by the VMs:

```
multipaths {
    # Example from the template, left fully commented out:
    # multipath {
    #     wwid 3600508b4000156d700012000000b0000
    #     alias yellow
    #     path_grouping_policy multibus
    #     path_selector "round-robin 0"
    #     failback manual
    #     rr_weight priorities
    #     no_path_retry 5
    # }

    # Alias for the shared 3PAR LUN
    multipath {
        wwid 360002ac0000000000000000f000049f4
        alias test3par
    }
}
```

6. Start the service:

```bash
systemctl start multipathd
```

7. Check the service and list the multipath devices:

```bash
multipath -ll
```

8. Reboot (mandatory):

```bash
reboot
```

9. After the reboot, check the service and the multipath devices again:

```bash
multipath -ll
```

Next, the standard cluster setup was performed.
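Finding the WWID for the `multipaths` section and typing the stanza by hand is error-prone when there are several LUNs. The helpers below are a hypothetical sketch (the function names are not part of Rosplatforma or multipath-tools): one filters the map header lines of `multipath -ll`, assuming the `name (wwid) dm-N vendor,product` layout printed by device-mapper-multipath 0.4.9 and the `3PARdata` vendor string reported by 3PAR LUNs; the other prints an alias stanza. Neither modifies the system.

```bash
#!/usr/bin/env bash
# Helpers for maintaining 3PAR aliases in /etc/multipath.conf.
# Function names are hypothetical; nothing here changes the system.

# list_3par_maps: read `multipath -ll` output on stdin and print
# "<name> <wwid> <dm-device>" for every map whose vendor is 3PARdata.
list_3par_maps() {
  awk '
    # Map header lines start in column 1 and contain a dm-N name;
    # policy and path lines are indented or start with tree characters.
    /^[^ `|]/ && /dm-[0-9]+/ {
      if ($2 ~ /^\(/) {        # "alias (wwid) dm-N vendor,product"
        name = $1; wwid = $2; gsub(/[()]/, "", wwid); dm = $3; vp = $4
      } else {                 # "wwid dm-N vendor,product" (no alias)
        name = $1; wwid = $1; dm = $2; vp = $3
      }
      if (vp ~ /^3PARdata,/) print name, wwid, dm
    }'
}

# mp_alias_stanza: print a multipath { } stanza binding an alias to a WWID,
# for pasting into the multipaths { } section.
mp_alias_stanza() {
  local wwid=$1 alias=$2
  # WWIDs reported by multipath are hexadecimal strings.
  if [[ ! $wwid =~ ^[0-9a-fA-F]+$ ]]; then
    echo "invalid wwid: $wwid" >&2
    return 1
  fi
  # The alias becomes /dev/mapper/<alias>; keep it to a safe character set.
  if [[ ! $alias =~ ^[A-Za-z0-9_.-]+$ ]]; then
    echo "invalid alias: $alias" >&2
    return 1
  fi
  printf 'multipath {\n    wwid %s\n    alias %s\n}\n' "$wwid" "$alias"
}

# Example on a node:
#   multipath -ll | list_3par_maps
#   mp_alias_stanza 360002ac0000000000000000f000049f4 test3par
```

The generated stanza goes into the `multipaths` section on every node, after which the service is restarted and checked as in steps 6 to 9.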

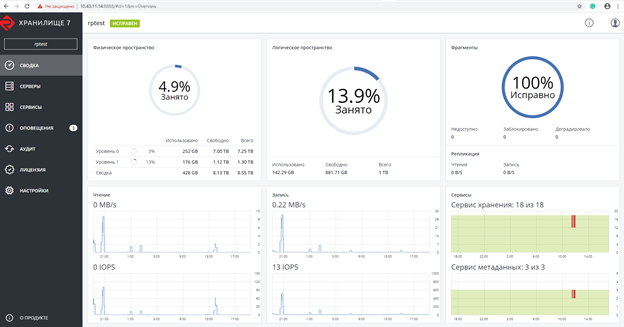

Figure 4. Web UI before enabling the multipath service.

Figure 5. After enabling the multipath service, dm devices appear.

Screenshot after creating the Rosplatforma cluster; the 200 GB LUN for VMs was intentionally left without an assigned role:

Figure 6. Screenshot after creating the Rosplatforma cluster.

The screenshot shows two tiers: tier0 is the local SDS and tier1 is the SDS over 3PAR:

Figure 7. Local SDS and SDS over 3PAR.

Next, a VM was created without a local disk, and the 200 GB LUN from 3PAR was attached to it instead:

```bash
prlctl set testVM --device-add hdd --device /dev/mapper/test3par
```

The VM was created with the following parameters:

- Type - CentOS Linux

- vCPU - 4

- RAM - 8 GB

- Guest OS - CentOS 7 (1908)
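The attach step can be wrapped in a small helper that first checks that the multipath device exists, so a missing map is caught before the VM configuration is changed. This is a hypothetical sketch (`attach_mpath_lun` and `ATTACH_RUN` are not part of Rosplatforma); it only uses the `prlctl set ... --device-add hdd --device` syntax shown above and prints the command instead of running it unless `ATTACH_RUN=1` is set.

```bash
#!/usr/bin/env bash
# attach_mpath_lun <vm> <alias> [mapper_dir]
# Attach a multipath device to a VM as a physical disk. Dry run by default:
# the prlctl command is printed; set ATTACH_RUN=1 to execute it.
attach_mpath_lun() {
  local vm=$1 alias=$2 mapper_dir=${3:-/dev/mapper}
  local dev="${mapper_dir}/${alias}"

  if [[ -z $vm || -z $alias ]]; then
    echo "usage: attach_mpath_lun <vm> <alias> [mapper_dir]" >&2
    return 2
  fi
  # If the map is missing, multipathd is not running on this node
  # or the LUN is not visible here.
  if [[ ! -e $dev ]]; then
    echo "no such multipath device: $dev" >&2
    return 1
  fi

  local cmd=(prlctl set "$vm" --device-add hdd --device "$dev")
  if [[ ${ATTACH_RUN:-0} == 1 ]]; then
    "${cmd[@]}"
  else
    echo "${cmd[*]}"
  fi
}

# Example:  attach_mpath_lun testVM test3par
```

Since the VM configuration stores the `/dev/mapper/<alias>` path, the same alias presumably has to map to the same WWID on every node; otherwise the disk would not resolve after a migration or HA restart.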

Migration tests

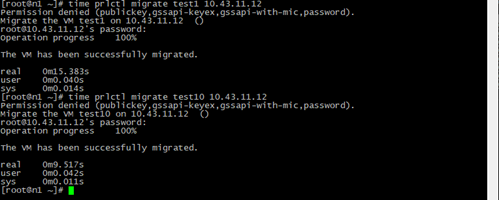

Migration was tested both with and without guest tools installed.

In all cases, the VM migrated live without stopping.

Figure 8. VM migration results and timing.

Test1: a regular VM with the same parameters but with a local 64 GB qcow2 disk image. Test10: live migration of the VM with the 200 GB LUN from 3PAR.

The VM with the 3PAR LUN migrates faster because there is no step of switching to a copy of the disk image on another node: only the configuration file, which references the 200 GB LUN, is copied, and the LUN itself is visible from every node in the cluster.

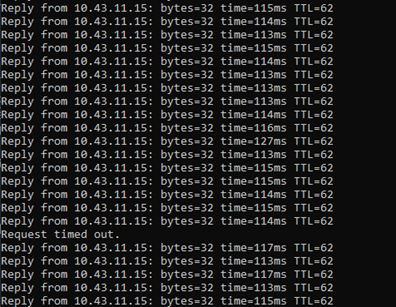

Figure 9. Ping results.

During the live migration, the VM with the 200 GB LUN from 3PAR was pinged.

Only one packet was lost, the same as with a regular VM on SDS; the VM remained reachable over the network and kept working.
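Packet loss during a migration or failover can be read straight from the summary line that Linux (iputils) `ping` prints at the end. The sketch below assumes that `N packets transmitted, M received, ...` summary format; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# lost_packets: read `ping` output on stdin and print transmitted - received
# from the summary line; exit with status 1 if no summary is found.
lost_packets() {
  awk '/packets transmitted/ { print $1 - $4; found = 1 }
       END { if (!found) exit 1 }'
}

# Example: about 60 s of pings at 0.2 s intervals while the VM is migrated
# from another terminal (replace <vm-ip> with the VM address):
#   ping -c 300 -i 0.2 <vm-ip> | lost_packets
```

Using a fixed count (`-c`) lets `ping` exit on its own and print the summary, so the result can be piped directly into the parser.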

Emergency shutdown tests

When the server hosting the VM with the 200 GB LUN from 3PAR was powered off, the HA service detected the missing node and restarted the VM on another node, selected by the default DRS algorithm (round robin); the VM then continued to work. Seven ping packets were lost. During failover, only the VM itself was started from its configuration file; the guest OS disk remained available through the attached LUN path.

We also tested backup: the VM configuration file was backed up successfully, and the VM restored from this copy started normally.

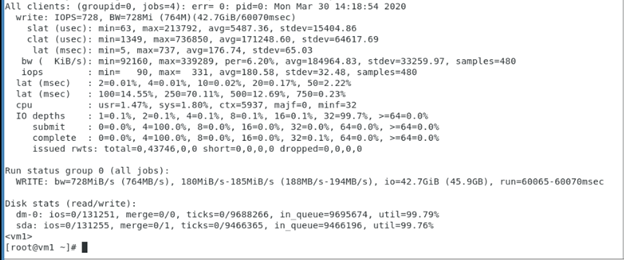

Sequential write tests in the VM with the 200 GB LUN from 3PAR show the performance of the LUN itself: Rosplatforma is not an intermediate layer here, so the results reflect only the guest OS and the 3PAR storage system.

fio job file used inside the VM with the 200 GB LUN from 3PAR:

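```ini
[seqwrite]
rw=write
size=4g
numjobs=4
bs=1m
ioengine=libaio
iodepth=32
; time_based is meant to be combined with runtime; uncomment runtime=60 for a fixed 60 s run
time_based
#runtime=60
direct=1
filename_format=__testfile'$jobnum'
thread
directory=/sdb/
```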

Tests with a clustered file system inside the guest OS

Figure 10. Clustered file system inside the guest OS.

We successfully tested one 200 GB LUN attached to two VMs, with the GFS2 cluster file system created on it inside the guest OS. During the test, one of the VMs (or its host) was powered off, after which the other VM continued to read and write files on this LUN. Below is a screenshot with the GFS2 settings inside the VM, along with the output of the pacemaker commands:

Next, Rosplatforma SDS over the LUN:

Figure 11. Configuration with Rosplatforma SDS deployed on a 3PAR LUN.

The same scenario (one 200 GB LUN attached to two VMs, with a cluster file system inside the guests) was also tested successfully, but this time the VMs were placed on a Rosplatforma SDS datastore built on a 3PAR LUN. During the test, one of the VMs (or its host) was powered off, after which the other VM continued to read and write files on the LUN.

Figure 12. Test with an SSD from the Synergy disk module added as a cache.

The same scenario was also tested successfully with the VMs on a Rosplatforma SDS datastore built on a 3PAR LUN, this time with the datastore accelerated by an SSD from the Synergy disk module in the cache role. Again, when one of the VMs (or its host) was powered off, the other VM continued to read and write files on the LUN.
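The exact GFS2 and pacemaker commands used inside the VMs are not listed here. For orientation, the hypothetical sketch below prints a typical CentOS 7 `pcs`/`mkfs.gfs2` sequence for a guest cluster sharing one LUN: one GFS2 journal per node that mounts the file system, a `dlm` clone, and a `Filesystem` clone ordered after it. The cluster name, file system name, device and mount point are assumptions. Fencing (STONITH), which GFS2 requires, is assumed to be configured separately. The commands are printed, not executed; the `pcs` and `mkfs.gfs2` lines are meant to be run once, on one node of the guest cluster.

```bash
#!/usr/bin/env bash
# gfs2_plan <cluster> <fsname> <device> <mountpoint> [nodes]
# Print (do not run) a typical CentOS 7 sequence for a GFS2 file system on a
# LUN shared by several guest VMs. All names are hypothetical.
gfs2_plan() {
  local cluster=$1 fsname=$2 device=$3 mnt=$4 nodes=${5:-2}

  # <cluster> must match the corosync cluster name (lock table cluster:fs);
  # -j creates one journal per node that will mount the file system.
  cat <<EOF
pcs property set no-quorum-policy=freeze
pcs resource create dlm ocf:pacemaker:controld op monitor interval=30s on-fail=fence clone interleave=true ordered=true
mkfs.gfs2 -p lock_dlm -t ${cluster}:${fsname} -j ${nodes} ${device}
pcs resource create clusterfs Filesystem device="${device}" directory="${mnt}" fstype="gfs2" options="noatime" op monitor interval=10s on-fail=fence clone interleave=true
pcs constraint order start dlm-clone then clusterfs-clone
pcs constraint colocation add clusterfs-clone with dlm-clone
EOF
}

# Example for the two-VM test:  gfs2_plan gcluster vmdata /dev/sdb /mnt/gfs2 2
```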

Cluster file system tests on Rosplatforma nodes

Figure 13. Test with VM configuration files on Rosplatforma SDS and a 200 GB LUN presented from 3PAR.

We tested shared storage from 3PAR for VM disk images on a cluster file system installed on the Rosplatforma nodes, with the configuration files of these VMs on Rosplatforma SDS. During the test, one of the hosts was powered off; the VMs were then expected to keep working with their disk images on the two remaining hosts, thanks to replica 3 on the SDS cluster for the VM configuration files and three cluster file system journals for the VM disk images.
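Why two remaining hosts are enough can be checked with majority-quorum arithmetic. The sketch below assumes corosync's default votequorum behaviour (one vote per node, a partition is quorate when it holds more than half of all votes); it illustrates the rule and is not taken from Rosplatforma's code.

```bash
#!/usr/bin/env bash
# Majority quorum with one vote per node.
quorum() {              # minimum number of votes needed for quorum
  echo $(( $1 / 2 + 1 ))
}
tolerated_failures() {  # nodes that may fail while the rest stay quorate
  echo $(( $1 - $1 / 2 - 1 ))
}

for n in 2 3 5; do
  echo "nodes=$n quorum=$(quorum "$n") tolerated=$(tolerated_failures "$n")"
done
```

For three nodes this gives a quorum of two and tolerates one failed host, which matches the test. For two nodes plain majority tolerates no failure; two-node clusters such as the two GFS2 guests rely on corosync's `two_node` mode, which `pcs` typically enables when a two-node cluster is set up.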

Figure 14. SDS deployed on a LUN from 3PAR.

We tested the same setup (shared storage from 3PAR for VM disk images on a cluster file system installed on the Rosplatforma nodes, with the VM configuration files on Rosplatforma SDS), but this time the SDS itself was deployed on a LUN from 3PAR. During the test, one of the hosts was powered off; the VMs were then expected to keep working with their disk images on the two remaining hosts, thanks to replica 3 on the SDS cluster for the VM configuration files and three cluster file system journals for the VM disk images.

Figure 15. SDS tier0 on LUNs from 3PAR, SDS tier1 on disks from the Synergy module.

We tested the same setup again, with SDS tier0 deployed on LUNs from 3PAR and a second tier, tier1, on disks from the Synergy module. During the test, one of the hosts was powered off; the VMs were then expected to keep working with their disk images on the two remaining hosts, thanks to replica 3 on the SDS cluster for the VM configuration files and three cluster file system journals for the VM disk images.

However, we ran into problems with KVM/QEMU on GFS2: after a lengthy investigation, we found that the GFS2 lock manager failed, and because of this Rosplatforma could not start the VM on another host in the cluster. The issue remains open; the same applies to the configurations in Figures 13 and 14. A possible cause lies in how KVM/QEMU works with qcow2 and raw images, where writes are synchronous, and the GFS2 lock manager is limited for such operations.

Conclusion

Both options, SDS on 3PAR LUNs and presenting 3PAR LUNs directly to VMs, work and can be used in real infrastructure, but they have some drawbacks.

For example, with SDS on LUNs, performance will always be somewhat lower than with SDS on local disks. This can be improved with local SSDs in the cache role, but regular SDS will still generally be faster. One option is to place the SDS on the storage system in a separate tier. Another significant drawback is fault tolerance: in SDS every node acts as a controller, and a cluster starts at three nodes, whereas a storage system usually has two controllers per array. For SDS, the storage system is therefore a single point of failure. Despite these drawbacks, such scenarios are useful during a gradual migration from external storage to SDS, or to make use of an existing storage system. In addition, the storage system offers features such as deduplication and compression, which Rosplatforma SDS lacks because of its architectural approach but 3PAR provides; with the scenarios described above, they can be used at the storage level.

Presenting LUNs to VMs is also relevant for guest systems that run their own cluster software. When LUNs are presented to each VM individually, however, there is no automation across the life cycle of a large number of VMs. A cluster file system on the Rosplatforma nodes could provide that, but GFS2 with KVM/QEMU failed in this case: when GFS2 holds any other files, everything works, even in failure tests on Rosplatforma, but as soon as VM images are placed on it, the GFS2 lock manager cannot cope during failure tests. This problem may be resolved in the future.

The multipath scenarios above can also be useful for connecting tape libraries. Rosplatforma has a built-in backup system that can write copies to an R-Storage cluster folder or a local folder, but it cannot work with tape devices unless you write a script yourself. Alternatively, you can use external backup software such as RuBackup, which, in addition to supporting tape, can back up VMs with attached LUNs, which is important when integrating with storage systems.
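A self-written tape script can be quite small. The hypothetical sketch below only prints the commands that would append a backup directory to tape as one `tar` archive, using `mt` from the mt-st package; the backup directory is an assumed argument (the location of Rosplatforma's backup copies is not specified here), and `/dev/nst0` is the Linux non-rewinding device of the first SCSI tape drive.

```bash
#!/usr/bin/env bash
# tape_backup_plan <backup_dir> [tape_device]
# Print (dry run) the commands that append <backup_dir> to a tape drive as a
# single tar archive. Paths are hypothetical.
tape_backup_plan() {
  local src=$1 tape=${2:-/dev/nst0}
  if [[ ! -d $src ]]; then
    echo "backup directory not found: $src" >&2
    return 1
  fi
  # Move past the last recorded archive so earlier backups are kept.
  printf 'mt -f %q eod\n' "$tape"
  # Write the whole directory as one tar archive (one tape file).
  printf 'tar -cf %q -C %q .\n' "$tape" "$src"
  # Report drive status (file number, position) for the log.
  printf 'mt -f %q status\n' "$tape"
}

# Review first, then execute:  tape_backup_plan /path/to/backups | bash
```

Printing the plan before running it makes it easy to check the target device, since writing to the wrong tape position overwrites earlier archives.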