Hundreds of new games are released every year. Some are successful and sell millions of copies, but that alone does not guarantee legendary status. Only rarely does a game become part of the industry's history, one that we keep discussing and playing years after release.

For PC players, there is one game that has become almost legendary thanks to its incredible, ahead-of-its-time graphics and its ability to drag frame rates down to single digits. It later became famous as a reliable source of memes for more than ten years. In this article, we'll explore Crysis and what made it so unique.

The early days of Crytek

Before diving into the history of Crysis, it's worth taking a quick trip back in time to see how Crytek came about. The software development company was founded in autumn 1999 in Coburg, Germany, by three brothers: Avni, Cevat and Faruk Yerli. United under Cevat's leadership, the brothers began creating demos for PC.

The company's most important early project was X-Isle: Dinosaur Island. The studio promoted this and other demos at the 1999 E3 show in Los Angeles. One of the many companies the Yerli brothers pitched their software to was Nvidia, which was particularly impressed with X-Isle. And for good reason: the demo's graphics were amazing for the time. Enormous draw distances, combined with beautiful lighting and realistic surfaces, made a striking impression.

For Nvidia, the Crytek demo was an excellent tool for promoting its upcoming line of GeForce graphics cards, so the companies struck a deal: when the GeForce 3 hit the market in February 2001, Nvidia used X-Isle to showcase the card's capabilities.

With the much-needed income from the success of X-Isle, Crytek could begin building a full game. Its first project, Engalus, was promoted throughout 2000, but the company eventually abandoned it. The reasons are not entirely clear, but thanks to the recognition gained from the Nvidia deal, Crytek soon landed another contract with a major company: the French publisher Ubisoft.

The terms were to create a complete AAA-level game based on X-Isle. The result was Far Cry, released in 2004.

It's not hard to see how the technology of X-Isle found its way into Far Cry. Once again, we were presented with vast landscapes full of lush vegetation, rendered with cutting-edge graphics. While the game was not a huge hit with critics, Far Cry sold quite well, and Ubisoft saw tremendous potential in the brand and in the game engine Crytek had created.

Another contract was signed, under which Ubisoft retained full intellectual property rights to Far Cry and received a perpetual license to Crytek's engine, CryEngine. Over the years, Ubisoft evolved it into its own Dunia engine, used in games such as Assassin's Creed II and the next three installments of the Far Cry series.

By 2006, Crytek had come under the wing of Electronic Arts and moved its offices to Frankfurt. Development of CryEngine continued, and a new game was announced: Crysis. From the outset, Crytek wanted the game to be a showcase for the company's technology; it worked with Nvidia and Microsoft to push the limits of what DirectX 9 hardware could do. Later the game would also be used to advertise DirectX 10 features, but that came quite late in the project's development.

2007: the golden year of video games

Crysis was slated for release in late 2007, a year that proved to be great for gaming, especially in terms of graphics. The stream of hits arguably began a year earlier, when The Elder Scrolls IV: Oblivion set the bar for how an open-world 3D game should look (character models aside) and for the level of interaction it could offer.

For all its graphical splendor, Oblivion showed signs of the constraints of developing for multiple platforms, namely the PCs and consoles of the time. The Xbox 360 and PlayStation 3 were complex machines whose architecture differed considerably from Windows systems. Microsoft's Xbox 360 had a CPU and GPU unique to the console: an IBM triple-core PowerPC chip and an ATI graphics chip that boasted a unified shader architecture.

The Sony console contained a fairly conventional Nvidia GPU (a modified GeForce 7800 GTX), but the CPU was anything but standard. The Cell processor, jointly developed by Toshiba, IBM and Sony, had a PowerPC core but also contained 8 vector coprocessors.

The board for the first version of the PlayStation 3. Image: Wikipedia

Because each console had its own unique strengths and quirks, making games that would work well on both consoles and the PC was a huge challenge. Most developers targeted the "lowest common denominator", which meant designing around the relatively small amount of system and video memory and the most basic graphics features of the systems.

The Xbox 360 had only 512 MB of shared GDDR3 memory plus an additional 10 MB of embedded DRAM for the graphics chip, while the PS3 had 256 MB of XDR DRAM system memory and 256 MB of GDDR3 for the Nvidia GPU. By mid-2007, however, the most powerful PCs could already be equipped with 2 GB of DDR2 system RAM and 768 MB of GDDR3 on the graphics card.

The console versions of these games had fixed graphics settings, while the PC versions offered plenty of flexibility. At maximum settings, even the most powerful computers of the time sometimes struggled with the load. This was because the games were designed to run at the consoles' top resolutions (720p, or 1080p with upscaling) at 30 frames per second, so although PC components were better, running with more detailed graphics and at higher resolutions significantly increased the load.
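
To get a feel for how quickly resolution alone raises the load, here is a minimal sketch that compares pixel counts per frame. The PC resolutions in it are illustrative assumptions, not figures from this article, and pixel count is only a rough proxy for GPU work.

```python
# Pixel counts for a console target versus some PC resolutions.
# The PC entries are assumed examples, chosen only for illustration.
RESOLUTIONS = {
    "720p (console target)": (1280, 720),
    "1680x1050 (PC)": (1680, 1050),
    "1920x1200 (PC)": (1920, 1200),
}


def pixel_count(width, height):
    """Number of pixels shaded per frame, ignoring overdraw."""
    return width * height


def relative_load(name, baseline="720p (console target)"):
    """Pixel count of `name` relative to the baseline resolution."""
    return pixel_count(*RESOLUTIONS[name]) / pixel_count(*RESOLUTIONS[baseline])


if __name__ == "__main__":
    for name, size in RESOLUTIONS.items():
        print(f"{name}: {pixel_count(*size):,} pixels, "
              f"{relative_load(name):.2f}x the 720p pixel count")
```

Under these assumptions, a 1920x1200 monitor asks the GPU to fill 2.5 times as many pixels as a 720p console frame, before any extra detail settings are switched on.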

The first aspect of these 3D games to be limited by console memory was texture size, so lower-resolution textures were used wherever possible. The number of polygons used to build environments and models was also quite low.

If we look at another scene from Oblivion in wireframe mode (no textures), we will see that the trees are created from a maximum of several hundred triangles. Leaves and grass are just textures with transparent areas that give the impression that each blade of grass is a separate object.

Models of characters and static objects, such as buildings and rocks, are built from more polygons, but in general more attention was paid to the design and lighting of scenes than to geometry and texture complexity. The developers were not striving for realism: Oblivion takes place in a fantasy world with an almost cartoon-like look, so the lack of sophisticated rendering is forgivable.

However, a similar situation can be seen in one of the biggest games of 2007: Call of Duty 4: Modern Warfare. Set in the modern real world, the game was developed by Infinity Ward and published by Activision, using an engine originally based on id Tech 3 (Quake III Arena). The developers adapted and heavily modified it so that it could render all the graphics features popular at the time: bloom, HDR, self-shadowing, dynamic lighting and shadowing.

But as you can see in the image above, texture quality and polygon counts remained quite low, again due to the limitations of the consoles of that era. Objects that are constantly in view (for example, the weapon in the player's hands) are well detailed, but the static environment is quite simple.

All of these objects were artfully designed to look as realistic as possible, so the clever use of light and shadow, along with subtle particle effects and fluid animations, magically enhanced the scenes. But just like Oblivion, Modern Warfare on PC was still very demanding at maximum settings, and a multi-core CPU made a big difference.

BioShock was another multi-platform hit in 2007. The visual design of this game, set in an underwater city in an alternate-reality 1960, was a key element of its storytelling and character development. This time, a modified version of Epic's Unreal Engine was used, and like the games mentioned above, BioShock implemented a full set of graphics technologies.

That doesn't mean the developers didn't cut corners where they could. Look at the character's hand in the image above: the edges of the polygons used in the model are easy to see. The lighting gradient across the skin is also quite abrupt, which points to a rather low polygon count.

As with Oblivion and Modern Warfare, the multi-platform nature of the game meant low texture resolution. Painstaking work on lighting and shadows, particle effects for water, and exquisite art design raised the overall graphical quality. The same pattern was repeated in other games developed for PC and consoles: Mass Effect, Half-Life 2: Episode Two, Soldier of Fortune: Payback, and Assassin's Creed all took a similar approach to graphics.

Crysis, on the other hand, was intended to be a PC-only game and had the potential to be free of the hardware and software limitations of consoles. Crytek had already proven with Far Cry that it knew how to make the most of graphics and computing power... but if PCs were having trouble running new console-oriented games at maximum settings, why would Crysis fare any better?

All at once

At the annual SIGGRAPH graphics technology conference in 2007, Crytek published a paper describing the research and development the company had undertaken to create CryEngine 2, the successor to the engine that powered Far Cry. In this document, the company outlined the goals the engine was meant to achieve:

- Cinematic-quality rendering

- HDR rendering

- Support for multi-core CPUs and GPUs

- A 21 km x 21 km playable area

In Crysis, the first game developed with CryEngine 2, the developers achieved all but one goal: the playable area had to be reduced to just 4 km x 4 km. That might still seem like a lot, but Oblivion's game world was 2.5 times larger in area. Even so, it's worth looking at what Crytek achieved on that smaller scale.
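
A quick back-of-the-envelope sketch puts those map sizes side by side. It uses only the figures quoted above; the Oblivion area is derived from the "2.5 times larger" ratio rather than measured, and the variable names are ours.

```python
def area_km2(side_km):
    """Area of a square map with the given side length, in square kilometres."""
    return side_km * side_km


planned = area_km2(21)       # the engine's stated goal
shipped = area_km2(4)        # the playable area Crysis ended up with
oblivion = 2.5 * shipped     # derived from the ratio quoted in the text

if __name__ == "__main__":
    print(f"Planned: {planned} km^2, shipped: {shipped} km^2")
    print(f"The shipped map is {shipped / planned:.1%} of the original goal")
    print(f"Oblivion (derived from the 2.5x ratio): {oblivion} km^2")
```

In other words, the shipped map covers 16 of the planned 441 square kilometres, which shows just how ambitious the original target was.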

The game begins on the coast of a jungle-covered island near the Philippines. It has been invaded by North Korean troops, and a team of American archaeologists excavating on the island has called for help. You and your team, equipped with special nanosuits (which grant super strength, speed and short-term invisibility), are sent in to scout the situation.

The first few levels easily demonstrate that Crytek's claims were no exaggeration. The sea is a complex mesh of real 3D waves that interact with objects in the water: there are underwater light rays, reflection and refraction, caustics and light scattering, and waves rolling onto the shore. It all still looks good by modern standards, although it's surprising that, after all the effort Crytek put into the look of the water, almost none of the single-player action takes place in it.

You can look up at the sky and think how right it looks. That is as it should be, because the game engine solves a light scattering equation on the CPU, taking into account the time of day, the position of the Sun and other atmospheric conditions. The results are stored in a floating-point texture, which the GPU then uses to render the skybox (the dome of the sky).
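
The general idea of precomputing sky values on the CPU and handing them to the GPU as a table can be sketched as follows. This is not Crytek's scattering model: it uses only the textbook Rayleigh phase function as a stand-in, and the function names, table layout and sizes are our own assumptions.

```python
import math


def rayleigh_phase(cos_theta):
    """Rayleigh phase function: relative scattering at angle theta to the sun."""
    return 3.0 / (16.0 * math.pi) * (1.0 + cos_theta * cos_theta)


def build_sky_table(sun_elevation_deg, size=8):
    """Precompute a size x size table of floats, one per view direction.

    Rows index view elevation (0 to 90 degrees), columns index azimuth
    relative to the sun (0 to 180 degrees). A real engine would upload such
    a table as a floating-point texture; here it is a plain list of lists.
    """
    sun_el = math.radians(sun_elevation_deg)
    sun = (math.cos(sun_el), 0.0, math.sin(sun_el))  # unit vector to the sun
    table = []
    for row in range(size):
        el = math.radians(90.0 * row / (size - 1))
        line = []
        for col in range(size):
            az = math.radians(180.0 * col / (size - 1))
            view = (math.cos(el) * math.cos(az),
                    math.cos(el) * math.sin(az),
                    math.sin(el))
            cos_theta = sum(v * s for v, s in zip(view, sun))
            line.append(rayleigh_phase(cos_theta))
        table.append(line)
    return table


if __name__ == "__main__":
    sky = build_sky_table(sun_elevation_deg=30.0)
    print(f"{len(sky)}x{len(sky[0])} table, value towards horizon/sun azimuth: {sky[0][0]:.4f}")
```

The point of the design is that the expensive part runs only when the sun or atmosphere changes, while the per-pixel work on the GPU is reduced to a texture lookup.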

The clouds we see in the distance are "real", that is, they are not just part of the skybox or overlaid textures. They are collections of small particles, almost like real clouds, and a process called ray marching is used to work out where the volume of particles sits in the 3D world. And since the clouds are dynamic objects, they cast shadows on the scene (although you can't see that here).
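
For readers unfamiliar with ray marching, here is a minimal sketch of the technique: step along a ray in small increments, sample the density of the volume at each step, and accumulate how much light is blocked. The spherical density field and all parameter values are hypothetical, and this illustrates the general method rather than CryEngine 2's implementation.

```python
import math


def cloud_density(x, y, z):
    """Hypothetical density field: a soft spherical cloud of radius 1 at the origin."""
    r = math.sqrt(x * x + y * y + z * z)
    return max(0.0, 1.0 - r)


def ray_march(origin, direction, steps=64, step_size=0.1, absorption=1.0):
    """Step along a ray, attenuating light with the Beer-Lambert law.

    `direction` is assumed to be a unit vector. Returns the fraction of
    light blocked by the volume (0 = clear sky, 1 = fully opaque).
    """
    transmittance = 1.0
    for i in range(steps):
        t = (i + 0.5) * step_size  # sample at the midpoint of each step
        point = [o + d * t for o, d in zip(origin, direction)]
        density = cloud_density(*point)
        transmittance *= math.exp(-absorption * density * step_size)
    return 1.0 - transmittance


if __name__ == "__main__":
    through = ray_march((-3.0, 0.0, 0.0), (1.0, 0.0, 0.0))
    missing = ray_march((-3.0, 2.0, 0.0), (1.0, 0.0, 0.0))
    print(f"Ray through the cloud centre: {through:.3f} opacity")
    print(f"Ray that misses the cloud:    {missing:.3f} opacity")
```

The cost is easy to see from the loop: every pixel that looks at a cloud pays for dozens of density samples, which is one reason volumetric effects were so demanding in 2007.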

Progressing through the first levels provides further evidence of CryEngine 2's capabilities. In the screenshot above, we see a richly detailed environment: direct and indirect lighting is dynamic, and all the trees and bushes cast shadows on themselves and on other vegetation. Branches move as you push through them, and small tree trunks can be shot down so they don't block your view.

Speaking of shooting things: Crysis lets us destroy a lot of objects, and vehicles and buildings can be blown to pieces. Is the enemy hiding behind a wall? Don't bother shooting through it like you did in Modern Warfare, just take the wall out entirely.

As in Oblivion, almost any item can be picked up and thrown, although it's a shame Crytek didn't take this feature further. It would have been great to pick up a jeep and hurl it into a crowd of soldiers; the most we can do is punch it a little and hope it moves far enough to do some damage. Still, in what other game can you kill an enemy with a chicken or a turtle?

Even today, these scenes are very impressive, and back in 2007 they were a huge leap forward in rendering technology. But for all its innovations, Crysis can be considered a fairly standard game, because the epic mountain views and beautiful locations hid linear and predictable gameplay.

For example, vehicle-only levels were popular in those days, so it's not surprising that Crysis followed the fashion. Some time after the successful invasion of the island, you are tasked with driving a stylish tank, but it is all over very quickly. Perhaps that is for the best, because the controls are clumsy and the player can finish the level without even taking part in the battle.

There are probably not many people who haven't played Crysis or don't know the general outline of its plot, so we won't go into the details. Suffice it to say that sooner or later the player ends up deep underground, in a mysterious cave system. It is quite beautiful, but often confusing and claustrophobic.

It is a good showcase for the engine's volumetric lighting and fog systems, which also use ray marching. The result is not perfect (there is too much blur around the edges of the weapon), but the engine handles a large number of directional lights with ease.

After getting out of the cave system, the player heads to a frozen part of the island, with intense battles and another gameplay staple: an escort mission. The developers at Crytek redesigned the snow and ice rendering system four times, barely making the deadline.

The end result is very beautiful: the snow is applied as a separate layer on top of surfaces, and frozen water particles glitter in the light. Still, the graphics are slightly less effective than in the earlier coast and jungle scenes. Take a look at the large boulder on the left side of the image: there is not much texture detail there.

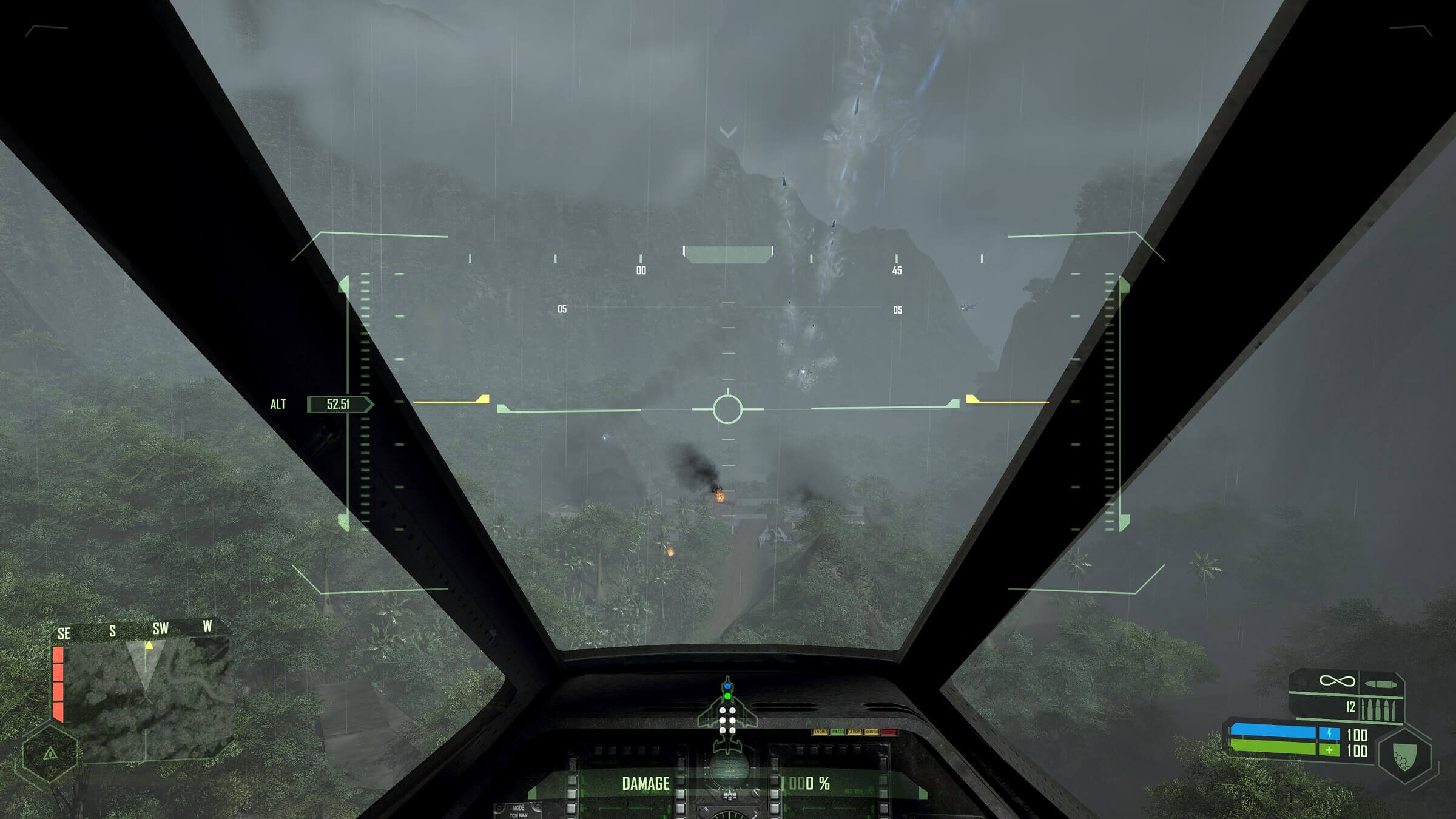

Later, we return to the jungle and, unfortunately, to another vehicle level. This time, a small dropship (clearly inspired by "Aliens") needs a replacement pilot, and of course that will be you (is there anything our soldiers can't do?). Once again, the gameplay consists of a brief struggle with an awkward control system and a chaotic firefight that you don't even have to take part in.

Curiously, when Crytek released the console version of Crysis four years later, this entire level was cut. The heavy use of particle-based cloud systems, complex geometry and long draw distances was probably too much for the Xbox 360 and PS3.

The game concludes with a very intense battle aboard an aircraft carrier, complete with an obligatory "I'm not dead yet" boss fight. Some of the graphics in this part of the game are very impressive, but the level design doesn't measure up to that of the earlier stages.

Overall, Crysis was fun to play, but the plot was not very original. In many ways, the game was a spiritual successor to Far Cry in its setting, its choice of weapons and its plot twists. Even the much-advertised nanosuit was not used to its full potential, because its abilities were rarely needed. Apart from a couple of places where the strength mode was required, the standard shield mode was quite enough to get through the game.

The game was stunningly beautiful, the most beautiful release of 2007 (and many years after), but Modern Warfare had much better multiplayer, BioShock had a richer storyline, and Oblivion and Mass Effect provided much more depth and replayability.

But, of course, we all know exactly why we are still talking about Crysis 13 years later: it all comes down to memes...

All those great graphics came at the price of steep hardware requirements, and running the game at maximum settings seemed out of reach even for the most powerful PCs of the day. In fact, that feeling persisted year after year: new CPUs and GPUs appeared, but none of them could run Crysis as smoothly as they ran other games.

Yes, the graphics may be pleasing, but the low frame rate, which never seemed to increase, was definitely upsetting.

Why is everything so serious?

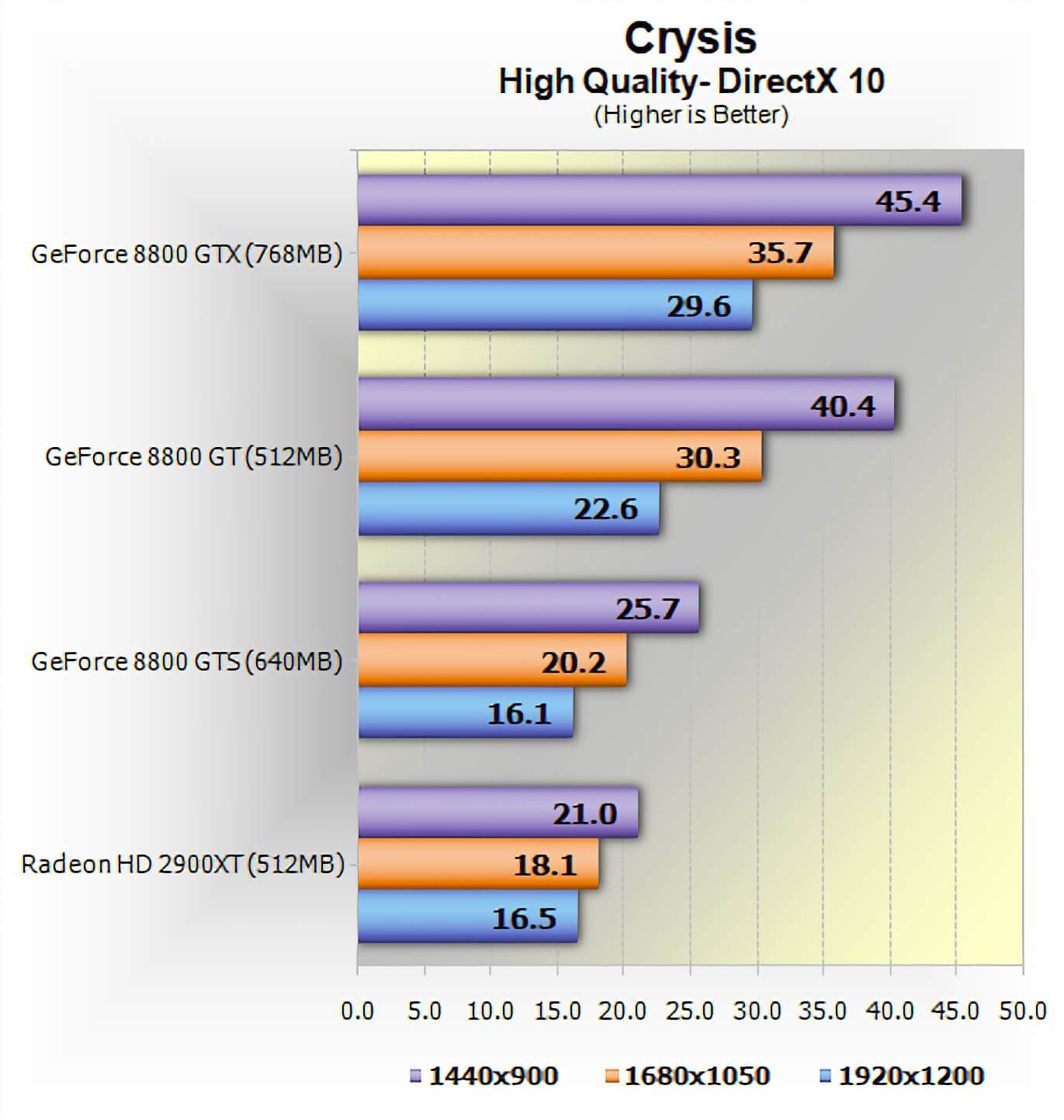

Crysis was released in November 2007, but a single-level demo and the map editor were made available a month earlier. Our site was already benchmarking PC hardware and games, so we tested the performance of the demo (and later the 1.1 patch with SLI/CrossFire). We found that the game ran pretty well... but only on the best graphics cards of the time and at medium graphics settings. As soon as we switched to high quality, mid-range GPUs immediately ran out of steam.

Worse, the much-advertised DirectX 10 mode and anti-aliasing did little to inspire confidence in the game's potential. Early previews in the press had already reported frame rates that were far from ideal, but Crytek promised that further improvements and updates would follow.

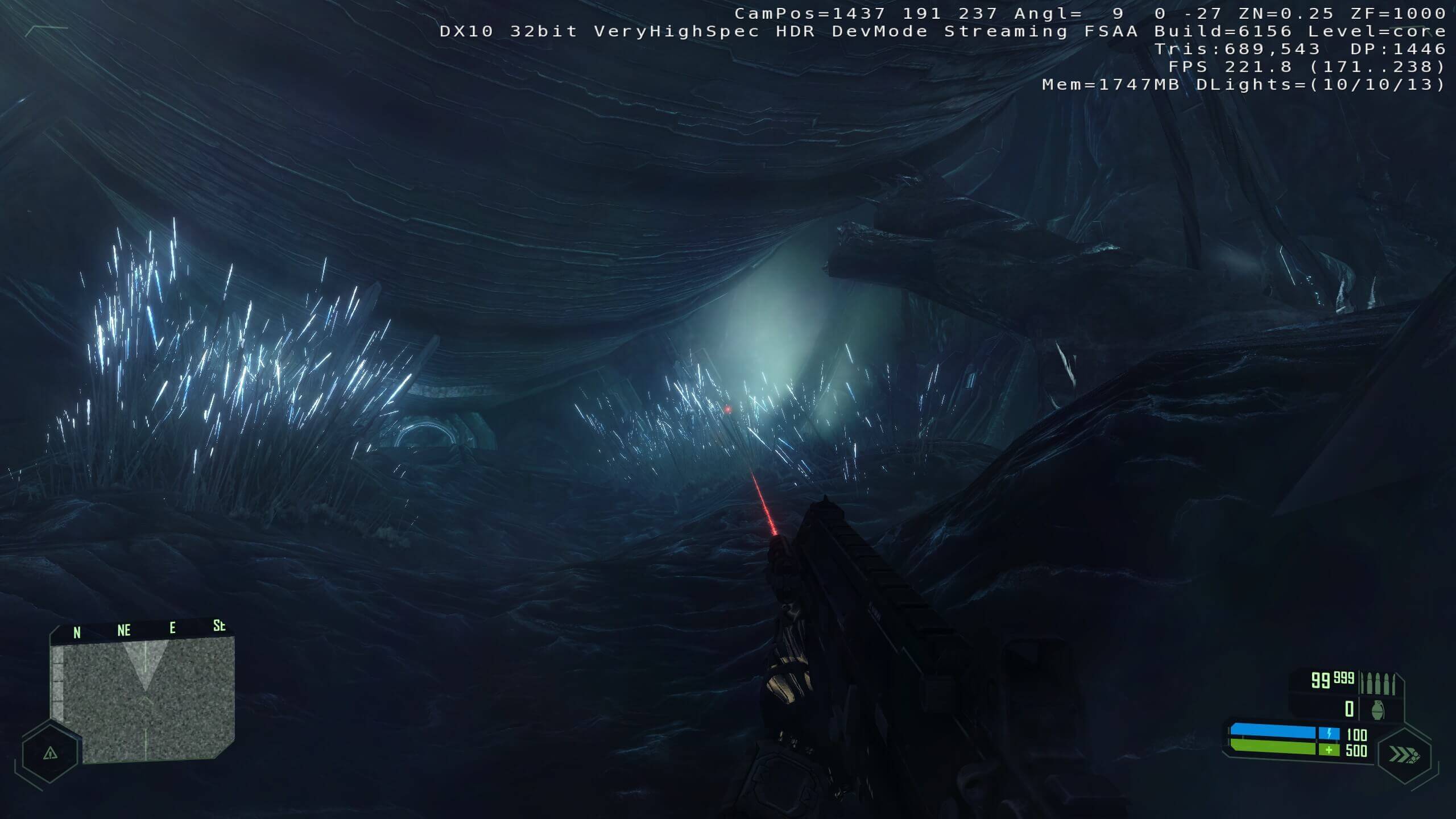

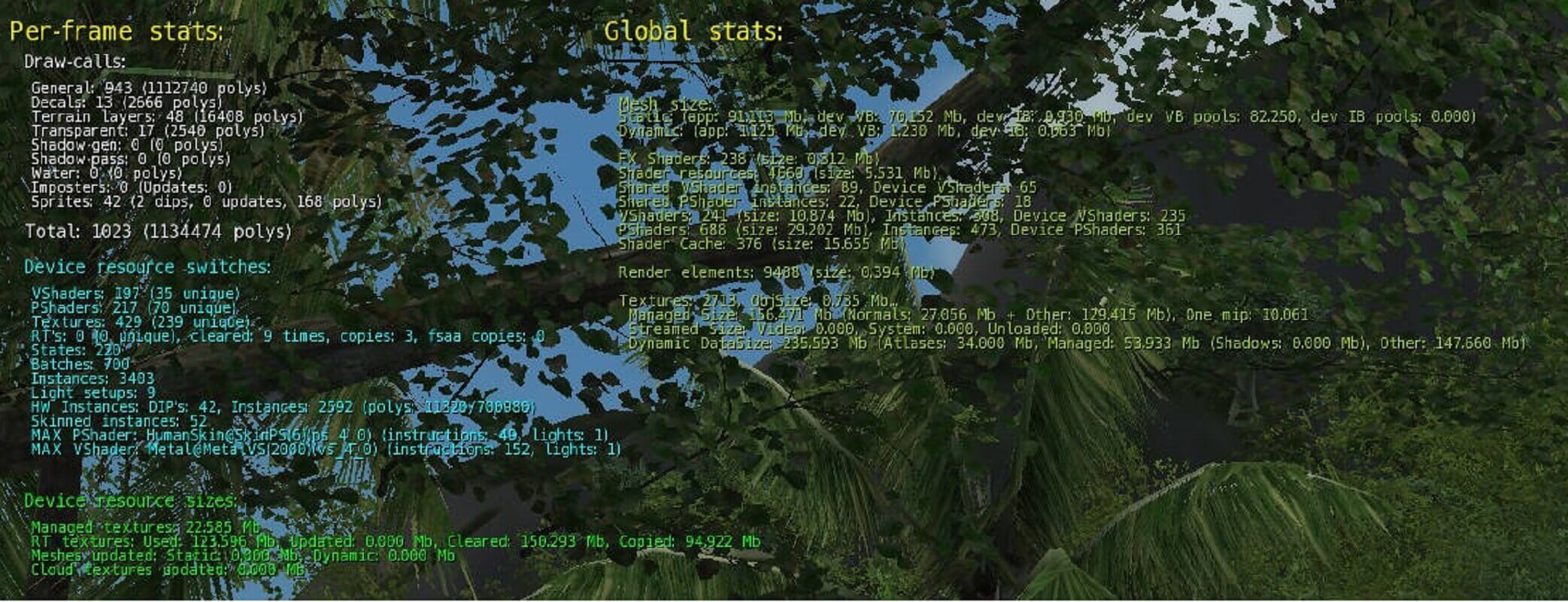

So why was the game so demanding on the hardware? We can get an idea of what's going on inside the game by running it with devmode enabled. This mode provides access to the console, which allows you to enable all kinds of settings and cheats, as well as display an overlay with rendering information.

In the screenshot above, taken almost at the start of the game, we can see the average frame rate and the amount of system memory the game is using. The Tris, DP, and DLights values show the number of triangles per frame, the number of draw calls per frame, and the number of directional (and dynamic) lights.

First, let's talk about polygons: there are 2.2 million of them in this frame. That is how many are used to build the scene, although not all of them end up on screen (those that are not visible are culled early in the rendering process). The bulk of them are in the vegetation, because Crytek chose to model individual leaves on the trees rather than use simple textures with transparency.

Even in relatively simple environments, a huge number of polygons are used (compared to other games of the time). Not much is visible in this later-game image because it is dark and underground, but there are still around 700k polygons here.

Compare also the number of directional light sources: the open-air jungle scene has only one (the Sun), but this environment has 10. That may not sound like many, but each dynamic light source casts active shadows. Crytek used a mix of techniques to implement them, and although all were state of the art for the time (cascaded and variance shadow maps, with jittered sampling to smooth the edges), they added a serious load to vertex processing.

The DP value tells us how many times a particular material or visual effect is "called" for objects in the frame. The console command r_stats is useful here: it shows a detailed breakdown of the draw calls, as well as the shaders used, details of the geometry processing, and the texture load in the scene.

Let's go back to the jungle scene again and see what was used to create this image.

We ran the game in 4K to get the best possible screenshots, so the statistics are hard to read. Let's zoom in.

In this scene, over 4,000 polygon groups (batches), covering 2.5 million environment triangles, are submitted in order to apply 1,932 textures to them (although most of these are repeated, for example across objects such as trees and the ground). The largest vertex shader, which prepares the motion blur, consists of 234 instructions, and the bump mapping pixel shader consists of 170 instructions.

For a 2007 game, that number of calls is extremely high, because the overhead in DirectX 9 (and, to a lesser extent, DirectX 10) to make every call wasn't all that trivial. Soon after Crysis, some AAA-level console games would have that many polygons, but their graphics software was much more specialized than DirectX.
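
To see why the number of calls matters, here is a rough sketch of how per-call CPU overhead caps the frame rate regardless of GPU speed. The per-call cost used below is a purely hypothetical placeholder, not a measurement of DirectX 9 or of Crysis, and the function names are ours.

```python
def cpu_submit_time_ms(draw_calls, cost_per_call_us):
    """CPU time spent just issuing draw calls for one frame, in milliseconds."""
    return draw_calls * cost_per_call_us / 1000.0


def max_fps_from_submission(draw_calls, cost_per_call_us):
    """Upper bound on frame rate if draw call submission were the only CPU work."""
    return 1000.0 / cpu_submit_time_ms(draw_calls, cost_per_call_us)


if __name__ == "__main__":
    ASSUMED_COST_US = 10  # hypothetical cost per draw call, in microseconds
    for calls in (1000, 2000, 4000):
        ms = cpu_submit_time_ms(calls, ASSUMED_COST_US)
        fps = max_fps_from_submission(calls, ASSUMED_COST_US)
        print(f"{calls} calls: {ms:.0f} ms per frame, at most {fps:.0f} fps")
```

Whatever the real per-call cost was on a given system, the relationship is linear: doubling the number of draw calls doubles the CPU time spent before the GPU has rendered a single pixel.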

We can examine this with the Batch Size Test from the 3DMark06 package. This DirectX 9 tool draws a series of moving, color-changing rectangles on the screen, increasing the number of triangles per group in each successive run. Tested on a GeForce RTX 2080 Super, the results show a noticeable difference in performance across the different group sizes.

We cannot easily determine how many triangles are processed in each group, but if the figure shown accounts for all the polygons used, there are on average only about 600 triangles per group, which is too few. The problem is probably offset by heavy use of instancing (that is, an object can be drawn several times in a single call).
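
The "about 600" figure is simple division on the numbers reported by the statistics overlay for the jungle scene. A minimal sketch, assuming the counter really does cover all polygons in the frame:

```python
def triangles_per_group(total_triangles, groups):
    """Average number of triangles submitted per polygon group (batch)."""
    return total_triangles / groups


if __name__ == "__main__":
    # Figures quoted for the jungle scene: 2.5 million triangles, just over 4,000 groups.
    average = triangles_per_group(2_500_000, 4_000)
    print(f"Average triangles per group: {average:.0f}")
```

With exactly 4,000 groups the average comes out at 625; since the scene uses slightly more than 4,000, the real figure is a little lower, hence "about 600".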

Some levels of Crysis use even more draw calls; the aircraft carrier battle is a good example. In the image below, we can see that while there are fewer triangles than in the jungle scene, the sheer number of moving, textured, and individually lit objects makes the CPU and the graphics API/driver the bottleneck in rendering performance.

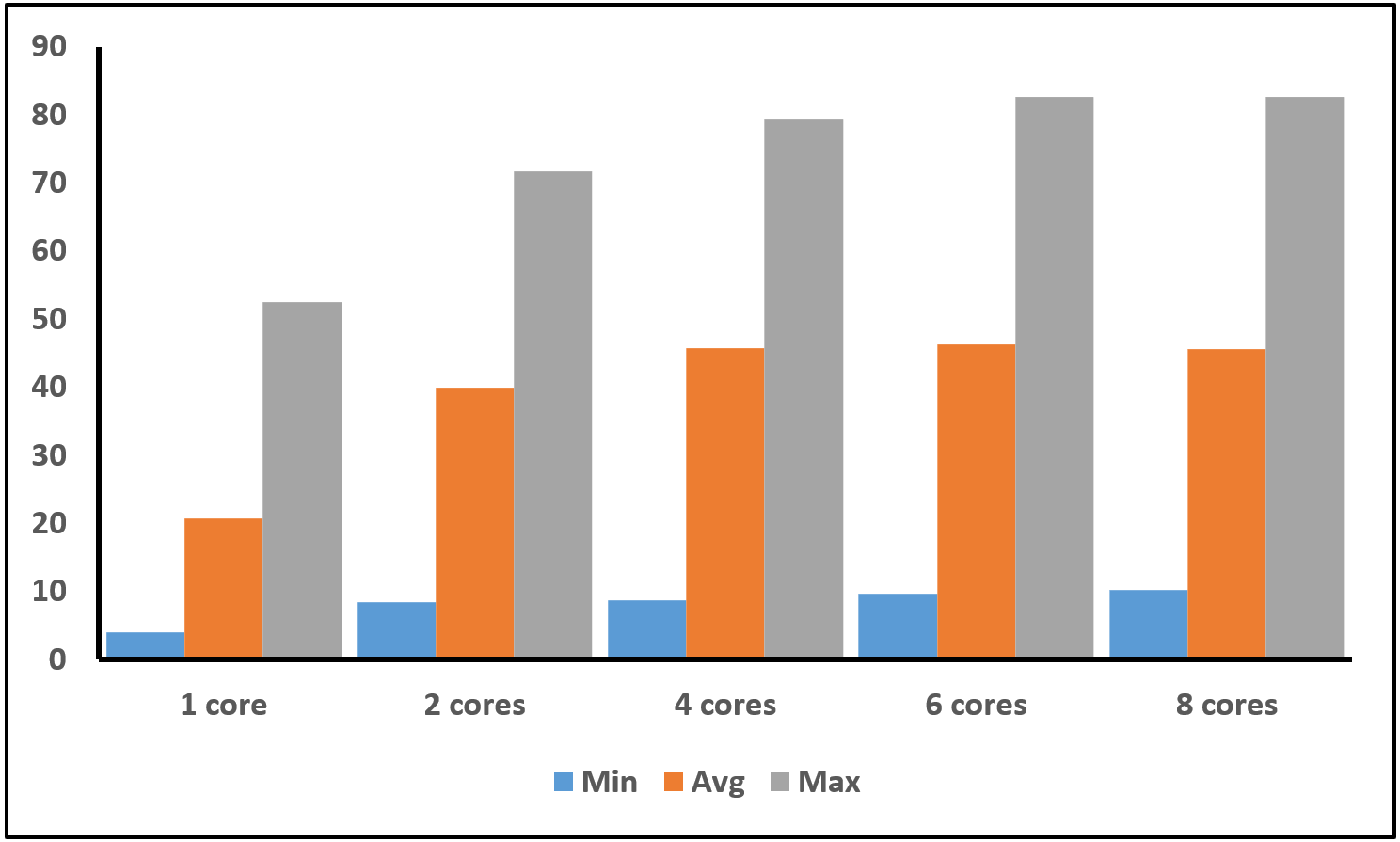

Crysis has been criticized by many for its poor optimization for multi-core CPUs. Before the game was released, most powerful gaming PCs were equipped with dual-core processors; AMD started selling its quad-core Phenom X4 line at around the same time as the game's release, and Intel had quad-core CPUs in early 2007.

Did Crytek really overlook this? We ran the standard CPU2 timedemo on a modern Intel Core i7-9700, which has 8 cores and supports up to 8 threads. There seemed to be some truth to the accusations: while overall performance was not too bad for a 4K test, frame rates dropped below 25 fps quite often, and at times into single digits.

However, if you run the tests again, but this time limit the number of available cores in the motherboard BIOS, you can see the other side of the story.

There is a noticeable increase in performance when moving from one core to two, and another (albeit much smaller) increase when moving from two to four. Beyond that there are no noticeable differences, but the game definitely makes use of multiple cores. With quad-core CPUs becoming the norm after the game was released, Crytek had no real need to optimize further.
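
This pattern of diminishing returns is what Amdahl's law predicts when only part of the work per frame can run in parallel. The sketch below illustrates the shape of the curve; the parallel fraction is an assumed value chosen for illustration, not something measured from Crysis.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Theoretical speedup over one core when only part of the work scales."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    ASSUMED_PARALLEL_FRACTION = 0.5  # hypothetical: half the frame work scales
    for cores in (1, 2, 4, 8):
        speedup = amdahl_speedup(ASSUMED_PARALLEL_FRACTION, cores)
        print(f"{cores} core(s): {speedup:.2f}x")
```

With any fixed serial portion, each doubling of the core count buys less than the one before, and the speedup can never exceed one divided by the serial fraction.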

In DirectX 9 and 10, graphics commands are usually issued from a single thread (because performance degrades severely otherwise). This means that with the large number of draw calls seen in many scenes, only one or two cores are heavily loaded, since neither version of the graphics API lets multiple threads queue up what needs to be displayed.

That is, it's not that Crysis doesn't properly support multithreading: it clearly does, within the limitations of DirectX. Rather, it is the design decisions behind the rendering (for example, accurately modeled trees and dynamic, destructible environments) that are to blame for the drops in performance. Remember the screenshot of the cave level? Note that the DP figure was below 2,000, and then look at the FPS counter.

What else is so demanding other than draw calls and API issues? In fact, almost everything. Let's go back to the scene where we explained the meaning of the draw calls, but this time we will switch all graphics options to the minimum settings.

The devmode information shows the obvious: at minimum settings, the number of polygons is halved and the number of draw calls drops to almost a quarter. But note also the absence of shadows, ambient occlusion and attractive lighting.

The weirdest change, however, is the smaller field of view - this simple trick reduces the amount of geometry and pixels that need to be rendered in a frame, because anything that doesn't get into the viewport is clipped early in the process. Going further into the statistics sheds even more light on the situation.
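
A little trigonometry shows why narrowing the field of view cuts the amount of geometry in the frame. The sketch below compares the cross-section of the view frustum at two FOV values; both values are hypothetical examples, since we don't know the exact FOVs the game uses at each quality level.

```python
import math


def visible_width(fov_deg, distance):
    """Width of the view frustum at a given distance for a horizontal FOV."""
    return 2.0 * distance * math.tan(math.radians(fov_deg) / 2.0)


def relative_view_area(fov_deg, reference_fov_deg):
    """Frustum cross-section area relative to a reference FOV (fixed aspect ratio)."""
    ratio = visible_width(fov_deg, 1.0) / visible_width(reference_fov_deg, 1.0)
    return ratio * ratio


if __name__ == "__main__":
    NARROW_FOV, WIDE_FOV = 60.0, 75.0  # assumed values, for illustration only
    share = relative_view_area(NARROW_FOV, WIDE_FOV)
    print(f"A {NARROW_FOV:.0f} degree view covers {share:.0%} of the area "
          f"seen at {WIDE_FOV:.0f} degrees")
```

Because the visible area grows with the square of the tangent of half the angle, even a modest reduction in FOV removes a sizeable share of the scene from the frustum, assuming objects are spread fairly evenly.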

Here you can see how much lighter the shader load has become: the largest pixel shader contains only 40 instructions (previously it was 170). The largest vertex shader is still fairly big at 152 instructions, but it is only used for surfaces with metallic reflections.

Cycling quality settings - Low, Medium, High, and Very High

As the graphics settings are raised, applying shadows significantly increases the total number of polygons and draw calls, and at the High and Very High quality levels the entire lighting model switches to HDR (high dynamic range), with fully volumetric fog and lighting. Motion blur and depth of field are also used heavily (motion blur is especially expensive, involving several passes, large shaders, and multiple samples).

Does this mean that Crytek just stuffed every possible rendering technique into the engine without thinking about performance? Is that why Crysis deserves all the jokes made at its expense? While the developers were targeting the best CPUs and graphics cards of 2007, it would be unfair to say that the company didn't care how well the game performed.

If you unpack the shaders from the game files, you can see a lot of comments in the code, many of which concern computational cost in terms of instructions and ALU cycles. Other comments describe how specific shaders are used to improve performance and when they should be preferred over others for speed.

Generally, game developers do not design games to keep pace with technology as it changes over time. Once one project is complete, they move on to the next and apply the lessons of the past to new games. For example, the first Mass Effect was quite demanding on hardware and is rather slow in 4K even today, but its sequels run quite well.

Crysis was designed to be as beautiful as possible while still being playable, bearing in mind that console games typically ran at 30 fps or less. When the time came to develop the inevitable sequels, Crytek apparently decided to scale things back, and games like Crysis 3 perform noticeably better than the first installment. It's another pretty game, but it doesn't have the wow factor of its predecessor.

One more attempt

In April 2020, Crytek announced that it would be remastering Crysis for PC, Xbox One, PS4 and Switch, and intended to release it in July (the company clearly missed a trick here, as the original game is set in August 2020!).

This was soon followed by an apology for delaying the release, which the company said was needed to bring the game up to "the standards we expect from Crysis games."

The reaction to the trailer and the leaked gameplay footage was rather mixed: people criticized the lack of visual differences from the original (in some areas the game even looked noticeably worse). Unsurprisingly, the announcement was followed by heaps of comments echoing old memes, and even Nvidia decided to get involved with a tweet saying it "turns on its supercomputer."

When the first game came to the Xbox 360 and PS3, some improvements were made, mainly to the lighting model, but texture resolution and object complexity were significantly reduced.

Since the remaster is slated for release on multiple platforms, it will be interesting to see whether Crytek continues its tradition or takes the safe road to keep everyone happy. The 2007 Crysis still lives up to its reputation today, and deservedly so. It set a bar for what PC games can achieve that developers are still striving to reach.
