Real-time rendering for virtual reality creates a unique range of challenges, the main ones being the need to support photorealistic effects, achieve high resolutions and increase refresh rates. To address these challenges, Facebook Reality Labs researchers developed DeepFocus , a rendering engine we introduced in December 2018; it uses AI to create ultra-realistic graphics in variable focal length devices. At this year's SIGGRAPH virtual conference, we presented a further development of this work, opening a new milestone on our journey towards future high-definition displays for VR.

Our technical article for SIGGRAPH titled Neural Supersampling for Real-Time Rendering provides a machine learning solution that converts low-res input images to high-res images for real-time rendering. This upsampling process uses neural networks trained on scene statistics to recover accurate details, while reducing the computational cost of rendering those details in real-time applications.

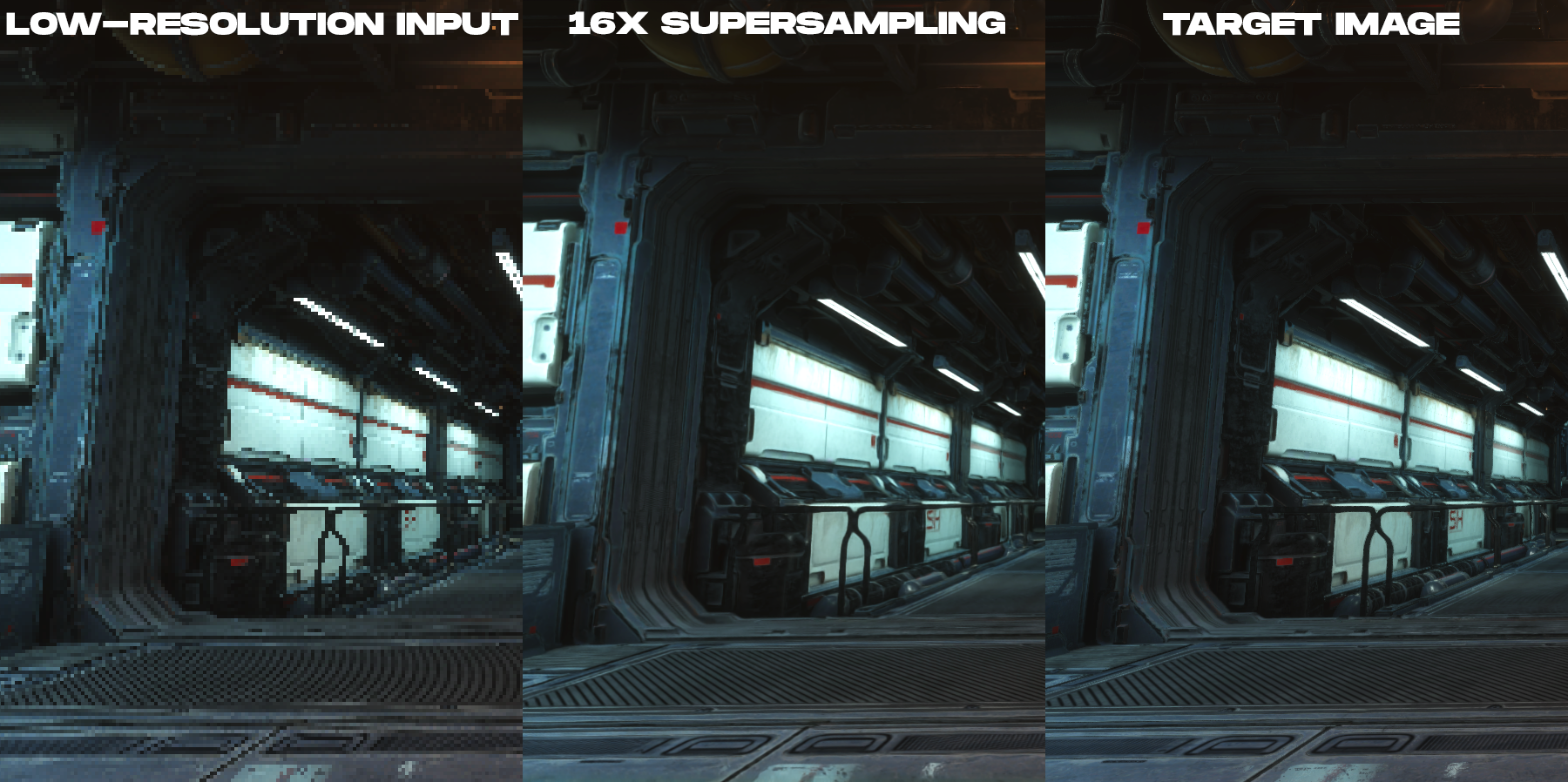

Our solution is a pre-trained supersampling technique that achieves 16x supersampling of content for rendering with high spatial and temporal fidelity, vastly outperforming previous work.

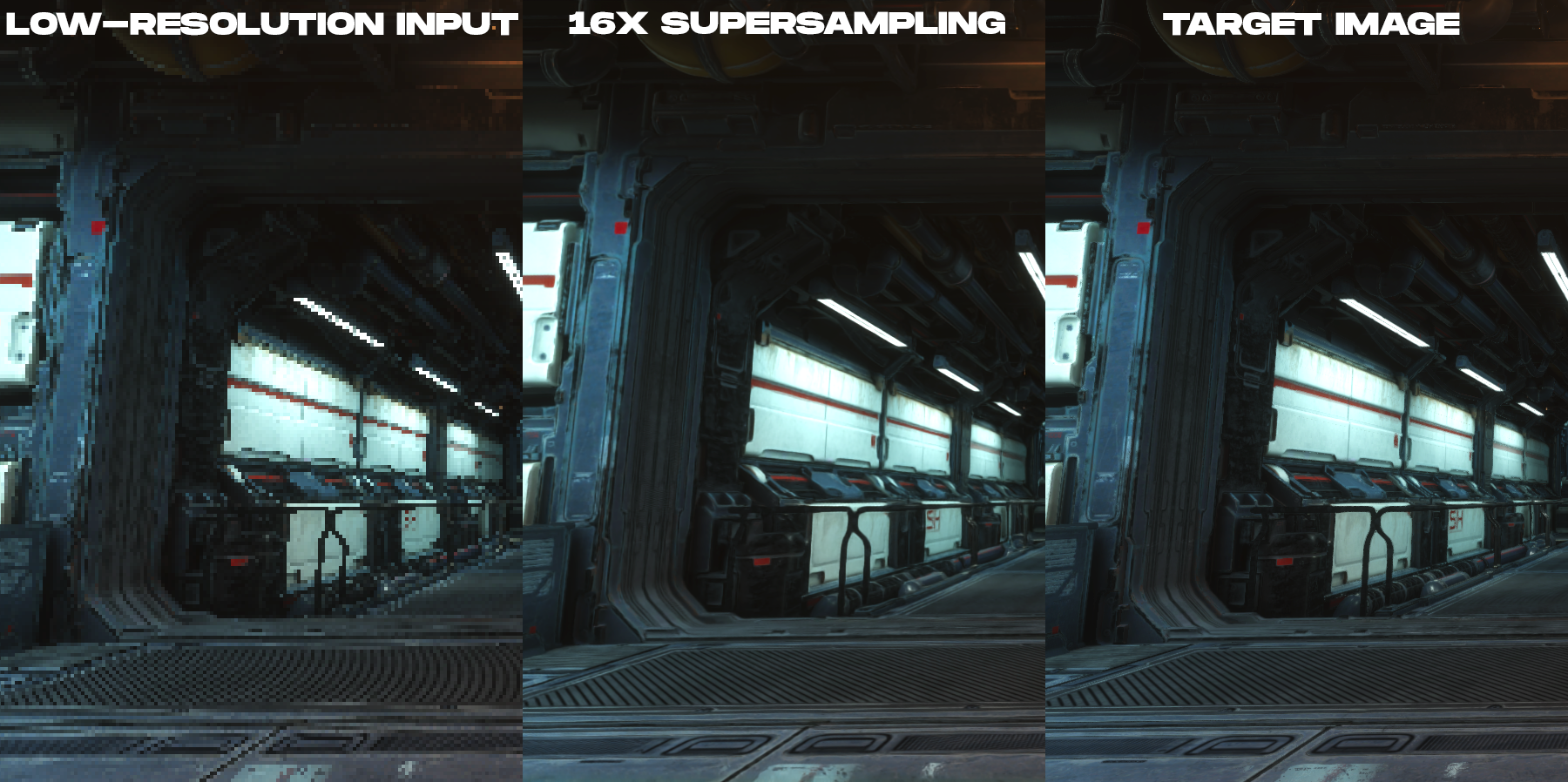

Animation to compare the rendered low-resolution color image and the 16x supersampling output image created by the new neural supersampling technique.

What is this study about?

To reduce the cost of rendering on high-resolution displays, our method takes an input image that has 16 times fewer pixels than the desired output image. For example, if the target display has a resolution of 3840 × 2160 , the network starts our with an input image size of 960 × 540 , otrendernnogo game engines, and then performs its upsampling to a desired resolution of the display in real-time post-processing.

While there has already been a wealth of research into learning-based upsampling of photographic images, none of this work has directly addressed the unique needs of rendered content such as images produced by video game engines. This is because there are fundamental imaging differences between rendered and photographic images. In real-time rendering, each sample is a point in both space and time. This is why rendered content tends to have severe distortion, jagged lines, and other sampling artifacts seen in the low-resolution image examples in this post. Because of this, upsampling of rendered content becomes a task of both anti-aliasing and interpolation, rather than a task of eliminating noise and blur.which is well studied by computer vision experts. The fact that the incoming images are highly distorted and that there is absolutely no information for interpolation in the pixels creates significant difficulties in constructing a highly accurate reconstruction of the rendered content with time integrity.

, ( , ), .

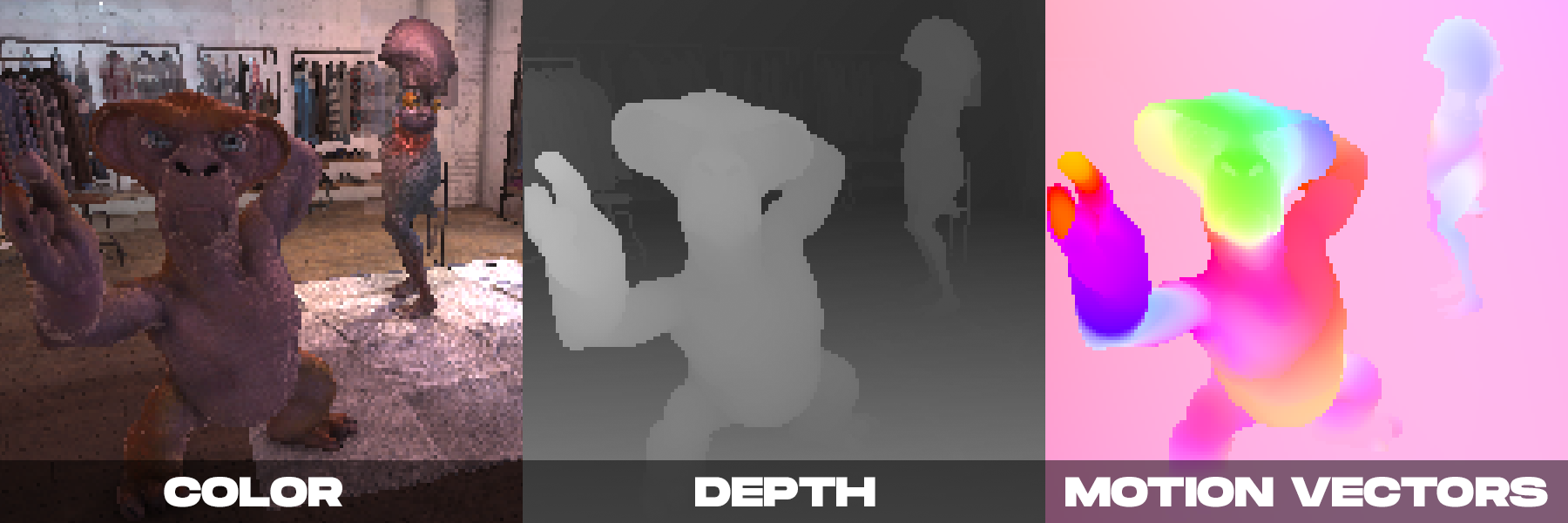

On the other hand, when rendering in real time, we may have several color images produced by the camera. As we demonstrated in DeepFocus, modern rendering engines also provide auxiliary information such as depth values. We noticed that with neural supersampling, the additional ancillary information provided by the motion vectors was particularly important. Motion vectors define geometric relationships between pixels in consecutive frames. In other words, each motion vector points to a sub-pixel location at which a surface point visible in one frame may have been in a previous frame. For photographic images, such values are usually calculated using computer vision methods, but such algorithms for calculating optical motion are prone to errors.In contrast, the rendering engine can generate dense motion vectors directly, thus providing reliable and sufficient input for neural supersampling applied to the rendered content.

Our method is based on the above observations, combining additional ancillary information with a new spatio-temporal neural network scheme designed to maximize image and video quality while providing real-time performance.

When making a decision, our neural network receives as input the rendering attributes (color, depth map, and dense motion vectors of each frame) of both the current and several previous frames, rendered at low resolution. The output of the network is a high-resolution color image corresponding to the current frame. The network uses supervised learning. During training, with each incoming low-resolution frame, a high-resolution reference image with anti-aliasing methods is matched, which is the target image for training optimization.

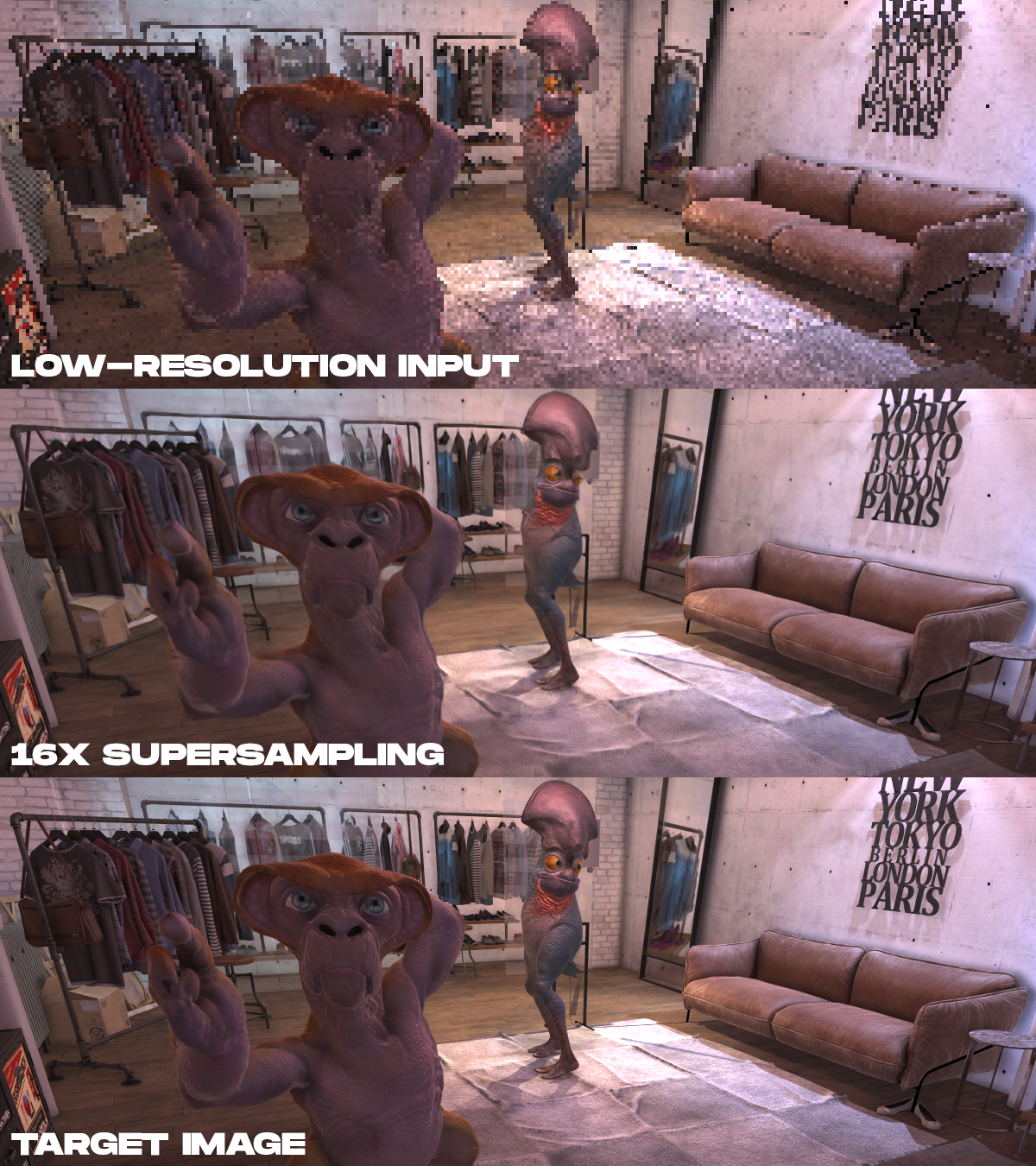

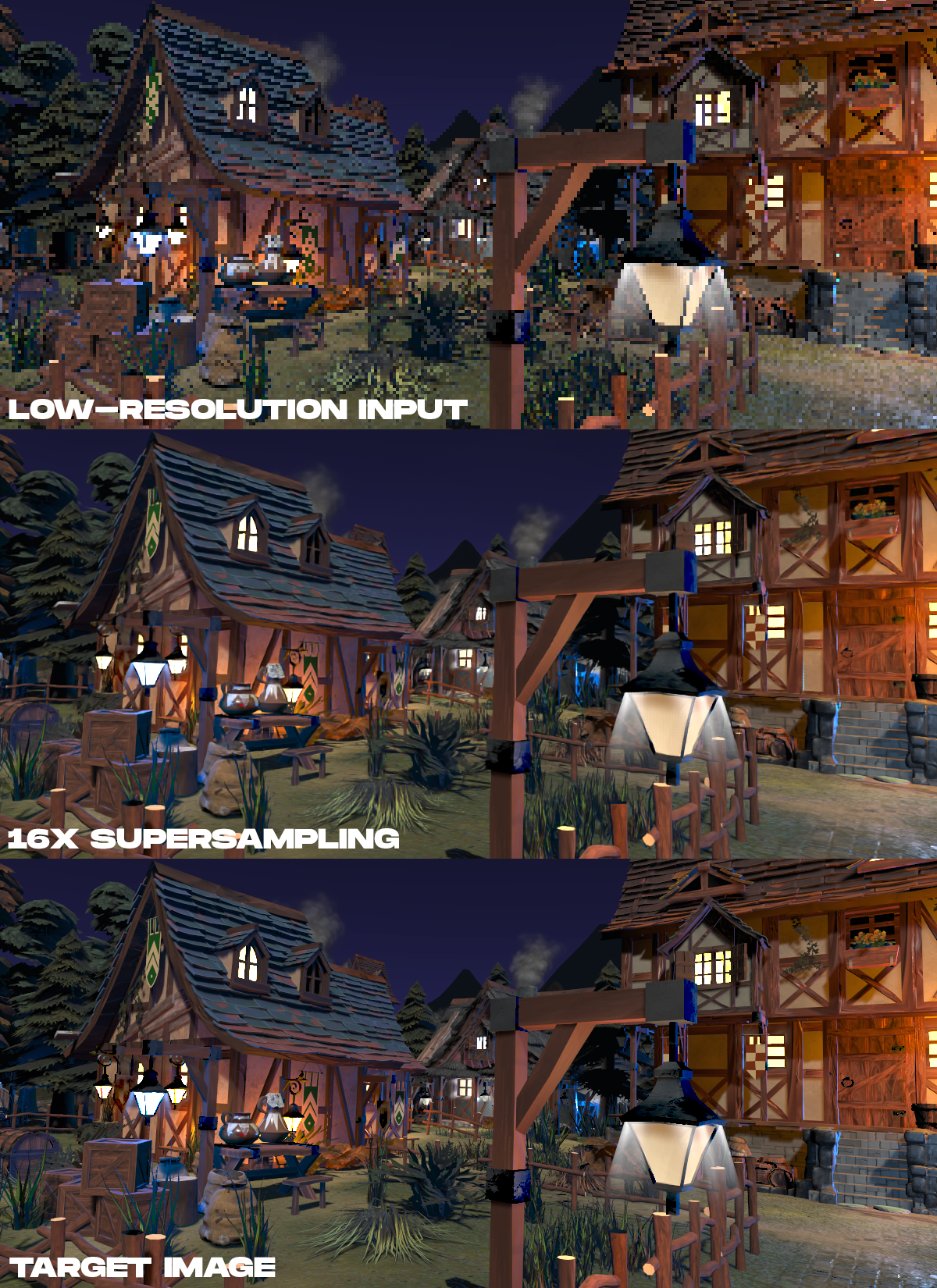

Examples of results. From top to bottom, the rendered input color data is shown at low resolution, the result of supersampling by 16 times performed by the proposed method, and the high-resolution target image, which was rendered in non-real time.

. , 16 , , , .

. , 16 , , , .

?

Neural rendering has tremendous potential in AR / VR. Although this task is difficult, we want to inspire other researchers to work on this topic. As display manufacturers for AR / VR strive for higher resolutions, higher frame rates, and photorealism, neural supersampling techniques could be a key way to recover accurate detail from scene data, rather than directly rendering. This work makes us understand that the future of high-definition VR lies not only in displays, but also in the algorithms needed to practically drive them.

Full technical article: Neural Supersampling for Real-time Rendering , Lei Xiao, Salah Nouri, Matt Chapman, Alexander Fix, Douglas Lanman, Anton Kaplanyan, ACM SIGGRAPH 2020.

See also: