One way or another, we have all encountered users with vision problems. Those responsible for a UI, whether for a website, a mobile app or any other software, most likely know that they need to take such users into account, and so they build high-contrast modes, enlarged fonts and so on.

But what if the user is completely blind and none of these modes makes life any easier? This is where screen readers and speech synthesizers come in; blind users cannot do without them. Today I would like to tell you about one such synthesizer.

It is called RhVoice and has been mentioned in several publications on Habr. But did you know that many consider it the best free synthesizer of Russian (and not only Russian) speech, and that it was written single-handedly by a completely blind developer, Olga Yakovleva?

Today we will restore historical justice and learn a little about the synthesizer in general and about Olga in particular.

Let's lay all the cards on the table right away: the synthesizer lives on GitHub.

The synthesizer's code is distributed free of charge under the GPL, which means that anyone can integrate it into their product. It is available on three platforms: Windows, Linux and Android. Olga develops it alone and works in Linux. Users themselves, and not only people with poor eyesight, consider it the best open-source synthesizer of Russian speech. The synthesizer uses statistical parametric synthesis and builds on existing projects such as HTS and on published research. HTS combines deep neural networks with hidden Markov models. The task of a hidden Markov model is to infer unknown (hidden) parameters from observable ones; it can be regarded as the simplest dynamic Bayesian network. HTS itself grew out of another project, HTK. What matters most for us is that some of this work has been published for free use, including descriptions of the algorithms and techniques.
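To make the idea of recovering hidden values from observable ones more concrete, here is a minimal sketch of the Viterbi algorithm for a hidden Markov model. It is a toy illustration only, not code from RhVoice or HTS: the states, observations and all probabilities below are invented for the example.

```python
from typing import Dict, List, Sequence


def viterbi(
    observations: Sequence[str],
    states: Sequence[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
) -> List[str]:
    """Return the most likely sequence of hidden states for the observations."""
    # best[t][s] = (probability of the best path ending in state s at step t,
    #               the state that precedes s on that path)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        layer = {}
        for s in states:
            layer[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s][obs], p)
                for p in states
            )
        best.append(layer)

    # Start from the most probable final state and walk the back-pointers.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for layer in reversed(best[1:]):
        state = layer[state][1]
        path.append(state)
    return list(reversed(path))


# Hypothetical toy model: is the speaker silent or talking, judging by loudness?
STATES = ("silence", "speech")
START_P = {"silence": 0.6, "speech": 0.4}
TRANS_P = {
    "silence": {"silence": 0.7, "speech": 0.3},
    "speech": {"silence": 0.2, "speech": 0.8},
}
EMIT_P = {
    "silence": {"quiet": 0.9, "loud": 0.1},
    "speech": {"quiet": 0.3, "loud": 0.7},
}
```

Calling `viterbi(["quiet", "loud", "loud", "quiet"], STATES, START_P, TRANS_P, EMIT_P)` returns the hidden-state sequence that best explains the observed loudness values. A real synthesizer works with acoustic parameters rather than two loudness labels, but the principle of inferring what cannot be observed directly is the same.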

The synthesizer is positioned as a tool for everyday work. It can also be used for more creative purposes, such as voicing books, but books are still better when read by people.

Olga started the project almost 10 years ago, when she began learning Linux and could not find a synthesizer there that suited her. She writes all the code herself using a Braille display, a device that renders text as six-dot Braille characters. She also uses JAWS, a screen reader that traces its history back to DOS and was likewise created with the active participation of blind people.

Now that the introduction has been given, let's delve a little deeper into the world of speech synthesizers.

What is a speech synthesizer and what does it include?

Traditionally, a synthesizer is considered to consist of two parts: a language component and a speech signal generation component. The language component analyzes the text received from the screen reader. Its task is to break the text into sentences, and the sentences into phrases, words and syllables. Finally, it builds a transcription of every word and derives a map of sounds from it (as everyone knows, words are not always pronounced the way they are written). This analysis can go to different depths. RhVoice, for example, lacks the resources for complex operations such as determining a word's part of speech or its role in the sentence. Either way, the parsing yields a sequence of sounds that the speech signal generation component must assemble using a database of pre-recorded sounds. We will look at each component in more detail later.
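The first steps of the language component can be sketched in a few lines. This is a deliberately naive illustration, not how RhVoice does it: the function names and the exceptions table are hypothetical, and the fallback "one letter equals one sound" is far cruder than real transcription rules. The one exception used here is the Russian word "что", which is spelled with "ч" but pronounced with "ш".

```python
import re
from typing import Dict, List, Tuple

# Hypothetical exceptions table: words whose sounds differ from their spelling.
EXCEPTIONS: Dict[str, List[str]] = {
    "что": ["ш", "т", "о"],
}


def split_sentences(text: str) -> List[str]:
    """Split text after sentence-final punctuation followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text)
    return [p.strip() for p in parts if p.strip()]


def split_words(sentence: str) -> List[str]:
    """Extract lowercase words, dropping digits and punctuation."""
    return re.findall(r"[^\W\d_]+", sentence.lower())


def transcribe(word: str) -> List[str]:
    """Look the word up in the exceptions table, else map letters to sounds 1:1."""
    return EXCEPTIONS.get(word, list(word))


def analyze(text: str) -> List[List[Tuple[str, List[str]]]]:
    """Return, for every sentence, a list of (word, sounds) pairs."""
    return [
        [(word, transcribe(word)) for word in split_words(sentence)]
        for sentence in split_sentences(text)
    ]
```

For example, `analyze("Что это? Это синтезатор.")` yields two sentences, and the first word of the first one gets the sounds from the exceptions table instead of its spelling. A real language component would add phrase and syllable boundaries, stress and many more rules on top of this skeleton.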

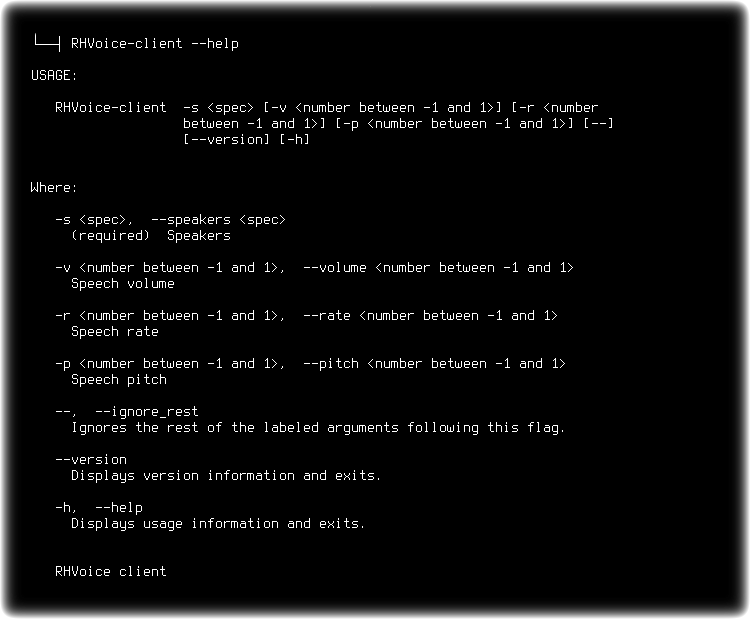

Demonstration of working with a synthesizer

Olga's story

Olga lives in Cheboksary, graduated from the Faculty of Mathematics of Chuvash State University and works as a programmer. Like all blind people, she studied at specialized schools. Her first six grades were at a school in Nizhny Novgorod, because at that time no school in Cheboksary accepted completely blind children. Six years later she managed to return to her hometown and finished her studies in Cheboksary. It was at that school that Olga fell in love with mathematics, which later allowed her to enter the Faculty of Mathematics. At one point she considered applying to the ICT faculty, but lack of confidence in her own abilities held her back. Besides, there were several blind graduates of the Faculty of Mathematics, and at the introductory interview the dean said that its programmers were trained even better than those at the ICT faculty.

Olga first worked with computers not at school but at the university, whose library had bought computers specially equipped for blind users, with the JAWS program installed (a screen reader whose history goes back to 1989). There she studied Sarah Morley's famous Windows 95 textbook. You are probably surprised: how can a textbook be famous if you have never heard of it? The answer lies in its title: "Windows 95 for the blind and visually impaired". Such textbooks differ from the ones familiar to the rest of us mainly in their emphasis on describing the various on-screen objects and the ways to operate them. For a blind person, an instruction like "click the drop-down list and select the desired menu item" is of little use: they cannot see the screen or the mouse cursor and, what is more, do not know what a window or a drop-down list looks like. Incidentally, this leads to another non-obvious nuance: blind people can become hostages to the bitness of the synthesizer they use. Five years ago, when switching to Windows 8, many found that their speech synthesizers did not support 64-bit applications and switched to RhVoice, where this support was already implemented.

But let's go back to the time when Olga was just beginning to explore this new field. The speech synthesizer of the day was Digalo with the voice Nikolay. This pairing is so canonical that practically everyone who has been online has heard its output. The voice has become synonymous with the word "robotic", so firmly has it entered Internet culture and so many YouTube videos has it appeared in. This is probably why most people are sure that Digalo is Nikolay's surname.

Digalo Nikolay in all its glory

Getting started on your own project

Olga's journey into the world of synthesizers began around 2010, when she developed a driver connecting the Festival synthesizer to NVDA (NonVisual Desktop Access). NVDA is a free screen reader that lets visually impaired and blind people fully interact with their computer. Programs of this class include a speech synthesizer and can output to a Braille display.

Thanks to Festival, Olga plunged into the world of speech synthesizers and discovered that not only commercial companies but anyone who wishes can make a computer speak. By then there were already several open speech synthesizers, distributed mainly by scientists studying speech synthesis.

So Olga based her first experiments on the work of more experienced colleagues around that same Festival. It is an academic speech synthesizer created in 1995 by a group of scientists led by Alan Black. They developed synthesis methods and, based on their research, built their own synthesizer, which was originally just a demonstration of their results. Over time it was joined by an equally important project, FestVox, which makes it possible to build new artificial voices, all topped off with fairly good documentation. By that time Festival already had a Russian voice, Alexander, with a fairly good speech database.

What is a speech database? In the case of RhVoice, it is more than a thousand specially chosen sentences read by a speaker with clear, unemotional pronunciation. The sentences must be selected so that they contain all diphones, that is, all combinations of two phonemes, preferably several times each for greater variety. As far as anyone recalls, the first versions used about 600 phrases. From these units the synthesizer can then form any word. In English this method is called unit selection; in Russian it is known as the method of selecting speech units. It may not be the most fashionable approach, but it works as reliably as an iron. Each sentence is entered into the database and analyzed: the sounds are identified along with their positions in syllables, words and sentences, and individual phonemes are classified together with their position relative to one another, and so on. During the reverse operation, that is, speech synthesis, each phoneme obtained from the transcription is simply matched with the most suitable (read: closest) example from the database. Sometimes an exact match can be found; sometimes you have to settle for the most similar one. Philologists call this field theoretical and practical phonetics, and it was not invented yesterday, so you cannot work on speech synthesizers without reading phonetics textbooks. By the way, especially good textbooks have been published by Moscow State University.
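The "pick the closest example from the database" step can be illustrated with a small sketch. Everything in it is an assumption made for the example: the `Unit` fields, the equal weighting of features and the sentence labels are hypothetical, and a real unit-selection system also scores how well neighbouring units join together, which is omitted here.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Unit:
    phoneme: str    # the sound itself
    prev: str       # phoneme to the left
    next: str       # phoneme to the right
    stressed: bool  # whether the sound sits in a stressed syllable
    source: str     # hypothetical label of the recorded sentence it came from


def target_cost(target: Unit, candidate: Unit) -> int:
    """Count the context features on which the candidate differs from the target."""
    return (
        (target.prev != candidate.prev)
        + (target.next != candidate.next)
        + (target.stressed != candidate.stressed)
    )


def select_unit(target: Unit, database: Iterable[Unit]) -> Unit:
    """Return the recorded unit of the same phoneme with the closest context."""
    candidates = [u for u in database if u.phoneme == target.phoneme]
    if not candidates:
        raise LookupError(f"no recorded example of phoneme {target.phoneme!r}")
    return min(candidates, key=lambda u: target_cost(target, u))
```

With a database containing an "a" recorded between "m" and "t" under stress and another "a" recorded between "k" and "t" without stress, a request for an unstressed "a" between "k" and "n" returns the second unit: it is not an exact match, merely the most similar one available.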

And where do these sentences come from? You can write them yourself, but that is quite laborious, and there are two alternatives. You can take a text that someone has already written, but this may violate copyright or cost extra money. That is why the authors of some voices use texts from Wikipedia. For major languages such as English or Russian, the necessary examples are easy to find there. Smaller languages are less lucky: with the Belarusian Wikipedia, for example, this trick did not work.
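One plausible way to choose recording sentences from a large text source is a greedy search that keeps picking the sentence covering the most diphones not yet seen. The sketch below assumes the sentences have already been transcribed into phoneme lists; the function names and the toy corpus are hypothetical, and nothing here is claimed to be the procedure used for RhVoice voices.

```python
from typing import Dict, List, Set, Tuple

Diphone = Tuple[str, str]


def diphones(phonemes: List[str]) -> Set[Diphone]:
    """Return every pair of adjacent phonemes in the sequence."""
    return set(zip(phonemes, phonemes[1:]))


def pick_sentences(corpus: Dict[str, List[str]]) -> List[str]:
    """Greedily pick sentence ids until every diphone in the corpus is covered."""
    remaining: Set[Diphone] = set()
    for phonemes in corpus.values():
        remaining |= diphones(phonemes)

    chosen: List[str] = []
    while remaining:
        # The sentence that adds the most still-missing diphones wins.
        best = max(corpus, key=lambda sid: len(diphones(corpus[sid]) & remaining))
        chosen.append(best)
        remaining -= diphones(corpus[best])
    return chosen
```

The greedy choice does not guarantee the smallest possible set, but it keeps the recording list short. If the source text lacks some diphones altogether, no selection strategy can help, which is one way a small Wikipedia can fall short.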

What is the difference between a language and a voice? After all, seven years ago there was still no Ukrainian or Tatar voice. How do they appear in synthesizers?

Voices in speech synthesizers

It all starts with analysis of the language: creating a database that formally describes its phonetic system. Such databases may already have been built by someone and be on sale (for very good money). Otherwise, you have to build one yourself. A separate program is developed to analyze each language, which can take up to a year and a half depending on the complexity of the language. Italian, for example, is very simple in terms of speech synthesis, while Arabic and Chinese are extremely complex. On average, though, a language analyzer takes a year to create. Once it is ready, a voice is produced, which takes about three months. Recording the speaker and other studio work takes two to three weeks, because a speaker can read with good quality for only about four hours a day; after that they get tired and no longer sound clean enough. If this seems like excessive fussiness, it is not: very serious demands are placed on the quality of these recordings. Commercial companies hold full-scale castings, selecting not only for the subjective beauty of a voice but also, where possible, for a voice that suits the specific language in their synthesizer.

The recording is then segmented into chunks according to the database, and the language analyzer is used to combine these chunks. That is, the analyzer determines that this word is a noun and that one a verb, that it stands next to such-and-such and should therefore sound a certain way, and the closest phoneme is substituted. So the analyzer's role is extremely important: it must take into account not only the arrangement of syllables in a word but also the arrangement of words in a sentence and the punctuation. All of this affects pronunciation. In some languages the same word is pronounced differently depending on whether it is a noun or a verb.

But this is more the route for commercial products, whose creators have the resources for such in-depth research. Independent developers use simpler options, without a full part-of-speech classification: for example, distinguishing only content words, prepositions, conjunctions and so on. Olga went her own way and wrote her language module based on textbooks and articles on phonetics. Fortunately, plenty of research on the topic has been published.

Have you noticed that most synthesizers get female voices first? This is not because of the authors' preferences but because a female voice is harder to develop. A female voice is naturally higher, and high frequencies are harder to process than low ones. If you manage to create a female voice, a male one will certainly work out; the reverse is not guaranteed.

In Olga's case the motivation is personal passion for the subject and even necessity. What about commercial development? How do companies decide which languages to add? The answer to everything is money. The first and obvious option is to analyze the potential market for new voices: put simply, how wealthy the country is and whether its inhabitants can afford the product. The second incentive is more interesting: the desire of a government or other organization to have speech synthesis for a given language. This is how synthesizers came to be made for very small languages, simply because someone cared and allocated money for development. In the Scandinavian countries, for example, there are laws requiring all written documents to be accessible to the blind and visually impaired, so every published newspaper must have an audio version.

To give a sense of the prices: developing a new voice at a private company costs about ten to forty thousand euros, depending on the complexity of the language. Developing the analyzer module costs many times more. As for RhVoice, Olga has a principled position: her project will always be free. So where does the money for the speakers come from? In the early stages, volunteers offered their help. They had their own studio and offered to pay the speaker, so all Olga had to do was send a list of sentences to record. This is how several new languages appeared in RhVoice. After that, people began approaching her with specific requests.

But further development depends on finding the necessary resources freely available. For example, there used to be no open stress dictionary for Ukrainian, and a synthesizer cannot be built without knowing where the stress falls. It has since been added, but that took a lot of work. Russian is much more fortunate in terms of available materials. And the canonical voice "Alexander" was made publicly available by its creator, which is what allowed Olga to begin her first experiments in building a speech synthesizer.

How can you create a synthesizer for a language you do not know at all? Say you know Russian and English but are asked to develop Arabic. There are no technical limitations; the main thing is to find articles and materials on the Internet about the language and its structure, or even to consult a philologist. This may be enough to develop an initial speech synthesizer. After all, the starting information is by and large standard: a list of phonemes, rules for transcribing spelling into pronunciation, details about function words, and so on. The main problem is that the developer cannot verify the results without a native speaker. And the native speaker must not only say whether the speech is intelligible but also explain all the subtleties of the places where something went wrong. For RhVoice, Tatar turned out to be such a difficult language. Olga was helped a great deal by philologists, to whom she was introduced by representatives of the Kazan Library for the Blind and Visually Impaired, who had initiated the work. In the course of the work, a separate dictionary was even compiled for the correct pronunciation of words borrowed from Russian, so that borrowings sound according to the rules of Tatar rather than Russian. It is good that this dictionary was compiled by professional philologists. For Kyrgyz, for example, no such dictionary exists, and there are many problem areas for which no technical solution has yet been found.

Stress placement is a separate problem. In some languages the position of the stress can be predicted, but in Russian and Ukrainian you cannot do without a dictionary. There are stress prediction algorithms, but they are themselves built on these dictionaries, so nothing can be done without a basic dictionary.
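A rough sketch of how a stress dictionary and a prediction step built on top of it might fit together: known words are looked up directly, and unknown words borrow the stress position most common among dictionary words with the same ending. The suffix heuristic, the function names and the five-word dictionary are assumptions made for illustration; this is not the algorithm RhVoice uses.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional

# Toy stress dictionary: word -> stressed syllable counted from the end
# (1 = last syllable, 2 = second to last).
STRESS_DICT: Dict[str, int] = {
    "молоко": 1,
    "окно": 1,
    "кино": 1,
    "мама": 2,
    "рама": 2,
}


def build_suffix_model(stress_dict: Dict[str, int], max_suffix: int = 3):
    """Count, for every word ending, which stress positions it occurs with."""
    model = defaultdict(Counter)
    for word, position in stress_dict.items():
        for n in range(1, min(max_suffix, len(word)) + 1):
            model[word[-n:]][position] += 1
    return model


def predict_stress(word: str, stress_dict: Dict[str, int], model,
                   max_suffix: int = 3) -> Optional[int]:
    """Use the dictionary if possible, else the longest known ending."""
    if word in stress_dict:
        return stress_dict[word]
    for n in range(min(max_suffix, len(word)), 0, -1):
        counts = model.get(word[-n:])
        if counts:
            return counts.most_common(1)[0][0]
    return None  # nothing to go on: the dictionary has no similar word
```

With this toy data, "вино" is not in the dictionary but shares the ending "ино" with "кино", so the sketch predicts stress on the last syllable. A word whose endings never occur in the dictionary gets no prediction at all, which shows why the prediction step cannot replace the dictionary it is trained on.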

What does the future hold? Or rather, which new features or improvements do users ask for most often? The undisputed leader is the request to add one language or another. Work on new languages is under way, but as mentioned above, it is not fast and depends on help from outside specialists. Many people also ask for better sound quality, to bring it even closer to natural speech. With the tools available to Olga, however, no dramatic improvement is to be expected here, although the sound does change a little from version to version.

Olga now hopes that ready-made neural network components will appear, written in low-level C-like languages and fast enough for mobile devices. If something runs on mobile phones, it will run on other platforms too. Such projects are already in development, and once they mature she will be able to rework her synthesizer. Another important problem is that RhVoice offers no simple, straightforward way to add your own language and voice. There are people ready to pay for this work, but the problem is a classic one: there are many requests and only one Olga, and, as in most for-fun projects, the codebase is such an enchanted forest that figuring it out is a deadly task for anyone but its creator. In most projects of this kind, the developers provide a set of tools and documentation with which anyone who knows the phonetics of a language and has the other necessary knowledge can create a language module. So far Olga has neither, but there are plans to provide them.

In conclusion, I would like to say that this is how, thanks to one enthusiastic person, very good work has been done over many years. Thank you very much, Olga.

If you also want to thank Olga for her selfless work, or even take part in the development of RhVoice and help the project with your knowledge, experience or sponsorship, you can do so by contacting Olga through her GitHub.