Have you ever wondered how a computer finds the objects it needs in a video stream? At first glance this looks like an advanced programming task that relies on a pile of formulas from mathematical analysis, discrete mathematics and so on, and that demands a huge amount of knowledge just to write the "Hello, world" of video image recognition. What if you were told that it is now much easier to get into computer vision, and that after reading this article you will be able to write your own program that teaches your computer to see and detect faces? At the end of the article you will also find a bonus: a way to improve the security of your PC using computer vision.

For your computer to start understanding that what you are showing it looks like a face, we need:

- Computer;

- Web camera;

- Python 3;

- Your favorite code editor (PyCharm, Jupyter, etc.).

Once you have everything from the list above, we can start writing our face detection program.

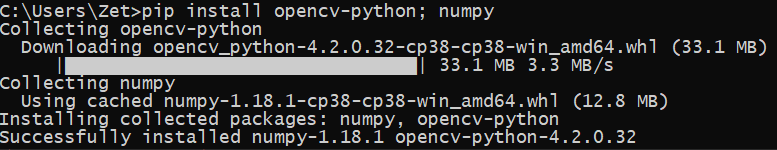

First, install the necessary Python libraries by running this command in the command line:

    pip install opencv-python numpy

Briefly, here is why we need them:

OpenCV. This library is the backbone of a great many computer vision projects. It contains hundreds of computer vision and image processing algorithms. It is written in C/C++, and because of the demand for it in Python, it also ships with Python bindings (the cv2 module).

NumPy. OpenCV represents images as NumPy arrays, so every frame we read from the camera will be one.

With the libraries installed, open your favorite code editor and start writing the program:

1. Import the OpenCV computer vision library:

    import cv2 as cv

2. Capture the video stream from the web camera with VideoCapture(index), where index is the number of the camera in the system. If there is only one camera, the index is 0.

    capture = cv.VideoCapture(0)

3. To get the video, use the read() method. It returns two values: rtrn, a flag that shows whether a frame was captured successfully, and image, the frame itself (a NumPy array). We will keep reading frames from the video stream until the Escape key is pressed.

    while True:
        rtrn, image = capture.read()
        if not rtrn:  # no frame received, e.g. the camera is unavailable
            break
        cv.imshow("Capture from Web-camera", image)  # show the frame in a window
        if cv.waitKey(1) == 27:  # Esc
            break

    capture.release()
    cv.destroyAllWindows()

Save the file and run it from the command line with python our_file_name.py. Our program can now receive a video stream from the web camera! This means we are already halfway to having the computer detect faces.
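As a side note on what `image` contains: each frame returned by read() is an ordinary NumPy array. The sketch below builds a synthetic frame instead of reading from a camera, assuming a 640x480 color image. The helper name `writer_size` is illustrative and not part of OpenCV. It shows the (height, width, channels) layout and the blue-green-red channel order that OpenCV uses.

```python
import numpy as np

# Synthetic stand-in for a camera frame: 480 rows, 640 columns, 3 channels.
frame = np.zeros((480, 640, 3), dtype=np.uint8)

# OpenCV stores color channels in B, G, R order, so this paints the frame red.
frame[:, :] = (0, 0, 255)


def writer_size(image):
    """Return (width, height), the order OpenCV uses for frame sizes."""
    height, width = image.shape[:2]
    return (width, height)


print(frame.shape)         # (480, 640, 3)
print(frame.dtype)         # uint8
print(writer_size(frame))  # (640, 480)
```

Note that the array shape lists the height first, while OpenCV functions that take a frame size expect (width, height).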

4. Now let's make the computer write the video to a file as well:

Specify the codec used to save the video, the name of the output file, the frame rate (fps) and the frame size. For our task, we take the XVID codec.
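As background, XVID is a FourCC: a four-character code that identifies the codec. `cv.VideoWriter_fourcc` packs those four characters into a single integer. The pure-Python sketch below mirrors that packing for illustration only; the helper names `fourcc` and `fourcc_to_text` are hypothetical, and the real program should keep calling `cv.VideoWriter_fourcc`.

```python
def fourcc(code):
    """Pack a four-character codec code into one integer, first character lowest."""
    if len(code) != 4:
        raise ValueError("a FourCC code has exactly four characters")
    value = 0
    for position, char in enumerate(code):
        value |= (ord(char) & 0xFF) << (8 * position)
    return value


def fourcc_to_text(value):
    """Unpack the integer back into its four characters."""
    return "".join(chr((value >> (8 * i)) & 0xFF) for i in range(4))


print(fourcc("XVID"))                  # 1145656920
print(fourcc_to_text(fourcc("XVID")))  # XVID
```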

    import cv2 as cv

    capture = cv.VideoCapture(0)
    codec = cv.VideoWriter_fourcc(*'XVID')

5. Show each frame in the video window and write it to the file through the output object. The recording goes to the file "saved_from_camera.avi", which is finalized when output.release() is called after the video stream ends:

    # The frame size (640, 480) should match the size of the frames from the camera
    output = cv.VideoWriter('saved_from_camera.avi', codec, 25.0, (640, 480))

    while capture.isOpened():
        rtrn, image = capture.read()
        if cv.waitKey(1) == 27 or not rtrn:  # Esc pressed or no frame received
            break
        cv.imshow('video for save', image)
        output.write(image)

    output.release()
    capture.release()
    cv.destroyAllWindows()

Now that we have learned how to take video from a web camera and save it to a file, we can move on to the most interesting part: detecting faces in the video stream. To find faces in the frames, we will use so-called Haar features. The idea is that if we take rectangular areas of the image, the difference in intensity between the pixels of adjacent rectangles lets us pick out features that are characteristic of faces.

For example, in images of faces, the area around the eyes is darker than the area around the cheeks. So one Haar feature for faces is a pair of adjacent rectangles, one over the eyes and one over the cheeks.
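To make the eyes-and-cheeks example concrete, here is a minimal sketch of a two-rectangle feature computed on a toy 4x4 patch. It assumes NumPy and uses a summed-area table (integral image), the usual trick for summing rectangles quickly. The function names and the pixel values are made up for illustration; this is not how OpenCV exposes its features.

```python
import numpy as np


def integral_image(gray):
    """Summed-area table with an extra zero row and column at the top-left."""
    table = np.zeros((gray.shape[0] + 1, gray.shape[1] + 1), dtype=np.int64)
    table[1:, 1:] = gray.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return table


def rect_sum(table, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left (x, y), width w, height h."""
    return int(table[y + h, x + w] - table[y, x + w] - table[y + h, x] + table[y, x])


def two_rect_feature(table, x, y, w, h):
    """Lower rectangle minus the upper one; both are w wide and h tall."""
    upper = rect_sum(table, x, y, w, h)
    lower = rect_sum(table, x, y + h, w, h)
    return lower - upper


# Toy patch: a dark band (eyes) above a bright band (cheeks).
patch = np.array([
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
], dtype=np.uint8)

table = integral_image(patch)
print(two_rect_feature(table, 0, 0, 4, 2))  # 1520
```

A large positive value means the lower rectangle is much brighter than the upper one, as with cheeks below eyes. On a patch of uniform brightness the same feature is 0.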

There are many other, faster and more accurate methods for detecting objects in an image, but Haar features are enough for understanding the general principles.

The OpenCV developers have already done the work of training detectors on Haar features and ship the results with the library, so anyone can use them to process a video stream.

Let's start writing a face detector for our webcam:

6. First, load the Haar features and set the parameters for capturing the video stream. The features file is located where the Python libraries are installed. By default, that is

    C:/Python3X/Lib/site-packages/cv2/data/haarcascade_frontalface_default.xml

where X is your Python 3 minor version.

    import cv2 as cv

    cascade_of_face = cv.CascadeClassifier('C:/Python3X/Lib/site-packages/cv2/data/haarcascade_frontalface_default.xml')

    capture = cv.VideoCapture(0)
    capture.set(cv.CAP_PROP_FPS, 25)  # request 25 frames per second

7. Next, in a loop, read frames from the web camera one at a time and pass each one to our face detector:

    while True:
        rtrn, image = capture.read()
        if not rtrn:  # no frame received
            break

        # The detector works on grayscale images
        gr = cv.cvtColor(image, cv.COLOR_BGR2GRAY)

        faces_detect = cascade_of_face.detectMultiScale(
            image=gr,
            minSize=(15, 15),
            minNeighbors=10,
            scaleFactor=1.2
        )

        # Draw a red rectangle around every detected face
        for (x_face, y_face, w_face, h_face) in faces_detect:
            cv.rectangle(image, (x_face, y_face), (x_face + w_face, y_face + h_face), (0, 0, 255), 2)

        cv.imshow("Image", image)
        if cv.waitKey(1) == 27:  # Esc key
            break

    capture.release()
    cv.destroyAllWindows()

8. Now let's put everything together and get a program that captures video from a web camera, detects faces in it and saves the result to a file:

    import cv2 as cv

    # Path to the cascade file on the author's machine; replace it with your own
    faceCascade = cv.CascadeClassifier('C:/Users/Zet/Desktop/Python/test_opencv/Lib/site-packages/cv2/data/haarcascade_frontalface_default.xml')

    capture = cv.VideoCapture(0)
    capture.set(cv.CAP_PROP_FPS, 25)

    codec = cv.VideoWriter_fourcc(*'XVID')
    output = cv.VideoWriter('saved_from_camera.avi', codec, 25.0, (640, 480))

    while True:
        rtrn, image = capture.read()
        if not rtrn:  # no frame received
            break

        gr = cv.cvtColor(image, cv.COLOR_BGR2GRAY)
        faces_detect = faceCascade.detectMultiScale(
            image=gr,
            minSize=(15, 15),
            minNeighbors=10,
            scaleFactor=1.2
        )

        # Draw a blue rectangle (colors are in BGR order) around every detected face
        for (x_face, y_face, w_face, h_face) in faces_detect:
            cv.rectangle(image, (x_face, y_face), (x_face + w_face, y_face + h_face), (255, 0, 0), 2)

        cv.imshow("Image", image)
        output.write(image)
        if cv.waitKey(1) == 27:  # Esc key
            break

    output.release()
    capture.release()
    cv.destroyAllWindows()

That's all! You've written a program that is a first step toward understanding how a computer sees. From here you can go further: for example, train neural networks so that the computer recognizes specific people in the video. You can also write a detector tuned to more complex objects (for example, for traffic tracking) that analyzes what it finds, or take on other, no less interesting computer vision tasks.
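A simple first experiment is the `scaleFactor` argument of detectMultiScale, which controls how quickly the search window grows between passes over the frame. The pure-Python sketch below is a simplified model of that idea, not OpenCV's actual code. It assumes a 24x24 base window for the frontal-face cascade and a frame 480 pixels high, and the function name `window_sizes` is hypothetical.

```python
def window_sizes(base=24, scale_factor=1.2, min_size=15, max_size=480):
    """List the square window sizes a multi-scale search would try (simplified)."""
    sizes = []
    factor = 1.0
    while True:
        size = round(base * factor)
        if size > max_size:
            break  # the window no longer fits in the frame
        if size >= min_size:
            sizes.append(size)
        factor *= scale_factor  # grow the window for the next pass
    return sizes


coarse = window_sizes(scale_factor=1.2)
fine = window_sizes(scale_factor=1.05)

print(coarse[:5])               # [24, 29, 35, 41, 50]
print(len(coarse) < len(fine))  # True
```

In this model a smaller scaleFactor means more window sizes to check, so detection is slower but less likely to skip over a face of an in-between size. A minSize of (15, 15) changes nothing here, because the search never goes below the base window.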

BONUS

Let's put the program to practical use: we will record who logs in to our account.

1. Open Task Scheduler (you can find it through the standard Windows Search);

2. Create a Basic Task and give it a name and a short description;

3. Click Next and go to the Trigger step. Here we choose the event that will launch our task. Select "When I log on";

4. Next, in the Action step, choose "Start a program";

5. In "Program/script", specify the path to python.exe, and in "Add arguments", the path to our program.

Done! Now, whenever someone logs in to the account, they will be recorded and the video will be saved. This way you can see who used the computer in your absence, and you keep the recording as evidence.
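One practical detail: the program always writes to saved_from_camera.avi, so each new login would overwrite the previous recording. A minimal sketch of one way around this, assuming a timestamp in the file name is acceptable, is shown below. The function name `recording_name` and the "login" prefix are illustrative choices.

```python
from datetime import datetime


def recording_name(moment=None, prefix="login"):
    """Build a file name such as login_2024-01-31_08-15-00.avi."""
    moment = moment or datetime.now()
    return f"{prefix}_{moment:%Y-%m-%d_%H-%M-%S}.avi"


print(recording_name(datetime(2024, 1, 31, 8, 15, 0)))  # login_2024-01-31_08-15-00.avi
```

The result can be passed to cv.VideoWriter in place of the fixed 'saved_from_camera.avi' string, so every login produces its own file.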