A reminder of who I am

Hi, Habr! I am Ivan Bakaidov. Many years ago I wrote about my school project: a program that lets people with severe physical disabilities type using a single button. Here is that article.

A lot has changed since then. The girl for whom I wrote the program passed away, and the project became known as LINKa. I have made many programs for other forms of motor impairment. I also realized that I had let slip the wave of volunteers that arose during the last Habr hype, because open source projects need ongoing maintenance.

But I have not abandoned developing communication programs. With money from the “Dud grant”, I built a new communicator that lets you select cards with your eyes. Now I need help adding a few features. Stack: C#, WPF.

With your eyes? o_O! How is that possible? Doesn't the head twitch?

For a long time, Habr commenters had been telling me about eye-tracking technology. I ignored those comments because I thought it was expensive and would not work with a shaking head. I was wrong!

Since 2016, Tobii (the Apple of the eye-tracking world) has been developing low-cost devices for the gaming market. These devices are 10 times cheaper than devices for “disabled people” (the law of the “special device” sticker). Within a couple of years they released the excellent Tobii 4C. You can buy it at an ordinary electronics store and plug it into a USB 2.0 port.

One charitable foundation handed me this device with the words “Give it a try, man.” I put it on the shelf, thinking: “Eye tracking and cerebral palsy? It won't work. I'll look at it some other time.” Besides, it only works with Windows, and I have a Mac. But foundations are wonderful organizations: they require reports.

So: Boot Camp, install, configure. Then I realized that this generation of eye trackers has learned to track the position of the head and use it to locate the eyes, at a very high frequency. Everything works, and you can shake your head as much as you like.

The software for this tracker is mostly for gaming: in some games you can mark enemies just by looking at them. All the bundled demos show off gaming features. But there is an open API for use in third-party programs.

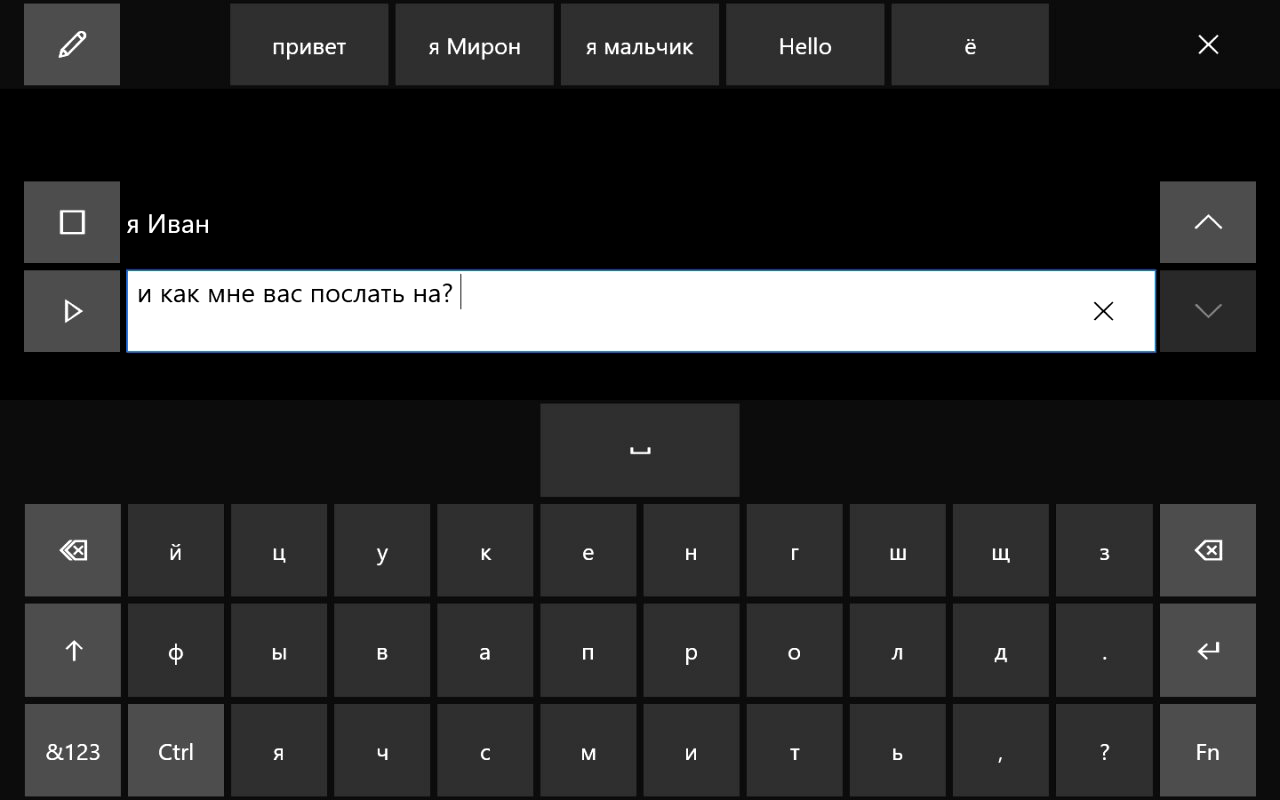

This opens up room for eye-controlled interfaces. These interfaces are extremely simple: a grid of buttons, where holding your gaze on a button triggers a click. Many developers have already made their mark here. Windows 10 itself has a built-in keyboard and mouse emulator for eye trackers. True, the Russian localization lacks the letters “Х” and “Б”, so don't even try asking for bread (“хлеб”)!
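The dwell-click principle described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not code from LINKa or from the Tobii API. Gaze samples are assumed to arrive as hypothetical `(timestamp, x, y)` tuples in screen pixels, the buttons form a uniform grid, and the 1-second dwell time is an arbitrary example value.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple

# Hypothetical gaze sample format: (timestamp in seconds, x, y) in screen pixels.
GazeSample = Tuple[float, float, float]
Cell = Tuple[int, int]


@dataclass
class Grid:
    """A uniform grid of buttons covering a width x height area."""
    rows: int
    cols: int
    width: float
    height: float

    def cell_at(self, x: float, y: float) -> Optional[Cell]:
        """Return (row, col) of the button under the point, or None if off-grid."""
        if not (0 <= x < self.width and 0 <= y < self.height):
            return None
        col = int(x * self.cols / self.width)
        row = int(y * self.rows / self.height)
        return row, col


def dwell_clicks(samples: Iterable[GazeSample], grid: Grid,
                 dwell_time: float = 1.0) -> List[Tuple[float, Cell]]:
    """Emit a click each time the gaze stays on one button for dwell_time seconds."""
    clicks: List[Tuple[float, Cell]] = []
    current: Optional[Cell] = None  # button currently under the gaze
    entered_at = 0.0                # when the gaze entered that button
    fired = False                   # whether this dwell already produced a click
    for t, x, y in samples:
        cell = grid.cell_at(x, y)
        if cell != current:
            # Gaze moved to another button (or off the grid): restart the timer.
            current, entered_at, fired = cell, t, False
        elif cell is not None and not fired and t - entered_at >= dwell_time:
            clicks.append((t, cell))
            fired = True  # the gaze must leave the button before it can click again
    return clicks
```

For example, on a 2x3 grid over a 600x400 area, looking at the top-left button from t=0.0 to t=1.5 produces one click at t=1.0. A quick glance shorter than `dwell_time` produces nothing. Real systems usually add smoothing of the noisy gaze signal and visual feedback of the dwell progress. Both are omitted here.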

No way!

There is a quite sensible keyboard, BB2K; its developer wrote about it on Habr. I personally really like Optikey, a well-localized keyboard from a UK developer. It has several modes, including pictograms for children who cannot write.

Well, if Optikey already exists, why write your own?

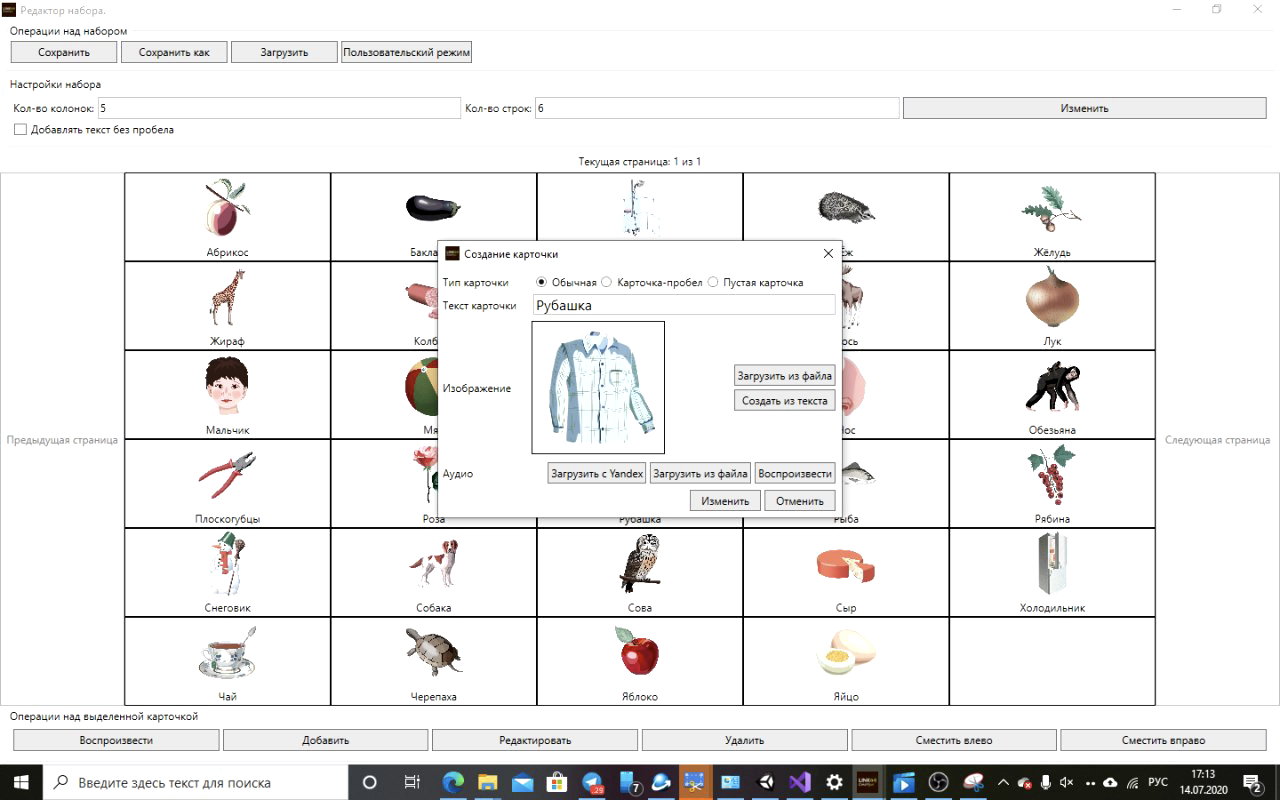

It's a fair question. As you may remember from my previous articles, specialists who work with people without speech need to be able to create content tailored to the person they support. They cannot just work with a ready-made block that has cards baked in, however good those cards are. This is because people without speech often still have to be taught to write. Or they have to learn that a drawing of a mug stands for the actual cup in the kitchen they drink from. And in Optikey, you have to create card sets through PowerPoint.

That is why LINKa is built on an idea I learned well at the school for children with disabilities: “Everything should be customizable.” This idea led to a very simple task. Make a grid of cards from which cards can be selected with the eyes, while keeping the selection method, the cards and the grid easy to customize. I wrote all this up as a requirements specification and found a C# programmer who knew WPF (better than I do). Meanwhile, Yury Dud transferred the money, and overall everything worked out.
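The “everything should be customizable” requirement maps naturally onto a data model where the grid size, the selection method and the cards are all plain data. The sketch below is purely illustrative. `Card`, `CardSet`, the `selection` values and the JSON layout are hypothetical names chosen here. This is not the actual .linka file format, which the article does not describe.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Card:
    title: str            # text shown (and possibly spoken) for the card
    image_path: str = ""  # hypothetical path to the card's picture


@dataclass
class CardSet:
    rows: int
    cols: int
    selection: str = "dwell"  # hypothetical values: "dwell", "gaze+button", "keyboard", ...
    dwell_time: float = 1.0   # seconds, used only by gaze-based selection
    cards: List[Card] = field(default_factory=list)

    def pages(self) -> List[List[Card]]:
        """Split the cards into pages that each fit one rows x cols grid."""
        size = self.rows * self.cols
        return [self.cards[i:i + size] for i in range(0, len(self.cards), size)]

    def to_json(self) -> str:
        # ensure_ascii=False keeps Cyrillic card titles readable in the file.
        return json.dumps(asdict(self), ensure_ascii=False)

    @staticmethod
    def from_json(text: str) -> "CardSet":
        data = json.loads(text)
        data["cards"] = [Card(**c) for c in data["cards"]]
        return CardSet(**data)
```

Keeping everything in data rather than code is what would let a specialist change the grid, swap the pictures or switch the selection method in an editor, without touching the program itself.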

What happened in the end?

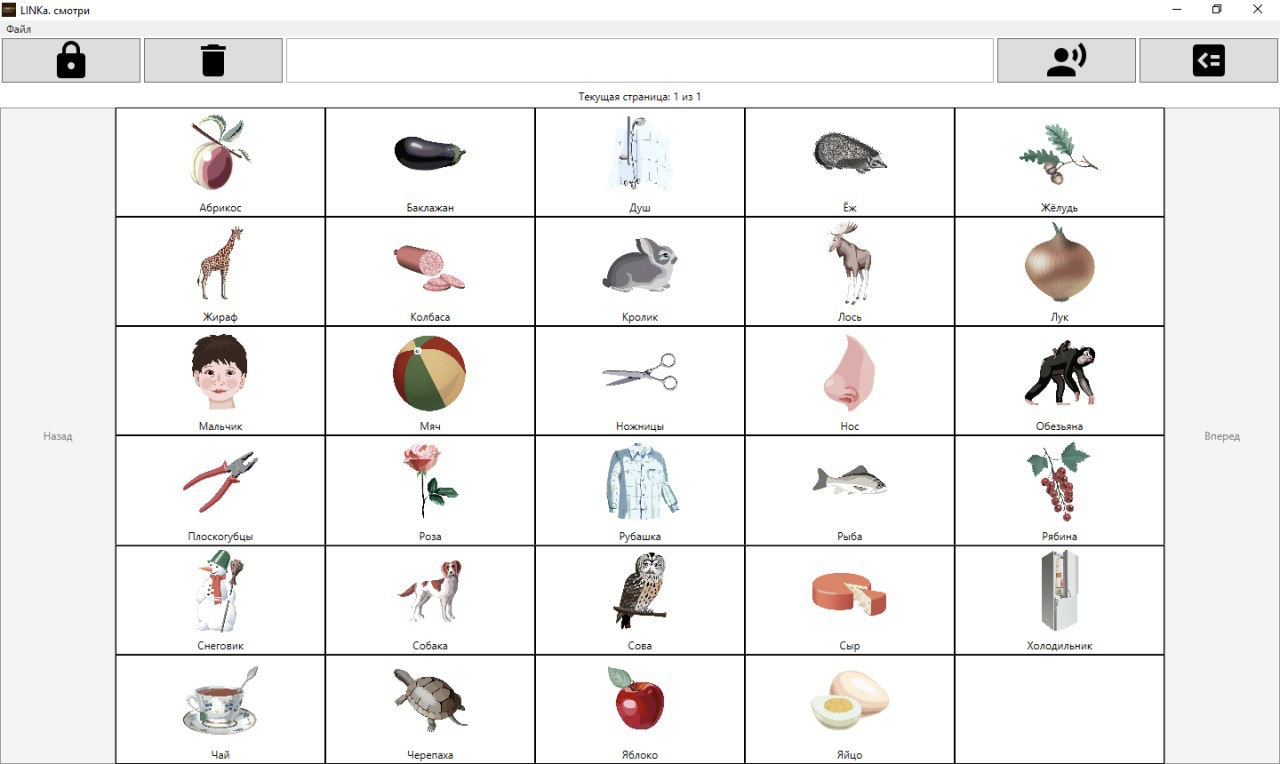

The result is a program that displays a set of cards from a .linka file as a grid. You can select cards with a Tobii eye tracker, a mouse, a keyboard or a gamepad. There is also a mode in which you pick a card with your eyes and confirm the choice with a button.
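One way to support several input devices, including the “look to highlight, press to confirm” mode, is to have each device adapter produce a small set of common events. The sketch below illustrates that idea under assumptions of its own. The event names `"gaze"`, `"move"` and `"press"` and the `GridCursor` class are hypothetical, not taken from the LINKa code.

```python
from typing import Iterable, List, Optional, Tuple

Cell = Tuple[int, int]
# Hypothetical normalized events, produced by per-device adapters:
#   ("gaze", (row, col) or None)  eye tracker reports the card being looked at
#   ("move", (d_row, d_col))      arrow keys or a gamepad direction
#   ("press", None)               any confirm button (key, mouse, gamepad)
Event = Tuple[str, object]


class GridCursor:
    def __init__(self, rows: int, cols: int):
        self.rows, self.cols = rows, cols
        self.current: Optional[Cell] = (0, 0)  # highlighted card, None if none

    def handle(self, event: Event) -> Optional[Cell]:
        """Update the highlight; return a card only when a press confirms it."""
        kind, payload = event
        if kind == "gaze":
            self.current = payload  # gaze only highlights, it never selects on its own
        elif kind == "move":
            r, c = self.current if self.current is not None else (0, 0)
            dr, dc = payload
            # Clamp so the highlight stays inside the grid.
            self.current = (min(max(r + dr, 0), self.rows - 1),
                            min(max(c + dc, 0), self.cols - 1))
        elif kind == "press":
            return self.current
        return None


def run(events: Iterable[Event], rows: int, cols: int) -> List[Cell]:
    """Feed events through a cursor and collect the confirmed selections."""
    cursor = GridCursor(rows, cols)
    selected: List[Cell] = []
    for event in events:
        cell = cursor.handle(event)
        if cell is not None:
            selected.append(cell)
    return selected
```

Pressing the button while looking away from the grid (gaze reports `None`) selects nothing. This is the point of the confirm mode: an accidental glance cannot trigger a card.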

The program contains an editor for .linka files.

→ You can download it for free here: linka.su/looks

So, what needs to be done?

The program is simple and compact, but I hope its flexibility will help establish communication in a wide variety of cases.

I have a few small ideas I would like to implement in the program, and I would be very happy if you could help. These tasks are described in this issue, but I will list some of them here and explain what they are about.

- Yandex TTS API.

- Make a system for selecting cards with one button.

Despite the magic of the eye tracker, I have met people for whom it did not work. For them, I would like to port the one-button card selection algorithm from the good old LINKa, and then stop supporting the old program.

- Make it possible to type in third-party programs.

- Localization into the languages of the CIS countries.
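For readers unfamiliar with one-button selection, a common technique is row-column scanning. The program highlights rows one after another, a press picks the highlighted row, and then the cards in that row are highlighted one by one until a second press picks a card. The sketch below simulates that technique in discrete time steps. It is a generic illustration. The original LINKa algorithm may differ in its details, such as timings, wrap-around or undo.

```python
from typing import Optional, Set, Tuple


def row_column_scan(rows: int, cols: int, presses: Set[int],
                    max_steps: int = 1000) -> Optional[Tuple[int, int]]:
    """Simulate one-button row-column scanning.

    Each time step highlights the next row (phase 1) or the next card in the
    chosen row (phase 2), wrapping around at the end. `presses` holds the step
    numbers at which the user presses the button. A press picks whatever is
    highlighted at that step. Returns the selected (row, col), or None.
    """
    row: Optional[int] = None  # chosen row, None while still scanning rows
    index = 0                  # position within the current scanning phase
    for step in range(max_steps):
        if row is None:
            if step in presses:
                row, index = index % rows, 0  # lock the row, start scanning its cards
                continue
        else:
            if step in presses:
                return row, index % cols
        index += 1
    return None
```

For example, on a 3x4 grid, pressing at step 1 picks row 1. Pressing again at step 4 picks the third card in that row, `(1, 2)`. In a real program each step would last a configurable interval, for instance one second, and would be driven by a timer rather than a loop.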

I will also be glad if you simply test the program and share your ideas.

→ GitHub

→ Patreon

Thanks!