One of the main features of Linkurious Enterprise is an easy-to-learn, easy-to-use graph visualization interface designed for laymen. In 2015, frustrated by the possibilities of existing JavaScript libraries for graph visualization, we started developing our own library - Ogma.

Ogma is a rendering and computational performance JS library aimed at rendering network structures. You may have seen how network structures are rendered using other JavaScript tools like D3.js or Sigma.js. We lacked the capabilities of these tools. It was important for us that the solution we used would have some specific capabilities so that it would meet certain performance requirements. We did not find either one or the other in third-party libraries. Therefore, we decided to develop our own library from scratch.

Task

Network structure visualization

The Ogma library was designed with the use of the most modern algorithms. All of its components, from the advanced WebGL-based rendering engine to the web workers used in its depths, have been aimed at providing the best network rendering performance available. Our goal was to make the library very interactive when performing long-term tasks, and so that the leading graph visualization algorithms implemented in it would work quickly and stably.

WebAssembly (Wasm) technology, from the moment it was first reported, has promised web developers a level of performance that compares favorably with what was previously available to them. At the same time, the developers themselves did not need to make excessive efforts to use the new technology. They just had to write code in some high-performance language they knew, which, after compilation, could be run in a browser.

After the Wasm technology developed a bit, we decided to try it out and conduct some performance tests before implementing it. In general, before jumping into the fast Wasm train without looking back, we decided to play it safe.

What attracted us to Wasm was that this technology could well solve our problems, using memory and processor resources more efficiently than JavaScript.

Our research

Our research began with a search for an algorithm aimed at working with graphs that could be easily ported to different languages using similar data structures.

We chose the n-body algorithm. It is often used as a baseline for comparing force graph visualization algorithms. The calculations carried out in accordance with this algorithm are the most resource-intensive part of the visualization systems. If this part of the work of such systems could be done more efficiently than before, this would have a very positive effect on all the force graph visualization algorithms implemented in Ogma.

Benchmark

There are lies, gross lies, and benchmarks.

Max De Marzi

Developing an “honest” benchmark is often an impossible task. The fact is that in an artificially created environment, it is difficult to reproduce scenarios typical of the real world. Creating an adequate environment for complex systems is always extremely difficult. Indeed, in a laboratory environment it is easy to control external factors, but in reality, many unexpected influences what the "performance" of a solution looks like.

In our case, the benchmark was aimed at solving a single, well-defined problem, to study the performance of the implementation of the n-body algorithm.

It is a clear and well-documented algorithm used by reputable organizations to measure performance.programming languages.

As with any fair test, we pre-defined some rules for the various languages we study:

- Different implementations of the algorithm should use similar code structures.

- It is forbidden to use multiple processes or multiple threads.

- The use of SIMD is prohibited .

- Only stable versions of compilers are tested. It is forbidden to use releases like nightly, beta, alpha, pre-alpha.

- Only the most recent compiler versions are used for each language.

After we decided on the rules, we could already proceed to the implementation of the algorithm. But, before doing this, we still had to choose the languages whose performance we will study.

JS competitors

Wasm is a binary format for instructions that run in a browser. Code written in various programming languages is compiled into this format. They say about Wasm code as human-readable code, but writing programs directly on Wasm is not a decision that a person of sane mind can make. As a result, we conducted a benchmark survey and selected the following candidates:

The n-body algorithm was implemented in these three languages. The performance of various implementations was compared to the performance of the base JavaScript implementation.

In each of the implementations of the algorithm, we used 1000 points and ran the algorithm with a different number of iterations. We measured the time it took to complete each program session.

The tests were carried out using the following software and hardware:

- NodeJS 12.9.1

- Chrome 79.0.3945.130 (Official Build) (64-bit)

- clang 10.0.0 - for the version of the algorithm implemented in the C language

- emcc 1.39.6 - frontend for calling the Emscripten compiler from the command line, an alternative to gcc / clang, as well as a linker

- cargo 1.40.0

- wasm-pack 0.8.1

- AssemblyScript 0.9.0

- MacOS 10.15.2

- Macbook Pro 2017 Retina

- Intel Dual Core i5 2,3 GHz, 8GB DDR3, 256GB SSD

As you can see, for the tests we chose not the fastest computer, but we are testing Wasm, that is, the code that will be executed in the context of the browser. And the browser, anyway, usually does not have access to all the processor cores available in the system and to all the RAM.

To make it more interesting, we created several versions of each algorithm implementation. At one point in the n-body system, they had 64-bit numerical representation of coordinates, at the other - 32-bit.

It is also worth noting that we used a "double" implementation of the algorithm in Rust. First, without using any Wasm tools, the "original", "unsafe" Rust implementation was written. Later, using wasm-pack, an additional, "safe" Rust implementation was created. It was expected that this implementation of the algorithm will be easier to integrate with JS, and that it will be able to better manage memory in Wasm.

Testing

We ran our experiments in two main environments. This is Node.js and browser (Chrome).

In both cases, benchmarks were performed using a warm script. Namely, garbage collection was not started before running tests. When we ran the garbage collection after running each test, it didn't seem to have much of an impact on the results.

Based on the source code written in AssemblyScript, the following was generated:

- Basic JS implementation of the algorithm.

- Wasm module.

- Asm.js module.

It is interesting to note that the asm.js module was not fully compatible with asm.js. We tried to add a "use asm" directive to the top of the module, but the browser didn't accept this optimization. Later we discovered that the binaryen compiler we used was not really trying to make the code fully compatible with asm.js. Instead, it was focused on forming some kind of efficient JS version of Wasm.

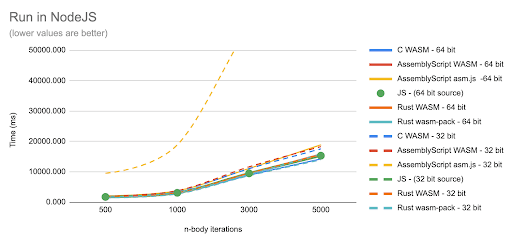

We first ran tests in Node.js.

Running the code in the Node.js environment

Then we measured the performance of the same code in the browser.

Running the Code in a Browser

We immediately noticed that the asm.js version of the code works slower than the other options. But these graphs do not allow a clear enough comparison of the results of various code variants with the basic JS implementation of the algorithm. Therefore, in order to better understand everything, we built a few more diagrams.

Differences between other implementations of the algorithm and JS-implementation (benchmark version with 64-bit coordinates of points)

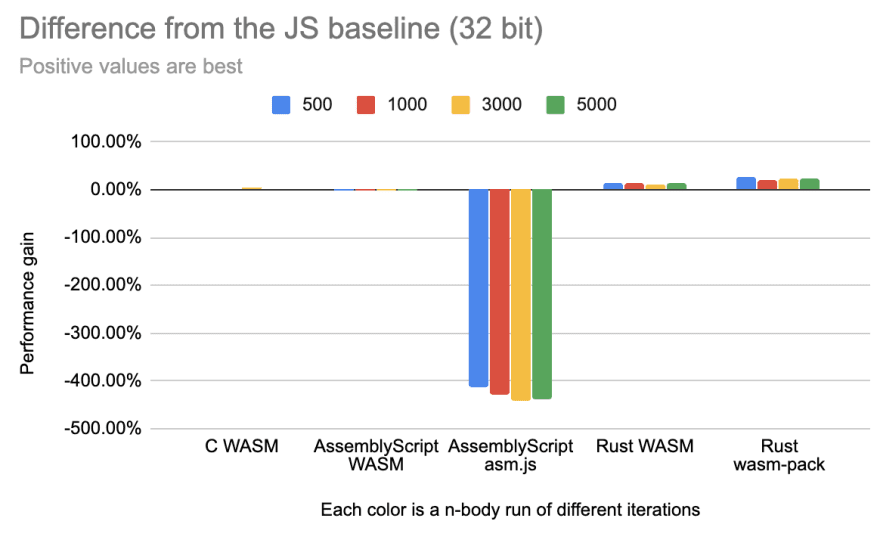

Differences between other implementations of the algorithm and JS-implementation (benchmark version with 32-bit point coordinates)

Benchmark versions with 64-bit and 32-bit point coordinates differ markedly. This might lead us to think that in JavaScript, numbers can be both. The fact is that the numbers in JS, in the implementation of the algorithm, taken as the comparison base, are always 64-bit, but compilers that convert code from other languages to Wasm work with such numbers in different ways.

In particular, this has a huge impact on the asm.js version of the test. Its version with 32-bit coordinates of points is very much inferior in performance to both the basic JS implementation and the asm.js version, which uses 64-bit numbers.

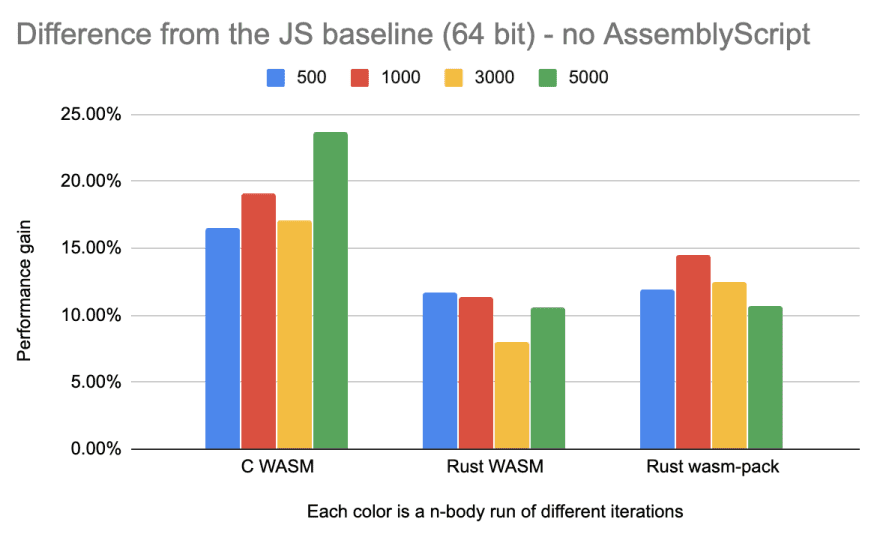

In the previous diagrams, it is difficult to understand how the performance of other code variants compares to the JS variant. This is because AssemblyScript's metrics are too different from the rest. In order to understand this, we built another diagram, removing the results of asm.js.

Differences between other implementations of the algorithm and JS-implementation (benchmark variant with 64-bit coordinates of points, without asm.js)

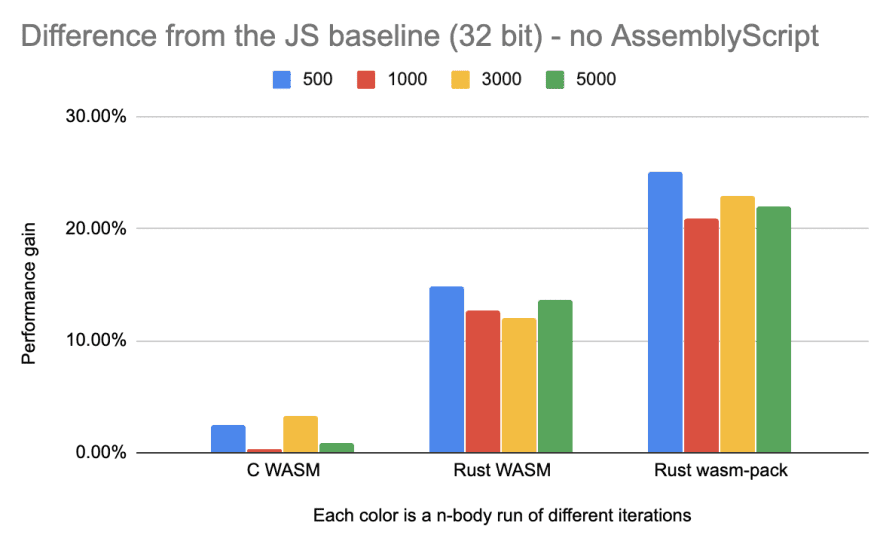

Differences between other implementations of the algorithm and JS-implementation (benchmark variant with 32-bit coordinates of points, without asm.js)

Different representations of numbers seem to have influenced other versions of the test. But they influenced in different ways. For example, the C variant, which used 32-bit numbers (floats), became slower than the C-variant, which used 64-bit numbers (double). Both Rust versions of the test with 32-bit numbers (f32) became faster than their versions with 64-bit numbers (f64).

Poor implementation of the algorithm?

Analysis of the above data may suggest the following thought. Since all tested Wasm assemblies are very close in performance to JavaScript implementations, is it possible that Wasm implementations only reflect the performance features of native algorithm implementations?

Comparing native implementations of an algorithm with a JavaScript implementation

Native versions of an algorithm implementation are always faster than a JavaScript implementation.

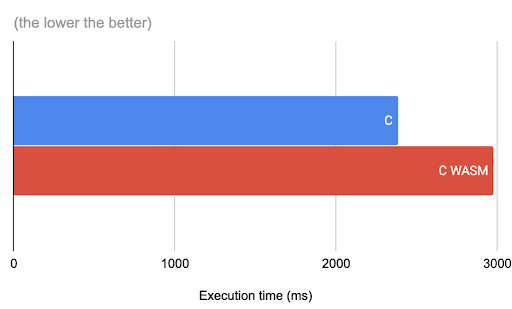

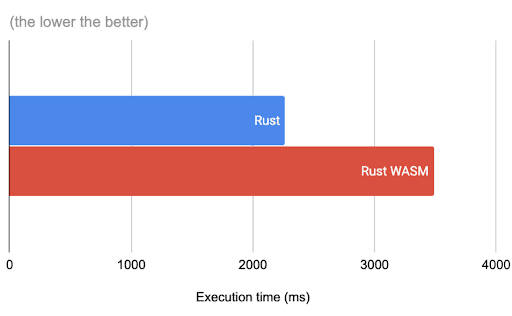

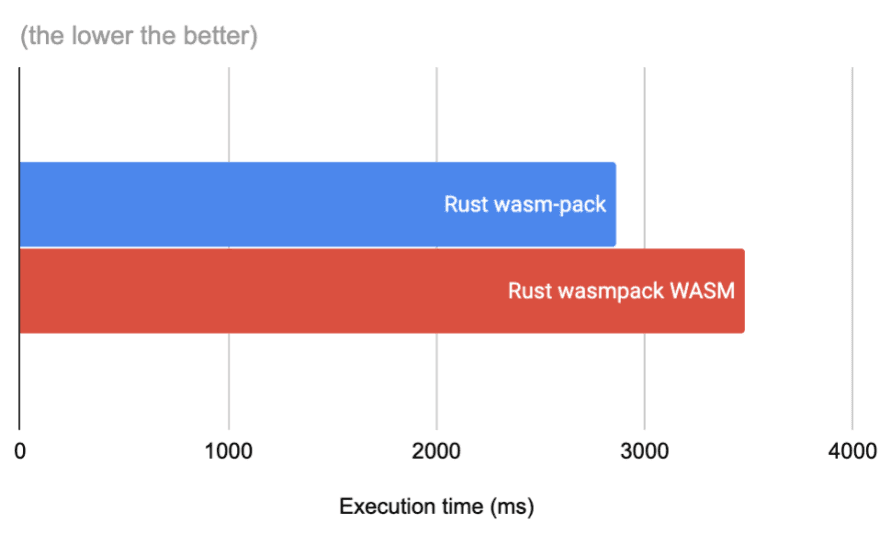

We also noticed that Wasm assemblies are slower than the native versions of the code used to create such assemblies. The performance difference is 20-50%. We found this out on a shortened version of the benchmark with 1000 iterations.

C-implementation and corresponding Wasm-assembly

Rust implementation and corresponding Wasm build

Rust implementation, which was created using wasm-pack, and the corresponding Wasm-assembly.

When measuring the execution time of the native code, the time required to load the program was taken into account, and in the case of Wasm variants, this time was not taken into account.

Outcome

On average, the performance gain of two Rust implementations of the algorithm, compared to its basic JS implementation, was 20%. This is probably good for the image of Rust, but it is too little performance gain compared to the effort it took to get it.

What conclusions can we draw from these tests? And here are some: thoughtful writing of JS code allows you to get a fairly high performance and does not require switching to other programming languages.

Learning new programming languages is always good. But there must be good reasons for learning new languages. Performance is often the “wrong” reason, as the high-level design architecture is more important for performance than compilers or micro-optimizations.

As an experiment, we changed JavaScript to TypeScript to write an implementation of the force graph visualization algorithm. As a result, we have improved the quality of the codebase, but not the performance. We specifically measured the performance, and it turned out that after the transition, it increased slightly, by 5%. Probably the reason is the code refactoring.

If you are interested in JavaScript development and performance, then you might be interested to watch this talk, which sounded similar results to those we got.

How do you approach the development of the "heavy" parts of web projects?