A still from South Park

We recognized him by his handwriting

Over the years JSOC has been operating, we have dealt with many different IT infrastructures and a wide range of customer wishes about how much of their IT landscape should be monitored. It often happens, for example, that a customer wants to monitor only the servers, depriving themselves of the opportunity to see what is happening around the monitored segment. This approach can lead to late detection of an attack, when most of the infrastructure is already compromised. While our existing customers do not run into such situations, on pilot projects this is a common story.

So, during one of the pilots, the customer was in the middle of rebuilding its IT landscape and wanted to see how the SOC would fit into its new infrastructure and processes. For various reasons beyond our control, we did not have a single network source connected, which

What happened? The first thing we saw was new accounts being added to the Domain Admins group. But another rule fired as well: the accounts had suspicious names, a pattern we had already encountered. An

Unfortunately, the first compromised machine was outside the scope of the connected sources, so the launch of the script itself could not be recorded. After the accounts were created, we captured a rule change on the local firewall that allowed a specific remote administration tool, followed by the creation of that tool's agent service. Incidentally, the customer's antivirus does not detect this tool, so we track it by the creation of the corresponding service and the launch of its process.
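To show how detection can work without the antivirus, here is a minimal Python sketch that covers the three signals above: a new Domain Admins member, a new firewall rule and the installation of a watchlisted service. It assumes the events have already been parsed into dictionaries. The event IDs (4728, 4946, 7045) and their field names are standard Windows ones. The watchlisted service name `exampleremoteagent` is hypothetical, because the article does not name the tool.

```python
from dataclasses import dataclass, field

# Standard Windows event IDs (4728 and 4946 come from the Security log,
# 7045 from the System log).
EVT_GLOBAL_GROUP_MEMBER_ADDED = 4728  # member added to a security-enabled global group
EVT_FIREWALL_RULE_ADDED = 4946        # rule added to the Windows Firewall exception list
EVT_SERVICE_INSTALLED = 7045          # a service was installed in the system

# Hypothetical watchlist: service names of remote administration tools
# that the antivirus does not flag. The article does not name the tool.
WATCHED_SERVICES = {"exampleremoteagent"}


@dataclass
class Event:
    host: str
    event_id: int
    fields: dict = field(default_factory=dict)


def alerts(events):
    """Yield (host, reason) for every event that matches one of the rules."""
    for ev in events:
        if (ev.event_id == EVT_GLOBAL_GROUP_MEMBER_ADDED
                and ev.fields.get("TargetUserName", "").lower() == "domain admins"):
            yield ev.host, f"added to Domain Admins: {ev.fields.get('MemberName')}"
        elif ev.event_id == EVT_FIREWALL_RULE_ADDED:
            # Noisy on its own; worth correlating with the events around it.
            yield ev.host, f"firewall rule added: {ev.fields.get('RuleName')}"
        elif (ev.event_id == EVT_SERVICE_INSTALLED
                and ev.fields.get("ServiceName", "").lower() in WATCHED_SERVICES):
            yield ev.host, f"watched service installed: {ev.fields['ServiceName']}"
```

The rule matches the service name rather than a file hash, so it keeps working even when the antivirus stays silent about the tool itself.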

The icing on the cake was the attacker distributing a legitimate encryption utility to the compromised hosts, although he did not manage to upload it to all of them. The attacker was “kicked out” of the infrastructure 23 minutes after this incident first triggered.

Naturally, both the customer and we wanted to get a complete picture of the incident and find the attacker's entry point. Based on our experience, we requested logs from the network equipment, but unfortunately its logging level did not allow us to draw any conclusions. However, after the administrators gave us the configuration of the edge network equipment, we saw the following:

```
ip nat inside source static tcp <private address> 22 <public address> 9922
ip nat inside source static tcp <private address> 3389 <public address> 33899
```

From this we concluded that the entry point was most likely one of the servers published through these NAT rules. SSH (port 22) and RDP (port 3389) were reachable from the internet, merely moved to the external ports 9922 and 33899. We analyzed the logs of these servers and saw a successful login under the admin account, a Mimikatz launch and then the launch of a script that created the domain administrators. Following this incident, the customer carried out a complete review of its firewall rules, passwords and security policies and identified several more flaws in its infrastructure. It also gained a more systematic understanding of why its organization needs a SOC.
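This check is easy to automate. Below is a minimal Python sketch that flags every static NAT entry whose internal port is SSH or RDP, whatever external port it is mapped to. It assumes the configuration uses the plain Cisco IOS form `ip nat inside source static tcp <local-ip> <local-port> <global-ip> <global-port>`. Entries that name an interface instead of a global IP are ignored. The function name `exposed_mgmt` is hypothetical.

```python
import re

# Management ports that should never be published to the internet.
MGMT_PORTS = {22: "SSH", 3389: "RDP"}

# Cisco IOS static PAT syntax handled here:
# ip nat inside source static <proto> <local-ip> <local-port> <global-ip> <global-port>
NAT_RE = re.compile(r"^ip nat inside source static (tcp|udp) (\S+) (\d+) (\S+) (\d+)")


def exposed_mgmt(config_text):
    """Return (service, local_ip, global_ip, global_port) for every static NAT
    entry that publishes a management port, whatever the external port is."""
    findings = []
    for line in config_text.splitlines():
        m = NAT_RE.match(line.strip())
        if not m:
            continue
        proto, local_ip, local_port, global_ip, global_port = m.groups()
        service = MGMT_PORTS.get(int(local_port))
        if proto == "tcp" and service:
            findings.append((service, local_ip, global_ip, int(global_port)))
    return findings
```

Fed the two lines above with real addresses filled in, the sketch would report both entries: SSH behind 9922 and RDP behind 33899. Moving a service to a nonstandard port does not change what is actually exposed.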

Remote work and home routers: a hacker's paradise

Obviously, now that companies have moved to remote work, monitoring events on end devices has become much more difficult. There are two key reasons:

- a large number of employees use personal devices for work;

- logs from a device reach us only while it is connected to the VPN.

It is equally obvious that even experienced attackers have become interested in home networks, since they are now an ideal entry point into the corporate perimeter.

At one of our customers, 90% of employees had switched to remote work, and all of them had domain laptops. We could therefore keep monitoring end devices, subject of course to the second point above. And it was precisely this point that worked against us.

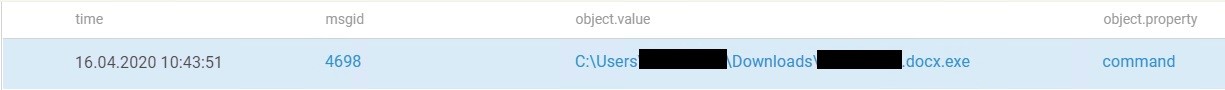

During self-isolation, one of the users did not connect to the VPN for almost an entire day. At the end of the day he still needed access to corporate resources, so he connected to the VPN. We received the logs from his work machine and saw something strange. A suspicious task had been created in Task Scheduler: it launched a certain file every Wednesday at 17:00. We started digging.

The traces led to two .doc files: one of them created the task, and the other was the file the task executed. The user had downloaded both from Google Drive.
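A task like this can be spotted in Windows Security event 4698 ("A scheduled task was created"), which carries the task's XML definition. The Python sketch below parses that XML with the standard library. It reports the weekly trigger days, the start boundary and any action that points straight at a document-type file. The sample task, its path and the extension list are hypothetical. A real task might instead wrap the file in an interpreter, in which case the `Arguments` element would need checking too.

```python
import xml.etree.ElementTree as ET

# Namespace used by Windows Task Scheduler task definitions.
NS = {"t": "http://schemas.microsoft.com/windows/2004/02/mit/task"}

# Hypothetical list of extensions that should never be a task's executable.
DOC_EXTENSIONS = (".doc", ".docx", ".xls", ".xlsx", ".pdf")

# Hypothetical task definition resembling the one in this incident.
SAMPLE_TASK = r"""<Task version="1.2" xmlns="http://schemas.microsoft.com/windows/2004/02/mit/task">
  <Triggers>
    <CalendarTrigger>
      <StartBoundary>2020-04-01T17:00:00</StartBoundary>
      <ScheduleByWeek>
        <DaysOfWeek><Wednesday /></DaysOfWeek>
        <WeeksInterval>1</WeeksInterval>
      </ScheduleByWeek>
    </CalendarTrigger>
  </Triggers>
  <Actions Context="Author">
    <Exec><Command>C:\Users\user\Downloads\report.doc</Command></Exec>
  </Actions>
</Task>"""


def inspect_task(task_xml):
    """Summarize the triggers and actions of a scheduled task definition."""
    root = ET.fromstring(task_xml)
    commands = [e.text.strip()
                for e in root.findall(".//t:Actions/t:Exec/t:Command", NS) if e.text]
    # Child tags look like "{namespace}Wednesday"; keep only the local name.
    weekly_days = [d.tag.split("}")[1]
                   for d in root.findall(".//t:ScheduleByWeek/t:DaysOfWeek/*", NS)]
    starts = [e.text for e in root.findall(".//t:CalendarTrigger/t:StartBoundary", NS)]
    doc_like = [c for c in commands if c.strip('"').lower().endswith(DOC_EXTENSIONS)]
    return {"commands": commands, "weekly_days": weekly_days,
            "start": starts, "doc_like_commands": doc_like}
```

A task whose executable has a document extension deserves an alert on its own, whatever its schedule.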

At this stage, the customer's security service joined the search and began an internal investigation. It turned out that the user had received an email in his personal mailbox containing a link to Google Drive, from which the documents were downloaded. Eventually we got to the user's router, and of course its login and password were admin/admin (how could it be otherwise?). But the most interesting thing was in the router's DNS server settings: they pointed to an IP address in one of the European countries. We looked this address up on VirusTotal, and most of the sources flagged it as malicious. After the investigation was over, the customer sent us the two files for analysis. We saw that the file launched by the task began to "walk" through various directories and pull data from them.
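Both router findings, factory-default credentials and a DNS server nobody expects, are easy to check mechanically once the router settings have been exported. Below is a minimal Python sketch. The credential list, the approved resolver (a documentation address standing in for the ISP's DNS) and the function name `audit_router` are all hypothetical. How the settings are obtained depends on the router model.

```python
import ipaddress

# Hypothetical list of factory-default login/password pairs.
DEFAULT_CREDENTIALS = {("admin", "admin"), ("admin", "password"), ("admin", "")}

# Hypothetical allowlist of resolvers the router is expected to use
# (192.0.2.53 is a documentation address standing in for the ISP's DNS).
APPROVED_DNS = {ipaddress.ip_address("192.0.2.53")}


def audit_router(login, password, dns_servers):
    """Return a list of findings for one router's exported settings."""
    findings = []
    if (login, password) in DEFAULT_CREDENTIALS:
        findings.append("default credentials")
    for server in dns_servers:
        # Anything not explicitly approved is reported, public or private.
        if ipaddress.ip_address(server) not in APPROVED_DNS:
            findings.append(f"unexpected DNS server {server}")
    return findings
```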

The chronology of the incident was as follows. The attacker gained access to the user's router and changed its settings, specifying his own server as the DNS server. For some time he watched his "victim", then sent an email to the user's personal mailbox. It was at this step that we detected him, so he never got deep into the corporate infrastructure.

Insiders hack too

At the initial stage of working with an outsourced SOC, we always recommend connecting event sources in stages. This lets the customer debug processes on their side, clearly define areas of responsibility and generally get used to this format. So, at one of our new customers, we first connected basic sources such as domain controllers, firewalls, proxies, various information security tools, mail, DNS and DHCP servers, and several other servers. We also offered to collect local logs from the head-office administrators' machines, but the customer said this was unnecessary for now because he trusted his admins. This is where our story actually begins.

One day, events stopped coming in. The customer told us that a large-scale DDoS attack had allegedly brought his data center down and that he was now restoring it. This immediately raised a lot of questions, since the DDoS protection system was connected to our monitoring.

The analyst immediately began digging into that system's logs but found nothing suspicious there: everything looked normal. Then we looked at the network logs and noticed the strange behavior of the load balancer that spread incoming traffic across two servers. Instead of spreading the load, the balancer sent all of it to one server until that server was "choked", and only then redirected the flow to the second node. Even more interesting, as soon as both servers went down, the traffic went further and brought down everything else. While the restoration work was underway, the customer found out who had messed up. This, it would seem, is the end of the incident: it has nothing to do with information security and is simply associated with
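The balancer anomaly described above shows up clearly in per-node request counts: one node receives nearly all the traffic until it fails. Below is a minimal Python sketch. It assumes the two backends are meant to share load roughly equally and that request counts per time interval have already been extracted from the network logs. The 80% share and 1,000-request thresholds are arbitrary illustrative values.

```python
def skewed_intervals(samples, max_share=0.8, min_total=1000):
    """samples: list of (timestamp, requests_node_a, requests_node_b).

    Return the timestamps where one node receives more than max_share
    of the combined traffic.
    """
    flagged = []
    for ts, a, b in samples:
        total = a + b
        if total < min_total:
            continue  # too little traffic to judge the distribution
        if max(a, b) / total > max_share:
            flagged.append(ts)
    return flagged
```

With weighted balancing the expected share differs from 50%, and `max_share` would need to be set from the configured weights.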

Interestingly, this administrator had been the subject of our investigations a couple of weeks before the incident: he had connected from the local network to an external host on port 22. At the time, the incident was marked as legitimate, because admins are not prohibited from using external resources to automate their own work or to test new equipment settings. The link between the data center outage and the connection to the external host might never have been noticed, but there was another incident. One of the servers in the test segment was connecting to the same internet host the admin had contacted earlier, and the customer marked this incident as legitimate too. After reviewing the administrator's activity, we saw constant requests to that host from the test segment and asked the customer to check it.
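What gave the admin away was two separately "legitimate" incidents pointing at the same external host. Below is a minimal Python sketch of that correlation. It assumes connection records have been reduced to (source IP, destination IP, destination port) tuples and that the internal address space is 10.0.0.0/8, a hypothetical value. It reports every external destination contacted from at least two distinct internal hosts.

```python
import ipaddress
from collections import defaultdict

# Hypothetical internal address space; replace with the real ranges.
INTERNAL_NETS = [ipaddress.ip_network("10.0.0.0/8")]


def is_internal(addr):
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in INTERNAL_NETS)


def shared_external_destinations(connections, min_sources=2):
    """connections: iterable of (src_ip, dst_ip, dst_port) tuples.

    Return {external_dst_ip: sorted internal sources} for every external
    destination contacted from at least min_sources distinct internal hosts.
    """
    sources_by_dst = defaultdict(set)
    for src, dst, _port in connections:
        if is_internal(src) and not is_internal(dst):
            sources_by_dst[dst].add(src)
    return {dst: sorted(srcs) for dst, srcs in sources_by_dst.items()
            if len(srcs) >= min_sources}
```

On real traffic this would also match popular services. In practice it makes sense to restrict it to rare destinations or to hosts that already appeared in closed incidents.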

And here is the denouement: that host was running a web server that reproduced part of the functionality of the customer's main website. It turned out that the admin planned to redirect some of the incoming traffic to his fake site in order to collect user data for his own purposes.

Outcome

Even though it is already 2020, many organizations still ignore the basic truths of information security, and the irresponsibility of their own staff can lead to disastrous consequences.

With that in mind, here are some tips:

- Never expose RDP and SSH to the internet, even behind nonstandard ports: it does not help.

- Set the highest possible logging level to speed up intrusion detection.

- Threat Hunting . TTPs , . .

, Solar JSOC (artkild)