Hardware memory architecture

Modern hardware memory architecture differs somewhat from the internal Java memory model. To understand how the Java memory model works, it is important to understand the hardware architecture it runs on. This section describes the general hardware memory architecture, and the next section describes how the Java memory model works with it.

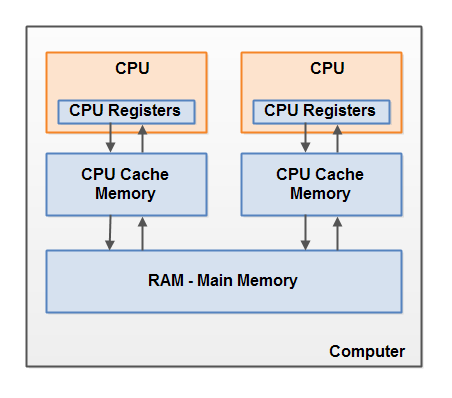

Here is a simplified diagram of the hardware architecture of a modern computer:

A modern computer often has 2 or more processors, and some of these processors may have multiple cores. On such computers, several threads can run simultaneously. Each processor (translator's note: hereinafter, "processor" presumably means a processor core or a single-core processor) can run one thread at any given time. This means that if your Java application is multithreaded, one thread per processor may be running simultaneously inside your application.

Each processor contains a set of registers, which are essentially in-processor memory. The processor can perform operations on data held in its registers much faster than on data held in the computer's main memory (RAM), because it can access these registers much faster.

Each CPU may also have a cache memory layer; in fact, most modern processors do. A processor can access its cache much faster than main memory, but typically not as fast as its internal registers. The speed of cache access therefore lies somewhere between the speed of the internal registers and that of main memory. Some processors have multiple cache layers, but this detail is not needed to understand how the Java memory model interacts with hardware memory. What matters is that processors can have some level of cache memory.

The computer also contains a main memory area (RAM). All processors can access main memory, and the main memory area is usually much larger than the processors' cache memories.

Typically, when a processor needs to access main memory, it reads part of main memory into its cache. It may also read part of the cache into its internal registers and then perform operations on the data there. When the processor needs to write a result back to main memory, it flushes the value from its internal register to the cache, and at some point the cache flushes it back to main memory.

Values stored in the cache are typically flushed back to main memory when the processor needs to store something else in the cache. The cache can write data to part of its memory, and flush part of its memory, at a time; it does not have to read or write the full cache each time it is updated. Typically, the cache is updated in smaller memory blocks called "cache lines". One or more cache lines may be read into the cache, and one or more cache lines may be flushed back to main memory.

Combining Java memory model and hardware memory architecture

As already mentioned, the Java memory model and the hardware memory architecture are different. The hardware memory architecture does not distinguish between thread stacks and the heap: on the hardware, both the thread stacks and the heap are located in main memory. Parts of the thread stacks and the heap may sometimes be present in CPU caches and in internal CPU registers. This is shown in the diagram:

When objects and variables can be stored in various memory areas of the computer, certain problems may arise. The two main ones are:

• Visibility of thread updates (writes) to shared variables.

• Race conditions when reading, checking and writing shared variables.

Both of these problems will be explained in the following sections.

Shared Object Visibility

If two or more threads share an object without proper use of either volatile declarations or synchronization, then updates to the shared object made by one thread may not be visible to other threads.

Imagine that the shared object is initially stored in main memory. A thread running on one CPU reads the shared object into that CPU's cache and makes a change to it there. As long as the CPU cache has not been flushed back to main memory, the changed version of the shared object is not visible to threads running on other CPUs. This way, each thread may end up with its own copy of the shared object, each copy sitting in a different CPU cache.

The following diagram sketches this situation. One thread, running on the left CPU, copies the shared object into its CPU cache and changes its count variable to 2. This change is not visible to other threads running on the right CPU, because the update to count has not yet been flushed back to main memory.

To solve this problem, you can declare the variable volatile. The volatile keyword ensures that a given variable is read directly from main memory and is always written back to main memory when updated.
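A minimal sketch of the volatile fix, assuming a simple stop flag; the class VolatileFlagDemo, the field running and the helper stopsWorker are hypothetical names chosen for illustration. A worker thread spins while running is true, and the main thread then clears the flag. Because the field is volatile, the write is guaranteed to become visible to the worker, so its loop ends. Without volatile, the JIT compiler may keep the value in a register and the worker might never stop.

```java
public class VolatileFlagDemo {
    // Hypothetical flag for this sketch. Declared volatile so that the main
    // thread's write is guaranteed to become visible to the worker thread.
    static volatile boolean running = true;

    // Starts a worker that spins until 'running' becomes false, lets it run
    // for runMillis, clears the flag, and reports whether the worker exited.
    static boolean stopsWorker(long runMillis) throws InterruptedException {
        running = true;
        Thread worker = new Thread(() -> {
            while (running) {
                // busy-wait: 'running' is re-read on every iteration
            }
        });
        worker.setDaemon(true); // never keep the JVM alive if something goes wrong
        worker.start();
        Thread.sleep(runMillis);
        running = false;        // volatile write, seen by the worker's next read
        worker.join(5_000);     // bounded wait so the demo cannot hang
        return !worker.isAlive();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("worker stopped: " + stopsWorker(100));
    }
}
```

Note that volatile addresses visibility only; it does not make a read-modify-write such as count++ atomic, which is the problem described in the next section.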

Race condition

If two or more threads share an object, and more than one thread updates variables in that shared object, race conditions may occur.

Imagine that thread A reads the variable count of a shared object into its CPU cache. Imagine, too, that thread B does the same, but into a different CPU cache. Now thread A adds 1 to count, and thread B does the same. Now count has been incremented twice, once in each CPU cache.

If these increments had been carried out sequentially, count would have been incremented twice, and the original value + 2 would have been written back to main memory.

However, the two increments were carried out concurrently without proper synchronization. Regardless of which thread (A or B) writes its updated version of count back to main memory, the new value will be only 1 higher than the original value, despite the two increments.
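The interleaving described above can be reproduced deterministically in a small sketch. The class LostUpdateDemo and its helper runTwoIncrements are hypothetical; a java.util.concurrent.CyclicBarrier stands in for the timing that happens by chance on real hardware, forcing both threads to read count before either one writes it back. A local variable plays the role of the CPU cache or register holding the stale copy.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;

public class LostUpdateDemo {
    // Shared variable, deliberately neither synchronized nor volatile.
    static int count = 0;

    // Runs two "increment" threads with a forced interleaving and returns count.
    static int runTwoIncrements() throws InterruptedException {
        count = 0;
        // Both threads must reach the barrier (i.e. finish reading) before
        // either one may continue and write. This forces the bad interleaving.
        CyclicBarrier bothHaveRead = new CyclicBarrier(2);

        Runnable increment = () -> {
            int local = count;            // read: like loading count into a cache/register
            try {
                bothHaveRead.await();     // wait until the other thread has read as well
            } catch (InterruptedException | BrokenBarrierException e) {
                throw new IllegalStateException(e);
            }
            count = local + 1;            // write back a value based on a stale read
        };

        Thread a = new Thread(increment, "A");
        Thread b = new Thread(increment, "B");
        a.start();
        b.start();
        a.join();
        b.join();
        return count;
    }

    public static void main(String[] args) throws InterruptedException {
        // Two increments were performed, but one update is lost.
        System.out.println("count = " + runTwoIncrements()); // prints "count = 1"
    }
}
```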

This diagram illustrates the occurrence of the race condition problem described above:

To solve this problem, you can use a Java synchronized block. A synchronized block guarantees that only one thread can enter a given critical section of code at any given time. Synchronized blocks also guarantee that all variables accessed inside the block are read from main memory, and that when the thread exits the block, all updated variables are flushed back to main memory again, regardless of whether the variable is declared volatile or not. ...
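A minimal sketch of the synchronized fix, assuming a counter guarded by a private lock object; SynchronizedCounterDemo and runTwoThreads are hypothetical names for illustration. Because every access to count happens inside a synchronized block on the same lock, the two threads can no longer interleave their read-modify-write steps, and no updates are lost.

```java
public class SynchronizedCounterDemo {
    private final Object lock = new Object(); // monitor guarding 'count'
    private int count = 0;                    // only accessed while holding 'lock'

    void increment() {
        synchronized (lock) {   // only one thread at a time can be inside this block
            count++;            // the read-modify-write can no longer interleave
        }
    }

    int get() {
        synchronized (lock) {   // synchronized read: sees the latest value written under the lock
            return count;
        }
    }

    // Hypothetical helper: two threads each call increment() perThread times.
    static int runTwoThreads(int perThread) throws InterruptedException {
        SynchronizedCounterDemo counter = new SynchronizedCounterDemo();
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                counter.increment();
            }
        };
        Thread a = new Thread(task, "A");
        Thread b = new Thread(task, "B");
        a.start();
        b.start();
        a.join();
        b.join();
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("count = " + runTwoThreads(100_000)); // prints "count = 200000"
    }
}
```

Synchronizing on a dedicated private lock object rather than on this is a common design choice: it prevents outside code from accidentally synchronizing on the same monitor.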