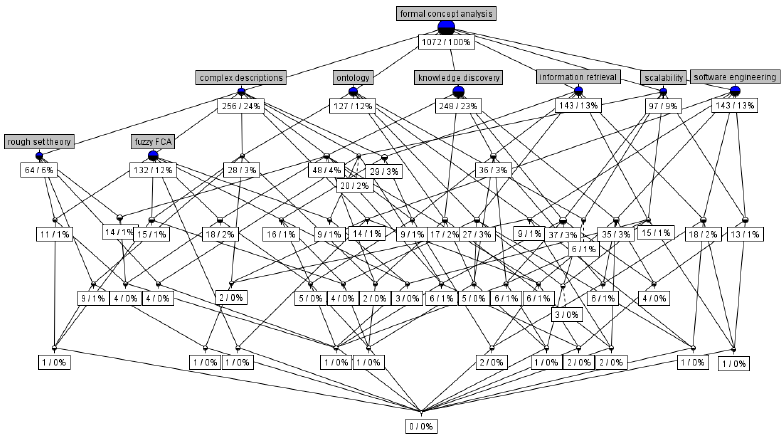

This is the third article in a series of papers (links to the first and second papers) describing a machine learning system based on lattice theory, entitled "VKF-system". It uses a structural (lattice-theoretic) approach to the presentation of training examples and their fragments, considered as the causes of the target property. The system calculates these fragments as similarities between some subsets of the training examples. There is an algebraic theory of such representations called Formal Concept Analysis (AFP).

However, the described system uses probabilistic algorithms to eliminate the disadvantages of the unlimited approach. Details below ...

Introduction

We will start by demonstrating our approach as applied to a school problem.

, .

, : ( ) .

, , ( ).

— .

, :

" " (A),

" " (B),

" " (C),

" " (D),

" " (E).

.

| A | B | C | D | E | ||

|---|---|---|---|---|---|---|

| 1 | 1 | 0 | 1 | 1 | 1 | |

| 1 | 0 | 1 | 0 | 0 | 1 | |

| 0 | 1 | 0 | 1 | 1 | 0 | |

| 0 | 0 | 1 | 0 | 1 | 0 | |

| ? | 1 | 0 | 1 | 0 | 1 |

( ) ( ) .

⟨{,},{E}⟩,

( , ), — .

{E} {A,C,E}, , .. . -. ( ), , .

:

⟨{,},{D}⟩,

, .

.., .., .. (.). . 2: , M.: URSS, 2020, 238 . ISBN 978-5-382-01977-2

, " -", {D} {A,C,D,E} ().

1.

- . , " - ". . ( ) , .

(= ) — (G,M,I), G M — , I⊆G×M. G M , . , gIm ⟨g,m⟩∈I, , g m.

A⊆G B⊆M

A′={m∈M|∀g∈A(gIm)},B′={g∈G|∀m∈B(gIm)};

A′ — , A, B′ — , B. (⋅)′:2G→2M (⋅)′:2M→2G (= ) (G,M,I).

(= ) (G,M,I) ⟨A,B⟩, A⊆G, B⊆M, A′=B B′=A. A ⟨A,B⟩ (=) , B (=). (G,M,I) L(G,M,I).

, L(G,M,I)

⟨A1,B1⟩∨⟨A2,B2⟩=⟨(A1∪A2)″,B1∩B2⟩,⟨A1,B1⟩∧⟨A2,B2⟩=⟨A1∩A2,(B1∪B2)″⟩...

: ⟨A,B⟩∈L(G,M,I), g∈G m∈M

CbO(⟨A,B⟩,g)=⟨(A∪{g})″,B∩{g}′⟩,CbO(⟨A,B⟩,m)=⟨A∩{m}′,(B∪{m})″⟩.

CbO, "--" (Close-by-One (CbO)), L(G,M,I).

CbO

(G,M,I) — , ⟨A1,B1⟩,⟨A2,B2⟩∈L(G,M,I), g∈G m∈M.

⟨A1,B1⟩≤⟨A2,B2⟩⇒CbO(⟨A1,B1⟩,g)≤CbO(⟨A2,B2⟩,g),⟨A1,B1⟩≤⟨A2,B2⟩⇒CbO(⟨A1,B1⟩,g)≤CbO(⟨A2,B2⟩,g)...

2.

, :

( ) .

(NP-).

.

'' , .

1 , ( ):

| MG | m1 | m2 | ... | mn |

|---|---|---|---|---|

| g1 | 0 | 1 | ... | 1 |

| g2 | 1 | 0 | ... | 1 |

| ⋮ | ⋮ | ⋮ | ⋱ | ⋮ |

| gn | 1 | 1 | ... | 0 |

, ⟨G∖{gi1,...,gik},{mi1,...,mik}⟩ . 2n .

, , n=32, 128 , 2n 237 , .. 16 !

2 . .. (- ).

3 4 . , "" -, . — , , "" -

1-e-a-a⋅e-a⋅[1-e-c⋅√a],

( ... ) p=√a/n→0, - m=c⋅√n→∞, n→∞.

, 1-e-a-a⋅e-a , , a >1.

3.

- . ( - ).

, , , .

, , (-).

input: (G,M,I), CbO( , )

result: <A,B>

X=G U M;

A = M'; B = M;

C = G; D = G';

while (A!=C || B!= D) {

x X;

<A,B> = CbO(<A,B>,x);

<C,D> = CbO(<C,D>,x);

}. , ( )

(n+k)22k⋅n=2+(n-k)22k⋅n≥2

, n — , k — .

, .. .

4. -

, , .

(G,M,I). O - ( -).

T .

, ⟨A,B⟩∈L(G,M,I). - VKF-hypothesis ⟨A,B⟩, - o∈O, B⊆{o}′.

input: N -

result: S

while (i<N) {

<A,B> (G,M,I);

hasObstacle = false;

for (o in O) {

if (B {o}') hasObstacle = true;

}

if (hasObstacle == false) {

S = S U {<A,B>};

i = i+1;

}

}B⊆{o}′ B ⟨A,B⟩ ( ) - o.

, -.

(, "--") , - .

.

input: T

input: S -

for (x in T) {

target(x) = false;

for (<A,B> in S) {

if (B is a part of {x}') target(x) = true;

}

}, - .

x ε-, - ⟨A,B⟩ B⊆{x}′ ε.

N , .

n=|M|, ε>0 1>δ>0 S -

N≥2⋅(n+1)-2⋅log2δε

>1-δ , ε- $x$ - ⟨A,B⟩∈S, .. B⊆{x}′.

. .. . .. .

, . "-" . .. .

.

Discrete features will again require some AFP technique. Continuous features will require logistic regression, entropy principles for dividing ranges into subintervals, and a convex hull representation of the intervals whose similarity is calculated.

The author is pleased to have this opportunity to thank his colleagues and students for their support and incentives.