Machine learning is increasingly moving from hand-designed models to automatically optimized pipelines built with tools such as H2O, TPOT, and auto-sklearn. These libraries, along with methods such as random search, aim to simplify model selection and hyperparameter tuning by finding the best model for a dataset with little or no manual intervention. However, feature engineering, arguably the more valuable aspect of a machine learning pipeline, remains almost entirely a human effort.

Feature engineering, also known as feature creation, is the process of constructing new features from existing data to train a machine learning model. This step can matter more than the choice of model, because a machine learning algorithm learns only from the data we give it, so creating features that are relevant to the task is essential (see the excellent paper "A Few Useful Things to Know About Machine Learning").

Feature engineering is typically a lengthy manual process that relies on domain knowledge, intuition, and data manipulation. It can be extremely tedious, and the resulting features are limited by both human subjectivity and time. Automated feature engineering aims to help the data scientist by automatically generating many candidate features from a dataset, from which the best can be selected and used for training.

In this article, we will walk through an example of automated feature engineering with the featuretools Python library. We will use a sample dataset to show the basics (stay tuned for future posts using real-world data). The complete code for this article is available on GitHub.

Feature Engineering Basics

Feature engineering means building additional features out of existing data, which is often spread across multiple related tables. It requires extracting the relevant information from the data and getting it into a single table that can then be used to train a machine learning model.

Building features is very time-consuming, because each new feature usually takes several steps to create, especially when it uses information from more than one table. We can group feature-creation operations into two categories: transformations and aggregations. Let's look at a few examples to see these concepts in action.

Transformationacts on a single table (in Python terms, a table is just Pandas

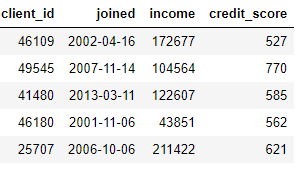

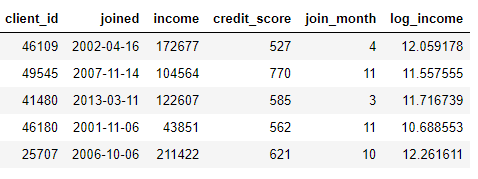

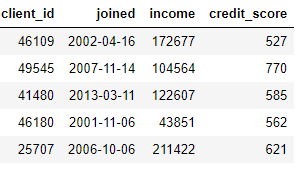

DataFrame), creating new features from one or more existing columns. For example, if we have the customer table below,

we can create features by finding the month from a column

joinedor taking the natural logarithm from a column income. These are both transformations because they only use information from one table.
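In Pandas, the two transformations just described can be sketched as follows. The small clients table and its values are hypothetical and were made up for illustration; only the column names joined and income come from the text.

```python
import numpy as np
import pandas as pd

# Hypothetical toy version of the clients table (values made up for illustration)
clients = pd.DataFrame({
    'client_id': [1, 2, 3],
    'joined': pd.to_datetime(['2002-04-16', '2007-11-14', '2013-03-11']),
    'income': [172677, 104564, 122607],
})

# Transformation 1: month in which each client joined
clients['join_month'] = clients['joined'].dt.month

# Transformation 2: natural logarithm of income
clients['log_income'] = np.log(clients['income'])

print(clients[['client_id', 'join_month', 'log_income']])
```

Both new columns are computed row by row from the same table. That is what makes them transformations rather than aggregations.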

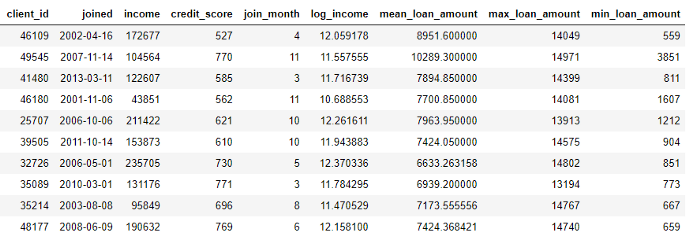

Aggregations, on the other hand, are performed across tables. They use a one-to-many relationship to group observations and then calculate statistics. For example, suppose we have another table with information about client loans, where each client may have multiple loans. We can then calculate statistics such as the average, maximum, and minimum loan amount for each client.

This process involves grouping the loans table by client, calculating the aggregations, and then merging the results with the client data. Here is how we could do it in Python with Pandas:

```python
import pandas as pd

# Group loans by client id and calculate mean, max, min of loans
stats = loans.groupby('client_id')['loan_amount'].agg(['mean', 'max', 'min'])
stats.columns = ['mean_loan_amount', 'max_loan_amount', 'min_loan_amount']

# Merge with the clients dataframe
stats = clients.merge(stats, left_on='client_id', right_index=True, how='left')

stats.head(10)
```

These operations are not difficult by themselves. However, with hundreds of variables spread across dozens of tables, doing this by hand is not feasible. Ideally, we want a solution that can automatically perform transformations and aggregations across multiple tables and combine the resulting data into a single table. Pandas is a great resource, but there is only so much data manipulation we want to do by hand! (For more on manual feature engineering, see the excellent Python Data Science Handbook.)
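Below is a minimal sketch, in plain Pandas, of what automating the loans example could look like. It uses a hypothetical helper, aggregate_child, that applies a list of aggregation functions to every numeric column of a child table and merges the results into the parent. The helper and the toy data are assumptions for illustration and are not part of featuretools.

```python
import pandas as pd

def aggregate_child(parent, child, key, funcs=('mean', 'max', 'min')):
    """Aggregate every numeric column of `child` by `key` and merge into `parent`."""
    # Numeric columns other than the linking key
    numeric = child.select_dtypes('number').columns.drop(key, errors='ignore')
    stats = child.groupby(key)[list(numeric)].agg(list(funcs))
    # Flatten the (column, function) MultiIndex into names like 'loan_amount_mean'
    stats.columns = [f'{col}_{func}' for col, func in stats.columns]
    return parent.merge(stats, left_on=key, right_index=True, how='left')

# Invented toy data: client 3 has no loans
clients = pd.DataFrame({'client_id': [1, 2, 3]})
loans = pd.DataFrame({
    'client_id': [1, 1, 2],
    'loan_amount': [1000.0, 3000.0, 500.0],
    'rate': [2.0, 4.0, 3.0],
})

result = aggregate_child(clients, loans, 'client_id')
print(result)
```

This still handles only one relationship and one level of aggregation. Chaining it across several tables by hand is exactly the bookkeeping we would rather hand off to a library.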

Featuretools

Fortunately, featuretools is exactly the solution we are looking for. This open-source Python library automatically creates many features from a set of related tables. Featuretools is based on a method known as "Deep Feature Synthesis", which sounds a lot more impressive than it actually is (the name comes from stacking multiple features, not from any use of deep learning!).

Deep Feature Synthesis stacks multiple transformation and aggregation operations (called feature primitives in featuretools terminology) to create features from data spread across many tables. Like most ideas in machine learning, it is a complex method built on simple concepts. By learning one building block at a time, we can form a good understanding of this powerful technique.

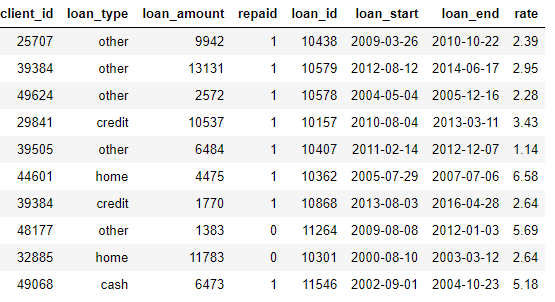

First, let's take a look at our example data. We already saw part of the dataset above, and the complete collection of tables is as follows:

- clients: basic information about clients at a credit union. Each client has only one row in this dataframe.

- loans: loans made to clients. Each loan has only one row in this dataframe, but a client may have multiple loans.

- payments: payments on loans. Each payment has only one row, but each loan will have multiple payments.
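To make this structure concrete, here is a hypothetical miniature version of the three tables, with all values invented. The code checks the properties just described: client_id is unique in clients and loan_id is unique in loans, while the linking columns repeat in the child tables.

```python
import pandas as pd

# Hypothetical miniature versions of the three tables (values invented)
clients = pd.DataFrame({
    'client_id': [1, 2],
    'joined': pd.to_datetime(['2010-01-05', '2012-06-20']),
    'income': [50000, 72000],
})
loans = pd.DataFrame({
    'loan_id': [10, 11, 12],
    'client_id': [1, 1, 2],
    'loan_amount': [1000.0, 2500.0, 4000.0],
})
payments = pd.DataFrame({
    'loan_id': [10, 10, 11, 12, 12, 12],
    'payment_amount': [100.0, 150.0, 300.0, 500.0, 400.0, 600.0],
    'missed': [0, 1, 0, 0, 0, 1],
})

# One row per client and one row per loan...
assert clients['client_id'].is_unique
assert loans['loan_id'].is_unique

# ...but a client can have several loans, and a loan several payments
print(loans['client_id'].value_counts().max())     # most loans held by one client
print(payments['loan_id'].value_counts().max())    # most payments on one loan
```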

If we have a machine learning task, such as predicting whether a client will repay a future loan, we want to combine all the information about each client into a single table. The tables are linked through the client_id and loan_id variables, and we could use a series of transformations and aggregations to do this by hand. However, we will shortly see that we can use featuretools to automate the process instead.
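Done by hand, combining the three tables takes two stacked aggregations. First we summarize payments for each loan, and then we summarize those loan-level values for each client. The sketch below does this with Pandas on invented toy data; the output feature names are our own choice.

```python
import pandas as pd

# Invented toy data with the same linking columns as the article's tables
clients = pd.DataFrame({'client_id': [1, 2, 3]})
loans = pd.DataFrame({'loan_id': [10, 11, 12], 'client_id': [1, 1, 2],
                      'loan_amount': [1000.0, 2500.0, 4000.0]})
payments = pd.DataFrame({'loan_id': [10, 10, 11, 12, 12],
                         'payment_amount': [100.0, 200.0, 300.0, 500.0, 700.0]})

# Step 1: aggregate payments up to their parent table, loans
per_loan = payments.groupby('loan_id')['payment_amount'].mean().rename('mean_payment')
loans = loans.merge(per_loan, left_on='loan_id', right_index=True, how='left')

# Step 2: aggregate loans (now including the payment feature) up to clients
per_client = loans.groupby('client_id').agg(
    mean_loan_amount=('loan_amount', 'mean'),
    max_mean_payment=('mean_payment', 'max'),
)
clients = clients.merge(per_client, left_on='client_id', right_index=True, how='left')
print(clients)
```

Every additional column, table, or statistic multiplies this bookkeeping. That is the motivation for automating it.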

Entities and EntitySets

The first two concepts of featuretools are entities and entitysets. An entity is simply a table (or a DataFrame, if you think in Pandas). An EntitySet is a collection of tables and the relationships between them. Think of an entityset as just another Python data structure, with its own methods and attributes.

We can create an empty entityset in featuretools as follows:

```python
import featuretools as ft

# Create new entityset
es = ft.EntitySet(id='clients')
```

Now we need to add entities. Each entity must have an index, which is a column whose elements are all unique: each value in the index may appear only once in the table. The index of the clients dataframe is client_id, because each client has only one row in that dataframe. We add an entity that already has an index to the entityset with the following syntax:

# Create an entity from the client dataframe

# This dataframe already has an index and a time index

es = es.entity_from_dataframe(entity_id = 'clients', dataframe = clients,

index = 'client_id', time_index = 'joined')The data frame

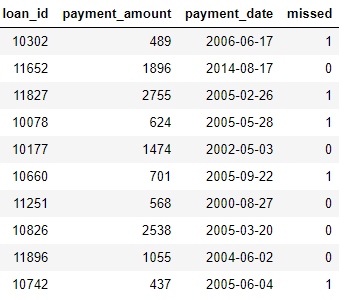

loansalso has a unique index loan_id, and the syntax for adding it to an entity set is the same as for clients. However, there is no unique index for the payment data frame. When we add this entity to the entity set, we need to pass a parameter make_index = Trueand specify the index name. Additionally, while featuretools will automatically infer the data type of each column in an entity, we can override this by passing a dictionary of column types to the parameter variable_types.
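In plain Pandas terms, the effect of these two options can be approximated as shown below: adding a column of unique integers to serve as the index, and marking missed as categorical. This is only an analogy on invented data. It does not reproduce what featuretools does internally.

```python
import pandas as pd

# Invented payments data: no column is unique, so there is no natural index
payments = pd.DataFrame({
    'loan_id': [10, 10, 11],
    'payment_amount': [100.0, 100.0, 300.0],
    'missed': [0, 1, 0],
})

# Roughly what make_index=True with index='payment_id' achieves:
# a new column of unique integers that can serve as the index
payments.insert(0, 'payment_id', list(range(len(payments))))
assert payments['payment_id'].is_unique

# 'missed' takes only two values, so treat it as categorical rather than numeric
payments['missed'] = payments['missed'].astype('category')
print(payments.dtypes)
```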

# Create an entity from the payments dataframe

# This does not yet have a unique index

es = es.entity_from_dataframe(entity_id = 'payments',

dataframe = payments,

variable_types = {'missed': ft.variable_types.Categorical},

make_index = True,

index = 'payment_id',

time_index = 'payment_date')For this dataframe, even though it

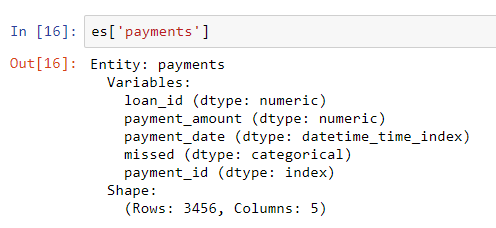

missedis an integer, it is not a numeric variable as it can only take 2 discrete values, so we tell featuretools to treat it as a categorical variable. After adding the data frames to the entity set, we examine any of them:

The column types were inferred correctly with the specified revision. Next, we need to indicate how the tables in the entity set are related.

Relationships between tables

The best way to think about a relationship between two tables is the parent-child analogy. This is a one-to-many relationship: each parent can have multiple children. In terms of tables, the parent table has one row for each parent, but the child table may have multiple rows corresponding to multiple children of the same parent.

For example, in our dataset, the clients dataframe is the parent of the loans dataframe. Each client has only one row in clients but may have multiple rows in loans. Likewise, loans is the parent of payments, because each loan will have multiple payments. Parents are linked to their children by a shared variable. When we perform an aggregation, we group the child table by the parent variable and calculate statistics across the children of each parent.
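A relationship only makes sense if the parent variable is unique in the parent table and every child row points at an existing parent. The sketch below checks both conditions with Pandas on invented data. It then performs the kind of aggregation just described, grouping the child table by the parent variable. The helper name is_valid_relationship is hypothetical.

```python
import pandas as pd

def is_valid_relationship(parent, child, key):
    """True if `key` is unique in the parent and every child key has a parent."""
    return parent[key].is_unique and child[key].isin(parent[key]).all()

clients = pd.DataFrame({'client_id': [1, 2, 3]})
loans = pd.DataFrame({'loan_id': [10, 11, 12], 'client_id': [1, 1, 2],
                      'loan_amount': [1000.0, 2500.0, 4000.0]})

assert is_valid_relationship(clients, loans, 'client_id')

# Aggregation across the relationship: group children by the parent variable
n_loans = loans.groupby('client_id').size()
# Clients without loans (client 3) get 0 rather than a missing value
n_loans = n_loans.reindex(clients['client_id'], fill_value=0)
print(n_loans)
```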

To formalize a relationship in featuretools, we only need to specify the variable that links the two tables together. The clients and loans tables are linked through client_id, and loans and payments are linked through loan_id. The syntax for creating a relationship and adding it to the entityset is shown below:

# Relationship between clients and previous loans

r_client_previous = ft.Relationship(es['clients']['client_id'],

es['loans']['client_id'])

# Add the relationship to the entity set

es = es.add_relationship(r_client_previous)

# Relationship between previous loans and previous payments

r_payments = ft.Relationship(es['loans']['loan_id'],

es['payments']['loan_id'])

# Add the relationship to the entity set

es = es.add_relationship(r_payments)

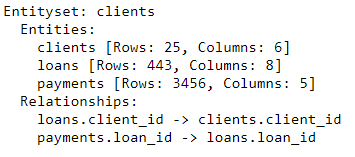

es

The entityset now contains three entities (tables) and the relationships that link them together. With the entities added and the relationships formalized, our entityset is complete and we are ready to create features.

Feature primitives

Before we can dive into deep feature synthesis, we need to understand feature primitives. We already know what they are; we have just been calling them by different names! These are simply the basic operations we use to build new features:

- Aggregations: operations performed across a parent-child (one-to-many) relationship that group by the parent and calculate statistics for the children. An example is grouping the loans table by client_id and finding the maximum loan amount for each client.

- Transformations: operations performed on a single table, applied to one or more columns. Examples include the difference between two columns in the same table or the absolute value of a column.
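To make the two kinds of primitive concrete, here are some hypothetical plain-Python stand-ins. An aggregation maps many child values to one number per parent, while a transformation works on values within a single table. These are simplified illustrations, not featuretools' implementations. For example, the LAST stand-in here takes its ordering from an explicit list of dates.

```python
from datetime import date
from math import fsum

# Aggregation primitives: many child values -> one value per parent
def agg_mean(values):
    return fsum(values) / len(values)

def agg_percent_true(flags):
    return sum(bool(f) for f in flags) / len(flags)

def agg_last(values, dates):
    """Value with the latest date (hypothetical stand-in for LAST)."""
    return max(zip(dates, values))[1]

# Transformation primitives: operate on values from a single table
def trans_month(d):
    return d.month

def trans_subtract(a, b):
    return a - b

# Payments on one loan (invented)
amounts = [100.0, 250.0, 400.0]
dates = [date(2014, 1, 5), date(2014, 3, 5), date(2014, 2, 5)]
missed = [0, 1, 0]

print(agg_mean(amounts))           # 250.0
print(agg_last(amounts, dates))    # 250.0 (the March payment is the latest)
print(agg_percent_true(missed))
print(trans_month(dates[0]), trans_subtract(400.0, 100.0))
```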

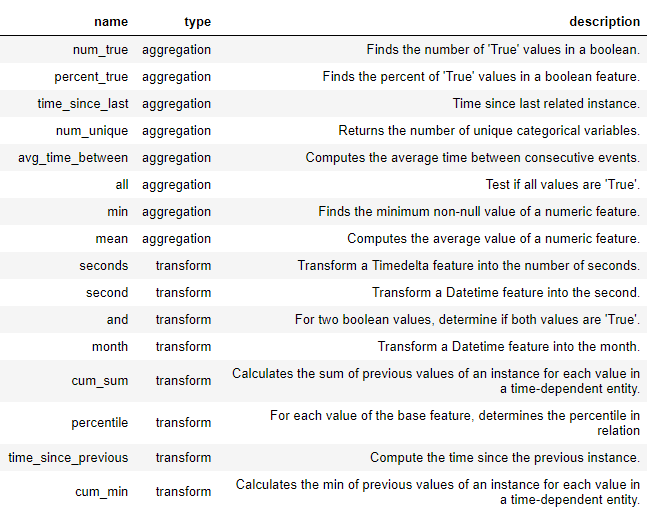

New features are created in featuretools using these primitives, either by themselves or as multiple primitives. Below is a list of some of the primitives in featuretools (we can also define custom primitives ):

To make features with specified primitives, we use the ft.dfs function (short for deep feature synthesis). We pass in the entityset; the target_entity, which is the table to which we want to add the features; the selected trans_primitives (transformations); and the agg_primitives (aggregations):

# Create new features using specified primitives

features, feature_names = ft.dfs(entityset = es, target_entity = 'clients',

agg_primitives = ['mean', 'max', 'percent_true', 'last'],

trans_primitives = ['years', 'month', 'subtract', 'divide'])The result is a dataframe of new features for each client (because we made clients

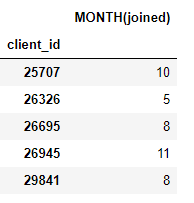

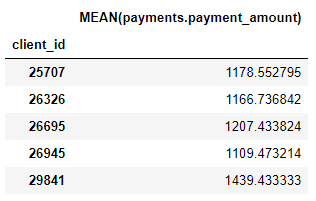

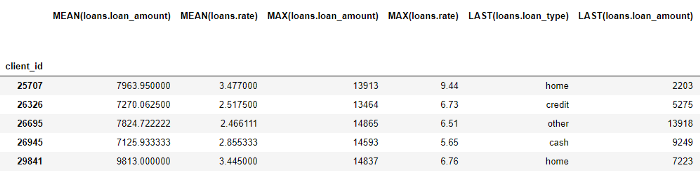

target_entity). For example, we have a month in which each client joined, which is a transformation primitive:

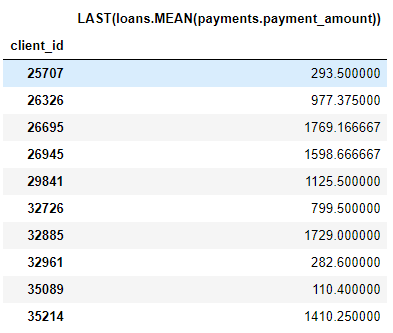

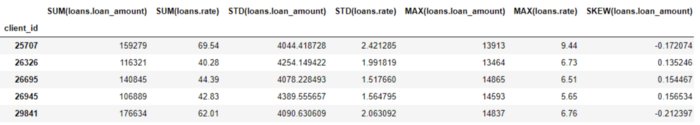

We also have a number of features built with aggregation primitives, such as the average payment amount for each client:

Even though we have only specified a few primitives, featuretools has created many new features by combining and stacking these primitives.

The complete data frame contains 793 columns of new features!

Deep Feature Synthesis

We now have everything we need to understand deep feature synthesis (dfs). In fact, we already performed dfs in the previous function call! A deep feature is simply a feature made by stacking multiple primitives, and dfs is the name of the process that creates those features. The depth of a deep feature is the number of primitives required to make the feature.

For example, the MEAN(payments.payment_amount) column is a deep feature with a depth of 1, because it was created with a single aggregation. A feature with a depth of 2 is LAST(loans.MEAN(payments.payment_amount)). It is made by stacking two aggregations: LAST (most recent) on top of MEAN. This represents the average payment of the most recent loan for each client.
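The depth-2 feature can be reproduced by hand to see what stacking means. First we take the MEAN of payment_amount for each loan (depth 1). Then, for each client, we take that value from the loan with the latest start date (LAST on top). The sketch uses invented data, and the loan_start column used for ordering is an assumption, since the loans table is not shown in full here.

```python
import pandas as pd

loans = pd.DataFrame({
    'loan_id': [10, 11, 12],
    'client_id': [1, 1, 2],
    # Hypothetical column used to decide which loan is the most recent
    'loan_start': pd.to_datetime(['2013-01-01', '2015-06-01', '2014-03-01']),
})
payments = pd.DataFrame({
    'loan_id': [10, 10, 11, 11, 12],
    'payment_amount': [100.0, 300.0, 50.0, 150.0, 80.0],
})

# Depth 1: MEAN(payments.payment_amount) for each loan
loans['MEAN(payments.payment_amount)'] = loans['loan_id'].map(
    payments.groupby('loan_id')['payment_amount'].mean())

# Depth 2: LAST over each client's loans, ordered by start date
feature = (loans.sort_values('loan_start')
                .groupby('client_id')['MEAN(payments.payment_amount)']
                .last()
                .rename('LAST(loans.MEAN(payments.payment_amount))'))
print(feature)
```

Client 1's most recent loan is loan 11, so the feature takes that loan's mean payment (100.0) rather than loan 10's (200.0).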

We can stack features to any depth we want, but in practice I have never gone beyond a depth of 2. Beyond that point, the features become difficult to interpret, but I encourage anyone interested to try "going deeper".
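The sketch below shows how quickly features multiply with depth. It enumerates feature names for the clients table by stacking aggregation primitives along the path from clients through loans to payments. This is a simplified, hypothetical model of stacking that uses aggregations only and a short, assumed list of columns. It is not featuretools' actual algorithm, although the names imitate the MEAN(...) notation used in the text.

```python
from itertools import product

AGGS = ['MEAN', 'MAX', 'LAST']
LOAN_COLUMNS = ['loan_amount', 'rate']   # hypothetical numeric loan columns
PAYMENT_COLUMNS = ['payment_amount']     # hypothetical numeric payment column

def client_features(max_depth):
    """Names of stacked aggregation features for the clients table."""
    names = []
    # Depth 1: aggregate a raw loan column over each client's loans
    names += [f'{a}(loans.{c})' for a, c in product(AGGS, LOAN_COLUMNS)]
    if max_depth >= 2:
        # Depth 2: aggregate, per client, a loan-level aggregate of payments
        inner = [f'{a}(payments.{c})' for a, c in product(AGGS, PAYMENT_COLUMNS)]
        names += [f'{a}(loans.{f})' for a, f in product(AGGS, inner)]
    return names

print(len(client_features(1)))   # 6
print(len(client_features(2)))   # 15
print(client_features(2)[-1])    # LAST(loans.LAST(payments.payment_amount))
```

Each extra level multiplies the number of candidates by the number of primitives, which is part of why features past depth 2 get hard to interpret.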

We don't have to specify the primitives manually. Instead, we can let featuretools choose features for us automatically. To do this, we use the same ft.dfs function call but do not pass in any primitives:

```python
# Perform deep feature synthesis without specifying primitives
features, feature_names = ft.dfs(entityset=es, target_entity='clients',
                                 max_depth=2)

features.head()
```

Featuretools has built many new features for us. This process creates new features automatically, but it will not replace the data scientist, because we still have to decide what to do with all of those features. For example, if our goal is to predict whether a client will repay a loan, we might look for the features most relevant to that outcome. Moreover, if we have domain knowledge, we can use it to choose specific feature primitives or to perform deep feature synthesis with seed features.

Next steps

Automated feature engineering has solved one problem but created another: too many features. Although it is difficult to say which of these features will be important before fitting a model, it is likely that not all of them will be relevant to the task we want to train our model on. Moreover, too many features can degrade model performance, because the less useful features crowd out the more important ones.

The problem of too many features is known as the curse of dimensionality. As the number of features (the dimensionality of the data) grows, it becomes harder for a model to learn the mapping between features and targets. In fact, the amount of data needed for a model to perform well scales exponentially with the number of features.
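A back-of-the-envelope illustration of this exponential growth follows. Suppose each feature is split into 10 bins and we want at least 5 training examples in every cell of the resulting grid. Then d features require 5 * 10^d samples. The bin count and the per-cell target are arbitrary assumptions chosen only to make the arithmetic concrete.

```python
BINS_PER_FEATURE = 10   # assumption: each feature discretized into 10 bins
SAMPLES_PER_CELL = 5    # assumption: examples wanted in every grid cell

def samples_needed(n_features):
    """Samples needed to put SAMPLES_PER_CELL examples in every grid cell."""
    return SAMPLES_PER_CELL * BINS_PER_FEATURE ** n_features

for d in (1, 2, 3, 10):
    print(d, samples_needed(d))
# 1 50
# 2 500
# 3 5000
# 10 50000000000
```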

The curse of dimensionality is combated with feature reduction (also known as feature selection), which is the process of removing irrelevant features. This can take many forms: principal component analysis (PCA), SelectKBest, using feature importances from a model, or auto-encoding with deep neural networks. However, feature reduction is a topic for another article. For now, we know that we can use featuretools to create many features from many tables with minimal effort!
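As one simple illustration of feature reduction, the sketch below uses a technique that is not among those listed above. It drops constant columns and then removes one column from every pair whose absolute Pearson correlation exceeds a threshold. The 0.95 threshold, the helper name drop_redundant, and the data are all hypothetical.

```python
import numpy as np
import pandas as pd

def drop_redundant(features, threshold=0.95):
    """Drop constant columns, then one column of each highly correlated pair."""
    # Constant columns carry no information
    features = features.loc[:, features.nunique() > 1]
    corr = features.corr().abs()
    # Keep only the upper triangle so each pair is considered once
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    to_drop = [col for col in upper.columns if (upper[col] > threshold).any()]
    return features.drop(columns=to_drop)

rng = np.random.default_rng(0)
x = rng.normal(size=200)
features = pd.DataFrame({
    'a': x,
    'a_scaled': 2 * x + 1,        # perfectly correlated with 'a'
    'b': rng.normal(size=200),    # independent noise
    'constant': np.ones(200),
})
print(drop_redundant(features).columns.tolist())  # ['a', 'b']
```

A filter like this ignores the target entirely. Methods such as SelectKBest or model-based importances take the prediction task into account.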

Conclusions

Like many topics in machine learning, automated feature engineering with featuretools is a complex concept built on simple ideas. Using the concepts of entitysets, entities, and relationships, featuretools can perform deep feature synthesis to create new features. Deep feature synthesis, in turn, stacks feature primitives to build new features from multiple tables. These primitives are aggregations, which act across one-to-many relationships between tables, and transformations, which are functions applied to one or more columns of a single table.
