While developing yet another router, we tested its network performance with a useful open-source tool: the Cisco TRex traffic generator. What is this tool? How do you use it? And how can it help development engineers? The answers are below.

1. What is Cisco TRex

TRex is an open-source software traffic generator. It runs on standard Intel processors using DPDK and supports both stateful and stateless modes. It is comparatively simple and fully scalable.

English-language documentation for the tool is available on the TRex website.

TRex can generate different types of traffic and analyze the data when it is received. It works at both the MAC and the IP level. You can set the packet size and the number of packets, and you can control the data transfer rate.

TRex runs in a Linux environment.

An important distinguishing feature of TRex is its use of DPDK, which lets it bypass the performance bottlenecks of the Linux network stack. DPDK (Data Plane Development Kit) is a set of libraries and drivers for fast packet processing. It takes the Linux network stack out of the packet path and interacts directly with the network device.

DPDK turns a general-purpose processor into a packet-forwarding server, which can remove the need for expensive dedicated switches and routers. However, DPDK works only with specific network adapters. The list of supported hardware is published on the DPDK website. The most popular platform is Intel, supported through the Linux drivers e1000, ixgbe, i40e, ice, fm10k, ipn3ke, ifc and igc.

Note also that running a TRex server at 10 Gbps requires a multi-core processor with at least 4 cores. An Intel CPU that supports simultaneous multithreading (Hyper-Threading) is preferable.
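A quick calculation shows why a single core is not enough. The sketch below is a back-of-the-envelope estimate, not a TRex formula. It assumes standard Ethernet framing, in which each frame (including its 4-byte FCS) carries 20 extra bytes of preamble, start delimiter and inter-packet gap on the wire. The 3 GHz clock speed is a hypothetical value chosen only for illustration.

```python
# Back-of-the-envelope packet-rate estimate for an Ethernet link.
# Assumption: each frame costs frame_bytes (including the 4-byte FCS)
# plus 20 bytes of L1 overhead (7-byte preamble, 1-byte SFD, 12-byte IPG).
L1_OVERHEAD_BYTES = 20


def line_rate_pps(link_bps: float, frame_bytes: int) -> float:
    """Maximum frames per second a link can carry for a given frame size."""
    return link_bps / ((frame_bytes + L1_OVERHEAD_BYTES) * 8)


def cycles_per_packet(cpu_hz: float, pps: float) -> float:
    """CPU cycles available per packet if a single core handled every packet."""
    return cpu_hz / pps


if __name__ == "__main__":
    for size in (64, 512, 1518):
        pps = line_rate_pps(10e9, size)
        # 3 GHz is a hypothetical clock speed used only for illustration.
        print(f"{size:>5} B frames: {pps:,.0f} pps, "
              f"{cycles_per_packet(3e9, pps):,.0f} cycles/packet on one 3 GHz core")
```

For 64-byte frames this works out to about 14.88 million packets per second at 10 Gbps. That leaves roughly 200 CPU cycles per packet on a single 3 GHz core, which helps explain why the generation work is spread across several cores.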

2. How to get and try TRex

1) Download the archive from the trex-tgn.cisco.com server: trex-tgn.cisco.com/trex/release/

Unpack the archive into the user's home directory, "/home/user", where user is your username.

```bash
wget --no-cache https://trex-tgn.cisco.com/trex/release/latest
tar -xzvf latest
```

2) Configuring the interfaces for sending and receiving data

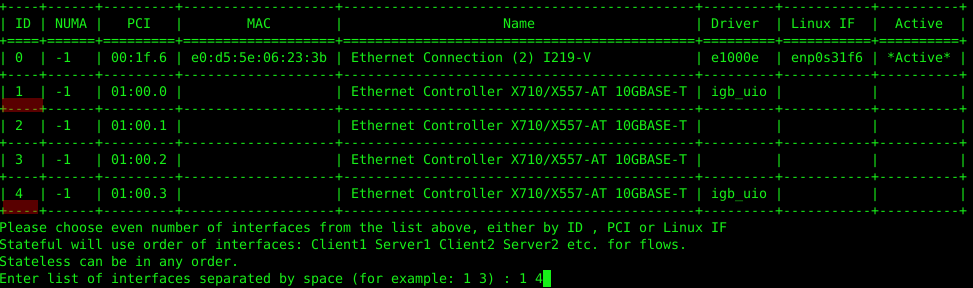

We configure the interfaces with the "dpdk_setup_ports.py" utility, which ships in the TRex archive. It can set up the network interfaces used by TRex at either the MAC or the IP level. To start, run the utility in interactive mode with the -i key: "sudo ./dpdk_setup_ports.py -i".

In the first step, decline the MAC-level configuration (answer n to "Do you want to use MAC based config? (Y/N)").

In the second step, select the pair of network interfaces to work with. In our case the Intel X710 network card has 4 network interfaces, and we use its 1st and 4th ports.

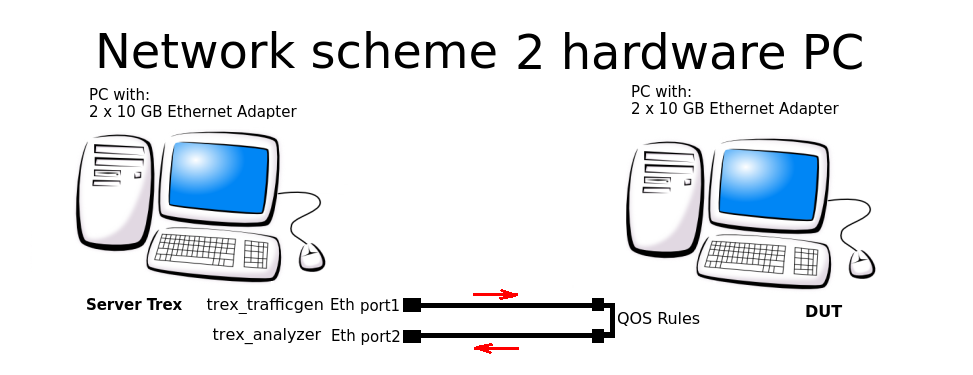

In the third step, the utility offers to create a loopback configuration automatically. In that configuration, data leaves port 1 and arrives at port 2 (and back), all on one PC. We declined this scheme and configured routing between 2 PCs instead.

In the fourth and fifth steps, we confirm saving the configuration to the /etc/trex_cfg.yaml file.

As an example, consider the IP-level configuration for our connection scheme: two ports of the TRex server PC, each cabled to one port of the DUT PC.

The configuration file is located at "/etc/trex_cfg.yaml". Below is a simple configuration file for a 2-port NIC and a CPU supporting 8 threads:

```yaml
### Config file generated by dpdk_setup_ports.py ###

- version: 2
  interfaces: ['01:00.0', '01:00.3']
  port_info:
      - ip: 192.168.253.106
        default_gw: 192.168.253.107
      - ip: 192.168.254.106
        default_gw: 192.168.254.107

  platform:
      master_thread_id: 0
      latency_thread_id: 1
      dual_if:
        - socket: 0
          threads: [2,3,4,5,6,7]
```

In the configuration:

- '01:00.0', '01:00.3': the PCI addresses of the Ethernet interfaces in the Linux system.

- ip: 192.168.253.106: the address of the TRex server port that transmits the generated traffic.

- default_gw: 192.168.253.107: the address of port 1 of the DUT (device under test) PC.

- ip: 192.168.254.106: the address of the TRex server port that receives the traffic back after it has passed through the QoS rules.

- default_gw: 192.168.254.107: the address of port 2 of the DUT PC.
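A gateway typed on the wrong subnet is easy to miss in /etc/trex_cfg.yaml. The sketch below is a hypothetical sanity check, not part of TRex. It uses Python's standard ipaddress module to confirm that each port and its default gateway share a subnet. The /24 mask is an assumption, because the configuration file does not state masks.

```python
import ipaddress

# Port settings copied from the example /etc/trex_cfg.yaml above.
# Assumption: each TRex-to-DUT link is a /24 network; the file does not state masks.
PORT_INFO = [
    {"ip": "192.168.253.106", "default_gw": "192.168.253.107"},
    {"ip": "192.168.254.106", "default_gw": "192.168.254.107"},
]
PREFIX_LEN = 24


def gateway_problems(port_info, prefix_len=PREFIX_LEN):
    """Return a message for every port whose gateway lies outside the port's subnet."""
    problems = []
    for index, port in enumerate(port_info):
        subnet = ipaddress.ip_interface(f"{port['ip']}/{prefix_len}").network
        gateway = ipaddress.ip_address(port["default_gw"])
        if gateway not in subnet:
            problems.append(f"port {index}: gateway {gateway} is outside {subnet}")
    return problems


if __name__ == "__main__":
    print(gateway_problems(PORT_INFO) or "all gateways are on-link")
```

If the second port were given 192.168.253.107 as its gateway, the check would report it as being outside 192.168.254.0/24.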

Note: TRex does not allow the generated streams to use the same subnets that the system itself uses. For this reason, the generated packets use the 16.0.0.0 and 48.0.0.0 subnets.
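The rule above can be checked mechanically. The sketch below is a hypothetical helper rather than a TRex feature. It uses ipaddress to confirm that the generator subnets do not overlap the subnets of the TRex ports. The /8 masks follow the routes added on the DUT in the next step. The /24 masks for the port links are an assumption.

```python
import ipaddress

# Generator subnets used by TRex for the streams (masks as in the DUT routes).
GENERATOR_SUBNETS = [
    ipaddress.ip_network("16.0.0.0/8"),
    ipaddress.ip_network("48.0.0.0/8"),
]
# Assumption: the TRex ports sit on /24 links.
PORT_SUBNETS = [
    ipaddress.ip_network("192.168.253.0/24"),
    ipaddress.ip_network("192.168.254.0/24"),
]


def overlapping_pairs(generator_subnets, port_subnets):
    """List (generator, port) subnet pairs that overlap and would confuse routing."""
    return [
        (gen, port)
        for gen in generator_subnets
        for port in port_subnets
        if gen.overlaps(port)
    ]


if __name__ == "__main__":
    print(overlapping_pairs(GENERATOR_SUBNETS, PORT_SUBNETS) or "no overlaps")
```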

3) Configuring the interfaces on the remote machine

Forwarding and routes must be configured on the DUT, the system the traffic passes through, so that it knows where the packets come from and where to send them.
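For every packet it receives, the DUT looks up the destination in its routing table and picks a next hop. The sketch below illustrates that decision only; it is not code that runs on the DUT. It models the two static routes added in this step with Python's ipaddress module and performs a longest-prefix-match lookup. The DUT's other routes, including any default route, are deliberately left out.

```python
import ipaddress

# The two static routes added on the DUT: generated subnets -> TRex server ports.
ROUTES = {
    ipaddress.ip_network("16.0.0.0/8"): ipaddress.ip_address("192.168.253.106"),
    ipaddress.ip_network("48.0.0.0/8"): ipaddress.ip_address("192.168.254.106"),
}


def next_hop(destination: str, routes=ROUTES):
    """Return the gateway of the most specific matching route, or None."""
    address = ipaddress.ip_address(destination)
    matches = [net for net in routes if address in net]
    if not matches:
        return None  # no matching route in this simplified table
    best = max(matches, key=lambda net: net.prefixlen)  # longest prefix wins
    return routes[best]
```

For example, next_hop("48.0.0.1") returns the address 192.168.254.106. A packet generated on TRex port 0 from 16.0.0.1 to 48.0.0.1 is therefore sent back to the TRex server through its second port.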

Configure the routing rules for the generated streams on the DUT PC:

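```bash
# enable IPv4 forwarding ("sudo echo 1 > ..." fails: the redirection runs without root)
echo 1 | sudo tee /proc/sys/net/ipv4/ip_forward
# route the generated subnets back to the TRex server ports
sudo route add -net 16.0.0.0 netmask 255.0.0.0 gw 192.168.253.106
sudo route add -net 48.0.0.0 netmask 255.0.0.0 gw 192.168.254.106
```

4) Start the TRex server in astf mode: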

```bash
cd v2.XX
sudo ./t-rex-64 -i --astf
```

If the TRex server starts successfully, it prints information about the Ethernet ports used for testing:

```
The ports are bound/configured.
port : 0
------------
link : link : Link Up - speed 10000 Mbps - full-duplex
promiscuous : 0
port : 1
------------
link : link : Link Up - speed 10000 Mbps - full-duplex
promiscuous : 0
number of ports : 2
max cores for 2 ports : 1
tx queues per port : 3
```

5) Launch the TRex console

In a separate window, launch the console and start stream generation from one of the ready-made examples (the folder with astf examples is included in the TRex archive):

```bash
cd v2.XX
./trex-console
```

Then, at the console prompt:

```
start -f astf/http_simple.py -m 1
```

Options of start:

- -a: all ports
- --port 1 2 3: ports 1, 2 and 3
- -d: duration (-d 100, -d 10m, -d 1h)
- -m: traffic rate multiplier (-m 1, -m 1gb, -m 40%)
- -f: load the streams file from disk

If the launch is successful, the TRex server console shows the traffic flow statistics:

```
Global stats enabled
Cpu Utilization : 0.3 % 0.6 Gb/core
Platform_factor : 1.0
Total-Tx : 759.81 Kbps
Total-Rx : 759.81 Kbps
Total-PPS : 82.81 pps
Total-CPS : 2.69 cps
Expected-PPS : 0.00 pps
Expected-CPS : 0.00 cps
Expected-L7-BPS : 0.00 bps
Active-flows : 2 Clients : 0 Socket-util : 0.0000 %
Open-flows : 641
```

3. Automation of development and testing with TRex

While developing the router, we wrote many tests for TRex. That raised the question of running them automatically from Python. Here is how we organized it.

First, we started the TRex server in stl mode:

```bash
cd v2.XX
sudo ./t-rex-64 -i --stl
```

Next, set the PYTHONPATH environment variable, because the tests drive TRex from Python:

```bash
export PYTHONPATH=/home/user/v2.XX/automation/trex_control_plane/interactive
```

Here user is the username (and home directory name), and v2.XX is the version of the TRex software downloaded and unpacked into this folder.
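Exporting a variable can be inconvenient, for example when tests are launched from an IDE or a CI job. In that case, the same directory can be added from inside Python. The sketch below is an alternative to the export command, not part of the original setup. It assumes TRex was unpacked into the home directory, and "v2.XX" remains a placeholder for your version.

```python
import os
import sys

# Hypothetical location: replace "v2.XX" with the TRex version you unpacked.
TREX_DIR = os.path.expanduser("~/v2.XX")
TREX_API_DIR = os.path.join(TREX_DIR, "automation", "trex_control_plane", "interactive")


def add_trex_to_path(api_dir: str = TREX_API_DIR) -> None:
    """Prepend the TRex Python API directory to sys.path (similar effect to PYTHONPATH)."""
    if api_dir not in sys.path:
        sys.path.insert(0, api_dir)


# Call this before importing the TRex API modules; the import only works
# if the directory actually exists on this machine.
add_trex_to_path()
```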

We then started the traffic generator from Python. The listing of the example script is given below.

```bash
python example_test_2bidirectstream.py
```

Expected output:

```
Transmit: 10000.24576Mbit/s Receive: 10000.272384Mbit/s
Stream 1 TX: 4487179200 Bit/s RX: 4487179200 Bit/s
Stream 2 TX: 2492873600 Bit/s RX: 2492873600 Bit/s
Stream 3 TX: 1994294400 Bit/s RX: 1994294400 Bit/s
Stream 4 TX: 997147200 Bit/s RX: 997147200 Bit/s
```

Let's look at this example:

```python
c = STLClient(server='127.0.0.1')
```

This creates a connection to the TRex server. Here the client connects to the same machine the server runs on.

- base_pkt_dir_a, base_pkt_dir_b, base_pkt_dir_c, base_pkt_dir_d: packet templates containing the source and destination addresses and the source and destination ports. The example creates 4 streams, 2 in one direction and 2 in the opposite direction.

- s1, s2, s3, s4: the streams themselves, created with the STLStream class. Each has its own ID (pg_id) and bit rate. In our case ID1 = 4.5 Gbps, ID2 = 2.5 Gbps, ID3 = 2 Gbps and ID4 = 1 Gbps.

Listing of the stream configuration file example_test_2bidirectstream.py:

```python
# get TRex APIs
from trex_stl_lib.api import *

c = STLClient(server='127.0.0.1')
c.connect()

try:
    # create base packets with scapy: a and c go 16.x -> 48.x, b and d go back
    base_pkt_dir_a = Ether()/IP(src="16.0.0.1", dst="48.0.0.1")/UDP(dport=5001, sport=50001)
    base_pkt_dir_b = Ether()/IP(src="48.0.0.1", dst="16.0.0.1")/UDP(dport=50001, sport=5001)
    base_pkt_dir_c = Ether()/IP(src="16.0.0.2", dst="48.0.0.2")/UDP(dport=5002, sport=50002)
    base_pkt_dir_d = Ether()/IP(src="48.0.0.2", dst="16.0.0.2")/UDP(dport=50002, sport=5002)

    # STLTXCont rate options:
    #   pps    : float - packets per second
    #   bps_L1 : float - bits per second L1 (with IPG)
    #   bps_L2 : float - bits per second L2 (Ethernet-FCS)
    packet_size = 1400

    def pad(base_pkt):
        # payload of 'x' bytes that brings the packet up to packet_size
        return (packet_size - len(base_pkt)) * 'x'

    s1 = STLStream(packet=STLPktBuilder(base_pkt_dir_a/pad(base_pkt_dir_a)), mode=STLTXCont(bps_L2=4500000000), flow_stats=STLFlowStats(pg_id=1))
    s2 = STLStream(packet=STLPktBuilder(base_pkt_dir_b/pad(base_pkt_dir_b)), mode=STLTXCont(bps_L2=2500000000), flow_stats=STLFlowStats(pg_id=2))
    s3 = STLStream(packet=STLPktBuilder(base_pkt_dir_c/pad(base_pkt_dir_c)), mode=STLTXCont(bps_L2=2000000000), flow_stats=STLFlowStats(pg_id=3))
    s4 = STLStream(packet=STLPktBuilder(base_pkt_dir_d/pad(base_pkt_dir_d)), mode=STLTXCont(bps_L2=1000000000), flow_stats=STLFlowStats(pg_id=4))

    my_ports = [0, 1]
    c.reset(ports=my_ports)

    # add the streams: s1 and s3 leave port 0, s2 and s4 leave port 1
    c.add_streams(s1, ports=my_ports[0])
    c.add_streams(s2, ports=my_ports[1])
    c.add_streams(s3, ports=my_ports[0])
    c.add_streams(s4, ports=my_ports[1])

    # start bidirectional traffic with a 10-second limit (otherwise it runs forever)
    testduration = 10
    c.start(ports=my_ports, duration=testduration)

    # hold until traffic ends
    c.wait_on_traffic()

    # check out the stats
    stats = c.get_stats()

    # global stats: tx_bps / rx_bps are in bits per second, so /10**6 gives Mbit/s
    totalstats = stats['global']
    totaltx = round(totalstats.get('tx_bps'))
    totalrx = round(totalstats.get('rx_bps'))
    print('Transmit: {}Mbit/s Receive: {}Mbit/s'.format(totaltx / 1000000, totalrx / 1000000))

    c.clear_stats(ports=my_ports)

    # per-stream (flow) stats, keyed by pg_id
    flow_stats = stats['flow_stats']
    for pg_id in (1, 2, 3, 4):
        tx_pkts = flow_stats[pg_id]['tx_pkts']['total']
        rx_pkts = flow_stats[pg_id]['rx_pkts']['total']
        # whole packets per second (integer division) times bits per packet
        print('Stream {} TX: {} Bit/s RX: {} Bit/s'.format(
            pg_id,
            tx_pkts // testduration * packet_size * 8,
            rx_pkts // testduration * packet_size * 8))

except STLError as e:
    print(e)

finally:
    c.disconnect()
```
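The per-stream figures in the expected output are slightly below the configured rates, for example 4487179200 bit/s instead of 4.5 Gbit/s for stream 1. The sketch below reproduces all four printed numbers under two assumptions. We inferred both from the output; neither comes from TRex documentation:

- bps_L2 counts the 4-byte Ethernet FCS, so each 1400-byte packet occupies 1404 bytes at L2 and slightly fewer packets are sent than 1400-byte arithmetic suggests.
- Each stream runs at exactly its configured rate for the full 10 seconds.

To match the whole numbers in the output, the sketch divides the packet count by the duration with integer division.

```python
# Reproduces the per-stream "Bit/s" values printed by the test script.
# Assumptions (inferred from the output, not from TRex docs): bps_L2 includes
# the 4-byte FCS, and each stream runs at its configured rate for the whole test.
PACKET_SIZE = 1400   # packet_size in the listing (bytes, without FCS)
FCS_BYTES = 4        # Ethernet frame check sequence
DURATION_S = 10      # testduration in the listing (seconds)


def printed_stream_bps(configured_bps_l2: float,
                       packet_size: int = PACKET_SIZE,
                       fcs_bytes: int = FCS_BYTES,
                       duration_s: int = DURATION_S) -> int:
    """Bit rate the script reports for a stream configured with bps_L2."""
    frame_bits = (packet_size + fcs_bytes) * 8                   # L2 bits per packet
    packets = int(configured_bps_l2 * duration_s / frame_bits)   # whole packets sent
    # The script multiplies packets per second by packet_size * 8 (no FCS).
    return packets // duration_s * packet_size * 8


if __name__ == "__main__":
    for pg_id, rate in enumerate((4.5e9, 2.5e9, 2e9, 1e9), start=1):
        print(f"Stream {pg_id}: {printed_stream_bps(rate)} Bit/s")
```

With fcs_bytes=0, the same function gives 4499992000 for stream 1, which does not match the recorded output. The 4-byte FCS accounts for the difference.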

Conclusion

While preparing this guide for Habr, we tested the DUT with 4 streams and collected per-stream and global statistics.

Because the whole procedure is driven from Python, TRex can be used to automate testing and debugging of network devices and software products, either in a loop or by running Python tests one after another.
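A common way to run TRex tests in a loop is to search for the highest rate the DUT forwards without loss. The sketch below shows only the control loop. `measure_loss` is a hypothetical callback that would start the streams at the given rate and return the fraction of packets lost, for instance using `c.start(...)`, `c.wait_on_traffic()` and the flow stats as in the listing above. Here a stub stands in for it so the code runs on its own.

```python
from typing import Callable


def max_lossless_rate(measure_loss: Callable[[float], float],
                      low_bps: float, high_bps: float,
                      resolution_bps: float = 1e8,
                      loss_tolerance: float = 0.0) -> float:
    """Binary-search the highest rate whose measured loss is within tolerance.

    measure_loss is a hypothetical callback: rate in bit/s -> lost fraction.
    Assumes loss does not decrease as the rate grows.
    """
    best = low_bps
    while high_bps - low_bps > resolution_bps:
        rate = (low_bps + high_bps) / 2
        if measure_loss(rate) <= loss_tolerance:
            best = low_bps = rate   # passed: try a higher rate
        else:
            high_bps = rate         # lost packets: try a lower rate
    return best


if __name__ == "__main__":
    # Stub standing in for a real TRex run: this fake DUT drops above 7 Gbit/s.
    def fake_loss(rate_bps: float) -> float:
        return 0.0 if rate_bps <= 7e9 else 0.01

    print(f"{max_lossless_rate(fake_loss, 0, 10e9) / 1e9:.2f} Gbit/s")
```

Each iteration halves the search interval, so covering 0 to 10 Gbit/s at 100 Mbit/s resolution takes only 7 test runs.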

So is Cisco's TRex better or worse than similar traffic generators, for example the popular client-server program iperf? The TRex scenario above is about setting up and working with streams. Both tools are good for testing and debugging. iperf suits quick functional checks on the go. TRex does an excellent job of automating the testing and development of complex network devices and systems. There, it matters that you can configure each of many parallel streams for a specific task and analyze its results separately.

TRex lets you create templates for almost any type of traffic and scale them up to simulate large-scale DDoS attacks, including TCP-SYN, UDP and ICMP floods. Because it can generate massive traffic streams, you can simulate attacks from many clients on many target servers.

If you have not tried this tool yet, keep it in mind. If you have, share your examples and feedback in the comments. We would like to know what fellow engineers think of TRex and how they use it.