In this article, I want to talk about how our team decided to apply the CQRS & Event Sourcing approach in a project that is an online auction site. And also about what came out of this, what conclusions can be drawn from our experience and what rake it is important not to step on for those who go by CQRS & ES.

Prelude

To begin with, a little history and business background. A customer came to us with a platform for holding so-called timed auctions, which was already in production and on which a certain amount of feedback was collected. The client wanted us to create a platform for live auctions for him.

Now a little terminology. An auction is when certain items are sold - lots, and buyers (bidders) place bids. The owner of the lot is the buyer who offered the highest bid. Timed auction is when each lot has a predetermined closing time. Buyers place bets, at some point the lot is closed. Similar to ebay.

Timed platform was made in a classic way, using CRUD. Lots were closed by a separate application, starting on a schedule. All this did not work very reliably: some bets were lost, some were made as if on behalf of the wrong buyer, lots were not closed or closed several times.

Live auction is an opportunity to participate in a real offline auction remotely via the Internet. There is a room (in our internal terminology - "room"), it contains the host of the auction with a hammer and the audience, and right there next to the laptop sits the so-called clerk, who, by pressing buttons in his interface, broadcasts the course of the auction to the Internet, and the connected By the time of the auction, buyers see bids that are being placed offline and can place their bids.

Both platforms work in principle in real time, but if in the case of timed all shoppers are in an equal position, then in the case of live it is extremely important that online shoppers can successfully compete with those in the room. That is, the system must be very fast and reliable. The sad experience of the timed platform told us in no uncertain terms that the classic CRUD was not suitable for us.

We did not have our own experience of working with CQRS & ES, so we consulted with colleagues who had it (we have a large company), presented them our business realities and jointly came to the conclusion that CQRS & ES should suit us.

What else are the specifics of online auctions:

- — . , « », , . — , 5 . .

- , , .

- — - , , — .

- , .

- The solution must be scalable - several auctions can be held simultaneously.

A brief overview of the CQRS & ES approach

I will not dwell on the consideration of the CQRS & ES approach, there are materials about this on the Internet and in particular on Habré (for example, here: Introduction to CQRS + Event Sourcing ). However, I will briefly remind you of the main points:

- The most important thing in event sourcing: the system does not store data, but the history of their change, that is, events. The current state of the system is obtained by sequential application of events.

- The domain model is divided into entities called aggregates. The unit has a version. Events are applied to aggregates. Applying an event to an aggregate increments its version.

- write-. , .

- . . , , . «» . .

- , , - ( N- ) . «» . , .

- - , , , , write-.

- write-, read-, , . read- . Read- .

- , — Command Query Responsibility Segregation (CQRS): , , write-; , , read-.

. .

In order to save time, as well as due to the lack of specific experience, we decided that we need to use some kind of framework for CQRS & ES.

In general, our technology stack is Microsoft, i.e. .NET and C #. Database - Microsoft SQL Server. Everything is hosted in Azure. A timed platform was made on this stack, it was logical to make a live platform on it.

At that time, as I remember now, Chinchilla was almost the only option suitable for us in terms of the technological stack. So we took her.

Why do we need a CQRS & ES framework at all? He can "out of the box" solve such problems and support such aspects of implementation as:

- Aggregate entities, commands, events, aggregate versioning, rehydration, snapshot mechanism.

- Interfaces for working with different DBMS. Saving / loading events and snapshots of aggregates to / from the write-base (event store).

- Interfaces for working with queues - sending commands and events to the appropriate queues, reading commands and events from the queue.

- Interface for working with websockets.

Thus, taking into account the use of Chinchilla, we added to our stack:

- Azure Service Bus as a command and event bus, Chinchilla supports it out of the box;

- Write and read databases are Microsoft SQL Server, that is, they are both SQL databases. I will not say that this is the result of a conscious choice, but rather for historical reasons.

Yes, the frontend is made in Angular.

As I already said, one of the requirements for the system is that users learn as quickly as possible about the results of their actions and the actions of other users - this applies to both the customers and the clerk. Therefore, we use SignalR and websockets to quickly update data on the frontend. Chinchilla supports SignalR integration.

Selection of units

One of the first things to do when implementing the CQRS & ES approach is to determine how the domain model will be divided into aggregates.

In our case, the domain model consists of several main entities, something like this:

public class Auction

{

public AuctionState State { get; private set; }

public Guid? CurrentLotId { get; private set; }

public List<Guid> Lots { get; }

}

public class Lot

{

public Guid? AuctionId { get; private set; }

public LotState State { get; private set; }

public decimal NextBid { get; private set; }

public Stack<Bid> Bids { get; }

}

public class Bid

{

public decimal Amount { get; set; }

public Guid? BidderId { get; set; }

}

We got two aggregates: Auction and Lot (with Bids). In general, it is logical, but we did not take into account one thing - the fact that with such a division, the state of the system was spread over two units, and in some cases, to maintain consistency, we must make changes to both units, and not to one. For example, an auction can be paused. If the auction is paused, you cannot bid on the lot. It would be possible to pause the lot itself, but a paused auction cannot process any commands other than "unpause".

Alternatively, only one aggregate could be made, Auction, with all lots and bids inside. But such an object will be quite difficult, because there can be up to several thousand lots in the auction and there can be several dozen bids for one lot. During the lifetime of the auction, such an aggregate will have a lot of versions, and rehydration of such an aggregate (sequential application of all events to the aggregate), if no snapshots of aggregates are made, will take quite a long time. Which is unacceptable for our situation. If you use snapshots (we use them), then the snapshots themselves will weigh a lot.

On the other hand, to ensure that changes are applied to two aggregates within the processing of a single user action, you must either change both aggregates within the same command using a transaction, or execute two commands within the same transaction. Both are, by and large, a violation of architecture.

Such circumstances must be taken into account when breaking down the domain model into aggregates.

At this stage in the evolution of the project, we live with two units, Auction and Lot, and we break the architecture by changing both units within some commands.

Applying a command to a specific version of the unit

If several buyers place a bid on the same lot at the same time, that is, they send a “place a bid” command to the system, only one of the bids will be successful. A lot is an aggregate, it has a version. During command processing, events are generated, each of which increments the version of the aggregate. There are two ways to go:

- Send a command specifying which version of the aggregate we want to apply it to. Then the command handler can immediately compare the version in the command with the current version of the unit and not continue if it does not match.

- Do not specify the version of the unit in the command. Then the aggregate is rehydrated with some version, the corresponding business logic is executed, events are generated. And only when they are saved can an execution pop up that such a version of the unit already exists. Because someone else did it earlier.

We use the second option. This gives teams a better chance of being executed. Because in the part of the application that sends commands (in our case, this is the frontend), the current version of the aggregate with some probability will lag behind the real version on the backend. Especially in conditions when a lot of commands are sent, and the version of the unit changes frequently.

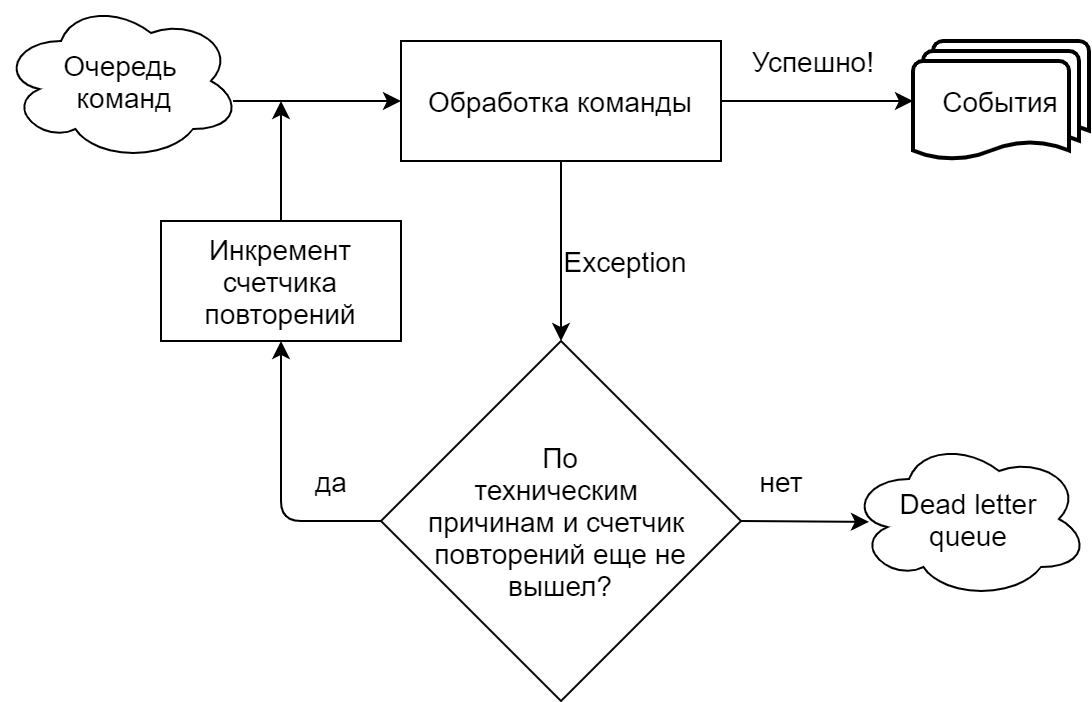

Errors when executing a command using a queue

In our implementation, heavily driven by Chinchilla, the command handler reads commands from a queue (Microsoft Azure Service Bus). We clearly distinguish situations when a team fails for technical reasons (timeouts, errors in connecting to a queue / base) and when for business reasons (an attempt to make a bid on a lot of the same amount that has already been accepted, etc.). In the first case, the attempt to execute the command is repeated until the number of repetitions specified in the queue settings is reached, after which the command is sent to the Dead Letter Queue (a separate topic for unprocessed messages in the Azure Service Bus). In the case of a business execution, the team is sent to the Dead Letter Queue immediately.

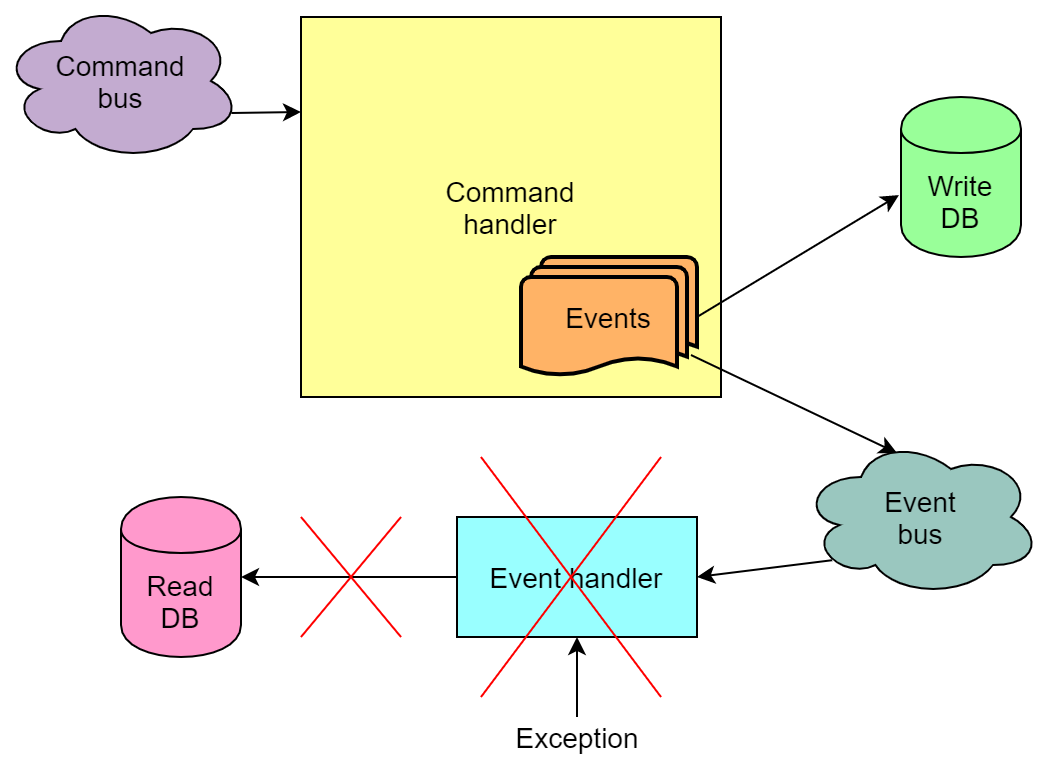

Errors when handling events using a queue

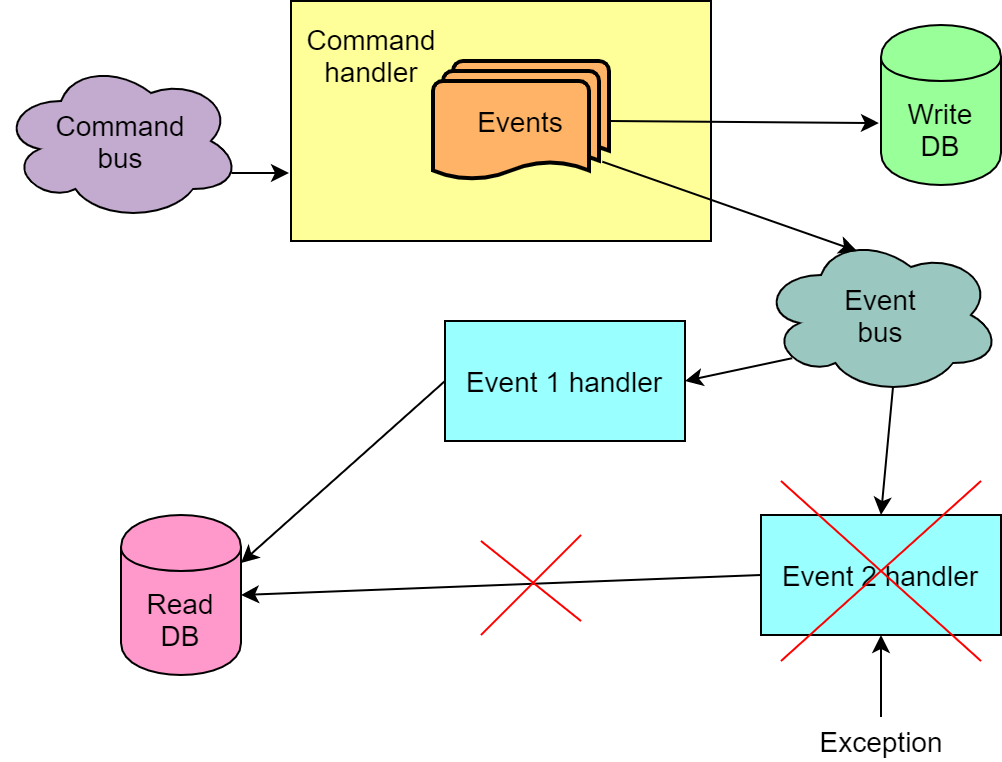

Events generated as a result of command execution, depending on the implementation, can also be sent to the queue and taken from the queue by event handlers. And when handling events, errors happen too.

However, in contrast to the situation with an unexecuted command, everything is worse here - it may happen that the command was executed and the events were written to the write-base, but their processing by the handlers failed. And if one of these handlers updates the read base, then the read base will not be updated. That is, it will be in an inconsistent state. Due to the mechanism of retrying the processing of the read event, the database is almost always eventually updated, but the likelihood that after all attempts it will remain broken still remains.

We have encountered this problem at home. The reason, however, was largely due to the fact that we had some business logic in the event processing, which, with an intense stream of bets, has a good chance of failing from time to time. Unfortunately, we realized this too late, it was not possible to redo the business implementation quickly and simply.

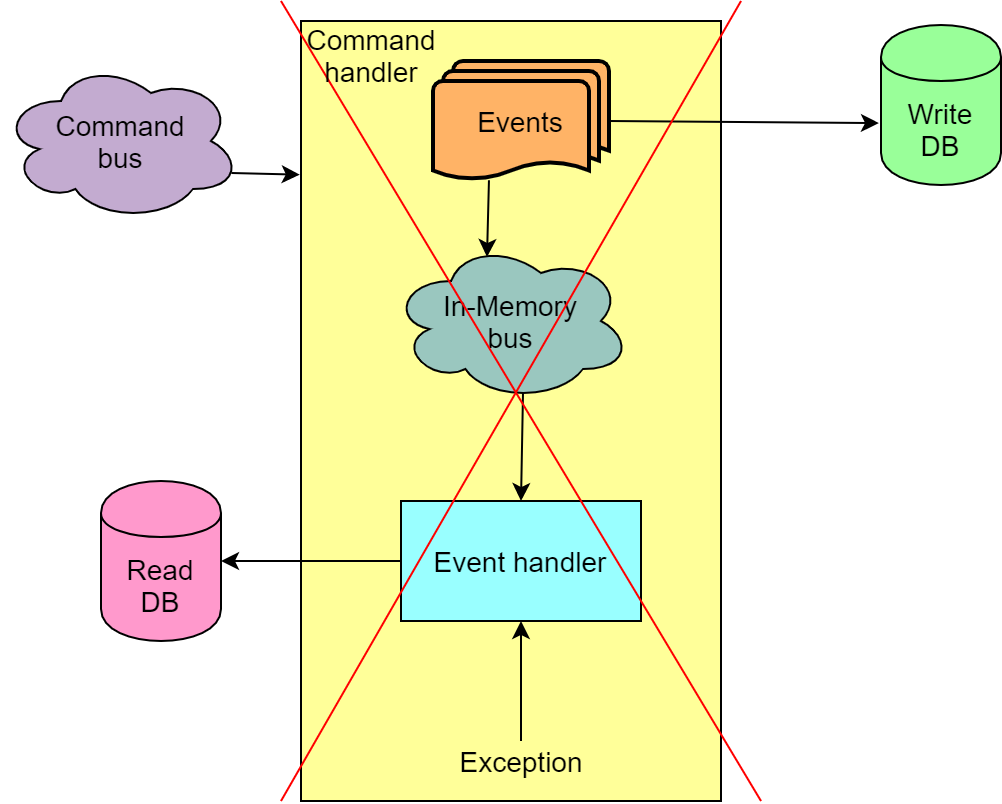

As a result, as a temporary measure, we stopped using the Azure Service Bus to transfer events from the write part of the application to the read part. Instead, the so-called In-Memory Bus is used, which allows you to process the command and events in one transaction and, in case of failure, roll back the whole thing.

Such a solution does not contribute to scalability, but on the other hand, we exclude situations when our read base breaks, which in turn breaks frontends and the continuation of the auction without re-creating the read base through replaying all events becomes impossible.

Sending a command in response to an event

This is, in principle, appropriate, but only in the case when the failure to execute this second command does not break the state of the system.

Handling multiple events of one command

In general, the execution of one command results in several events. It happens that for each of the events we need to make some change in the read database. It also happens that the sequence of events is also important, and in the wrong sequence, event processing will not work as it should. All this means that we cannot read from the queue and process the events of one command independently, for example, with different instances of code that reads messages from the queue. Plus, we need a guarantee that the events from the queue will be read in the same sequence in which they were sent there. Or we need to be prepared for the fact that not all command events will be successfully processed on the first try.

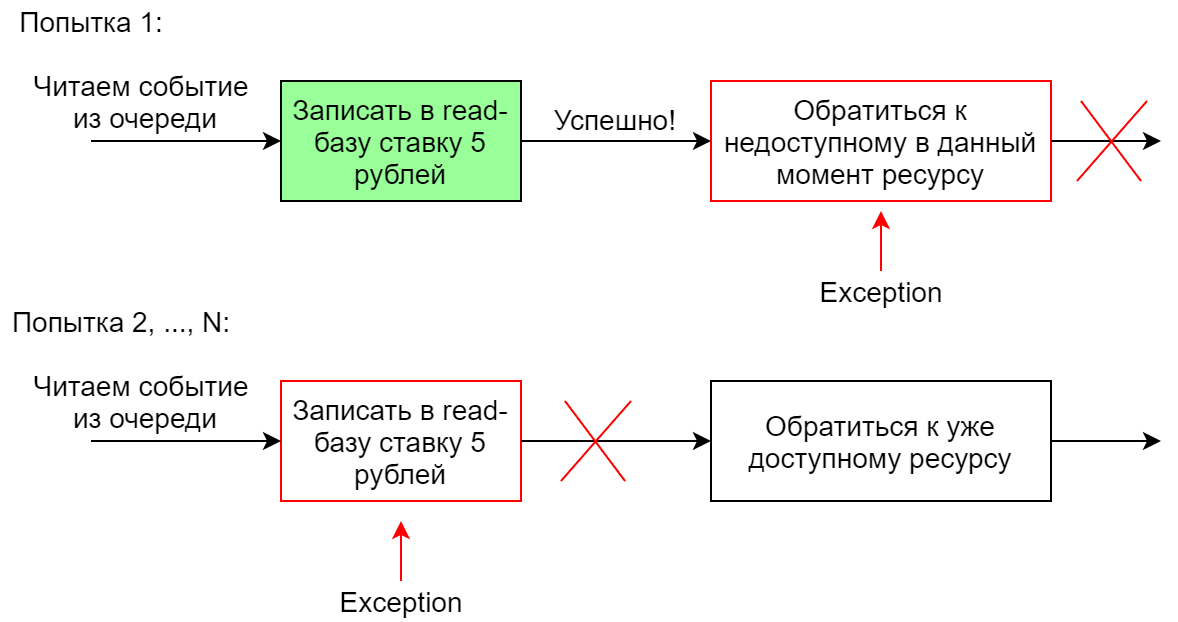

Handling one event with multiple handlers

If the system needs to perform several different actions in response to one event, usually several handlers for this event are made. They can work in parallel or sequentially. In the case of a sequential launch, if one of the handlers fails, the entire sequence is restarted (this is the case in Chinchilla). With such an implementation, it is important that the handlers are idempotent so that the second run of a once successfully executed handler does not fail. Otherwise, when the second handler falls from the chain, it, the chain, will definitely not work entirely, because the first handler will fall on the second (and subsequent) attempts.

For example, an event handler in the read-base adds a bid for a lot of 5 rubles. The first attempt to do this will be successful, and the second will not allow the constraint to be executed in the database.

Conclusions / Conclusion

Now our project is at a stage when, as it seems to us, we have already stepped on most of the existing rakes that are relevant to our business specifics. In general, we consider our experience to be quite successful, CQRS & ES is well suited for our subject area. Further development of the project is seen in the abandonment of Chinchilla in favor of another framework that gives more flexibility. However, it is also possible to refuse to use the framework at all. It is also likely that there will be some changes in the direction of finding a balance between reliability on the one hand and the speed and scalability of the solution on the other.

As for the business component, here too some questions still remain open - for example, dividing the domain model into aggregates.

I would like to hope that our experience will be useful for someone, help save time and avoid a rake. Thanks for your attention.