In my talk, I recalled the evolution of Python in the company: from the first services packed into deb packages and rolled out on bare metal, to a complex mono-repository with its own build system and cloud. The story also touches on Django, Flask, Tornado, Docker, PyCharm, IPv6 and other things we have run into over the years.

- Let me tell you about myself. I came to Yandex in 2008. At first I worked on content services, in particular Yandex.Afisha. I wrote Python there: we were rewriting the service from Perl and other fun technologies.

Then I switched to internal services. The internal services department gradually turned into the department of search interfaces and services for organizations. A lot of time has passed, and I have grown from an ordinary developer into the head of Python development in our division, about 30 people.

An important point: the company is very large, and I cannot speak for all of Yandex. Of course I talk to colleagues from other departments and know how they live. But mostly I can only speak about our department and our products, and my talk will focus on that. Sometimes I will mention that somewhere else in Yandex they do this or that, but not often.

Python is used in many places in the company, as is every technology, every technology stack, every language. Anything that comes to mind exists somewhere in the company, either as an experiment or in some other form. And in any Yandex service there will definitely be Python in one component or another.

Everything I am about to tell you is my interpretation of events. I do not claim to be one hundred percent objective. All of this passed through my hands and is emotionally colored. My experience is very personal.

How will the talk be structured? To make it easier for you to follow and for me to tell, I decided to break the entire evolution, from 2007 to roughly the present, into several eras. The talk is strictly structured by these eras. An era means a radical change in infrastructure or in the approach to development. If our hardware infrastructure changes and at the same time we change how we develop services and what tools we use, that is an era. Of course I had to adjust things a little. The changes did not all happen at the same moment and there were gaps between them, but I tried to fit everything onto one timeline to keep it compact.

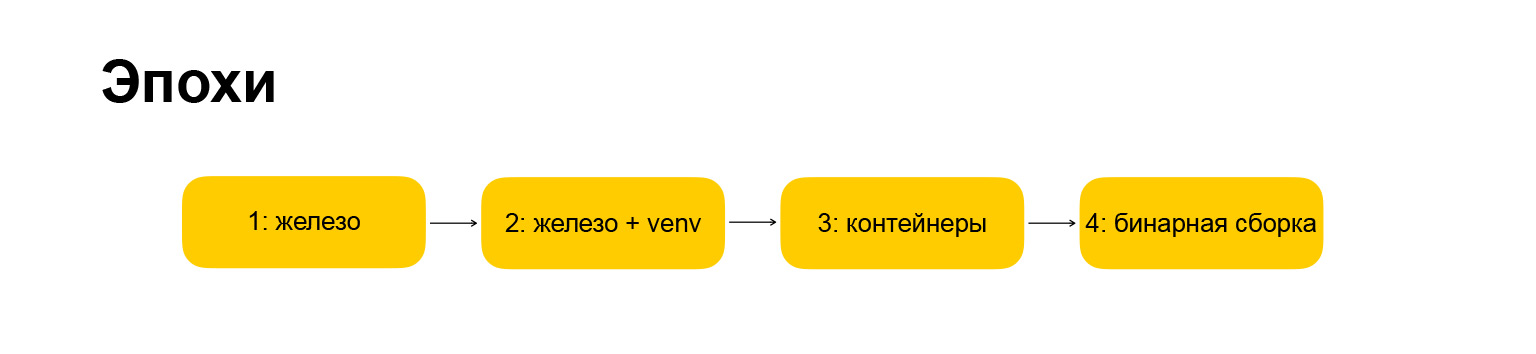

What eras did we have? Here, too, everything is my own invention; all the names are mine. The first era is called "iron": this is what we started with when I joined the company. Then things changed a little, and the era became "iron + venv". Later I will explain what is hidden behind these names. For now I just want to give you a map of what I am going to cover.

The next era is "containers", and everything is more or less clear here. The era we are stepping into right now is “binary assembly”, and I will talk about it too.

How will each era be structured? This is also important, because I wanted to make a rhythmic narrative so that each section was strictly structured and easier to understand. The era is described by the infrastructure, the frameworks that we use, how we work with dependencies, what kind of development environment we have, and the pros and cons of this or that approach.

(Technical pause.) I have given you the introduction and explained how the talk will be structured, so let's move on to the story itself.

Age 1: iron

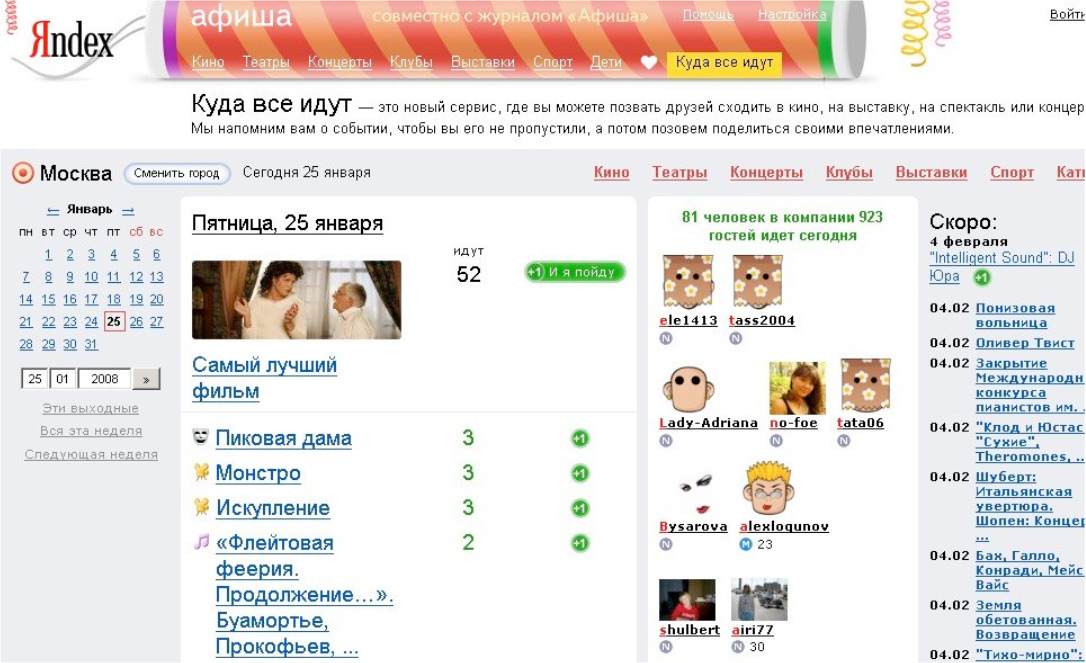

The first service I worked on when I joined the company was called "Where Everybody Goes". It was a companion to Afisha and the first big Django service.

Grisha Bakunov (bobuk) can tell you why he once decided to bring Django into Yandex. In any case, it happened, and we began building services on Django.

I came, and they told me: let's do Where Everybody Goes. There was already some groundwork by then. I joined in, and we treated it as a kind of experiment: will it work or not? The experiment turned out to be successful. Everyone agreed that it is possible to build web services at Yandex in Python and Django. Fine! Our infrastructure is ready for this, people are ready, the world is ready too. So let's spread Python and Django further throughout the company. And we did. We rewrote Yandex.Afisha and Weather, then the TV program. After that it all blurs together: we started rewriting everything.

At that time, almost the entire Yandex backend was written in C++. Obviously, the move towards Python sped up development a lot in many places, and this was well received by the company and by management. Now let's talk about the infrastructure: where these services ran, what they ran on and so on.

These were iron machines, hence the name of this era. What are iron machines? They are just physical servers in the data center. There are many servers, and they are combined into a cluster of, say, 15 machines. Then there were 30, then 50, then 100. All of this ran on Debian or Ubuntu; around that time we migrated from Debian to Ubuntu. We launched all applications through the standard init process. Everything was standard, done the way it was done at the time. For our applications to communicate with the web server, we used the FastCGI protocol and the flup library. If you have been using Python for a long time, you have probably heard of it. But I am sure you don't use it now, because it is obsolete; it was a very strange thing and worked very slowly.

At that point, obviously, there was no Python 3; we wrote in Python 2.3 and then migrated to 2.4. Wild times. I don't even want to remember them, because the language, the community and the ecosystem looked completely different, although they were fun too and already attracted many people. That is what drew me into the world of Python: despite the peculiarities and oddities, it was already clear then that Python was a promising technology and worth investing your time in.

An important point: we did not use nginx yet, and no longer used Apache; instead we used a web server called Lighttpd. If you have been building web services for a long time, you have probably heard of it too. At one point it was very popular.

Of the frameworks, we mainly used Django. We started building large services on Django. Somewhere in the company there was CherryPy, somewhere Web.py. Perhaps you have heard of these frameworks too. They are no longer at the forefront and have long been pushed aside by younger and more daring frameworks. Back then they had their own niche, but sooner or later they died out here and we stopped building anything on them. We started doing everything in Django.

At this point, Python became so widespread in the company that it burst out of our department: web services in Python and Django began to be done everywhere in the company.

Let's move on to working with dependencies. Here is something you have most likely also encountered if you came to work at a large company: the corporation already has an established infrastructure, and you need to adapt to it. Yandex had a deb infrastructure with internal repositories of deb packages. It was assumed that a Yandex developer knew how to build a deb package. We had to fit into this flow: we built our projects as full-fledged deb packages and then installed them, as deb packages, on the servers and clusters I was talking about.

As a result, all dependencies and libraries, including Django itself, also had to be packed into deb packages. For this we built our own infrastructure, set up an internal repository and learned how to do it. This is not a very unusual experience: if you have ever tried to build an RPM or deb package, you know what it is like. RPM is a bit simpler, deb is more complicated. Either way, you cannot just walk in from the street and start doing it with one click. You need to dig a little.

For many years after that, we built deb packages. It seems to me that not everyone who did this as part of their job understood what was happening under the hood. They just copied boilerplate and templates from each other and did not dig deeply. But those who figured out what was going on inside became very useful and very sought-after colleagues: if something suddenly failed to build, everyone went to them for advice, asked about the nuances and asked for help with debugging. It was a fun time, because I was interested in figuring out what was inside. That is how I earned some cheap popularity among colleagues.

In addition to the ecosystem of dependencies, there is also the work with common code. Right from the start there was explosive growth in the number of projects, so we had to share code, build shared libraries and so on. We started doing a kind of internal open source. We built shared functionality for authorization, logging and other common things: internal APIs, internal storage. We did all this as libraries and put them into an internal repository. At first these were SVN repositories, then an internal GitHub.

In the end we packed all these dependencies and libraries into deb packages too and uploaded them to a single repository. This formed a kind of package environment. If you started a new project, you could install a few libraries, get a base of functionality and immediately launch the project in the Yandex infrastructure. It was very convenient.

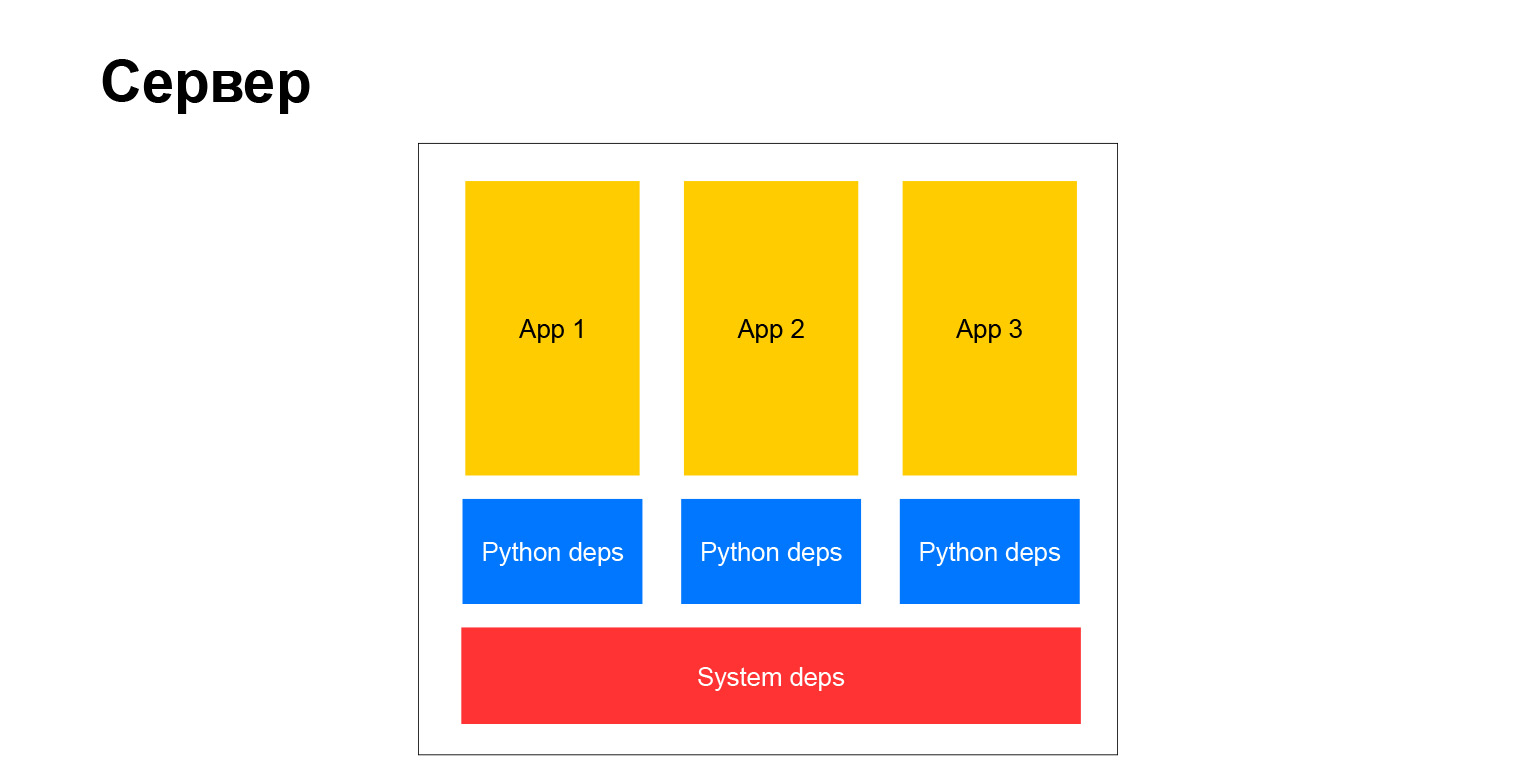

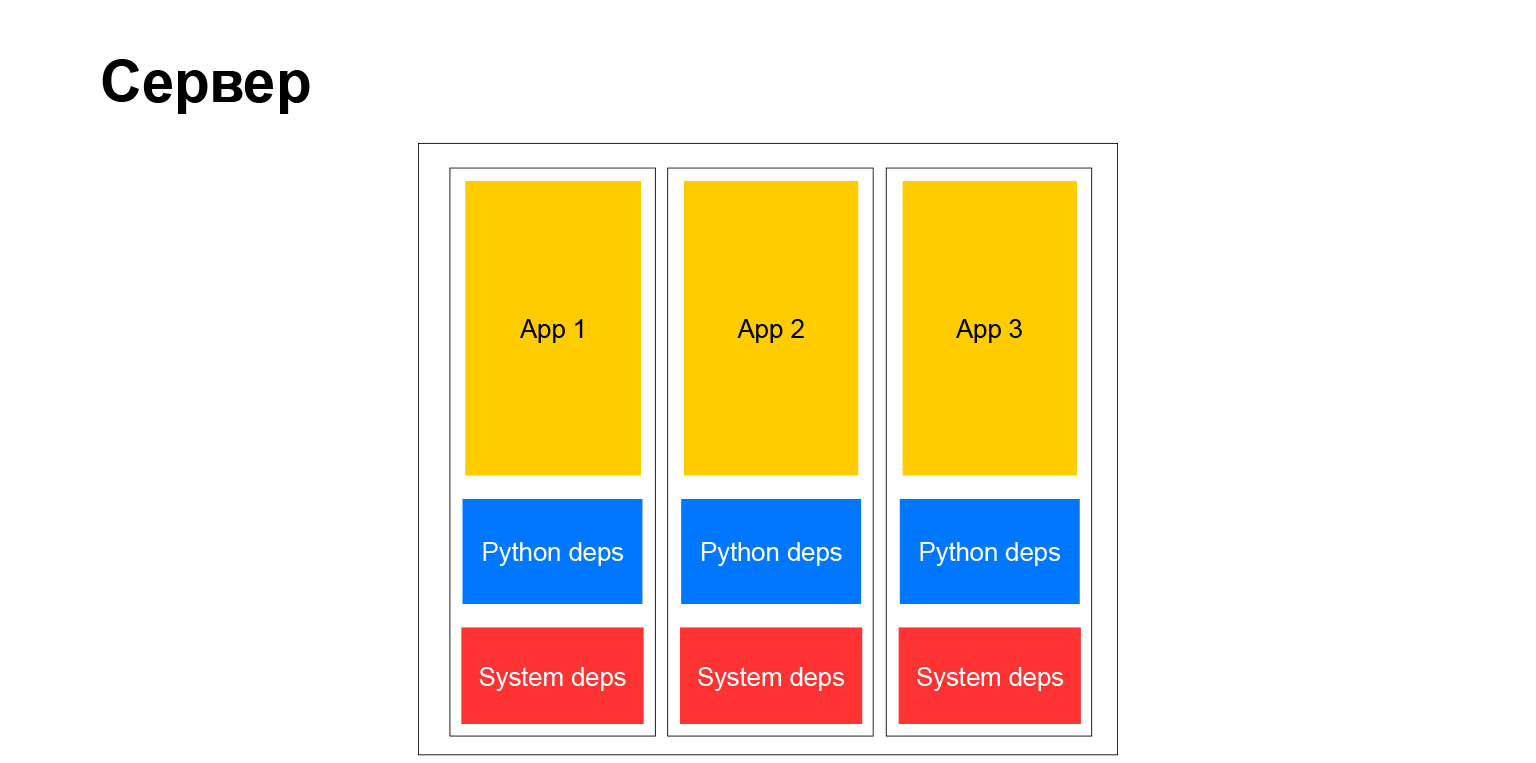

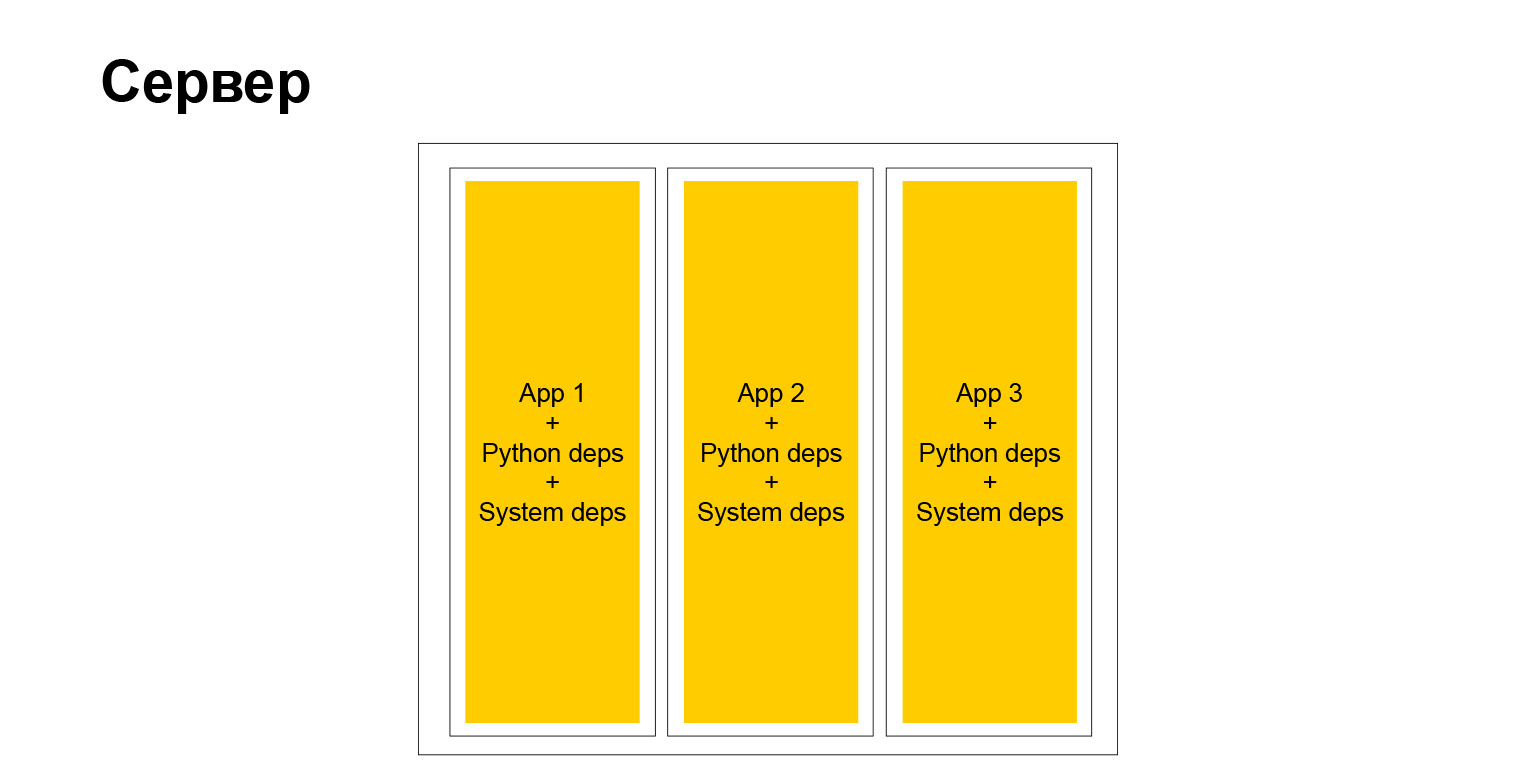

What did our typical server look like? Classically. There are system dependencies, there are Python dependencies and there are applications. Several things follow from this. First of all, all applications that run on the same server, and therefore on the same cluster, must have the same dependencies. If you install a package system-wide, it is always present in exactly one version; there cannot be several of them, so you have to synchronize.

When there are few projects, this can still be managed somehow. When there are many, everything becomes very complicated. The projects are built by different teams, and it is difficult for them to agree. Each team wants to upgrade some library or the framework early, and everyone else has to follow. Over time, this creates a lot of problems. This is what pushed us to abandon the scheme and move into the next era. But I will talk about that a little later.
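The one-version-per-server constraint can be illustrated with a small sketch. It is not code from the talk: the project names echo services mentioned earlier, but the pinned versions and the `find_conflicts` helper are invented for illustration. The function lists every package that different projects on one shared server want in different versions, which is exactly the case a system-wide install cannot satisfy.

```python
from collections import defaultdict

# Hypothetical projects sharing one server; the versions are made up.
PROJECTS = {
    "afisha": {"django": "1.2", "lxml": "2.3"},
    "weather": {"django": "1.3", "lxml": "2.3"},
    "tv": {"django": "1.2"},
}


def find_conflicts(projects):
    """Return {package: {version: [projects]}} for packages wanted in several versions."""
    wanted = defaultdict(lambda: defaultdict(list))
    for project, requirements in projects.items():
        for package, version in requirements.items():
            wanted[package][version].append(project)
    # A package is a conflict only if more than one version is requested.
    return {
        package: {version: sorted(names) for version, names in versions.items()}
        for package, versions in wanted.items()
        if len(versions) > 1
    }


conflicts = find_conflicts(PROJECTS)
print(conflicts)
```

With system-wide packages, every entry in `conflicts` means that some team has to wait for, or force, an upgrade on all the others.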

Let's talk about the development environment, in the broad sense of the term. It is how you come to work, how you write code, how you debug it, how you work with it, where you test it, how you run it, where you run the tests and so on.

When I joined the company, we all worked on remote dev servers. That is, you have some kind of desktop, on Windows or Linux, it doesn't matter. You log in to a remote server with the correct Debian and the correct package repository. There you run vim or Emacs, write code and debug.

It’s not very convenient, but back then we did not really know any other life. Those fortunate enough to have a Linux desktop or laptop could try to do this locally. But there were no instructions for that, nothing. A somewhat wild time. The special people who lived on Windows and Macs and wanted to develop locally set up a virtual machine with Linux inside. More precisely, they wrote the code on the host system, ran it inside the virtual machine and somehow shared the file system so that everything stayed in sync. It all worked pretty badly, but people got by.

What are the pros and cons of this era, of this approach to development? In fact, it is nothing but cons:

- As I said, shared clusters were crowded.

- All projects on the cluster had to have the same dependencies.

- . , , Django . , . 15-20 . . , , — . X, . , . - , - , . . , , . .

- Yandex was dependent on the Debian infrastructure. We supported it, built packages, maintained an internal repository. And this, of course, is also not very good, not very convenient, not very flexible. You depend on strange things that weren't done for the company. Debian as an open source solution, as a Linux distribution, was nevertheless made for other tasks.

Let's talk a little more about Django. Why did we use it? Sitting in my chair just before this talk, I realized that 11 years ago I spoke at a conference in Kiev on the very topic "Why should I use Django?" Back then I liked it a lot. I was an enthusiastic developer who had read the documentation and built his first project, and who felt that this tool was now universally suitable for everything and that you could, I don't know, even hammer nails with it.

But a lot of time has passed. I still love Django, and it is still used quite a lot in our department and in the company in general. For example, until the fall of 2018 Alice had Django in its backend. It is no longer there, but colleagues chose it in order to get started quickly, because some of the advantages are still valid: a large ecosystem, plenty of specialists, and all the batteries you need.

And there is enough flexibility. When you first start working with Django, it seems to limit you a lot, tie your hands and demand a certain workflow. But if you dig a little deeper, many things can be disabled, changed or configured. If you use this skillfully, you get all the goodies that come with the most popular Python framework and can work around all the cons. There are many of those too, but they can be mitigated one way or another.

Age 2: iron + venv

We have finished with that era. The year 2011 came to an end, and we moved into the next era, "iron + venv". You already know about the iron; now I need to explain what happened and where the name venv comes from. A lyrical digression: venv did not come about because virtual machines appeared. Why the quotes? Because we had started trying all sorts of container-like things such as OpenVZ and LXC. Back then they were very immature, not like now, and they did not really take off with us. We still lived on shared clusters and still had to exist shoulder to shoulder with other projects on the same machines. We were looking for a solution.

For example, we switched from init to upstart and, a little later, to systemd, and gained flexibility in launching our applications. We abandoned FastCGI and started using either the WSGI interface or plain HTTP to communicate with the web server. By this point we were already using a more or less modern Python, and the ecosystem was very well developed. We switched to nginx as the web server, and in general everything was fine.
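For readers who have not seen the WSGI interface up close, here is a minimal sketch of what it asks of an application. It uses only the standard library and is not taken from any Yandex service; `application` and `call_app` are illustrative names. A WSGI server calls the application once per request with an `environ` dict and a `start_response` callback, and the helper below imitates that call without opening a socket.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    """Minimal WSGI app: a WSGI server calls this once per request."""
    body = b"Hello from a WSGI app\n"
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]


def call_app(app, path="/"):
    """Invoke a WSGI app the way a server would, without any network."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)  # fills in the remaining required WSGI keys
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = headers

    body = b"".join(app(environ, start_response))
    return captured["status"], body


status, body = call_app(application)
print(status, body)
```

Because the contract is just a callable, the same `application` object can be served by any WSGI-capable server behind nginx, which is what makes swapping the server layer comparatively painless.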

We also began to adopt new frameworks. For example, we started using Tornado. Of course, by that time Flask had already appeared; after 2012 Flask was very fashionable and popular and threatened to knock Django off its pedestal as the popular Python framework. And, of course, we began to use Celery. When projects grow in size and number, take on more load, solve more tasks and process more data, you need a framework for executing offline tasks on a large computing cluster. But more on that later.

What changed dramatically is how we work with dependencies. We began to build a virtual environment. Around that time the Python community arrived at the idea that you do not have to install Python libraries into the system: you can create a virtual environment, put all the Python dependencies you need there, and this environment will be completely independent. There can be as many such virtual environments on a machine as you like. This isolation is very convenient, and you still use it today. We adopted it too. What did we do in the end? We created a virtual environment, put all the Python dependencies there, packed it into a deb package and rolled that out to the server.
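A minimal sketch of the idea, using the standard-library `venv` module. This is an assumption made for the sake of a runnable example: `venv` has shipped with Python since 3.3, while in the Python 2 era described here the third-party virtualenv tool would have filled the same role. The directory names and the `create_env` helper are hypothetical.

```python
import os
import tempfile
import venv


def create_env(parent_dir, name):
    """Create an isolated virtual environment and return its path."""
    env_dir = os.path.join(parent_dir, name)
    # with_pip=False keeps the sketch fast and offline; a real project would
    # use with_pip=True and then install its pinned requirements.
    venv.EnvBuilder(with_pip=False).create(env_dir)
    return env_dir


with tempfile.TemporaryDirectory() as tmp:
    env_a = create_env(tmp, "service-a")
    env_b = create_env(tmp, "service-b")
    # Every environment gets its own pyvenv.cfg marker and its own
    # site-packages, so the two services no longer share Python libraries.
    created = [os.path.isfile(os.path.join(env, "pyvenv.cfg")) for env in (env_a, env_b)]
    print(created)
```

Two environments side by side on one machine is exactly what removes the need for every project to agree on a single framework version.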

As a result, projects stopped interfering with each other and stopped depending on shared dependencies in the system; each could safely choose which version of a framework or library to use. It is very convenient. Shared code changed too. Since we had partially abandoned the Debian infrastructure and, in particular, stopped installing Python dependencies as deb packages, we needed a place to upload all our common code and common libraries to and install them from.

By that time there were already several implementations of PyPI, that is, of a repository of Python packages, some of them written in Django. We chose one of them. It's called Localshop, here's a link... This repository is still alive, and there are already about a thousand internal packages in it. That is, since about 2011-2012 a company the size of Yandex has produced about a thousand different libraries and utilities written in Python that are meant to be reused in other projects. You can appreciate the scale.

All libraries are published to this internal repository. From there they are either installed as Python packages, or a special automated infrastructure converts them into Debian packages. It is all more or less automated and convenient. We stopped spending so many resources on maintaining the Debian infrastructure. It all more or less worked by itself.

And this is a qualitative step. Here is a diagram with what I was talking about.

That is, Python dependencies finally ceased to be the same for everyone. The system ones still remained, but there were not many of them. For example, a database driver or an XML parser required system binaries. At that time we could not get rid of these dependencies.

The development environment changed too. Since the virtual environment was available and worked everywhere by then, we could build our project on pretty much any local platform. This made life much easier. There was no need to mess with Debian anymore and no need to set up virtual machines. You could just take any OS, create a virtual environment and pip install something. And it worked.

What are the advantages of this scheme? We lived with this setup for quite a long time, maybe a little more than three years, and life on the shared "dormitory" clusters became easier. This is really convenient. We stopped depending on company-wide upgrades of, say, Django. We could choose the versions that suited us more precisely and update critical dependencies more often when they fixed vulnerabilities or something else. It was the right path; we liked it, and it saved us a lot.

But there were also disadvantages. System dependencies were still shared. Sometimes that backfired, sometimes it got in the way. Once again, I will step a little beyond our department and talk about the company. Since the company is large, not everyone kept pace with us through these eras. At that time, other parts of the company still used deb packages for Python dependencies.

Let me tell you in more detail why we started using particular frameworks. In particular, Tornado.

We needed an asynchronous framework because we had tasks that called for one. Python 3 and its asyncio did not exist yet, or were at such an early stage that they could not be relied on. So we were choosing which asynchronous framework to use. The options were Gevent and Twisted. Most likely there were more, but we chose among these. Twisted was already used in the company: for example, the Yandex.Taxi backend was written in Twisted. But we looked at them and decided that Twisted does not look Pythonic, it does not even follow PEP 8. And Gevent has some kind of hack with the Python stack inside. So we went with Tornado.

We have not regretted it. We still use Tornado in some services - in those that we have not yet rewritten in the third Python. The framework has proven over the years that it is compact, reliable and can be relied on.

And of course Celery. I have already touched on this. We needed a framework for the distributed execution of deferred tasks, and we got one.

It was very convenient that Celery supports different brokers. We used this actively, trying to find the right broker for each task. Somewhere it was Mongo, somewhere SQS, somewhere Redis. We tried to choose according to need, and we succeeded.

There are a lot of complaints about Celery: how it is written inside, how to debug it, what its logging is like. Nevertheless, on the whole it works. Celery is still actively used in almost every project in our department and, as far as I know, outside it as well. Celery is a must-have. If you need deferred task execution, everyone takes Celery. Or at first they don't, they try something else, but then they still come to Celery.

Let's move on to the next era. We are getting closer to the present, to more modern times. Here the name of the era speaks for itself.

Age 3: containers

Inside the company we got a Docker-compatible cloud. Under the hood it is not the Docker runtime but an in-house development, yet Docker images can be deployed there. It helped us a lot, because we could use the entire Docker ecosystem for development and testing. We could use all sorts of goodies and then simply upload the tested image to this cloud. It started up there and worked as it should.

By that time we no longer depended on which OS was inside the container. You could choose any. We, of course, did not use ordinary daemons but, for example, Supervisor. Later everyone switched to uWSGI: it turned out that uWSGI can do more than run your web applications and expose them to the web server. It is also simply a good generic tool for starting processes.

The configuration is a little strange, but in general it is convenient. We got rid of an unnecessary entity and started doing everything through uWSGI. We also use it to communicate with the web server. Because of the way our cloud works, we cannot use the uWSGI protocol to talk to the balancer, which is a global component of the cloud. But that is not a problem: the HTTP server inside uWSGI is implemented quite well and works quickly and reliably.

What about frameworks? The Falcon framework appeared, and we rewrote that same Alice from Django to Falcon, because it had a number of APIs that were not very complicated but had to work quickly.

Django at some point became somewhat redundant, so to increase speed and get rid of such a large dependency, such a large library, we decided to rewrite the service in Falcon.

And of course asyncio. We began to write new services in Python 3 and to rewrite the old ones in Python 3. In our department alone there are now about 30 services written in Python. These are 30 full-fledged products, each with a backend, a frontend and its own infrastructure. They process data and serve both internal and external consumers.

But the company as a whole, as you can imagine, has thousands of Python services, and they are all different. They use different frameworks and different Pythons, older and newer. The company now uses almost every modern framework you know: Django, Flask, Falcon and others, and on the asynchronous side Tornado, Twisted and asyncio. Everything is in use and brings value.
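To show what asyncio brings in the simplest terms, here is a standard-library sketch. It is not code from any of the services mentioned; `fetch` and the service names passed to it are placeholders, and `asyncio.sleep` stands in for real network calls. The point is that the three waits overlap instead of running one after another.

```python
import asyncio


async def fetch(name, delay):
    """Stand-in for a network call; awaiting sleep yields control to the event loop."""
    await asyncio.sleep(delay)
    return f"{name}: done"


async def main():
    # The three "requests" wait concurrently, so the total time is close to
    # the longest delay rather than the sum of all delays.
    return await asyncio.gather(
        fetch("auth", 0.03),
        fetch("storage", 0.02),
        fetch("search", 0.01),
    )


results = asyncio.run(main())
print(results)
```

`asyncio.gather` returns results in the order the coroutines were passed in, regardless of which one finishes first.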

Let's go back to the structure of the era - how we started working with dependencies.

Everything is simple here. Now we can do without a virtual environment. We don't need deb packages. While building the image, we simply install everything we need with pip. It is completely isolated. We don't bother anyone. And it is very convenient. As for system dependencies, you can choose any base image: Debian, Ubuntu, whatever we like. Full freedom.

But complete freedom, as you know, has a flip side. When you have a large company, and even more so when you want to promote uniform development practices, testing methods and approaches to documentation, you find that this zoo helps in some places and makes things harder in others. You cannot easily, for example, roll out some library to all services, because the services are different. They have different versions of Python, Django or some other framework. This complicates things. But in the end, a typical server began to look like this.

Yes, this is a server. We have completely independent containers. Each of them has its own system environment, and our applications run inside. Very convenient. But, as I said, there are drawbacks.

Let's go back to docker for a moment. We began to use docker for development, it helped us a lot.

Docker is available for all platforms. You can test, use docker-compose, set up Docker Swarm and try to emulate your production environment on small clusters to test something, maybe to do load testing. We began to use it actively.

Docker is also very well integrated with all sorts of development environments. For example, I am developing in PyCharm, and so are most of my colleagues. There is built-in support for docker, with its pros and cons, but in general everything works.

It has become very convenient; we have made a qualitative step, and this is the stage we are at now. It is convenient to develop with Docker, even though our target cloud, where we deploy our applications, is not a full-fledged Docker runtime and has some limitations. This still does not prevent us from using Docker Engine locally and in related tasks.

Let's take stock of this era. Pros - complete isolation, convenient toolchain for development and, as I said, IDE support.

There are also disadvantages. Docker is everywhere, but outside Linux it works a little strangely. Yandex developers who have a MacBook install Docker for Mac, and it has its quirks: for example, IPv6 works strangely or needs some tweaking. And IPv6 is very common in our company. We ran out of IPv4 addresses long ago, so the internal infrastructure is largely tied to IPv6. When IPv6 does not work on your laptop, or inside the Docker that runs on the laptop, you suffer and cannot really do anything, so we have to work around it.

Despite this, we love Docker very much. It is efficient and has a good ecosystem. People come to us from outside and we ask: do you know Docker? They say: yes, I do. Perfect. A person joins and almost immediately starts being useful, because there is no need to figure out how to start and build a project, how to run tests, how to read the compose file or some debug output. They already know everything. This is a de facto standard in the outside world; it increases our efficiency, and we can quickly deliver features to users instead of spending money on infrastructure.
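A quick way to see whether a given environment (a laptop, or a container running on it) has a usable IPv6 stack at all is a small probe like the one below. It is a sketch using only the standard `socket` module and is not a tool from the talk; `ipv6_loopback_works` is an illustrative name. It only checks that an IPv6 socket can be bound to the loopback address, so it says nothing about routing to an internal IPv6-only network.

```python
import socket


def ipv6_loopback_works():
    """Return True if an IPv6 TCP socket can be bound to the loopback address ::1."""
    if not socket.has_ipv6:
        # Python itself was built without IPv6 support.
        return False
    try:
        with socket.socket(socket.AF_INET6, socket.SOCK_STREAM) as sock:
            sock.bind(("::1", 0))  # port 0 lets the OS pick a free port
        return True
    except OSError:
        # Typical when IPv6 is disabled in the kernel or in the container.
        return False


ipv6_ok = ipv6_loopback_works()
print("IPv6 loopback available:", ipv6_ok)
```

Running the same probe on the host and inside a container makes it easier to tell which of the two layers is missing IPv6.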

Age 4: binary assembly

We are now approaching the last era, the one we are just entering. Here I will return to the beginning of my talk, where I said that you come to a large corporation and it has its own infrastructure approaches. It is the same with Yandex. Earlier it was the Debian infrastructure; now it is something different. For quite some time the company has had a single monolithic repository where all the code is gradually being collected. Around it there is a build mechanism, a distributed testing mechanism and a bunch of tools that we do not use yet but are starting to use. Our Python projects are also moving into this repository, and we try to build them with the same tools. But since the tooling of the single repository is tailored primarily to C++, Java and Go, there is a peculiarity.

Here it is. Right now the result of building our project is a Docker image, where our source code simply lies next to all its dependencies. We are moving towards the build result being just a binary: a single binary that contains the Python interpreter, our code and all the other necessary dependencies, statically linked.

The idea is that you can take this binary, drop it onto any Linux server with a compatible architecture and it will work. And that is true.

It seems a bit unnatural. Most people in the python community don't do that, and I'm sure you don't. This has its pros and cons. Pros:

- . , , , , , . , . , , . .

- , , , . , , . , . , checkout , , . .

- , .

And, of course, there is a minus: a closed ecosystem. An outsider has to be onboarded and told how it all works, has to try it out, and only then becomes effective. We are only at the beginning of this journey. Perhaps, if I come to this conference in a year or two, I will be able to tell you how we went through this transformation. For now we look to the future with optimism and follow the internal corporate rules, and on balance we like it, because we will get a lot of internal goodies.

Conclusions

They are more philosophical. The talk itself is not so much technical as philosophical.

- Evolution is inevitable. If you make a service and it lives for a long time, then you will evolve it, evolve its infrastructure, the way you develop it.

- . , , , .

- . , Django, . , . , , , Django - , . , .

- Python-. , , -. , , . , , , , , : , . , .

The topic is very big. I have told you very briefly how and what we do and how Python has evolved in our company. You could take each era and each point on a slide and analyze it in more depth, and each would fill 40 minutes on its own: you can talk for a very long time about dependencies, about internal open source and about infrastructure. I gave an overview. If any topic turns out to be popular, I can cover it at future meet-ups or conferences. Thank you.