Introduction

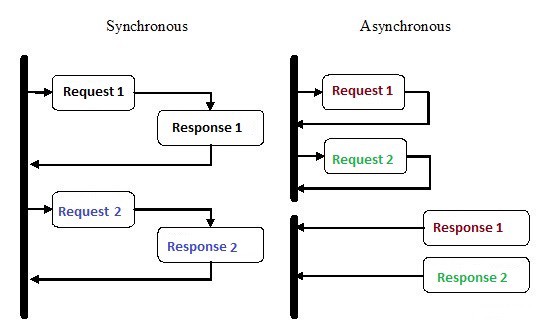

Asynchronous programming is a type of parallel programming in which a unit of work can be performed separately from the main application execution thread . When work is completed, the main thread is notified of the completion of the workflow or an error occurred. There are many benefits to this approach, such as improved application performance and increased response speed.

Over the past few years, asynchronous programming has attracted close attention, and there are reasons for this. While this kind of programming can be more complex than traditional sequential execution, it is much more efficient.

For example, instead of waiting for the HTTP request to complete before continuing, you can send the request and do other work that is waiting in line using asynchronous coroutines in Python.

Asynchrony is one of the main reasons for the popularity of Node.js for backend implementation. A lot of the code we write, especially in I / O heavy applications like websites, depends on external resources. It can contain anything from a remote database call to POST requests to a REST service. As soon as you send a request to one of these resources, your code will just wait for a response. With asynchronous programming, you let your code handle other tasks while you wait for a response from resources.

How does Python manage to do multiple things at the same time?

1. Multiple Processes

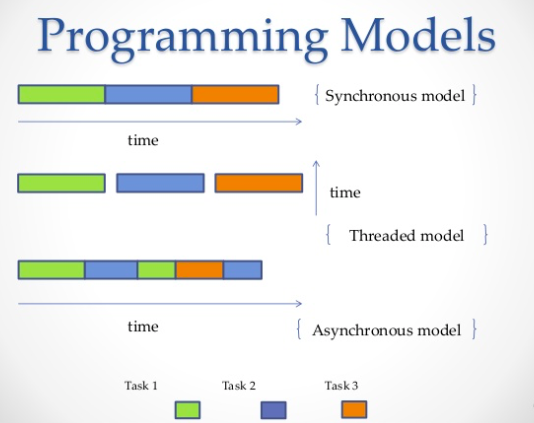

The most obvious way is to use multiple processes. From the terminal, you can run your script two, three, four, ten times, and all scripts will run independently and simultaneously. The operating system will take care of the distribution of processor resources between all instances. Alternatively, you can use the multiprocessing library , which can spawn several processes, as shown in the example below.

from multiprocessing import Process

def print_func(continent='Asia'):

print('The name of continent is : ', continent)

if __name__ == "__main__": # confirms that the code is under main function

names = ['America', 'Europe', 'Africa']

procs = []

proc = Process(target=print_func) # instantiating without any argument

procs.append(proc)

proc.start()

# instantiating process with arguments

for name in names:

# print(name)

proc = Process(target=print_func, args=(name,))

procs.append(proc)

proc.start()

# complete the processes

for proc in procs:

proc.join()Output:

The name of continent is : Asia

The name of continent is : America

The name of continent is : Europe

The name of continent is : Africa2. Multiple Threads

Another way to run multiple jobs in parallel is to use threads. A thread is an execution queue, which is very similar to a process, however, you can have multiple threads in a single process, and all of them will share resources. However, it will be difficult to write stream code because of this. Likewise, the operating system will do all the hard work of allocating processor memory, but a global interpreter lock (GIL) will only allow one Python thread to run at a time, even if you have multithreaded code. This is how the GIL on CPython prevents multi-core concurrency. That is, you can forcibly run on only one core, even if you have two, four or more.

import threading

def print_cube(num):

"""

function to print cube of given num

"""

print("Cube: {}".format(num * num * num))

def print_square(num):

"""

function to print square of given num

"""

print("Square: {}".format(num * num))

if __name__ == "__main__":

# creating thread

t1 = threading.Thread(target=print_square, args=(10,))

t2 = threading.Thread(target=print_cube, args=(10,))

# starting thread 1

t1.start()

# starting thread 2

t2.start()

# wait until thread 1 is completely executed

t1.join()

# wait until thread 2 is completely executed

t2.join()

# both threads completely executed

print("Done!")Output:

Square: 100

Cube: 1000

Done!3. Coroutines and

yield:

Coroutines are a generalization of subroutines. They are used for cooperative multitasking, when a process voluntarily surrenders control (

yield) at some frequency or in periods of waiting to allow several applications to work simultaneously. Coroutines are similar to generators , but with additional methods and minor changes in how we use the yield statement . Generators produce data for iteration, while coroutines can also consume data.

def print_name(prefix):

print("Searching prefix:{}".format(prefix))

try :

while True:

# yeild used to create coroutine

name = (yield)

if prefix in name:

print(name)

except GeneratorExit:

print("Closing coroutine!!")

corou = print_name("Dear")

corou.__next__()

corou.send("James")

corou.send("Dear James")

corou.close()Output:

Searching prefix:Dear

Dear James

Closing coroutine!!4. Asynchronous programming

The fourth way is asynchronous programming, in which the operating system is not involved. On the operating system side, you are left with one process with just one thread, but you can still perform multiple tasks at the same time. So what's the trick?

Answer:

asyncio

Asyncio- an asynchronous programming module that was introduced in Python 3.4. It is intended to use coroutine and future to simplify the writing of asynchronous code and makes it almost as readable as synchronous code, due to the lack of callbacks.

Asynciouses different constructions:, event loopcoroutines and future.

- event loop . .

- ( ) – , Python, await event loop. event loop. Tasks, Future.

- Future , . exception.

With help,

asyncioyou can structure your code so that subtasks are defined as coroutines and allow you to schedule them to run as you please, including at the same time. Coroutines contain points yieldat which we define possible context switching points. If there are tasks in the waiting queue, the context will be switched, otherwise it will not.

A context switch in

asynciois event loop, which transfers control flow from one coroutine to another.

In the following example, we run 3 asynchronous tasks that individually make requests to Reddit, retrieve and output JSON content. We use aiohttp - an http client library that ensures that even an HTTP request is made asynchronously.

import signal

import sys

import asyncio

import aiohttp

import json

loop = asyncio.get_event_loop()

client = aiohttp.ClientSession(loop=loop)

async def get_json(client, url):

async with client.get(url) as response:

assert response.status == 200

return await response.read()

async def get_reddit_top(subreddit, client):

data1 = await get_json(client, 'https://www.reddit.com/r/' + subreddit + '/top.json?sort=top&t=day&limit=5')

j = json.loads(data1.decode('utf-8'))

for i in j['data']['children']:

score = i['data']['score']

title = i['data']['title']

link = i['data']['url']

print(str(score) + ': ' + title + ' (' + link + ')')

print('DONE:', subreddit + '\n')

def signal_handler(signal, frame):

loop.stop()

client.close()

sys.exit(0)

signal.signal(signal.SIGINT, signal_handler)

asyncio.ensure_future(get_reddit_top('python', client))

asyncio.ensure_future(get_reddit_top('programming', client))

asyncio.ensure_future(get_reddit_top('compsci', client))

loop.run_forever()Output:

50: Undershoot: Parsing theory in 1965 (http://jeffreykegler.github.io/Ocean-of-Awareness-blog/individual/2018/07/knuth_1965_2.html)

12: Question about best-prefix/failure function/primal match table in kmp algorithm (https://www.reddit.com/r/compsci/comments/8xd3m2/question_about_bestprefixfailure_functionprimal/)

1: Question regarding calculating the probability of failure of a RAID system (https://www.reddit.com/r/compsci/comments/8xbkk2/question_regarding_calculating_the_probability_of/)

DONE: compsci

336: /r/thanosdidnothingwrong -- banning people with python (https://clips.twitch.tv/AstutePluckyCocoaLitty)

175: PythonRobotics: Python sample codes for robotics algorithms (https://atsushisakai.github.io/PythonRobotics/)

23: Python and Flask Tutorial in VS Code (https://code.visualstudio.com/docs/python/tutorial-flask)

17: Started a new blog on Celery - what would you like to read about? (https://www.python-celery.com)

14: A Simple Anomaly Detection Algorithm in Python (https://medium.com/@mathmare_/pyng-a-simple-anomaly-detection-algorithm-2f355d7dc054)

DONE: python

1360: git bundle (https://dev.to/gabeguz/git-bundle-2l5o)

1191: Which hashing algorithm is best for uniqueness and speed? Ian Boyd's answer (top voted) is one of the best comments I've seen on Stackexchange. (https://softwareengineering.stackexchange.com/questions/49550/which-hashing-algorithm-is-best-for-uniqueness-and-speed)

430: ARM launches “Facts” campaign against RISC-V (https://riscv-basics.com/)

244: Choice of search engine on Android nuked by “Anonymous Coward” (2009) (https://android.googlesource.com/platform/packages/apps/GlobalSearch/+/592150ac00086400415afe936d96f04d3be3ba0c)

209: Exploiting freely accessible WhatsApp data or “Why does WhatsApp web know my phone’s battery level?” (https://medium.com/@juan_cortes/exploiting-freely-accessible-whatsapp-data-or-why-does-whatsapp-know-my-battery-level-ddac224041b4)

DONE: programmingUsing Redis and Redis Queue RQ

Using

asyncioand is aiohttpnot always a good idea, especially if you are using older versions of Python. In addition, there are times when you need to distribute tasks across different servers. In this case, RQ (Redis Queue) can be used. This is the usual Python library for adding jobs to the queue and processing them by workers in the background. To organize the queue, Redis is used - a database of keys / values.

In the example below, we added a simple function to the queue

count_words_at_urlusing Redis.

from mymodule import count_words_at_url

from redis import Redis

from rq import Queue

q = Queue(connection=Redis())

job = q.enqueue(count_words_at_url, 'http://nvie.com')

******mymodule.py******

import requests

def count_words_at_url(url):

"""Just an example function that's called async."""

resp = requests.get(url)

print( len(resp.text.split()))

return( len(resp.text.split()))Output:

15:10:45 RQ worker 'rq:worker:EMPID18030.9865' started, version 0.11.0

15:10:45 *** Listening on default...

15:10:45 Cleaning registries for queue: default

15:10:50 default: mymodule.count_words_at_url('http://nvie.com') (a2b7451e-731f-4f31-9232-2b7e3549051f)

322

15:10:51 default: Job OK (a2b7451e-731f-4f31-9232-2b7e3549051f)

15:10:51 Result is kept for 500 secondsConclusion

As an example, let's take a chess exhibition where one of the best chess players competes against a large number of people. We have 24 games and 24 people with whom you can play, and if the chess player plays with them synchronously, it will take at least 12 hours (provided that the average game takes 30 moves, the chess player thinks through the move for 5 seconds, and the opponent takes about 55 seconds.) However, in the asynchronous mode, the chess player will be able to make a move and leave time for the opponent to think, while moving on to the next opponent and dividing the move. Thus, it is possible to make a move in all 24 games in 2 minutes, and they can all be won in just one hour.

This is what is meant when they say that asynchrony speeds up work. We are talking about such speed. A good chess player does not start playing chess faster, just time is more optimized, and it is not wasted on waiting. This is how it works.

By this analogy, the chess player will be a processor, and the main idea will be to keep the processor idle as little as possible. It's about always having something to do.

In practice, asynchrony is defined as a parallel programming style in which some tasks free the processor during periods of waiting so that other tasks can use it. Python has several ways to achieve concurrency to suit your needs, code flow, data handling, architecture, and use cases, and you can choose from any of them.

.