AMD is one of the oldest large-scale microprocessor makers, and it has been a subject of heated debate among technology enthusiasts for nearly fifty years. The company's history makes for a thrilling story, full of heroic successes, reckless mistakes and close brushes with ruin. While other semiconductor companies have come and gone, AMD has weathered many storms and fought many battles in boardrooms, in courtrooms and on store shelves.

In this article, we look back at the company's past, trace the winding path that led it to where it is today, and consider what lies ahead for this Silicon Valley veteran.

A quest for fame and fortune

To begin our story, we need to travel back to America in the late 1950s. The country, flourishing after the difficult years of World War II, had become a hotbed of cutting-edge technological innovation.

Companies such as Bell Laboratories, Texas Instruments and Fairchild Semiconductor hired the best engineers and, one after another, released products that were firsts in their field: the bipolar transistor, the integrated circuit and the MOSFET (MOS transistor).

Fairchild engineers circa 1960: Gordon Moore (far left) and Robert Noyce (center foreground)

These young engineers wanted to research and develop ever more amazing products. However, senior management was cautious, remembering a time when the world was fearful and unstable, and so many of the engineers felt the urge to strike out on their own.

So in 1968, two Fairchild Semiconductor engineers, Robert Noyce and Gordon Moore, left the company and went their own way. NM Electronics opened its doors that summer and was renamed Integrated Electronics, or Intel for short, shortly afterwards.

Others followed. Less than a year later, eight more people left Fairchild to set up their own electronics design and manufacturing company: Advanced Micro Devices (AMD, naturally).

The group was led by Jerry Sanders, Fairchild's former director of marketing. They started out by redesigning Fairchild and National Semiconductor parts rather than competing directly with companies such as Intel, Motorola and IBM, which spent significant sums on researching and developing new integrated circuits.

From these modest beginnings, and after moving from Santa Clara to Sunnyvale within a few months, AMD began making products that boasted improved efficiency, stress tolerance and speed. These microchips were designed to meet US military quality standards. That gave the company a significant advantage in the still-young computer industry, where reliability and manufacturing consistency varied greatly.

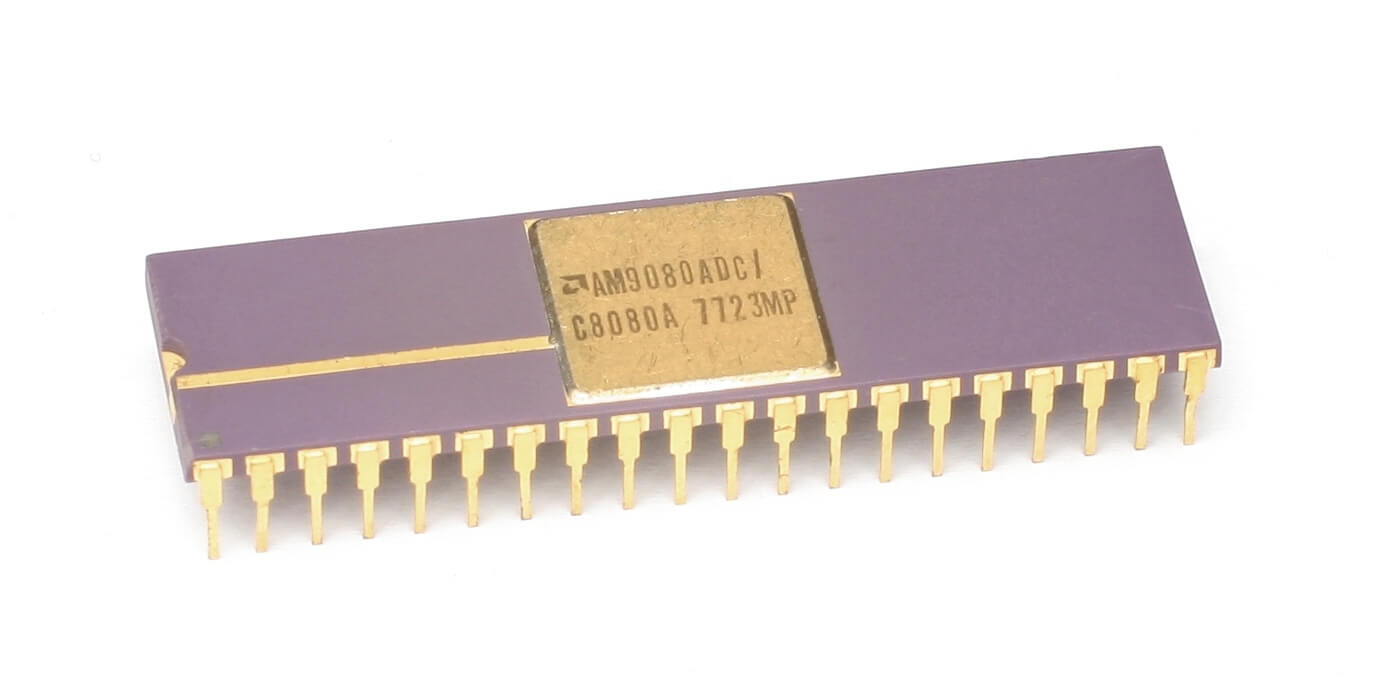

AMD's first processor, the Am9080. Image: Wikipedia

By 1974, when Intel released the 8080 (the successor to the 8008, its first 8-bit microprocessor), AMD was already a public company. It had a portfolio of more than 200 products, a quarter of which were its own designs, including RAM chips, logic counters and shift registers. The following year brought a wave of new models: AMD's own Am2900 family of integrated circuits (ICs) and the 2 MHz 8-bit Am9080 processor, a reverse-engineered clone of Intel's 8080. The former was a set of components whose functions are now fully integrated into CPUs and GPUs. Back then, however, arithmetic logic units and memory controllers were still separate chips.

By modern standards, such blatant copying of Intel's design may seem shocking, but it was fairly typical of the early days of the microchip. The clone was later renamed the 8080A after AMD and Intel signed a cross-licensing agreement in 1976. You might imagine this cost a pretty penny, but the price was just $325,000 ($1.65 million in today's dollars).

The deal allowed AMD and Intel to flood the market with enormously profitable chips that retailed for just over $350 (twice that for military-grade versions). The 8085 (3 MHz) followed in 1977, soon joined by the 8086 (8 MHz). Improvements in design and manufacturing led to the 8088 (5 to 10 MHz) in 1979, the same year production began at AMD's facility in Austin, Texas.

In 1981, IBM began moving from mainframes into what it called "personal computers" (PCs). The company decided to outsource key components rather than make them in-house. It chose Intel's x86 architecture: the original IBM PC used the 8088, a variant of the 8086, which was the first x86 processor. However, IBM stipulated that AMD act as a second source to guarantee a continuous supply of processors for its PCs.

Any color you like, as long as it's beige: the 1981 IBM 5150 PC

In February 1982, AMD and Intel signed a contract that gave AMD the right to make the 8086, 8088, 80186 and 80188 processors. These were supplied not only to IBM but also to the many makers of IBM clones, such as Compaq. Toward the end of 1982, AMD also began producing the 16-bit Intel 80286 under the Am286 name.

The 286 would go on to become the first truly significant desktop PC processor. Intel's versions typically ran at 6 to 10 MHz, but AMD's started at 8 MHz and went as high as a whopping 20 MHz. This marked the start of the battle for dominance of the CPU market between two of Silicon Valley's most powerful forces: whatever Intel designed, AMD set out to improve.

The young PC market was growing enormously during this period. When Intel noticed that AMD was selling the Am286 at significantly higher clock speeds than its own 80286, it tried to stop AMD in its tracks by refusing to license the next-generation 386 processor to it.

AMD sued, but the proceedings took four and a half years to conclude. The ruling held that Intel was not obliged to share every new product with AMD. However, it also found that Intel had breached the implied covenant of good faith and fair dealing.

Intel's refusal came at a critical time, just as the IBM PC's share of the market grew from 55% to 84%. Without access to the new processor's specifications, AMD spent more than five years reverse-engineering the 80386 before releasing its own version, the Am386. Once again, AMD's chip proved superior to Intel's. The original 386 launched in 1985 at just 12 MHz and later reached 33 MHz, whereas the fastest version, the Am386DX, arrived in 1991 running at 40 MHz.

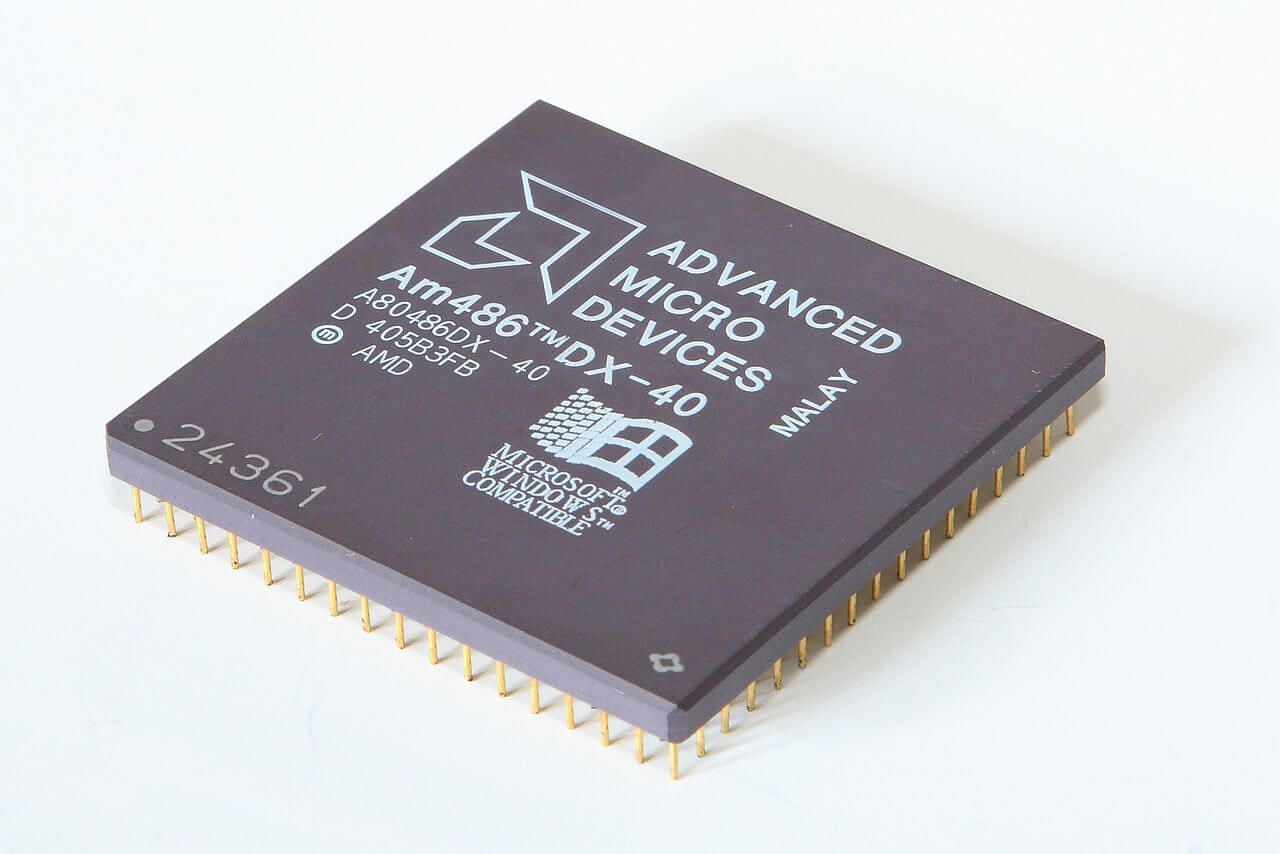

The Am386 was followed in 1993 by the very attractive 40 MHz Am486, which offered about 20% more performance than Intel's 33 MHz i486 at the same price. The pattern repeated across the entire 486 line: Intel's 486DX peaked at 100 MHz, while AMD offered a faster 120 MHz alternative. To illustrate AMD's success during this period, the company's revenue doubled from $1 billion in 1990 to over $2 billion in 1994.

In 1995, AMD released the Am5x86 as the successor to the 486 and positioned it as an upgrade for older computers. The Am5x86 P75+ ran at 150 MHz. The "P75" rating meant its performance was comparable to Intel's Pentium 75, and the "+" indicated that the AMD chip was slightly faster at integer math than its rival.

In response, Intel changed the naming of its products to set them apart from competitors' chips. The Am5x86 brought AMD significant revenue, both from new sales and from upgrades of 486-based machines. As with the Am286, Am386 and Am486, AMD continued to widen the market for these parts by also offering them as embedded solutions.

In March 1996, AMD released the first processor developed entirely by its own engineers: the 5k86, later renamed K5. The chip had to compete with Intel's Pentium and the Cyrix 6x86, so executing the project well was critical for AMD. Its floating-point unit was meant to be far more powerful than Cyrix's and roughly equal to that of the Pentium 100. Its integer performance was meant to match the Pentium 200.

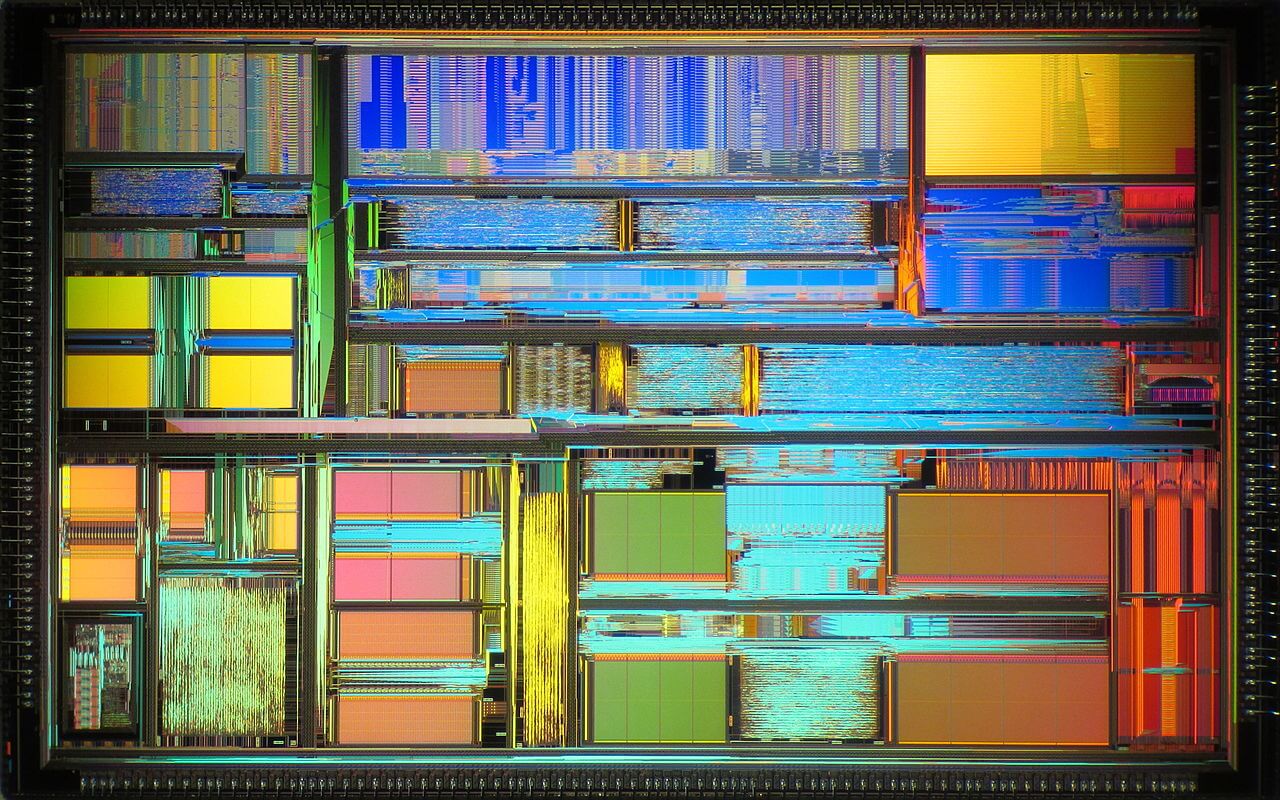

A false-color image of a K5 die. Image: Wikipedia

In the end, the opportunity was lost because the project was plagued by architectural and manufacturing problems. The processors failed to reach their target clock speeds and performance and arrived on the market late, which led to poor sales.

By then, AMD had paid $857 million for NexGen, a small chip design company with no manufacturing facilities of its own; its processors were made by IBM. AMD's K5 and the K6 then in development struggled to scale to higher clock speeds (150 MHz and above), while NexGen's Nx686 already ran at 180 MHz. After the acquisition, the Nx686 became the AMD K6, and AMD's original K6 design was scrapped.

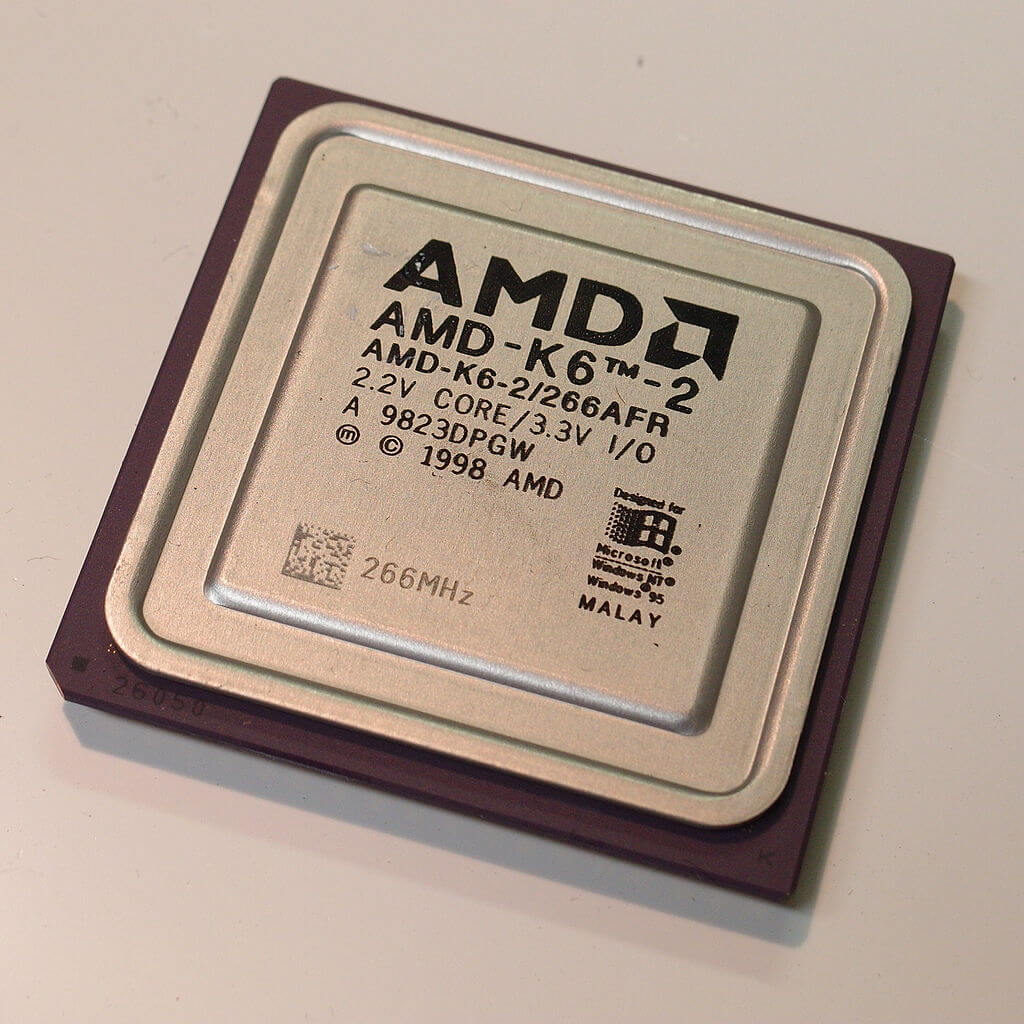

The K6-2 introduced AMD's 3DNow! instruction set, based on the SIMD (single instruction, multiple data) principle.

AMD's rise mirrored Intel's decline. It began with the K6 architecture, which competed with Intel's Pentium, Pentium II and Pentium III. The K6 accelerated AMD's progress, and for that, credit goes to the intelligence and talent of Vinod Dham ("the father of the Pentium"), a former Intel engineer who left Intel for NexGen in 1995.

When the K6 hit the shelves in 1997, it was a very respectable alternative to the Pentium MMX, and it went from strength to strength. The first model ran at 233 MHz. The "Little Foot" revision (January 1998) reached 300 MHz, and the K6-2 "Chomper" (May 1998) reached 350 MHz. The "Chomper Extended" revision (September 1998) eventually hit an astonishing 550 MHz.

With the K6-2 came AMD's 3DNow! SIMD instructions. They were conceptually similar to the SSE extensions Intel introduced the following year, but offered an easier route to the processor's floating-point capabilities. The downside was that programmers had to build the new instructions into their code, and patches and compilers had to be rewritten to take advantage of the feature.

Like the original K6, the K6-2 was far better value than its rival, often costing half as much as Intel's Pentium chips. The final K6 version, the K6-III, was a more complex processor. Its transistor count rose to 21.4 million, up from 8.8 million in the first K6 and 9.4 million in the K6-2.

It also included AMD PowerNow!, which adjusted clock speed dynamically according to the workload. The K6-III eventually reached 570 MHz. However, it was fairly expensive to make and had a rather short life, cut short by the arrival of the K7, which was better equipped to compete with the Pentium III and later models.

1999 marked the zenith of AMD's golden age. The arrival of the K7 processor under the Athlon brand showed that AMD's products were no longer just a cheaper, cloned alternative.

Athlon processors, starting at 500 MHz, used the new Slot A and the EV6 system bus licensed from DEC. The bus ran at 200 MHz, well ahead of the 133 MHz bus Intel was using. June 2000 saw the launch of the Athlon Thunderbird, a CPU widely praised for its overclocking potential. It added support for DDR memory (via new chipsets) and a full-speed Level 2 cache on the die.

2 GHz of 64-bit processing power. Image: Wikipedia

Thunderbird and its successors (Palomino, Thoroughbred, Barton and Thorton) battled the Pentium 4 through the first five years of the millennium, usually at a lower price but always with better performance. In September 2003, the Athlon line was upgraded with the K8 (codenamed ClawHammer). It is better known as the Athlon 64 because it added a 64-bit extension to the x86 instruction set.

Many consider this a defining moment for AMD. Meanwhile, Intel's pursuit of megahertz at any cost turned its NetBurst architecture into a textbook developmental dead end.

Both profit and operating income were excellent for such a relatively small company. Although its revenue fell short of Intel's, AMD was proud of its success and hungry for more. But when you reach the top of the highest mountain, you have to fight hard to stay there; otherwise, there is only one way to go.

Paradise lost

No single cause brought AMD down from its lofty position. The global economic crisis, internal mismanagement, poor financial forecasting, complacency born of success, and Intel's fortunes and misdeeds all played a part in one way or another.

Let's look at the situation in early 2006. The CPU market was saturated with AMD and Intel products, but AMD had processors such as the outstanding K8-based Athlon 64 FX series. The FX-60 was a 2.6 GHz dual-core processor, while the FX-57 was a single-core chip running at 2.8 GHz.

Both processors outperformed everything else on the market, as reviews from the time show. They were also very expensive: the FX-60 retailed for over $1,000. However, Intel's most powerful processor, the 3.46 GHz Pentium Extreme Edition 955, carried the same price tag. AMD also appeared to have the upper hand in the workstation and server market, where Opteron chips outperformed Intel's Xeon processors.

Intel's problem was NetBurst, an architecture with an extremely deep pipeline. It needed very high clock speeds to be competitive, which in turn drove up power consumption and heat output. The architecture had hit its limits and could no longer scale. Intel abandoned it and, for the Pentium 4's successor, went back to the architecture of its older Pentium Pro and Pentium M processors.

Under this program, Intel first designed Yonah for mobile platforms, followed in July 2006 by the dual-core Conroe for desktops. Intel was so keen to save face that it kept the Pentium name only for low-budget models and replaced it with Core elsewhere. Thirteen years of brand dominance ended in an instant.

The switch to high-performance, low-power chips came at exactly the right moment for a changing market. Almost instantly, Intel regained the performance crown in both the mainstream and high-end segments. By the end of 2006, AMD had been knocked off the performance summit, but what truly sent it tumbling was a disastrous management decision.

Three days before Intel launched the Core 2 Duo, AMD issued a statement endorsed by CEO Hector Ruiz (Sanders had retired four years earlier). On July 24, 2006, AMD announced its intention to acquire graphics card maker ATI Technologies. The deal was worth $5.4 billion: $4.3 billion in cash and loans, plus $1.1 billion paid in 58 million AMD shares. It was a huge financial gamble, equal to 50% of AMD's market capitalization, and while the purchase made strategic sense, the price was far from justified.

ATI was grossly overvalued: like Nvidia, it generated nowhere near the revenue to justify such a price. ATI also owned no manufacturing plants, so its value lay almost entirely in its intellectual property.

AMD eventually admitted its mistake, taking a $2.65 billion write-down to reflect ATI's overvaluation.

To gauge the scale of the management misjudgment, consider the sale of ATI's Imageon handheld graphics division to Qualcomm for just $65 million. That unit is now called Adreno (an anagram of "Radeon"), and its graphics have become an integral part of Qualcomm's Snapdragon SoCs.

Xilleon, a 32-bit SoC for digital TVs and set-top boxes, was sold to Broadcom for $192.8 million.

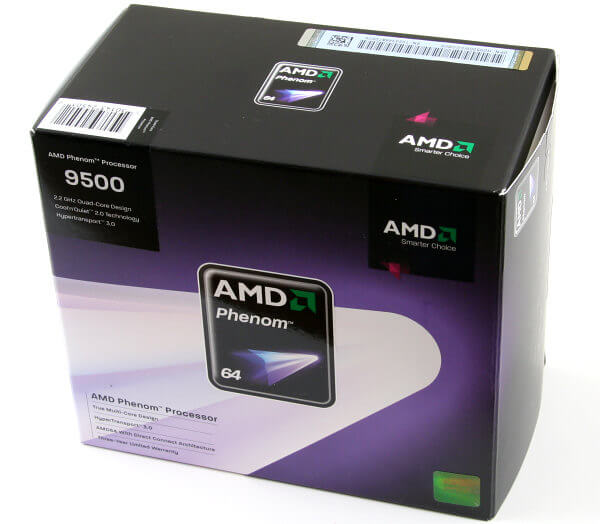

On top of the money it had spent so unwisely, AMD greatly disappointed consumers with its response to Intel's new architecture. Two weeks after Core 2 launched, AMD President Dirk Meyer announced the completion of a new K10 processor, Barcelona. This was the company's decisive move in the server market, because Barcelona was a powerful quad-core processor, while Intel was still producing only dual-core Xeon chips at the time.

The new Opteron launched to much fanfare in September 2007, but it failed to steal Intel's thunder. AMD officially halted shipments after discovering a bug that, in rare cases, could cause system lockups involving nested cache writes. Despite its rarity, this TLB bug put K10 production on hold. A BIOS patch eventually fixed the problem on processors already shipped, but at the cost of roughly 10% of their performance. By the time the revised "B3 stepping" processors arrived six months later, the damage had been done, both financially and to AMD's reputation.

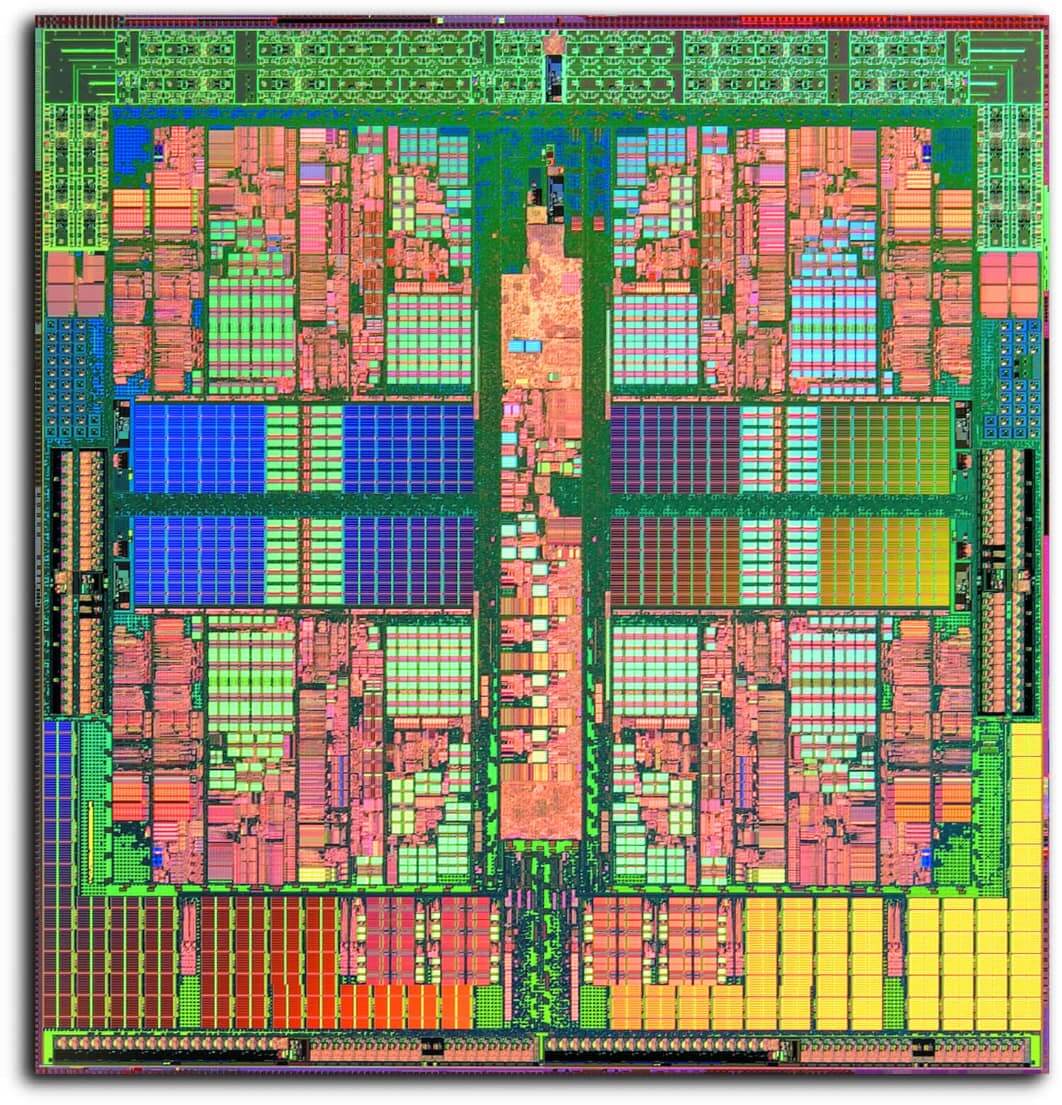

Toward the end of 2007, AMD brought the quad-core K10 to the desktop market. By then, Intel had pulled ahead and released the now-famous Core 2 Quad Q6600. On paper, the K10 was the more advanced design: all four cores sat on a single die, whereas the Q6600 used two separate dies. However, AMD struggled to reach its target clock speeds, and the fastest version of the new CPU ran at just 2.3 GHz. That was 100 MHz slower than the Q6600, yet the AMD chip cost slightly more.

The most puzzling aspect of all this, though, was AMD's decision to introduce a new model name: Phenom. Intel had switched to Core because the Pentium name had become synonymous with high prices and power consumption combined with rather poor performance. The Athlon name, by contrast, was well known to computer enthusiasts and associated with speed. The first Phenom wasn't a bad chip as such. It simply wasn't as good as the Core 2 Quad Q6600, which was already on the market, and Intel already had faster products as well.

Oddly, AMD seemed to deliberately steer clear of advertising. Nor was it ever a significant player on the software side of the business. That is a curious way to run any company, let alone one competing in the semiconductor industry.

No review of this era in AMD's history would be complete without mentioning Intel's anti-competitive practices. AMD had to fight not only Intel's chips but also Intel's efforts to shore up its monopoly, which included paying OEMs huge sums (billions of dollars in total) to actively avoid using AMD processors in new computers. In the first quarter of 2007 alone, Intel paid Dell $723 million to remain its sole supplier of processors and chipsets. That sum was equal to 76% of Dell's total operating income of $949 million. AMD later won $1.25 billion in a settlement. That seems surprisingly small, but it probably reflects the fact that, while Intel was carrying out its schemes, AMD itself could not supply enough processors to its customers.

This is not to say that Intel needed to do any of this. Unlike AMD, it had well-defined goals, a far broader range of products and more intellectual property. It also had unrivaled cash reserves: by the end of the first decade of the century, Intel was posting more than $40 billion in revenue and $15 billion in operating income. This allowed it to set aside huge budgets for marketing, research and software development, as well as for manufacturing facilities tailored precisely to its own products and schedules. These factors alone guaranteed that AMD would have to fight for every bit of market share.

The multibillion-dollar overpayment for ATI and the interest on the accompanying loans, the failed successor to the K8, and the problems getting chips to market were all heavy blows. But things were about to get even worse.

One step forward, one sideways and a few backwards

In 2010, the global economy was still grappling with the aftermath of the 2008 financial crisis. AMD had previously divested its flash memory business and spun off its chip fabrication plants, which eventually became GlobalFoundries, a company AMD still uses for some of its products. About 10% of the workforce was laid off. With all the cost-cutting, AMD had to rein in its ambitions and concentrate entirely on processor design.

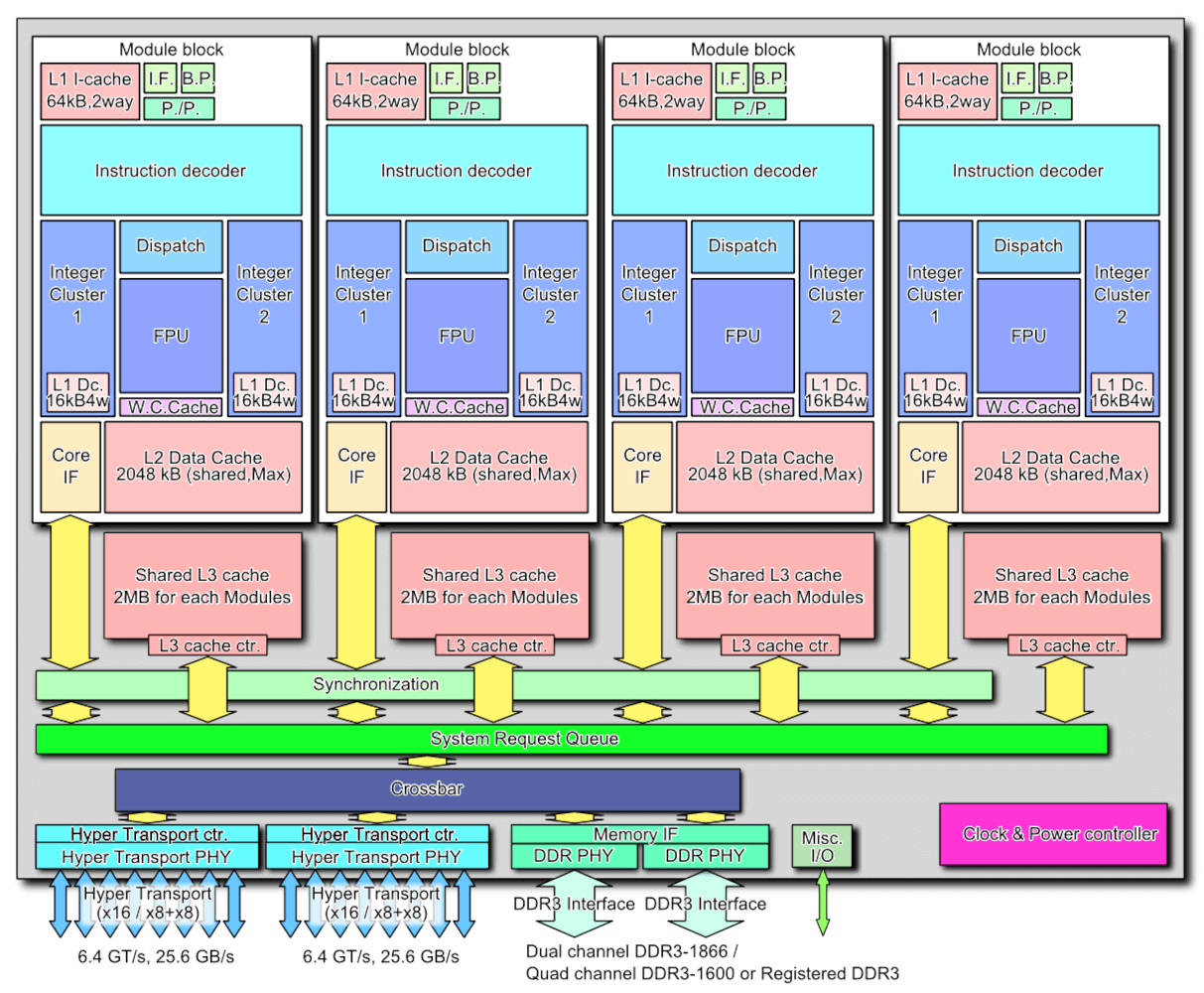

Instead of refining the K10 design, AMD started afresh, and the new Bulldozer architecture arrived toward the end of 2011. The K8 and K10 were conventional multi-core designs, with fully independent cores each running a single thread. The new design, by contrast, was classed as "clustered multithreading."

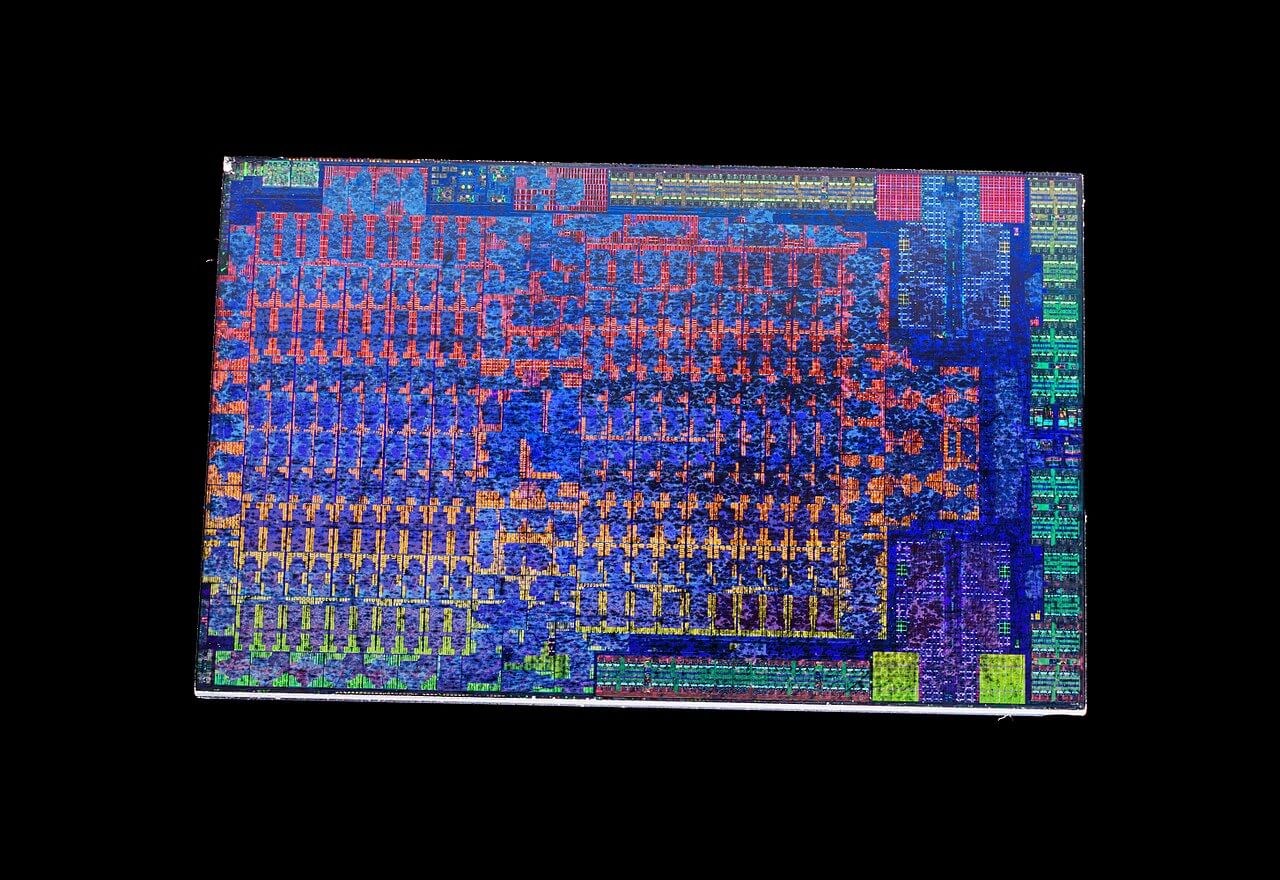

Bulldozer's four-module structure. Image: Wikipedia

For Bulldozer, AMD took a modular approach. Each cluster (or module) contained two integer cores, but they were not truly independent. They shared the L1 instruction cache, the L2 cache, the instruction fetch/decode unit and the floating-point unit. AMD even went so far as to drop the Phenom name and revive the glory days of the Athlon 64 FX, giving the first Bulldozer processors the simple name AMD FX.

The point of all these changes was to shrink the chips and improve their power efficiency. A smaller die means more chips per wafer, and therefore higher profits. Better power efficiency leaves headroom for higher clock speeds. The architecture's scalability should also have made it suitable for more market segments.

The top model at the October 2011 launch, the FX-8150, had four modules but was marketed as an eight-core, eight-thread processor. By then, processors ran at multiple clock speeds: the FX-8150 had a base clock of 3.6 GHz and a turbo clock of 4.2 GHz. However, the chip measured 315 square millimeters and had a peak power consumption of over 125 W. Intel had already released the Core i7-2600K, a conventional four-core, eight-thread CPU running at up to 3.8 GHz. It was significantly smaller than the new AMD chip, at just 216 square millimeters, and consumed 30 W less.

On paper, the new FX should have dominated, but its performance proved quite disappointing. Its ability to run many threads at once sometimes shone through, but its single-threaded performance was often no better than that of the Phenom line, despite higher clock speeds.

Having invested millions of dollars in Bulldozer R&D, AMD was not about to abandon the architecture, and by this point the ATI purchase was starting to bear fruit. AMD's first attempt at combining a CPU and GPU in one package, called Fusion, had been conceived in the previous decade, but it reached the market late and proved disappointingly weak. Still, the project gave AMD a way into other markets. Earlier in 2011, it had also released another new architecture, called Bobcat.

AMD's combined CPU and GPU chip in the PlayStation 4. Image: Wikipedia

Bobcat targeted low-power devices: embedded systems, tablets and laptops. Its design was the polar opposite of Bulldozer's, with just a few pipelines and little else. A few years later, Bobcat received a long-awaited update and evolved into the Jaguar architecture, which Microsoft and Sony chose in 2013 for the Xbox One and PlayStation 4.

Margins were probably slim, since consoles are usually built to the lowest possible cost. Even so, both platforms sold millions of units, highlighting AMD's ability to build custom SoCs.

Over the following years, AMD kept refining the Bulldozer architecture. The first revision was Piledriver, which gave us the FX-9590, a monster running at 5 GHz and drawing 220 W. Steamroller and the final version, Excavator, focused more on reducing power consumption than on adding new capabilities. Excavator's development began in 2011, and it was released four years later.

By then, AMD's processor naming had become confusing, to say the least. Phenom was long gone, and FX had a rather poor reputation. AMD dropped these names and simply branded its Excavator-based desktop processors as the A-series.

The company's Radeon graphics division had its own ups and downs. AMD kept the ATI brand until 2010, then replaced it with its own name. In late 2011, it also completely overhauled ATI's GPU architecture with the release of Graphics Core Next (GCN). GCN kept evolving for another eight years, finding its way into consoles, desktops, workstations and servers. It is still used today as the integrated GPU in the company's APUs.

The first implementation of Graphics Core Next, the Radeon HD 7970

GCN processors grew into impressively powerful chips, but their design made it hard to extract that performance in practice. The most powerful version AMD built, the Vega 20 GPU in the Radeon VII, offered 13.4 TFLOPS of processing power and 1,024 GB/s of memory bandwidth. In games, however, it simply couldn't reach the heights of Nvidia's best cards.

Radeon products often earned a reputation for running hot and noisy and for being very power hungry. The first GCN iteration, in the HD 7970, drew well over 200 W at full load, but it was built on TSMC's relatively large 28 nm process. By the time GCN matured in the Vega 10 chip, it was being made by GlobalFoundries on a 14 nm process. Power consumption was no better, though: the Radeon RX Vega 64, for example, drew up to around 300 W.

Although AMD had a decent range of products, it could neither match its rivals' performance nor earn enough money.

| Fiscal year | Revenue ($ billion) | Gross margin | Operating income ($ million) | Net income ($ million) |
|---|---|---|---|---|
| 2016 | 4.27 | 23% | -372 | -497 |
| 2015 | 4.00 | 27% | -481 | -660 |
| 2014 | 5.51 | 33% | -155 | -403 |
| 2013 | 5.30 | 37% | 103 | -83 |
| 2012 | 5.42 | 23% | -1060 | -1180 |
| 2011 | 6.57 | 45% | 368 | 491 |

By the end of 2016, the company had posted a net loss for the fifth year in a row. Its 2012 results were hurt by a charge of around $700 million related to its final separation from GlobalFoundries. Debt remained high despite the sale of its fabs and other divisions, and even the success of the Xbox and PlayStation was not enough to help.

In short, AMD was in serious trouble.

New stars

There was nothing left to sell, and no major investment on the horizon that could save the company. AMD could do only one thing: double down and restructure. In 2012, it hired two people who would play vital roles in its revival.

Jim Keller, former lead architect of the K8, returned after a 13-year absence to lead two projects. One was an ARM-based architecture for the server market. The other was a standard x86 architecture, with Mike Clark (Bulldozer's lead designer) as its lead architect.

They were joined by Lisa Su, formerly senior vice president and general manager at Freescale Semiconductor, who took up the same role at AMD. She and company president Rory Read are widely credited with driving AMD's expansion beyond the PC, particularly into the console market.

Lisa Su (center) and Jim Keller (right)

Two years after Keller's return to R&D, CEO Rory Read left the company and Lisa Su was promoted to replace him. Su held a doctorate in electrical engineering from MIT and had experience developing silicon-on-insulator (SOI) MOSFETs. She therefore had both the scientific knowledge and the industry experience needed to restore AMD's fortunes. But in the world of large-scale processors, nothing happens overnight: designing a chip takes several years at best. Until its plans bore fruit, AMD would have to ride out the storm.

While AMD struggled to survive, Intel went from strength to strength. Its Core architecture and manufacturing processes steadily improved, and by the end of 2016 the company was reporting nearly $60 billion in revenue. For several years, Intel followed a "tick-tock" development model: a "tick" was a die shrink to a new, smaller manufacturing process, and a "tock" was a new microarchitecture.

Behind the scenes, however, things were not so rosy despite the huge revenues and near-total market dominance. In 2012, Intel was expected to begin producing processors on an advanced 10 nm process within three years. That shrink never arrived on schedule, and in fact no genuinely new architecture did either. The first 14 nm CPUs, based on the Broadwell architecture, appeared in 2015. After that, the process node and the fundamental design remained essentially unchanged for five years.

Intel's engineers kept running into manufacturing problems at 10 nm, which forced the company to refine its existing process and architecture year after year. Clock speeds and power consumption kept climbing, but no new architecture was in sight, much like the NetBurst era. PC buyers faced an unappealing choice: pay a hefty price for something from the powerful Core line, or settle for the weaker but cheaper FX and A-series chips.

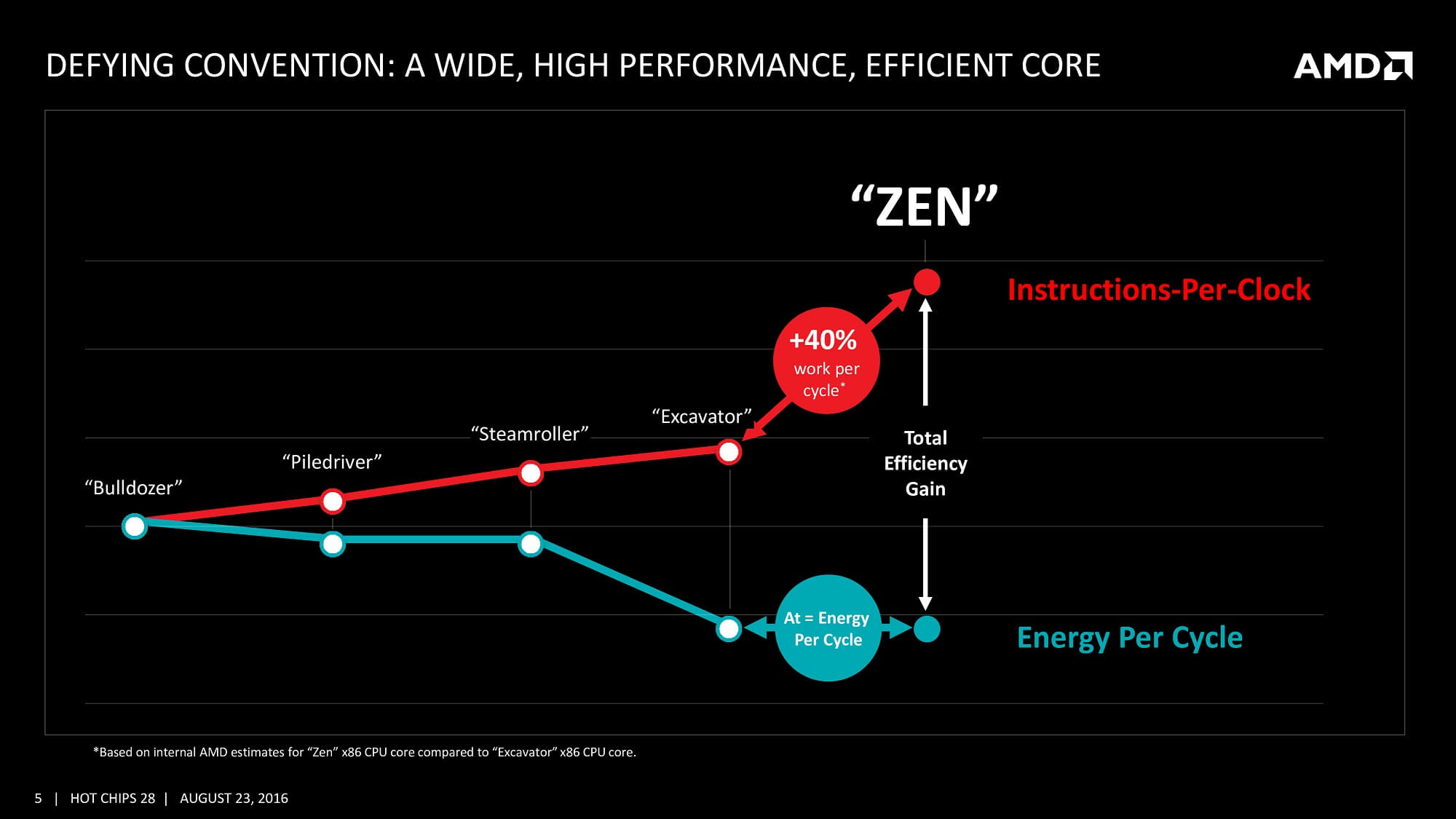

However, AMD had been quietly assembling a winning hand, and it played its cards in February 2016 at the annual E3 event. Using the announcement of the eagerly awaited Doom reboot as its stage, the company unveiled an all-new architecture: Zen.

Beyond general phrases such as "simultaneous multithreading," "high-bandwidth cache" and "energy-efficient FinFET design," little was said about the new architecture. More details emerged at Computex 2016, including a target of 40% higher performance per clock than Excavator.

Calling such claims "ambitious" would be an understatement, especially since AMD had managed a modest 10% gain at best with each new revision of the Bulldozer architecture.

There were still twelve months to wait for the chip itself, but after its release, AMD's long-term plan finally became apparent.

Selling new hardware requires suitable software, and multithreaded CPUs were fighting an uphill battle. Although the consoles boasted eight-core processors, most games needed no more than four cores. The main reasons were Intel's market dominance and the architecture of AMD's chips in the Xbox One and PlayStation 4. Intel released its first six-core CPU back in 2010, but it was very expensive (almost $1,100). Others soon followed, but it took another seven years for Intel to release a truly affordable six-core processor: the sub-$200 Core i5-8400.

The problem with the console processors was that the CPU consisted of two quad-core clusters on a single die, with high latency between the two halves. Game developers therefore tended to run engine threads on one cluster and leave the other for general background tasks. Only workstations and servers needed processors with serious multithreading capability, until AMD decided otherwise.

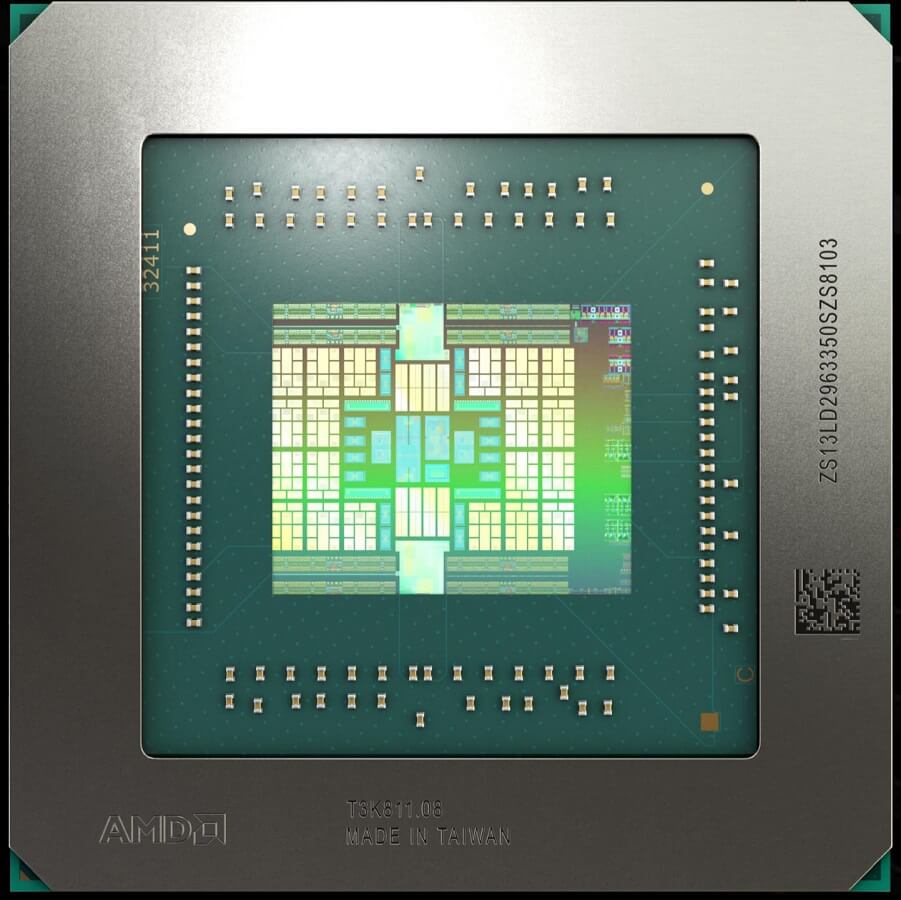

In March 2017, ordinary desktop users could finally upgrade their systems with one of two eight-core, 16-thread processors. A brand-new architecture deserved its own name, so AMD dropped the Phenom and FX brands and gave us Ryzen.

None of the CPUs were particularly cheap. The Ryzen 7 1800X (3.6 GHz base, 4 GHz boost) retailed for $500, and the 1700X, 0.2 GHz slower, sold for $100 less. In part, AMD wanted to shake off its image as the budget alternative. Mainly, though, the pricing reflected the fact that Intel was asking $1,000 for its eight-core Core i7-6900K.

Zen took the best elements of all previous architectures and combined them into a design whose aim was to keep the pipelines as busy as possible, which required significant improvements to the pipelines and caches. The new design abandoned the shared L1/L2 caches used in Bulldozer: each core was now fully independent, with more pipelines, better branch prediction, and greater cache bandwidth.

Like the chips in the Microsoft and Sony consoles, the Ryzen processor was a system on a chip; the only thing it lacked was a GPU (a GCN-based GPU appeared in later budget Ryzen models).

The die was divided into two so-called CPU Complexes (CCX), each a four-core, 8-thread module. The chip also integrated southbridge functions, providing controllers for PCI Express, SATA and USB connections. In theory, this meant motherboards could be built without a separate southbridge, but almost all boards still included one to expand the number of possible device connections.

But all of this effort would have been wasted if Ryzen didn't deliver the necessary performance, and after years of trailing Intel, AMD had a lot to prove. The 1800X and 1700X were not perfect: in professional workloads they matched Intel's products, but they were slower in games.

AMD had other cards to play, too: a month after the first Ryzen processor hit the market, the six- and four-core Ryzen 5 models appeared, followed two months later by the four-core Ryzen 3 chips. They competed with Intel's products in the same way as their more powerful siblings, but were much more affordable.

And then the aces hit the table: the 16-core, 32-thread Ryzen Threadripper 1950X (starting at $1,000) and the 32-core, 64-thread EPYC server processor. These monsters consisted of two and four Ryzen 7 1800X dies in one package, respectively, and used the new Infinity Fabric interconnect to transfer data between the dies.

In the space of six months, AMD had shown that it was aiming at every possible niche of the x86 desktop market with a single processor architecture.

A year later, the architecture was updated to Zen+. The improvements included changes to the cache system and a move from GlobalFoundries' 14LPP process, developed together with Samsung, to the refined, smaller 12LP process. The die size stayed the same, but the new manufacturing process allowed the processors to run at higher clock speeds.

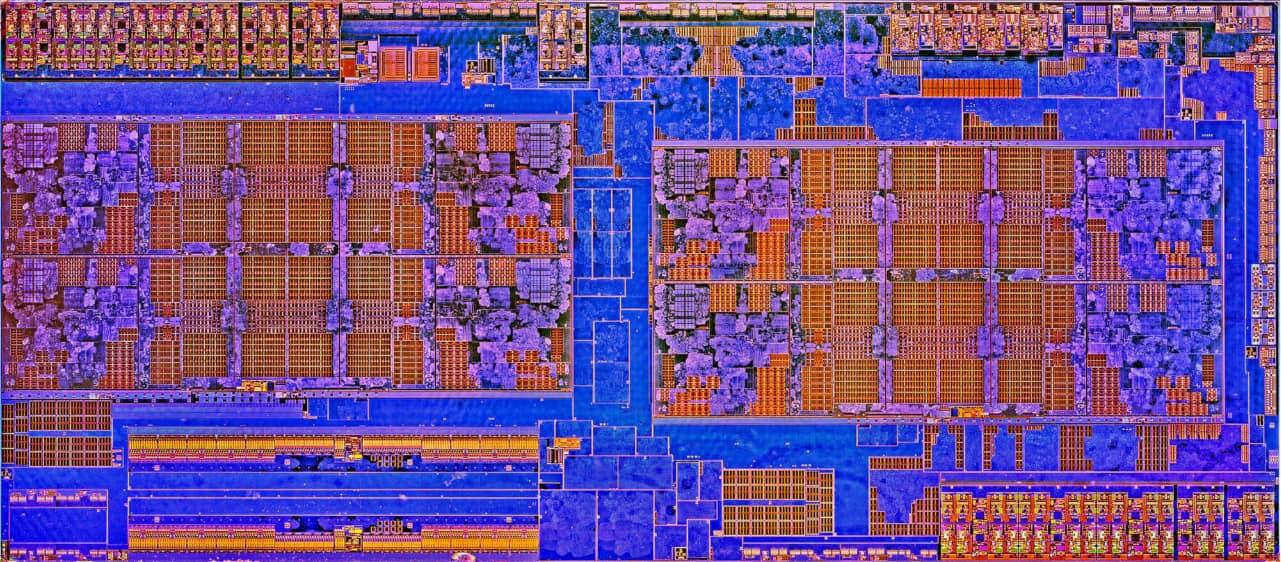

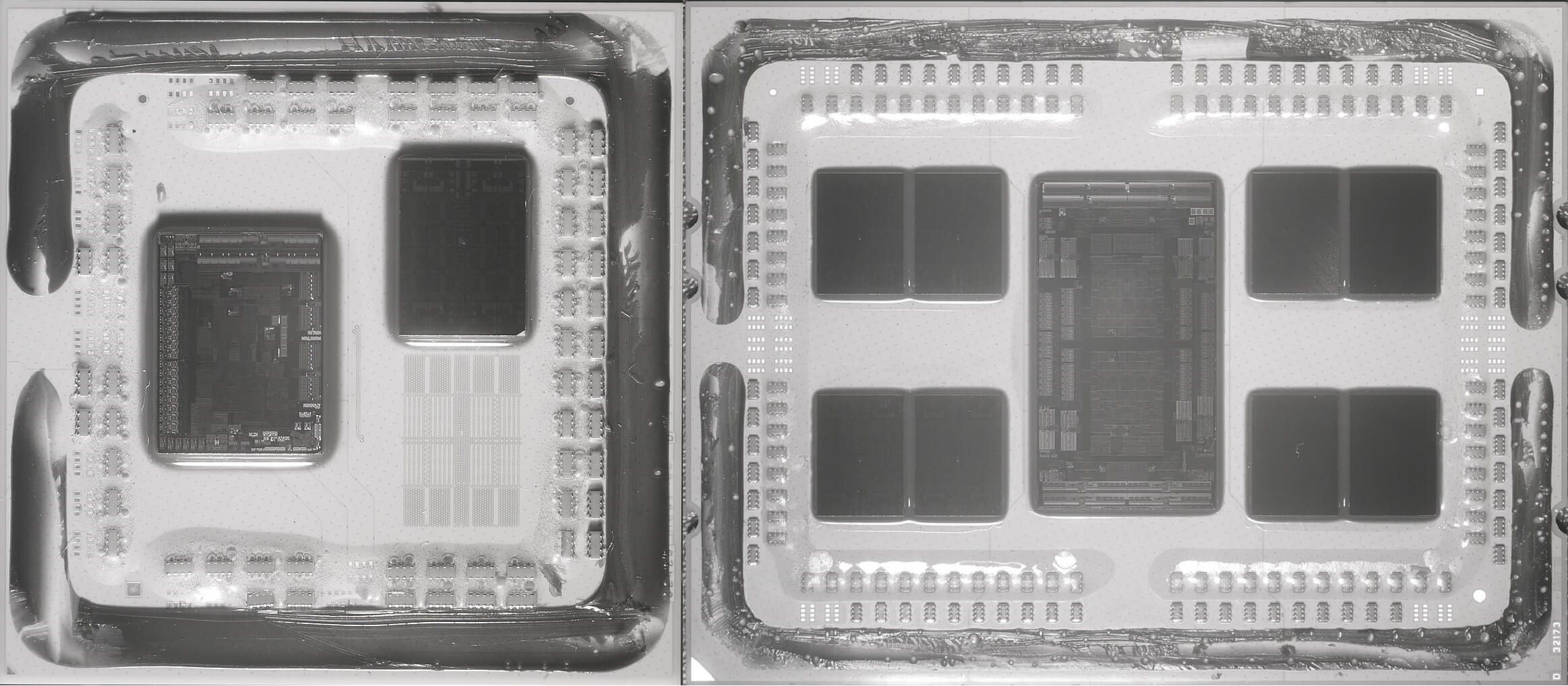

Twelve months later, in the summer of 2019, AMD released Zen 2. This time the changes were more significant, and the term "chiplet" came into fashion. Instead of a monolithic design, in which every part of the CPU sits on the same piece of silicon (as was the case with Zen and Zen+), the engineers separated the Core Complex modules from the interconnect and I/O system.

The Core Complex modules were manufactured by TSMC on its N7 process and became dies in their own right, hence the name Core Complex Die (CCD). The input/output die was produced by GlobalFoundries: Ryzen desktop models used a 12LP chip, while Threadripper and EPYC used larger versions built on 14 nm.

Infrared images of Zen 2 Ryzen and EPYC processors, showing the CCD chiplets separated from the I/O die. Image: Fritzchens Fritz

The chiplet design has been retained and improved in Zen 3, which is scheduled for release at the end of 2020. Most likely, the CCDs will keep the 8-core, 16-thread layout of Zen 2, and the improvements will be in the style of Zen+ (that is, better caches, energy efficiency and clock speeds).

It's worth taking stock of what AMD has achieved with Zen. In 8 years, the architecture has gone from a blank slate to an expansive product portfolio, ranging from 4-core, 8-thread budget parts at $99 to 64-core, 128-thread server CPUs costing over $4,000.

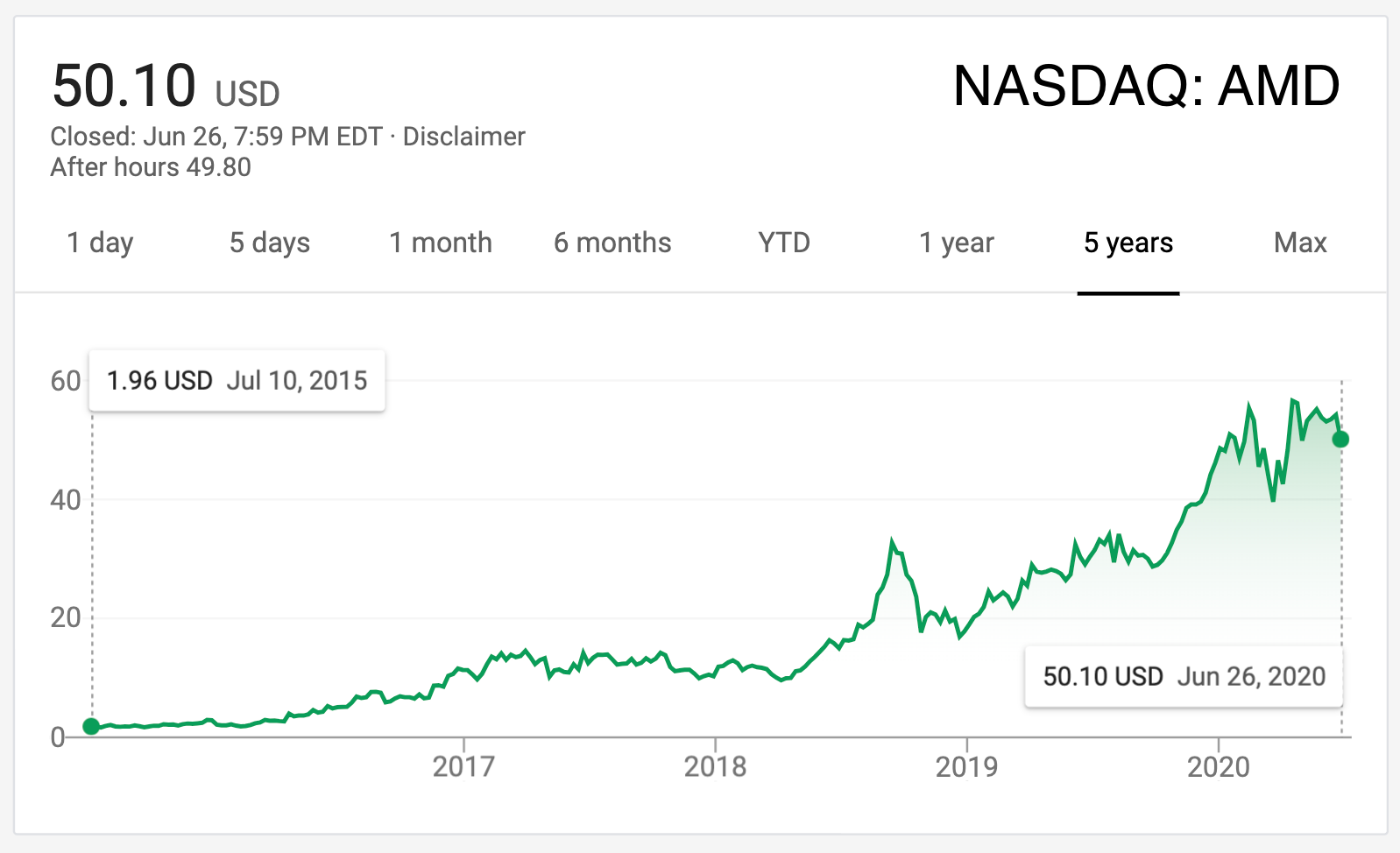

AMD's financial position has also changed significantly: where its losses and debts once ran into billions of dollars, AMD is now on track to pay down its debt and posted around $600 million in operating income over the past year. While Zen was not the only reason for the company's financial renaissance, it contributed greatly to it.

AMD's graphics division enjoyed a similar revival: in 2015 it was given full independence under the name Radeon Technologies Group (RTG). The most significant achievement of its engineers was RDNA, a heavily redesigned GCN. Changes to the cache structure, along with improvements to the size and grouping of the compute units, made the architecture much better suited to gaming.

The first models to use this new architecture, the Radeon RX 5700 series, showed significant design potential. This did not go unnoticed by Microsoft and Sony: both companies chose Zen 2 and the updated RDNA 2 for their new Xbox and PlayStation 5 consoles.

While the Radeon group hasn't enjoyed the same level of success as the CPU division, and its graphics cards are probably still viewed as a "budget option", AMD is back to where it was in the Athlon 64 era in terms of architecture development and technological innovation. The company reached the top, fell, and, like a phoenix, rose from the ashes.

Cautiously looking to the future

It makes perfect sense to ask a simple question: could the company slide back into the dark days of failed products and empty coffers?

Even if 2020 turns out to be an excellent year for AMD (first-quarter revenue is up 40% on the previous year), revenue of $9.4 billion would still leave it behind Nvidia ($10.7 billion in 2019) and light-years from Intel ($72 billion). Of course, Intel's product portfolio is far more extensive and it owns its own manufacturing facilities, but Nvidia's income depends almost entirely on graphics cards.

AMD bestsellers

Clearly, both revenue and operating income need to grow if AMD's future is to be fully secured, but how can this be achieved? The bulk of the company's revenue still comes from what it calls the Computing and Graphics segment, that is, Ryzen and Radeon sales. This segment will undoubtedly keep growing, because Ryzen is highly competitive and the RDNA 2 architecture provides a common platform for games on both PC and next-generation consoles.

Intel's new desktop processors hold an ever-shrinking lead in gaming performance, and they lack the breadth of features provided by Zen 3. Nvidia still holds the GPU performance crown, but faces stiff competition from Radeon in the mid-range segment. It may be just a coincidence, but although RTG is a fully independent division of AMD, its revenue and operating income are reported together with the CPU business, which suggests that, despite the popularity of its graphics cards, they do not sell in the same quantities as Ryzen products.

Perhaps even more of a concern for AMD is that its Enterprise, Embedded and Semi-Custom segment accounted for just under 20% of its Q1 2020 revenue and posted an operating loss. This can be explained by the fact that, with the Nintendo Switch selling well and new consoles from Microsoft and Sony on the way, sales of the current-generation Xbox and PlayStation are stagnating. In addition, Intel dominates the corporate market, and nobody running a multi-million-dollar data center is going to replace it simply because an amazing new CPU has appeared.

Nvidia DGX A100 Powered by Dual 64-Core AMD EPYC Processors

But that could change over the next two years, thanks in part to the new gaming consoles and to an unexpected alliance. Nvidia has chosen AMD processors instead of Intel for its DGX A100 deep learning and AI systems. The reason is simple: EPYC offers more cores, more memory channels and faster PCI Express lanes than anything Intel has to offer.

If Nvidia is happy with AMD's products, others are likely to follow. AMD has a steep mountain to climb, but it now seems to have the right tools for the job. As TSMC continues to refine its N7 manufacturing process, every AMD chip built on it will improve as well.

Looking ahead, there are several areas where AMD clearly needs to improve. The first is marketing. The "Intel Inside" slogan and jingle have been ubiquitous for 30 years, and while AMD has spent some money promoting Ryzen, it ultimately needs manufacturers such as Dell, HP and Lenovo to sell devices that showcase its processors in the same light and with the same specifications as Intel-based products.

On the software side, a lot of work has gone into user-friendly applications such as Ryzen Master, but Radeon drivers have recently suffered from widespread problems. Developing game drivers is incredibly difficult, yet their quality can make or break a hardware product's reputation.

Today, AMD is in the strongest position of its 51-year history. With an ambitious Zen project that shows no sign of reaching its limits any time soon, the company's phoenix-like rebirth has been a huge success. However, it is not at the top yet, and that is probably for the best. They say that history repeats itself, but let's hope it doesn't in this case. A strong AMD, fully capable of competing with Intel and Nvidia, can only benefit users.

What do you think about AMD and its ups and downs? Did you have a K6 chip, or maybe an Athlon? What is your favorite Radeon graphics card? Which Zen processor impressed you the most?