It is especially important that WSL now supports NVIDIA CUDA, a hardware and software architecture for parallel computing.

The article we are publishing in translation was written by NVIDIA experts. It describes what to expect from CUDA in the WSL 2 Public Preview.

Running Linux AI frameworks in WSL 2 containers

What is WSL?

WSL is a Windows 10 feature that lets you use Linux command-line tools directly on Windows, without the hassle of setting up a dual-boot configuration. WSL is a containerized environment tightly integrated with Microsoft Windows, so Linux applications can run alongside traditional Windows applications and modern applications distributed through the Microsoft Store.

WSL is primarily a tool for developers. If you work on projects in Linux containers, you can do the same work locally on a Windows computer using familiar Linux tools. Running such applications on Windows used to mean spending a lot of time configuring the system and pulling in third-party frameworks and libraries. The release of WSL 2 changes that: WSL 2 brings a full Linux kernel to Windows.

WSL 2 and GPU paravirtualization technology (GPU-PV) have allowed Microsoft to take Linux support on Windows to a new level, making it possible to run GPU-accelerated compute workloads. Below we describe what using the GPU in WSL 2 looks like.

If you are interested in the topic of support for video accelerators in WSL 2, take a look at this material and this repository.

CUDA in WSL

To take advantage of the GPU in WSL 2, the computer must have a graphics driver installed that supports Microsoft WDDM (Windows Display Driver Model). Such drivers are provided by GPU vendors such as NVIDIA.

CUDA lets you develop programs for NVIDIA GPUs, and it has been supported on Windows through WDDM for many years. Microsoft's new WSL 2 container provides GPU-accelerated computing capabilities that CUDA can use, so CUDA-based programs can run in WSL. For more information, see the WSL CUDA User Guide.

CUDA support in WSL is included in NVIDIA drivers for WDDM 2.9. These drivers are easy to install on Windows. The CUDA user-mode drivers in WSL (libcuda.so) are automatically made available inside the container and can be detected by the loader.
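One way to confirm that the loader can find the CUDA user-mode driver inside WSL 2 is to load libcuda.so directly and call the CUDA driver API functions cuInit and cuDeviceGetCount. The Python sketch below does this with ctypes. The search directory /usr/lib/wsl/lib is an assumption: it is where WSL 2 commonly exposes driver store libraries, but the article does not say so. The helper names are hypothetical.

```python
import ctypes
import os

# Assumption: WSL 2 maps the host driver libraries into this directory.
WSL_LIB_DIR = "/usr/lib/wsl/lib"
CUDA_SUCCESS = 0


def candidate_libcuda_paths(lib_dir=WSL_LIB_DIR):
    """Library names to try, most specific first; bare names use the loader path."""
    return [os.path.join(lib_dir, "libcuda.so.1"), "libcuda.so.1", "libcuda.so"]


def cuda_device_count():
    """Return the number of CUDA devices, or None if libcuda cannot be used."""
    for path in candidate_libcuda_paths():
        try:
            libcuda = ctypes.CDLL(path)
        except OSError:
            continue  # the loader could not find this candidate; try the next one
        if libcuda.cuInit(0) != CUDA_SUCCESS:
            return None  # driver found, but initialization failed (e.g. no GPU)
        count = ctypes.c_int(0)
        if libcuda.cuDeviceGetCount(ctypes.byref(count)) != CUDA_SUCCESS:
            return None
        return count.value
    return None


if __name__ == "__main__":
    n = cuda_device_count()
    print("libcuda not usable" if n is None else f"CUDA devices visible: {n}")
```

Run inside a WSL 2 distribution, a positive count indicates that the driver exposed from the Windows host was found without any extra installation in the guest.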

The NVIDIA driver development team has added WDDM and GPU-PV support to the CUDA driver so that it can work in a Linux environment running on Windows. These drivers are still in preview and will be released only when WSL with GPU support is officially released. Details on the driver release can be found here.

The following figure shows how to connect the CUDA driver to WDDM inside a Linux guest system.

CUDA-enabled WDDM user-mode driver running on a Linux guest

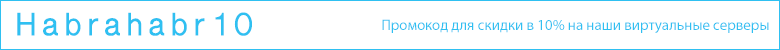

Suppose you are a developer who has installed a WSL distribution on the latest Windows build from the Fast Ring (build 20149 or later) of the Microsoft Windows Insider Program (WIP). If you have switched to WSL 2 and have an NVIDIA GPU, you can try out the driver and run your GPU compute code in WSL 2. All you need to do is install the driver on the Windows host and open a WSL container. From there, you can work with CUDA applications without any additional effort. The following figure shows a TensorFlow application using CUDA running in a WSL 2 container.

TensorFlow container running in WSL 2

The fact that CUDA technology is now available in WSL makes it possible to run applications in WSL that previously could only run in a regular Linux environment.

NVIDIA is still actively working on this project and improving it. Among other things, we are adding APIs to WDDM that were previously designed exclusively for Linux. As a result, more and more applications will work in WSL out of the box, with no extra effort from the user.

Another area we are focused on is performance. As mentioned earlier, GPU support in WSL 2 relies heavily on GPU-PV. This can add noticeable overhead to small GPU tasks, particularly when no pipelining is used. We are currently working to reduce this overhead as much as possible.

NVML

The initial driver package does not include NVML (NVIDIA Management Library). We plan to fix this by adding NVML and other libraries to WSL.

We started with the core CUDA driver, which lets users run most existing CUDA applications even at this early stage of CUDA support in WSL. However, it turns out that some containers and applications use NVML to query GPU information before they even load CUDA. That is why adding NVML support to WSL is one of our top priorities, and we may soon have good news to share on this front.
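The sketch below illustrates why a missing NVML matters. It shows an application that queries NVML first, as some containers do, and then falls back to the CUDA driver API. The fallback is hypothetical: an application written without it would stop at the first step. nvmlInit_v2, nvmlDeviceGetCount_v2, nvmlShutdown, cuInit and cuDeviceGetCount are real NVML and CUDA driver API entry points. The library names and the probe_gpus helper are assumptions made for illustration.

```python
import ctypes

NVML_SUCCESS = 0
CUDA_SUCCESS = 0


def nvml_device_count(libname="libnvidia-ml.so.1"):
    """GPU count via NVML, or None if NVML cannot be loaded or initialized."""
    try:
        nvml = ctypes.CDLL(libname)
    except OSError:
        return None  # e.g. a driver package that does not ship NVML
    if nvml.nvmlInit_v2() != NVML_SUCCESS:
        return None
    try:
        count = ctypes.c_uint(0)
        if nvml.nvmlDeviceGetCount_v2(ctypes.byref(count)) != NVML_SUCCESS:
            return None
        return count.value
    finally:
        nvml.nvmlShutdown()  # always release NVML after a successful init


def cuda_device_count(libname="libcuda.so.1"):
    """GPU count via the CUDA driver API, or None if it is unavailable."""
    try:
        cuda = ctypes.CDLL(libname)
    except OSError:
        return None
    count = ctypes.c_int(0)
    # Short-circuit: cuDeviceGetCount is only called if cuInit succeeded
    if cuda.cuInit(0) != CUDA_SUCCESS or cuda.cuDeviceGetCount(ctypes.byref(count)) != CUDA_SUCCESS:
        return None
    return count.value


def probe_gpus(nvml_probe=nvml_device_count, cuda_probe=cuda_device_count):
    """Ask NVML first, then fall back to CUDA.

    Returns (source, count), where source is "nvml", "cuda" or None.
    """
    count = nvml_probe()
    if count is not None:
        return "nvml", count
    count = cuda_probe()
    if count is not None:
        return "cuda", count
    return None, 0


if __name__ == "__main__":
    print(probe_gpus())
```

The probes are passed in as parameters so the decision logic can be exercised without a GPU.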

GPU containers in WSL

In addition to WSL 2 support for DirectX and CUDA, NVIDIA is working to add support for the NVIDIA Container Toolkit (formerly nvidia-docker2) to WSL 2. Containerized GPU applications that data scientists build to run in a local or cloud Linux environment can now run in WSL 2 on Windows computers without any modification.

No special WSL packages are required. The NVIDIA container runtime library (libnvidia-container) can dynamically detect libdxcore and use it when code runs in a GPU-accelerated WSL 2 environment. This happens automatically once the Docker and NVIDIA Container Toolkit packages are installed, just as on Linux, so you can run GPU-accelerated containers in WSL 2 with no extra effort.

If you want to use the --gpus option, we strongly recommend installing the latest Docker tools (19.03 or later). To enable WSL 2 support, follow the instructions for your Linux distribution and install the latest available version of nvidia-container-toolkit.

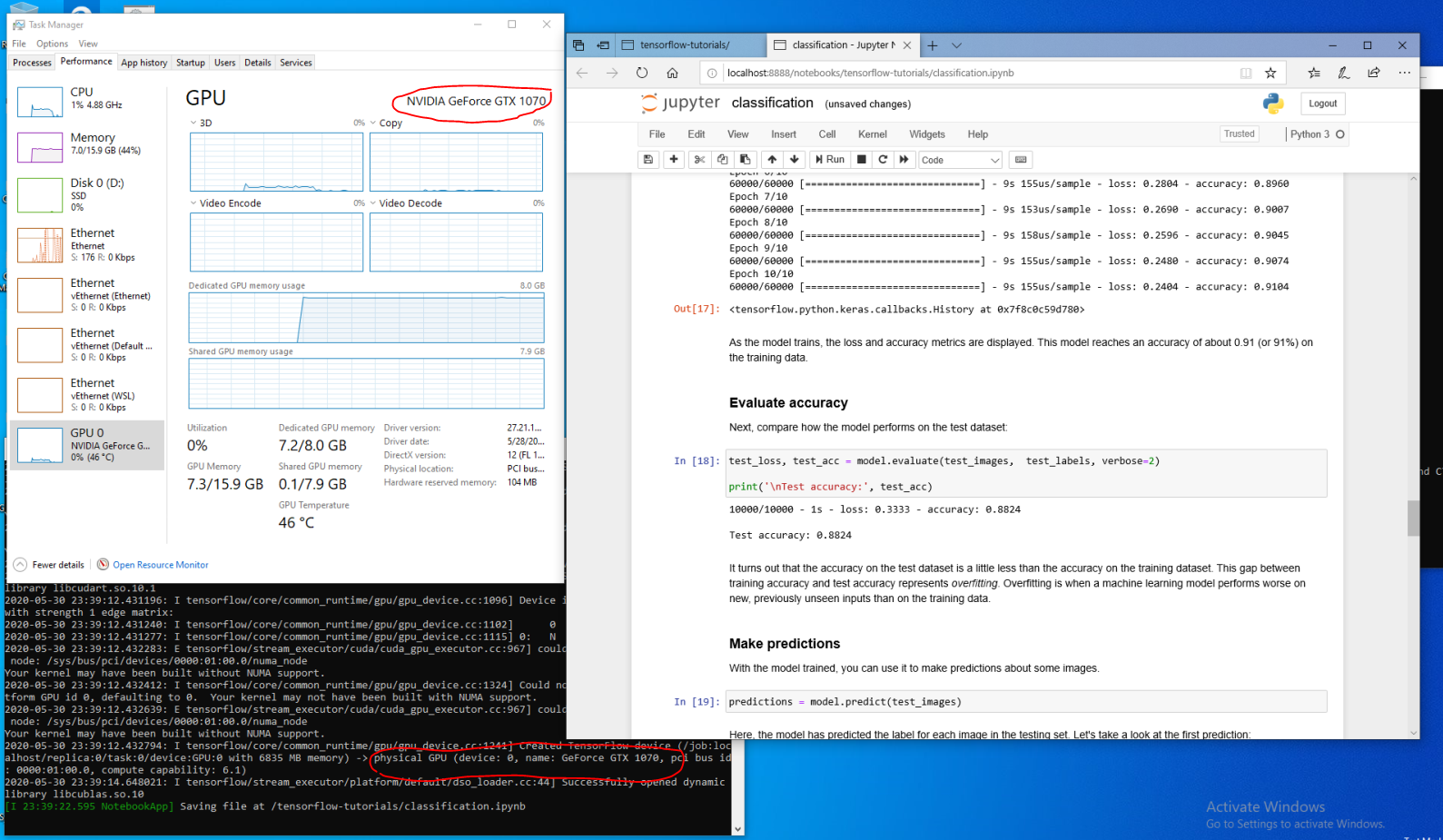

How does it work? All WSL 2-specific work is handled by the libnvidia-container library. At runtime, this library can now detect libdxcore.so and use it to find all GPUs exposed through that interface.
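libnvidia-container itself is written in C. The Python sketch below only mirrors the decision described in the text: try to load libdxcore.so at runtime and, if that succeeds, take the WSL 2 path. The candidate paths and backend labels are hypothetical, and the loader is injectable so the logic can be tested without a GPU.

```python
import ctypes

# Hypothetical candidates: an absolute WSL 2 location (assumption), then the
# bare name so the dynamic loader's normal search path is also tried.
DXCORE_CANDIDATES = ("/usr/lib/wsl/lib/libdxcore.so", "libdxcore.so")


def find_dxcore(candidates=DXCORE_CANDIDATES, loader=ctypes.CDLL):
    """Return the first dxcore library the loader can open, or None."""
    for name in candidates:
        try:
            loader(name)
        except OSError:
            continue  # not present here; try the next candidate
        return name
    return None


def select_backend(dxcore_path):
    """Pick a GPU discovery path (simplified)."""
    if dxcore_path is not None:
        # WSL 2: enumerate GPUs through dxcore and map the driver store
        return "wsl2-dxcore"
    # Regular Linux: use the native driver's device nodes (/dev/nvidia*)
    return "native-linux"


if __name__ == "__main__":
    print(select_backend(find_dxcore()))
```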

If these GPUs are to be used in a container, libdxcore.so is used to locate the driver store: the folder that contains all driver libraries for both the Windows host and WSL 2. libnvidia-container.so is responsible for configuring the container so that it can correctly access the driver store. The same library also sets up the core libraries supported by WSL 2. This is shown in the following figure.

Driver store detection and mapping into the container, as performed by libnvidia-container.so in WSL 2

This logic differs from the logic used outside of WSL. However, the whole process is abstracted by libnvidia-container.so and should be as transparent to the end user as possible. One limitation of this early version is that you cannot select GPUs on machines with multiple GPUs: all GPUs are always visible in the container.

Any NVIDIA Linux containers you are already familiar with can run in a WSL container. NVIDIA supports the most exciting Linux-specific tools and workflows used by professionals. Download the container you are interested in from NVIDIA NGC and try it out.

Now we’ll talk about how to run TensorFlow and N-body containers in WSL 2 that are designed to use NVIDIA GPUs to speed up computing.

Running the N-body container

Install Docker using the installation script:

user@PCName:/mnt/c$ curl https://get.docker.com | sh

Install the NVIDIA Container Toolkit. WSL 2 support is available starting from nvidia-docker2 v2.3 and from the libnvidia-container 1.2.0-rc.1 runtime library.

Set up the stable and experimental repositories and the GPG key. The runtime changes that add WSL 2 support are available in the experimental repository.

user@PCName:/mnt/c$ distribution=$(. /etc/os-release;echo $ID$VERSION_ID)

user@PCName:/mnt/c$ curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

user@PCName:/mnt/c$ curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

user@PCName:/mnt/c$ curl -s -L https://nvidia.github.io/libnvidia-container/experimental/$distribution/libnvidia-container-experimental.list | sudo tee /etc/apt/sources.list.d/libnvidia-container-experimental.list
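The distribution variable above is simply ID and VERSION_ID from /etc/os-release concatenated (for example, ubuntu18.04), and it determines which repository lists are fetched. The Python sketch below reproduces that computation so you can check which URLs the commands will request. The helper names are hypothetical, and the quote handling is simpler than the full os-release format allows.

```python
REPO_BASE = "https://nvidia.github.io"


def parse_os_release(text):
    """Parse KEY=value lines (as found in /etc/os-release) into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments and malformed lines
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"').strip("'")  # drop surrounding quotes
    return fields


def distribution_id(os_release_text):
    """Equivalent of the shell expression $ID$VERSION_ID, e.g. "ubuntu18.04"."""
    info = parse_os_release(os_release_text)
    return info.get("ID", "") + info.get("VERSION_ID", "")


def repo_list_urls(os_release_text):
    """Return the two .list URLs fetched by the curl commands above."""
    distribution = distribution_id(os_release_text)
    return [
        f"{REPO_BASE}/nvidia-docker/{distribution}/nvidia-docker.list",
        f"{REPO_BASE}/libnvidia-container/experimental/{distribution}/"
        "libnvidia-container-experimental.list",
    ]


if __name__ == "__main__":
    try:
        with open("/etc/os-release") as f:
            text = f.read()
    except OSError:
        text = ""  # not a typical Linux system; URLs will lack the distribution
    for url in repo_list_urls(text):
        print(url)
```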

Install the NVIDIA runtime packages and their dependencies:

user@PCName:/mnt/c$ sudo apt-get update

user@PCName:/mnt/c$ sudo apt-get install -y nvidia-docker2

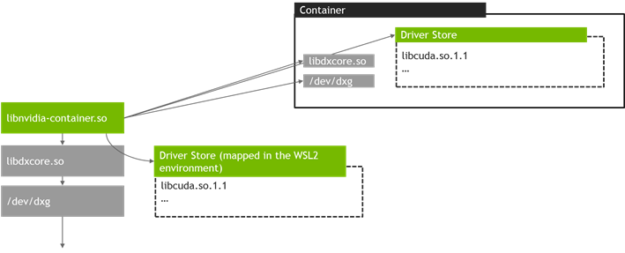

Open the WSL container and start the Docker daemon in it. If everything is set up correctly, you will then see dockerd service messages.

user@PCName:/mnt/c$ sudo dockerd

Launching the Docker daemon

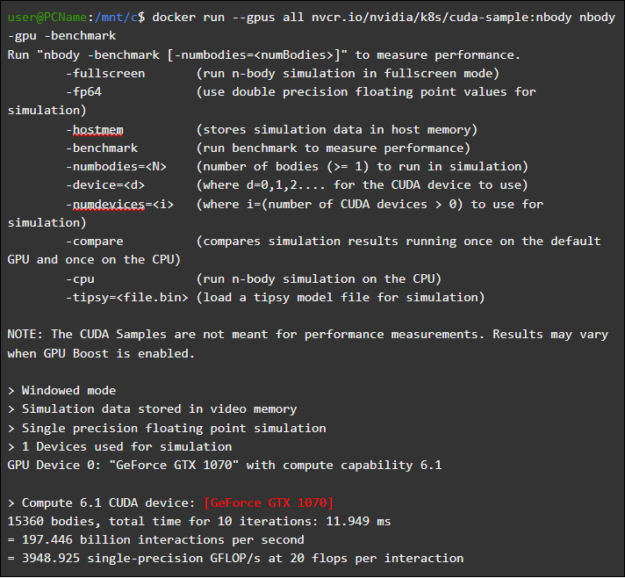

In another WSL window, pull and run the N-body simulation container. The user running this step needs sufficient permissions to pull the container, so the following commands may need to be run with sudo. The output includes information about the GPU.

user@PCName:/mnt/c$ docker run --gpus all nvcr.io/nvidia/k8s/cuda-sample:nbody nbody -gpu -benchmark

Running the N-body container

Running a TensorFlow container

Next, let's test another popular container in Docker under WSL 2: TensorFlow.

Pull the TensorFlow Docker image. To avoid problems connecting to Docker, run the following command with sudo:

user@PCName:/mnt/c$ docker pull tensorflow/tensorflow:latest-gpu-py3

Save a slightly modified version of the GPU tutorial from TensorFlow Tutorial 15 to the C: drive of the host system. By default, this drive is mounted in the WSL 2 container as /mnt/c.

user@PCName:/mnt/c$ vi ./matmul.py

```python
import sys
import numpy as np
import tensorflow as tf
from datetime import datetime

device_name = sys.argv[1]  # Choose device from cmd line. Options: gpu or cpu
shape = (int(sys.argv[2]), int(sys.argv[2]))
if device_name == "gpu":
    device_name = "/gpu:0"
else:
    device_name = "/cpu:0"

# Use TF1-style graph mode: the ops below only run when session.run() is called
tf.compat.v1.disable_eager_execution()
with tf.device(device_name):
    random_matrix = tf.random.uniform(shape=shape, minval=0, maxval=1)
    dot_operation = tf.matmul(random_matrix, tf.transpose(random_matrix))
    sum_operation = tf.reduce_sum(dot_operation)

startTime = datetime.now()
# log_device_placement=True logs which device each operation is placed on
with tf.compat.v1.Session(config=tf.compat.v1.ConfigProto(log_device_placement=True)) as session:
    result = session.run(sum_operation)
    print(result)

# Print a short summary after the device-placement log
print("Shape:", shape, "Device:", device_name)
print("Time taken:", datetime.now() - startTime)
```

Below are the results of running this script from the C: drive mounted in the container, first on the GPU and then on the CPU. The output has been shortened for convenience.

user@PCName:/mnt/c$ docker run --runtime=nvidia --rm -ti -v "${PWD}:/mnt/c" tensorflow/tensorflow:latest-gpu-jupyter python /mnt/c/matmul.py gpu 20000

Results of executing the matmul.py script

Using the GPU in a WSL 2 container speeds up code execution significantly compared with running it on the CPU.
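To quantify that speedup yourself, you can run matmul.py for both devices and compare the "Time taken:" lines it prints. The Python sketch below parses that line, which is the str() of a timedelta, and computes the ratio. run_matmul is a hypothetical helper that mirrors the docker command above without -ti, since no terminal is needed when capturing output. No measured results are implied.

```python
import os
import re
import subprocess
from datetime import timedelta

# Matches the line printed by matmul.py, e.g. "Time taken: 0:00:02.500000"
TIME_RE = re.compile(r"Time taken:\s*(\d+):(\d{2}):(\d{2}(?:\.\d+)?)")


def parse_time_taken(output):
    """Extract the elapsed time printed by matmul.py as a timedelta."""
    match = TIME_RE.search(output)
    if match is None:
        raise ValueError("no 'Time taken:' line found in output")
    hours, minutes, seconds = match.groups()
    return timedelta(hours=int(hours), minutes=int(minutes), seconds=float(seconds))


def run_matmul(device, size):
    """Run matmul.py in the TensorFlow container and return its stdout."""
    cmd = [
        "docker", "run", "--runtime=nvidia", "--rm",
        "-v", f"{os.getcwd()}:/mnt/c",
        "tensorflow/tensorflow:latest-gpu-jupyter",
        "python", "/mnt/c/matmul.py", device, str(size),
    ]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def speedup(cpu_time, gpu_time):
    """How many times faster the GPU run was (timedelta / timedelta is a float)."""
    return cpu_time / gpu_time


# Usage (requires Docker, the NVIDIA runtime and matmul.py in the current directory):
#   gpu = parse_time_taken(run_matmul("gpu", 20000))
#   cpu = parse_time_taken(run_matmul("cpu", 20000))
#   print(f"GPU speedup: {speedup(cpu, gpu):.1f}x")
```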

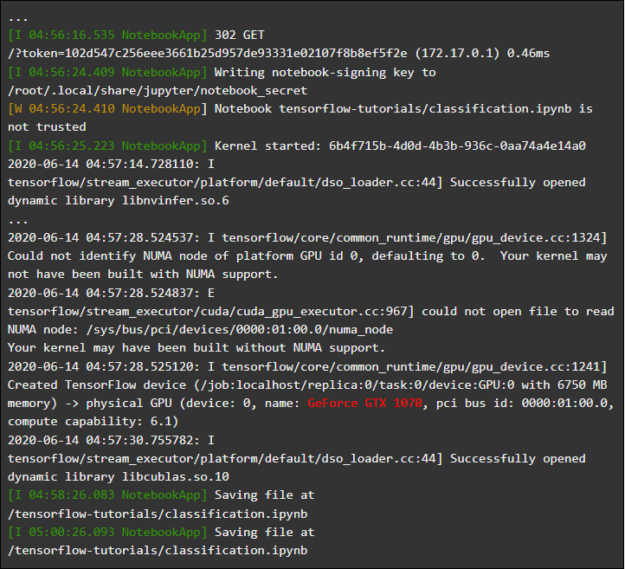

Let's run another experiment to look at GPU compute performance, this time using code from a Jupyter Notebook tutorial. After starting the container, you should see a link to the Jupyter Notebook server.

user@PCName:/mnt/c$ docker run -it --gpus all -p 8888:8888 tensorflow/tensorflow:latest-gpu-py3-jupyter

Running Jupyter Notebook

You should now be able to run the demos in the Jupyter Notebook environment. Note that when connecting to Jupyter Notebook from Microsoft Edge, you should use localhost instead of 127.0.0.1.

Go to tensorflow-tutorials and open the classification.ipynb notebook.

To see the GPU acceleration at work, open the Cell menu, select Run All, and watch the log of the Jupyter Notebook running in the WSL 2 container.

Jupyter Notebook log

This demo, and some others in this container, expose a problem we mentioned above: the virtualization layer adds disproportionately high overhead to small tasks. Because the tutorial models here are very small, the time spent executing them on the GPU is shorter than the time spent on synchronization. For such "toy" problems in WSL 2, the CPU can therefore be more efficient than the GPU. We are working on this issue and aim to limit it to only very small workloads that are not pipelined.
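A toy cost model makes this trade-off concrete. It is an illustration, not NVIDIA's performance model. Suppose each GPU submission pays a fixed overhead o, the GPU processes work at rate g and the CPU at rate c. Then n tasks of size w sent in k submissions take k·o + n·w/g on the GPU and n·w/c on the CPU. A single unbatched task favors the GPU only when w > o / (1/c − 1/g), and batching (pipelining) spreads o across many tasks. All parameter values below are unitless and made up.

```python
import math


def gpu_time(n_tasks, work, gpu_speed, overhead, batch_size=1):
    """Toy model: each submission pays a fixed overhead; batching shares it."""
    submissions = math.ceil(n_tasks / batch_size)
    return submissions * overhead + n_tasks * work / gpu_speed


def cpu_time(n_tasks, work, cpu_speed):
    """Toy model: the CPU pays no virtualization overhead, only compute time."""
    return n_tasks * work / cpu_speed


def break_even_work(overhead, cpu_speed, gpu_speed):
    """Work per task above which one unbatched GPU task beats the CPU.

    Solves overhead + w / gpu_speed == w / cpu_speed for w
    (only meaningful when gpu_speed > cpu_speed).
    """
    return overhead / (1.0 / cpu_speed - 1.0 / gpu_speed)


if __name__ == "__main__":
    # Illustrative parameters only: GPU 10x faster than CPU, overhead of 1 unit.
    for work in (0.5, 100.0):
        print("work", work, "cpu", cpu_time(1, work, 1.0), "gpu", gpu_time(1, work, 10.0, 1.0))
    print("100 small tasks, one batch:",
          cpu_time(100, 0.5, 1.0), gpu_time(100, 0.5, 10.0, 1.0, batch_size=100))
    print("break-even work:", break_even_work(1.0, 1.0, 10.0))
```

With these made-up numbers, a single small task is faster on the CPU, a large task is faster on the GPU, and batching the small tasks into one submission lets the GPU win again.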

WSL overview

To understand how GPU support was added to WSL 2, let's look at what running Linux on Windows involves and how containers see the hardware.

Microsoft introduced WSL technology at Build in 2016. This technology quickly found widespread use and became popular among Linux developers who needed to run Windows applications like Office along with Linux development tools and related programs.

WSL 1 could run unmodified Linux binaries. However, it relied on a Linux kernel emulation layer implemented as an NT kernel subsystem. This subsystem handled calls from Linux applications and redirected them to the corresponding Windows 10 mechanisms.

WSL 1 was a useful tool, but it was not compatible with all Linux applications because it had to emulate every Linux system call. In addition, file system operations were slow, which made performance unacceptable for some applications.

With this in mind, Microsoft took a different approach and released WSL 2, a new version of WSL. WSL 2 containers run full Linux distributions in a virtualized environment while still taking full advantage of the new Windows 10 containerization system.

Although WSL 2 uses Windows 10 Hyper-V services, it is not a traditional virtual machine but a lightweight utility VM. This VM manages virtual memory backed by physical memory, which allows WSL 2 containers to allocate memory dynamically from the Windows host system.

The main goals of WSL 2 include better file system performance and full system call compatibility. WSL 2 was also designed to tighten integration between WSL and Windows. You can conveniently work with the Linux system running in a container from Windows command-line tools. The host file system is also easier to use, because it is automatically mounted at selected directories in the container's file system.

WSL 2 was introduced in the Windows Insider Program as a Preview feature and was released in the most recent Windows 10 update, version 2004.

WSL 2 in the latest Windows release brings even more improvements, affecting everything from the networking stack to the VHD storage backend. Describing all the new WSL 2 features is beyond the scope of this article. You can learn more about them on this page, which compares WSL 2 and WSL 1.

The WSL 2 Linux kernel

The Linux kernel used in WSL 2 is built by Microsoft from the latest stable branch, using the source code available at kernel.org. This kernel has been specially tuned for WSL 2 and optimized for size and performance to provide a good Linux experience on Windows. The kernel is serviced through Windows Update, so users do not have to download the latest security fixes and kernel improvements themselves. All of this happens automatically.
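Because WSL 2 runs this Microsoft-built kernel, the kernel release string is a simple way to tell WSL 2 apart from WSL 1's emulated kernel. The sketch below relies on a heuristic that the article does not state, so treat it as an assumption. WSL 2 kernel releases contain "microsoft-standard" (for example, 4.19.104-microsoft-standard). WSL 1 reports an emulated release ending in "-Microsoft" (for example, 4.4.0-19041-Microsoft).

```python
import platform


def wsl_version(kernel_release=None):
    """Guess the WSL generation from a kernel release string.

    Heuristic (assumption): "microsoft-standard" marks a WSL 2 kernel; any
    other "microsoft" marker is treated as WSL 1. Returns 2, 1, or None.
    """
    release = kernel_release if kernel_release is not None else platform.release()
    lowered = release.lower()
    if "microsoft-standard" in lowered:
        return 2
    if "microsoft" in lowered:
        return 1
    return None  # not running under WSL (or an unrecognized kernel naming scheme)


if __name__ == "__main__":
    print("WSL version:", wsl_version())
```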

Microsoft supports several Linux distributions in WSL. In keeping with open source community practice, the company has published the source code of the WSL 2 kernel, including the modifications needed to integrate with Windows 10, in the WSL2-Linux-Kernel repository on GitHub.

GPU support in WSL

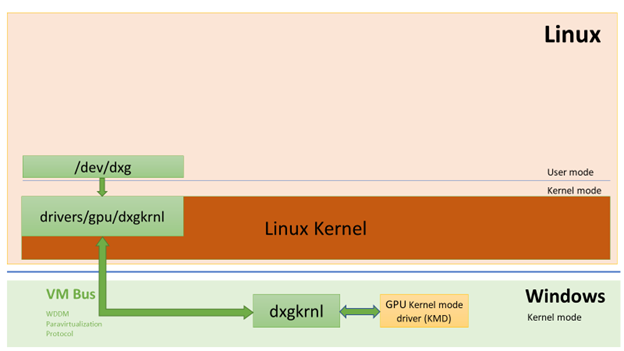

Microsoft has added support for real GPUs to WSL 2 containers using GPU-PV technology. Here, the operating system's graphics kernel (dxgkrnl) marshals calls from user-mode components running in the guest virtual machine to the kernel-mode driver on the host.

Microsoft developed this technology as a WDDM feature, and several Windows releases have shipped since it was introduced. The work was carried out with the involvement of independent hardware vendors (IHVs). NVIDIA graphics drivers have supported GPU-PV since the technology first appeared in preview builds available through the Windows Insider Program. All currently supported NVIDIA GPUs can be accessed by a guest OS running in a Hyper-V virtual machine.

For WSL 2 to use GPU-PV, Microsoft had to build the foundation of its graphics framework in the Linux guest: WDDM with support for the GPU-PV protocol. This is provided by a new Microsoft driver, dxgkrnl, which implements WDDM support on Linux. The driver code can be found in the WSL2-Linux-Kernel repository.

Dxgkrnl is expected to provide GPU acceleration in WSL 2 containers with WDDM 2.9. Microsoft notes that dxgkrnl is a Linux GPU driver based on the GPU-PV protocol and is unrelated to the similarly named Windows driver.

You can currently download the preview version of the NVIDIA WDDM 2.9 driver... Over the next few months, this driver will be distributed via Windows Update in the WIP version of Windows, making manual driver download and installation unnecessary.

Understanding GPU-PV

The dxgkrnl driver exposes a new device, /dev/dxg, to user mode in the Linux guest. The D3DKMT kernel service layer that was available on Windows has also been ported to Linux as part of the dxcore library. It communicates with dxgkrnl through a set of private IOCTL calls.
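Because dxgkrnl surfaces GPU-PV through /dev/dxg, a quick diagnostic is to check that the node exists, is a character device, and can be opened by the current user. The Python sketch below is hypothetical: the function and its status strings are not part of any NVIDIA or Microsoft tool. The expectation that /dev/dxg is a character device is an assumption, based on how Linux drivers usually expose such interfaces.

```python
import os
import stat


def dxg_status(path="/dev/dxg"):
    """Report whether the GPU-PV device node exposed by dxgkrnl looks usable."""
    try:
        mode = os.stat(path).st_mode
    except FileNotFoundError:
        return "missing"  # no dxgkrnl: not WSL 2, or GPU-PV is not enabled
    if not stat.S_ISCHR(mode):
        return "not-a-char-device"  # assumption: dxgkrnl registers a char device
    if not os.access(path, os.R_OK | os.W_OK):
        return "no-permission"  # node exists, but this user cannot open it
    return "ok"


if __name__ == "__main__":
    print("/dev/dxg:", dxg_status())
```

A result of "missing" inside WSL 2 usually points at the host side (driver or Windows build) rather than at anything installed in the Linux distribution.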

The Linux guest version of dxgkrnl connects to the dxg kernel on the Windows host over multiple VM bus channels. The dxg kernel on the host handles requests from the Linux process in the same way as requests from regular Windows applications that use WDDM: it forwards them to the KMD (Kernel Mode Driver, which is specific to each IHV). The KMD then prepares the requests for submission to the hardware graphics accelerator. The following figure shows a simplified diagram of the interaction between the Linux /dev/dxg device and the KMD.

A simplified diagram of how Windows host components enable the dxg device to work in a Linux guest

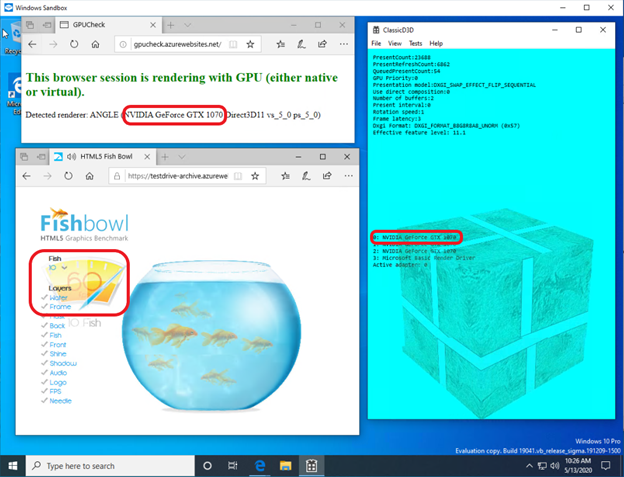

When it comes to providing this behavior in Windows guests, NVIDIA drivers have been supporting GPU-PV in Windows 10 for quite some time. NVIDIA GPUs can be used to accelerate compute and graphics output in all Windows 10 applications using Microsoft's virtualization layer. The use of GPU-PV also allows working with vGPU. Here are some examples of similar applications:

Here's what it looks like to launch a DirectX application in a Windows Sandbox container using an NVIDIA GeForce GTX 1070 video accelerator.

Graphics acceleration in a Windows Sandbox container provided by an NVIDIA GeForce GTX 1070

User-mode support

To add graphics rendering support to WSL, the relevant Microsoft development team also ported the dxcore user-mode component to Linux.

The dxcore library provides an API that allows you to get information about the WDDM-compatible graphics cards available on your system. This library was conceived as a cross-platform low-level replacement for working with DXGI adapters in Windows and Linux. The library also abstracts access to dxgkrnl services (IOCTL calls on Linux and GDI calls on Windows) using the D3DKMT API layer, which is used by CUDA and other user-mode components that rely on WSL WDDM support.

According to Microsoft, the dxcore library (libdxcore.so) will be available on both Windows and Linux. NVIDIA plans to add DirectX 12 and CUDA API support to the driver, targeting the new WSL capabilities available with WDDM 2.9. Both API libraries will be hooked up to dxcore so that they can instruct dxg to marshal their requests to the KMD on the host system.

Try the new WSL 2 features

Do you want to use your Windows computer to tackle real machine learning and artificial intelligence problems while enjoying all the conveniences of a Linux environment? If so, CUDA support in WSL gives you a great opportunity to do so. WSL lets you run CUDA Docker containers, which have proven to be the most popular computing environment among data scientists.

- To get the WSL 2 preview with GPU acceleration support, join the Windows Insider Program.

- Download the latest NVIDIA drivers, install them, and try running a CUDA container in WSL 2.

You can learn more about using CUDA in WSL here. Here, on the forum dedicated to CUDA and WSL, you can share your impressions, observations and ideas about these technologies with us.

Have you tried CUDA in WSL 2 yet?