Hello, Habr! As you know, datasets are the fuel for machine learning. Kaggle, ImageNet, Google Dataset Search, and Visual Genome are the well-known sources that people usually turn to, but it is quite rare to meet anyone who collects data from Bing Image Search or Instagram. In this article, I will show how easy it is to get data from these two sources by writing two small programs in Python.

Bing Image Search

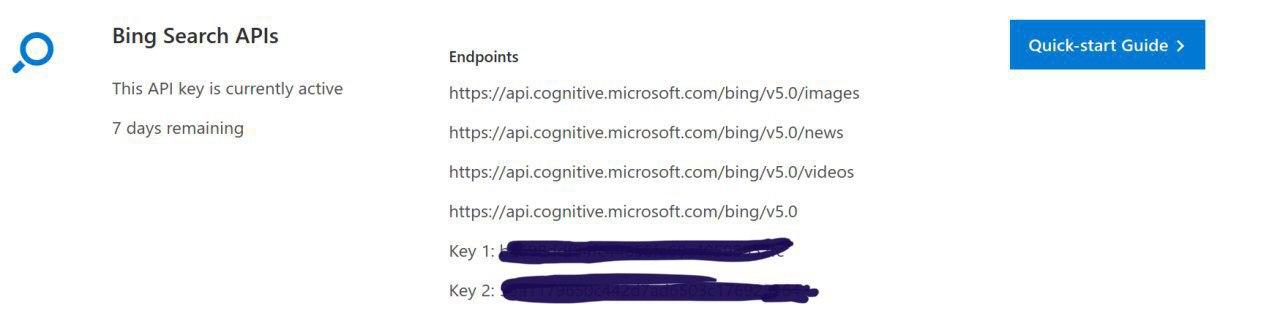

The first step is to follow the link, click the Get API Key button, and register with any of the offered accounts (Microsoft, Facebook, LinkedIn, or GitHub). Once registration is complete, you will be redirected to the Your APIs page, which lists your API keys (they are covered up in the screenshot).

Now for the code. First, import the required libraries:

from requests import exceptions
import requests
import cv2
import os

Next, specify a few parameters: the API key (choose either of the two keys offered), the search terms, the maximum number of images per request, and the endpoint URL:

subscription_key = "YOUR_API_KEY"
search_terms = ['girl', 'man']
number_of_images_per_request = 100
search_url = "https://api.cognitive.microsoft.com/bing/v7.0/images/search"

Now we will write three small functions that:

1) Create a separate folder for each search term:

def create_folder(name_folder):
    path = os.path.join(name_folder)
    if not os.path.exists(path):
        os.makedirs(path)
        print('------------------------------')
        print("create folder with path {0}".format(path))
        print('------------------------------')
    else:
        print('------------------------------')
        print("folder exists {0}".format(path))
        print('------------------------------')
    return path

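2) Return the contents of the server response as JSON:

def get_results():
    # headers and params are module-level variables set in the main loop
    search = requests.get(search_url, headers=headers, params=params)
    # raise an HTTPError if the API answered with an error status
    search.raise_for_status()
    return search.json()

3) Download an image and write it to disk: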

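def write_image(photo):
    # v is the current search result, set by the main loop
    r = requests.get(v["contentUrl"], timeout=25)
    # the with block closes the file even if the write fails
    with open(photo, "wb") as f:
        f.write(r.content)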
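get_results() reads headers and params from module-level variables, so it may help to see what a single request to the API consists of. The following is only a sketch that builds the request without sending it; build_request is a hypothetical helper, not part of the original program, and the query string is assembled with the standard library rather than with requests.

```python
from urllib.parse import urlencode

SEARCH_URL = "https://api.cognitive.microsoft.com/bing/v7.0/images/search"


def build_request(term, offset, count, key):
    """Return (url, headers) for one page of results; nothing is sent."""
    # the API key travels in a header, not in the query string
    headers = {"Ocp-Apim-Subscription-Key": key}
    # q is the search term; offset and count select the page of results
    params = {"q": term, "offset": offset, "count": count}
    return SEARCH_URL + "?" + urlencode(params), headers


first_url, first_headers = build_request("girl", 0, 100, "YOUR_API_KEY")
second_url, _ = build_request("girl", 100, 100, "YOUR_API_KEY")
print(first_url)
print(second_url)
```

The two URLs differ only in offset, which is how the program moves from one page of results to the next.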

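With the helper functions in place, the main loop goes through the search terms, requests the results page by page, and saves every image that OpenCV can read:

# Errors that are expected while downloading and can be skipped.
# This set is not defined in the listing above; it is assumed here.
EXCEPTIONS = {IOError, FileNotFoundError,
              exceptions.RequestException, exceptions.HTTPError,
              exceptions.ConnectionError, exceptions.Timeout}

for category in search_terms:
    folder = create_folder(category)
    headers = {"Ocp-Apim-Subscription-Key": subscription_key}
    params = {"q": category, "offset": 0,
              "count": number_of_images_per_request}
    # the first request is only needed for the estimated number of matches
    results = get_results()
    total = 0
    for offset in range(0, results["totalEstimatedMatches"],
                        number_of_images_per_request):
        params["offset"] = offset
        results = get_results()
        for v in results["value"]:
            try:
                # everything from the last dot of the URL is the extension
                ext = v["contentUrl"][v["contentUrl"].rfind("."):]
                photo = os.path.join(folder, "{}{}{}".format(
                    category, str(total).zfill(6), ext))
                write_image(photo)
                print("saving: {}".format(photo))
                # delete files that OpenCV cannot read as an image
                image = cv2.imread(photo)
                if image is None:
                    print("deleting: {}".format(photo))
                    os.remove(photo)
                    continue
                total += 1
            except Exception as e:
                # skip this image and move on to the next one
                if type(e) in EXCEPTIONS:
                    continue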
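The main loop takes everything after the last dot in contentUrl as the file extension. For a URL with a query string, or one without a file suffix, this produces an odd file name. One possible alternative is sketched below; image_extension and ALLOWED_EXTENSIONS are hypothetical names, and falling back to .jpg is an assumption, not something the original program does.

```python
import os
from urllib.parse import urlparse

# Extensions accepted as they are; anything else falls back to the default.
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".bmp", ".webp"}


def naive_extension(url):
    """The approach used in the main loop: slice from the last dot."""
    return url[url.rfind("."):]


def image_extension(url, default=".jpg"):
    """Take the extension from the URL path only, ignoring the query string."""
    path = urlparse(url).path
    ext = os.path.splitext(path)[1].lower()
    return ext if ext in ALLOWED_EXTENSIONS else default


for url in ("https://example.com/a/photo.JPG?w=100",
            "https://example.com/image"):
    print(naive_extension(url), "->", image_extension(url))
```

For the first URL the naive slice keeps the query string in the extension, and for the second it returns part of the host name, while the helper returns .jpg in both cases.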

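Instagram

We import the required libraries:

from selenium import webdriver
from time import sleep
import pyautogui
from bs4 import BeautifulSoup
import requests
import shutil

The browser is driven with selenium, which needs geckodriver to control Firefox. As an example, we will collect images by the hashtag #bird. Specify the path to geckodriver and open the hashtag page: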

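# executable_path is the Selenium 3 way of passing the driver location;
# Selenium 4 expects a Service object instead
browser = webdriver.Firefox(executable_path='/path/to/geckodriver')
browser.get('https://www.instagram.com/explore/tags/bird/')

Next, we write six functions that:

1) Log in to the account. Put your login and password into login.send_keys('') and password.send_keys(''):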

def enter_in_account():
    # open the login form
    button_enter = browser.find_element_by_xpath("//*[@class='sqdOP L3NKy y3zKF ']")
    button_enter.click()
    sleep(2)
    # both inputs share the same class, so they are taken by position;
    # a second find_element call would return the login field again
    fields = browser.find_elements_by_xpath("//*[@class='_2hvTZ pexuQ zyHYP']")
    login, password = fields[0], fields[1]
    login.send_keys('')       # your login
    sleep(1)
    password.send_keys('')    # your password
    enter = browser.find_element_by_xpath(
        "//*[@class=' Igw0E IwRSH eGOV_ _4EzTm ']")
    enter.click()
    sleep(4)
    # dismiss the dialog that appears after logging in
    not_now_button = browser.find_element_by_xpath("//*[@class='sqdOP yWX7d y3zKF ']")
    not_now_button.click()
    sleep(2)

2) Open the first post:

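def find_first_post():
    sleep(3)
    # move the mouse to the first post and click it
    pyautogui.moveTo(450, 800, duration=0.5)
    pyautogui.click()

Note that the coordinates passed to moveTo() are absolute screen positions, so you may need to adjust them for your screen.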

3) Get the URL of the post:

def get_url():
    sleep(0.5)
    # click at a fixed screen position (adjust it for your screen)
    pyautogui.moveTo(1740, 640, duration=0.5)
    pyautogui.click()
    return browser.current_url

4) Get the HTML code of the page:

def get_html(url):
    r = requests.get(url)
    return r.text

5) Get the URL of the image:

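def get_src(html):
    soup = BeautifulSoup(html, 'lxml')
    # the image URL is taken from the og:image meta tag
    src = soup.find('meta', property="og:image")
    return src['content']

6) Download the image. The folder and file name are set in the filename variable: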

def download_image(image_name, image_url):
    filename = 'bird/bird{}.jpg'.format(image_name)
    r = requests.get(image_url, stream=True)
    if r.status_code == 200:
        # decode gzip/deflate content before copying the raw stream
        r.raw.decode_content = True
        with open(filename, 'wb') as f:
            shutil.copyfileobj(r.raw, f)
        print('Image successfully downloaded')
    else:
        print('Image couldn\'t be retrieved')
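The listing above defines the six functions but does not show how they are called in sequence. One way they might be chained is sketched below. This wiring is an assumption rather than the author's code: collect_images is a hypothetical name, and the helpers are passed in as arguments so that the loop can be tried without a browser.

```python
def collect_images(count, get_url, get_html, get_src, download_image):
    """Make `count` attempts to save an image by chaining the helpers.

    Returns the number of images handed to download_image.
    """
    saved = 0
    seen = set()
    for index in range(count):
        url = get_url()                 # URL of the post that is now open
        if url in seen:                 # the click did not reach a new post
            continue
        seen.add(url)
        try:
            image_url = get_src(get_html(url))
        except (TypeError, KeyError):   # the page has no usable og:image tag
            continue
        download_image(index, image_url)
        saved += 1
    return saved
```

With the functions from this article, the call could look like collect_images(100, get_url, get_html, get_src, download_image), made after enter_in_account() and find_first_post(). The bird folder has to exist beforehand, because download_image() does not create it.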

The programs were tested on Ubuntu 18.04. The code is available on GitHub.