Hidden in plain sight, data centers are the backbone of the Internet. They store and transmit the information we create every day.

The more data we create, the more vital data centers become.

Today, many data centers are impractical, inefficient, and outdated. To keep them running, operators ranging from FAMGA (Facebook, Apple, Microsoft, Google, Amazon) to colocation providers are upgrading them to keep pace with an ever-changing world.

In this article, we look at the future of data centers and how they are evolving: where and how they are built, what energy they use, and what hardware runs inside them.

Location

Access to fiber, energy prices, and the local environment all play an important role in choosing where to build a data center.

By some estimates, the global data center construction market could reach $57 billion by 2025. The opportunity is big enough that commercial real estate giant CBRE has launched an entire division specializing in data centers.

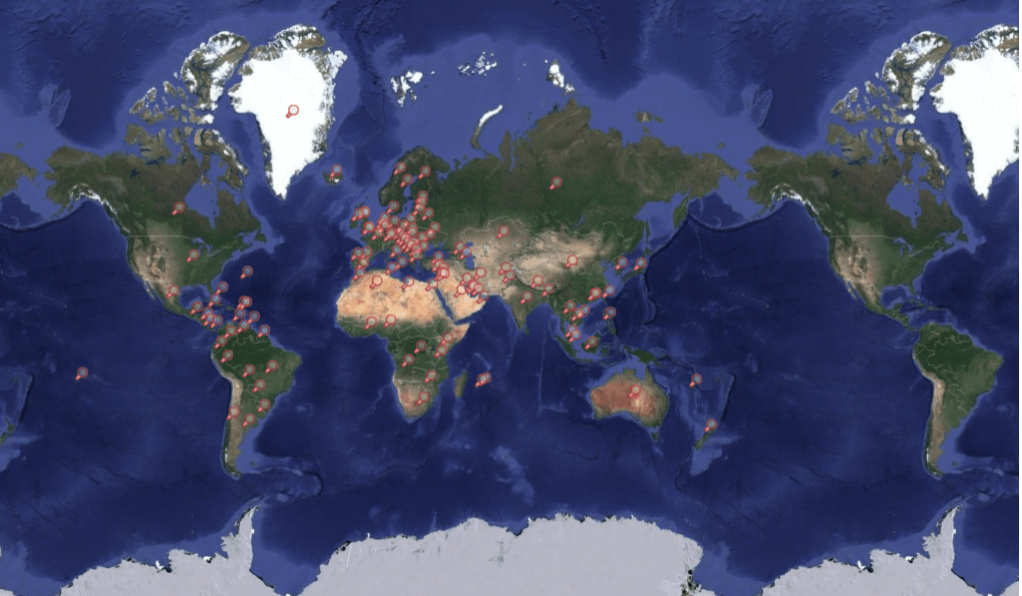

Map of data centers

Building close to cheap energy sources

Placing power-hungry data centers next to cheap power sources can make them more affordable to run. As emissions rise and large technology companies continue to power data centers with dirty energy, access to green energy sources has become an important factor.

Apple and Facebook have built data centers near hydropower resources. In central Oregon, Apple acquired a hydropower project to supply green power to its data center in Prineville. The company said Oregon's deregulated electricity market is the main reason it built several data centers in the area: deregulation allows Apple to buy electricity directly from third-party providers that use renewable sources, not just from local utilities.

In Lulea, Sweden, Facebook built a mega data center next to a hydropower plant. Data centers have been appearing in northern Sweden because, in addition to a cool climate and low earthquake risk, the region has abundant renewable energy (hydropower and wind).

Facebook's data center in Lulea, Sweden, in 2012. Source: Facebook

Several large data center operators have already moved into the region, and others are eyeing it.

In December 2018, Amazon Web Services announced the opening of its AWS Europe (Stockholm) Region, located about an hour's drive from Stockholm. In the same month, Microsoft acquired 130 hectares of land in two neighboring Swedish municipalities, Gävle and Sandviken, with the goal of building data centers there.

Besides being close to cheap, clean energy, data center companies are also drawn to cool climates. Locations near the Arctic Circle, such as northern Sweden, allow data centers to save on cooling costs.

Telecommunications company Altice Portugal says its Covilhã data center uses outside air for 99% of its server cooling. And Google's data center in Hamina, Finland, uses seawater from the Gulf of Finland to cool the facility and reduce energy consumption.

Verne Global has opened a campus in Iceland that connects to local geothermal and hydropower sources. It is located on a former NATO base and sits between Europe and North America, the two largest data markets in the world.

Construction in Emerging Economies

Building a data center where Internet traffic is growing reduces network load and speeds up data transfer in the region.

For example, in 2018 the Chinese company Tencent, which owns WeChat and holds a stake in Fortnite maker Epic Games, set up a data center in Mumbai. The move signals that Internet use in the region is high and that Tencent's gaming platforms are becoming more popular there.

Building data centers in Internet-intensive areas is also a strategic business move: as local businesses grow, they are likely to consider moving their operations to the nearest data center.

Many data centers also offer colocation: the operator provides the building, cooling, power, bandwidth, and physical security, and then leases space to customers who install their own servers and storage. Colocation usually targets companies with smaller needs and helps them save money on infrastructure.

Tax incentives and local law

Data centers are an important new source of revenue for electricity producers, so governments try to attract large companies with a range of incentives.

Starting in January 2017, the Swedish government cut the tax on electricity used by data centers by 97%. Electricity in Sweden is relatively expensive, and the tax cut put Sweden on par with other Scandinavian countries.

In December 2018, Google secured a 15-year, 100% sales tax exemption for a $600 million data center in New Albany, Ohio. The deal can be extended until 2058. In September 2018, France, hoping to attract talent and global capital after Brexit, announced plans to cut electricity taxes for data centers.

In some cases, building a local data center is one way for companies to keep operating in countries with strict regulatory regimes.

In February 2018, Apple began storing data at a center in Guizhou, China, to comply with local laws. While Chinese authorities previously had to go through the U.S. legal system to access Chinese citizens' information stored in U.S. data centers, the local data center gives them easier and faster access to Chinese citizens' information stored in Apple's cloud.

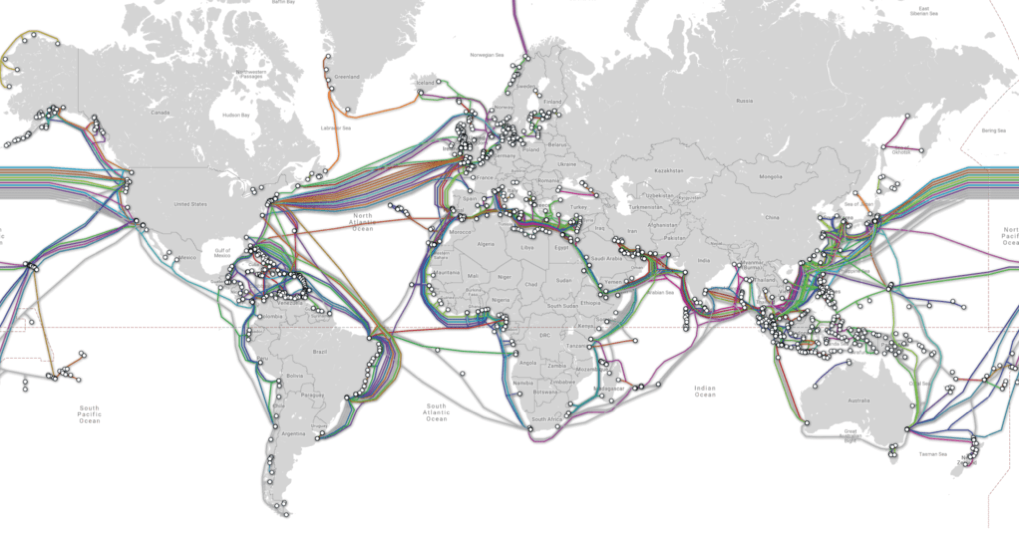

Fiber optic connectivity and security

Two other critical factors in choosing a data center location are fiber connectivity and strong security.

For many years, fiber optic networks have been a key factor in choosing data center locations, even though data often reaches mobile devices over wireless networks or local Wi-Fi.

Most of the time, data travels to and from storage over fiber optic cables, which connect data centers to cellular antennas, home routers, and other storage devices.

Submarine cable map

Ashburn, Virginia, and its surrounding region have grown into a major data center market, thanks in large part to the extensive fiber optic infrastructure that Internet company AOL built after establishing its headquarters there. As other companies such as Equinix have moved to the region and built their own data centers, its fiber networks have kept growing, attracting more and more new data centers.

Facebook invested nearly $2B in a data center in Henrico, Virginia, and in January 2019 Microsoft received a $1.5 million grant to expand its data center in Southside Virginia for the sixth time.

New alternatives to existing fiber networks are also emerging as big tech companies build their own connectivity infrastructure.

In May 2016, Facebook and Microsoft announced a jointly built submarine cable between Virginia Beach, Virginia, and Bilbao, Spain. In 2017, Facebook announced further plans to build 200 miles of its own land-based fiber optic network in Los Lunas, New Mexico, to connect its New Mexico data center to its other server farms. The underground system will create three unique network routes for data traveling to and from Los Lunas.

Besides connectivity, security is another important consideration, especially for data centers that store sensitive information.

For example, Norwegian financial giant DNB partnered with data center operator Green Mountain to build its own data center. Green Mountain housed DNB's data center in a high-security facility: a converted bunker inside a mountain. The company claims the mountain protects it from all kinds of hazards, including terrorist attacks, volcanic eruptions, storms, and earthquakes.

The Swiss Fort Knox data center is located under the Swiss Alps, behind a door disguised as a rock. Its complex interior includes many tunnels that can only be accessed with the appropriate clearance. The facility is backed by emergency diesel generators and an air pressure system that keeps toxic gas out.

Swiss Fort Knox is physically and digitally hyper-secure. Source: Mount10

Because security is so important, the locations of many data centers have never been disclosed: information about where they are could be used as a weapon.

In October 2018, WikiLeaks published an internal list of AWS facility locations. The leak also revealed that Amazon was a contender for a private cloud contract with the Department of Defense worth about $10B.

WikiLeaks itself has had difficulty finding the right data center to host its information. AWS stopped hosting WikiLeaks because it violated the terms of service, namely by publishing documents it did not have the rights to and putting people at risk.

As a result, WikiLeaks has moved between many different data centers. At one point, there were even rumors that it was considering moving its servers offshore to Sealand, an unrecognized state in the North Sea.

Structure

While location may be the single most important factor in reducing the risk of data center failure, the structure itself also plays an important role in reliability and durability.

Proper construction can make a data center resilient to earthquakes, floods, and other natural disasters. Structures can also be designed to accommodate expansion and reduce energy costs.

Across sectors, from healthcare to finance and manufacturing, companies rely on data centers to support growing data consumption. Some of these data centers are company-owned and on-premises, while others are shared and located remotely.

Either way, data centers sit at the heart of a growing tech world and are undergoing physical transformations of their own. According to the CB Insights Market Sizing tool, the global data center services market is expected to reach $228 billion by 2020.

One of the latest shifts in data center construction concerns size. Some data centers have become smaller and more widely distributed (edge data centers), while others are bigger and more centralized than ever (mega data centers).

In this section, we look at edge data centers and mega data centers.

Edge data centers

Small distributed data centers, called edge data centers, are created to provide hyper-local storage.

While cloud computing has traditionally served as a reliable and cost-effective means of connecting many devices to the Internet, the continuous growth of IoT and mobile computing has put a strain on the bandwidth of data center networks.

Edge computing is now emerging as an alternative. (Edge computing is a distributed computing paradigm that brings computation close to end devices. It is used to reduce network latency and make more efficient use of network bandwidth.)

This involves placing computing resources closer to where data originates (e.g., motors, generators, or other equipment). Doing so reduces the time it takes to move data to centralized computing locations such as the cloud.
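To get a feel for why distance matters, here is a back-of-the-envelope sketch of propagation delay alone. It assumes light travels through optical fiber at roughly 200 km per millisecond (about two thirds of its speed in a vacuum) and ignores routing, queuing, and processing, which add more delay in practice; the two distances are hypothetical.

```python
# Rough signal speed in optical fiber: about 2/3 of the speed of light,
# i.e. roughly 200,000 km/s, or 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def fiber_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, in milliseconds.

    Counts only the time light spends in the cable (there and back);
    routing, queuing, and processing delays are ignored.
    """
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances: a centralized cloud region vs. an edge site
# at the base of a nearby cell tower.
for label, km in [("central cloud, 1,500 km", 1500), ("edge site, 10 km", 10)]:
    print(f"{label}: {fiber_rtt_ms(km):.2f} ms round trip")
```

Even before any processing, the distant region costs milliseconds per round trip, which adds up quickly for applications that exchange many messages.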

Although this technology is still in its infancy, it already offers a more efficient way to process data for numerous use cases, including autonomous vehicles. For example, Tesla vehicles have powerful on-board computers that process data from dozens of sensors around the vehicle with low latency (in near real time), which allows the vehicle to make timely, independent decisions.

However, other advanced technologies, such as wireless medical devices and sensors, do not have the necessary computing power to directly process large flows of complex data.

As a result, smaller modular data centers are being deployed to provide hyperlocal storage and data processing. According to CB Insights Market Sizing, the global edge computing market will reach $34B by 2023.

These data centers, which are typically the size of a shipping container, are located at the base of cell towers or as close as possible to the data source.

In addition to transportation and healthcare, these modular data centers are used in industries such as manufacturing, agriculture, energy, and utilities. They also help mobile network operators (MNOs) deliver content to mobile subscribers faster, while many technology companies use these systems to store (or cache) content closer to their end users.

Vapor IO is one of the companies offering colocation services, hosting small data centers at the base of cell towers. The company has a strategic partnership with Crown Castle, the largest provider of wireless infrastructure in the United States.

Other companies, such as EdgeMicro, provide micro data centers that connect mobile network operators (MNOs) with content providers (CPs). EdgeMicro's founders draw on executive experience at organizations such as Schneider Electric, one of the largest energy companies in Europe, and CyrusOne, one of the largest and most successful data center service providers in the United States.

The company recently unveiled its first production unit and plans to sell colocation services to content providers such as Netflix and Amazon, which would benefit from faster and more reliable content delivery. These colocation services suit companies that want to own, but not manage, their data infrastructure.

Edge Micro

And startups are not the only ones involved in the peripheral data center market.

Large companies such as Schneider Electric not only collaborate with startups but also develop their own data center products. Schneider offers several prefabricated modular data centers suited to a variety of industries that need hyperlocal computing and storage.

Huawei

These end-to-end solutions integrate power, cooling, fire suppression, lighting and control systems in one package. They are designed for quick deployment, reliable operation and remote monitoring.

Mega data centers

At the other end of the spectrum are mega data centers: facilities with at least 1 million square feet of space. They are large enough to serve tens of thousands of organizations simultaneously and benefit greatly from economies of scale.

Although these mega data centers are expensive to build, their cost per square foot is much lower than that of an average data center.

One of the largest projects is a 17.4 million sq. ft facility built by Switch Communications, which provides businesses with space, cooling, power, bandwidth, and physical security for their servers.

Switch

Besides its huge Citadel campus in Tahoe Reno, the company has a 3.5 million sq. ft data center in Las Vegas, a 1.8 million sq. ft facility in Grand Rapids, and a 1 million sq. ft facility in Atlanta. According to the company's website, the Citadel campus is the world's largest colocation data center.

Big tech is also actively building mega data centers in the US, with Facebook, Microsoft, and Apple all building facilities to support their growing storage needs.

For example, Facebook is building a 2.5 million sq. ft data center in Fort Worth, Texas, to process and store its users' personal data. The data center was originally planned at only 750,000 sq. ft, but the social media company decided to triple its size.

Source: Facebook

The new data center will cost about $1 billion and sits on 150 acres of land, leaving room for future expansion. In May 2017, 440,000 sq. ft.

One of Microsoft's most recent investments is a data center cluster in West Des Moines, Iowa, which cost the company $3.5 billion. Together, the cluster covers 3.2 million square feet, and its largest data center covers 1.7 million square feet. That data center, called Project Osmium, sits on 200 acres of land and is expected to be completed by 2022.

In recent years, Iowa has become a popular destination for data centers due to its low energy prices (some of the lowest in the country) and low disaster risk.

Apple is also building a smaller, 400,000-square-foot facility in Iowa that will cost $1.3 billion.

Source: Apple Newsroom

While some Apple facilities are built from the ground up to manage all of its content (app storage, its music streaming service, iCloud storage, and user data), Apple repurposed a 1.3 million sq. ft solar panel plant in Mesa, Arizona, into a data center that opened in August 2018. The new data center runs on 100% green energy thanks to a nearby solar farm.

Outside of the US, specific regions that have attracted these mega data centers include Northern Europe, which has been a popular building destination for tech giants for its cool temperatures and tax breaks.

Hohhot, in China's Inner Mongolia region, is also well suited to mega data centers, with access to cheap local energy, cool temperatures, and university talent (from Inner Mongolia University).

While mega data centers have become a popular topic across China, Inner Mongolia has become the center of such development. China Telecom (10.7 million sq. ft), China Mobile (7.8 million sq. ft), and China Unicom (6.4 million sq. ft) have all established mega data centers in the region.

Source: World's Top Data Centers

Data center innovation

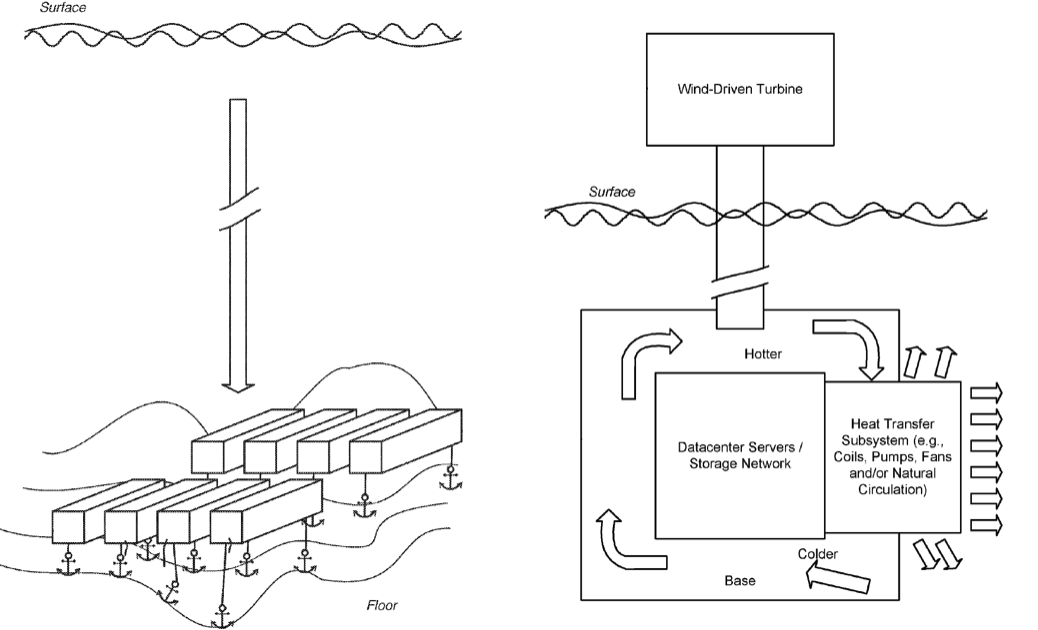

Beyond edge and mega data centers, new data center structures designed with environmental benefits in mind are another emerging trend.

Today, many organizations are experimenting with data centers that operate in and around the ocean. These structures use their environment and resources to naturally cool the servers at a very low cost.

One of the most advanced examples of using the ocean for natural cooling is Microsoft's Project Natick. Following the concept described in its 2014 patent application titled "Submersible Data Center," Microsoft submerged a small cylindrical data center off the coast of Scotland.

The data center runs on 100% locally produced renewable electricity from onshore wind and solar, as well as tidal and wave sources. The facility uses the ocean to carry heat away from its infrastructure.

Source: Microsoft

Although the project is still at an early stage, Microsoft has made great strides with it, and it could pave the way for more ocean-based data centers in the future.

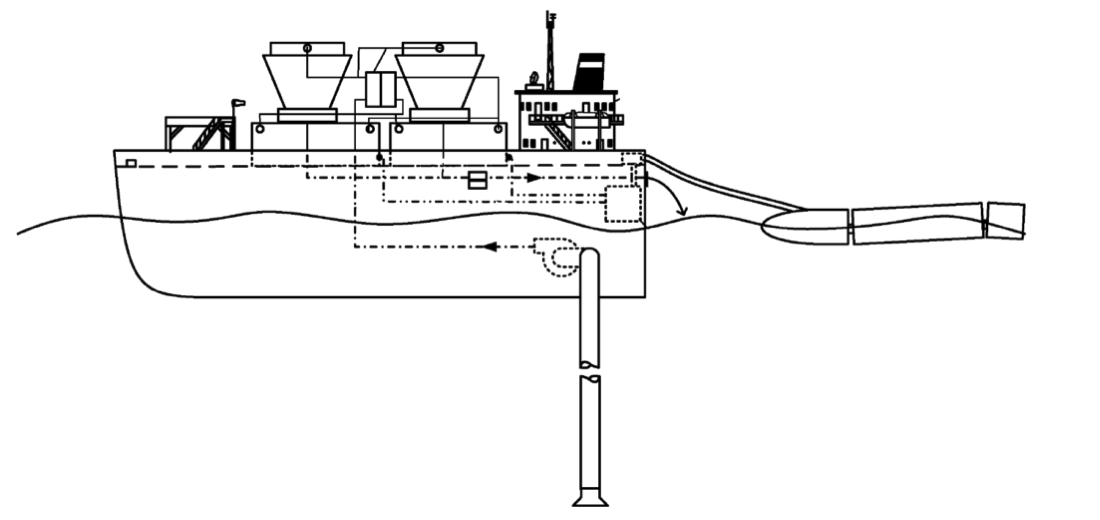

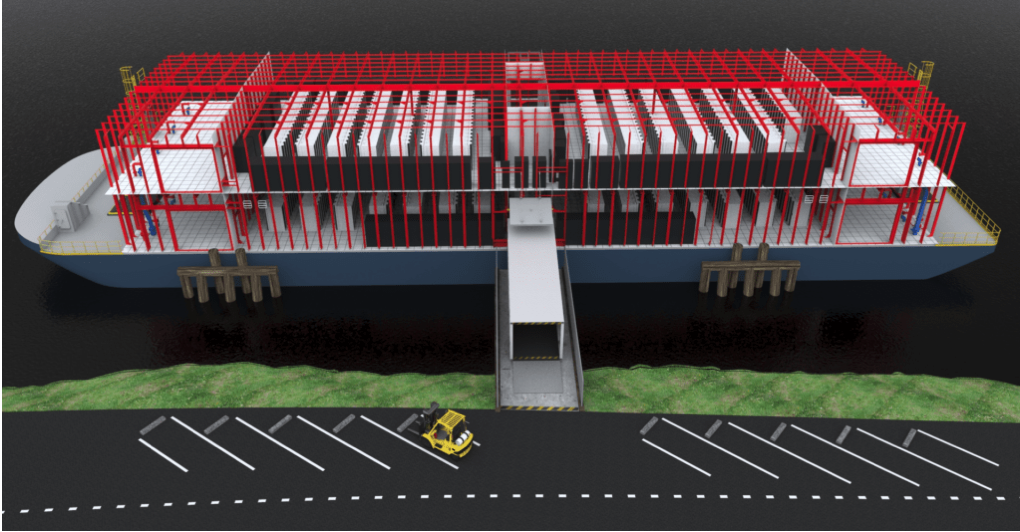

Google has also explored ocean data centers. In 2009, the company applied for a patent for a "Water Based Data Center," which describes data centers aboard floating barges.

As in Microsoft's design, the structure would use the surrounding water to cool the facility naturally. The barge could also generate energy from ocean currents.

Google has not said whether these designs were ever tested, but the company is believed to have been behind a floating barge in San Francisco Bay back in 2013.

Nautilus Data Technologies was launched with a very similar idea. In recent years, the company has raised $58 million to bring its floating data center concept to life.

Source: Business Chief

The company focuses less on the vessel itself and more on the technology needed to power and cool the data center. Nautilus's main objectives are reducing the cost of computing, cutting energy consumption, eliminating water consumption, and reducing air pollution and greenhouse gas emissions.

Energy efficiency and economy

Data centers currently account for about 3% of global electricity consumption, and this share is expected to grow. Nor is this electricity always “clean”: according to the United Nations (UN), the information and communication technology (ICT) sector, which relies heavily on data centers, produces as much greenhouse gas as the aviation industry does from fuel.

Although the ICT sector has managed to curb growth in electricity consumption, partly by closing old, inefficient data centers, this strategy can only go so far as Internet use and data production keep rising.

Going forward, data centers have two ways to reduce emissions: become more energy efficient and make sure the energy they use is clean.

Reduced consumption and improved energy efficiency

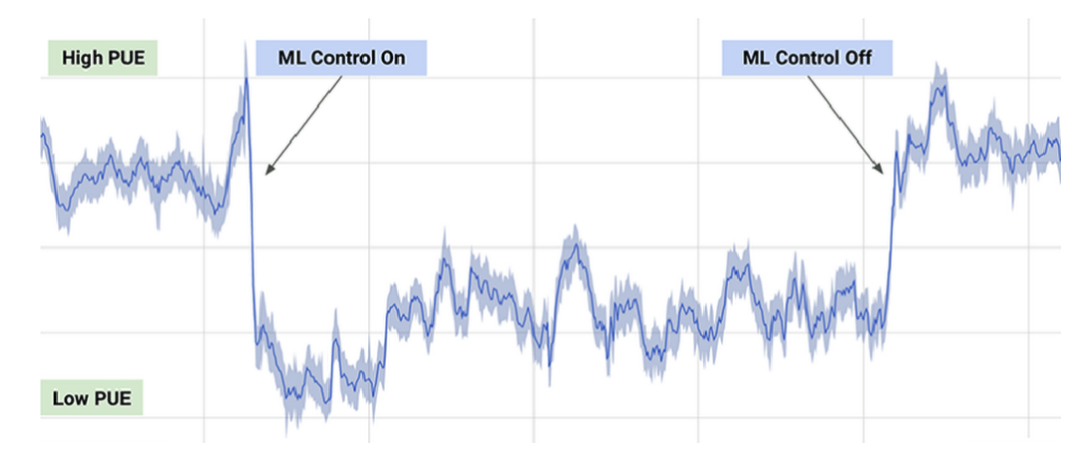

In 2016, DeepMind and Google worked on an AI recommendation system to help make Google's data centers more energy efficient. The focus was on small improvements: even minor changes were enough to save significant energy and reduce emissions. However, once the recommendations were in place, implementing them took too much effort from operators.

Data center operators asked whether the recommendations could be implemented autonomously. However, according to Google, no AI system is yet ready to take full control of a data center's cooling and heating processes.

Google’s current AI control system takes specific actions, but only under a limited set of conditions, prioritizing safety and reliability. According to the company, the new system delivers energy savings of around 30% on average.

PUE (power usage effectiveness) over a typical day with ML control on and off.

Source: DeepMind
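PUE is the ratio of total facility energy to the energy delivered to IT equipment, so a value of 1.0 would mean every kilowatt-hour goes to computing. The sketch below uses made-up daily figures to show how trimming cooling energy moves PUE; the 30% cut is borrowed from the savings figure above purely for illustration, and treating it as a reduction in cooling energy only is an assumption.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical daily energy budget for one facility, in kWh.
it_kwh = 1000.0       # servers, storage, network gear
cooling_kwh = 400.0   # chillers, fans, pumps
other_kwh = 100.0     # lighting, power conversion losses, etc.

before = pue(it_kwh + cooling_kwh + other_kwh, it_kwh)

# Assume the control system cuts cooling energy by 30%; IT load is unchanged.
after = pue(it_kwh + cooling_kwh * (1 - 0.30) + other_kwh, it_kwh)

print(f"PUE before: {before:.2f}, after: {after:.2f}")
```

Because the IT load stays fixed, every kilowatt-hour saved on cooling lowers PUE directly, which is why cooling is the usual optimization target.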

Google also used its AI system to tune a Midwest data center during a tornado watch. While human operators focused on preparing for the storm, the AI system used its knowledge of tornado conditions, such as drops in air pressure and changes in temperature and humidity, to adjust the data center's cooling systems for maximum efficiency during the tornado. In winter, the AI control system likewise adapts to the weather to reduce the energy needed to cool the data center.

However, the approach has drawbacks. Gaps in AI technology make it hard to build an environment in which a data center can easily make effective decisions, and AI is difficult to scale: every Google data center is unique, so it is hard to roll out a single AI tool to all of them at once.

Another way to increase efficiency is to change how the hottest parts of the data center, such as servers or certain types of chips, are cooled.

One such method is to use liquid instead of air to cool components. Google CEO Sundar Pichai said the company's recently released chips are so powerful that it had to use liquid cooling to keep them at the right temperature.

Some companies are experimenting with placing data centers underwater to simplify cooling and improve energy efficiency. This gives the data center constant access to naturally cool seawater, which carries heat away from the equipment into the surrounding ocean. Because such a data center can be placed off almost any coast, operators can choose a site connected to clean energy, as with Microsoft's 40-foot-long Project Natick, which is powered by the Orkney Islands' wind-supplied grid.

Smaller underwater data centers have the advantage of being modular, which makes them simpler to deploy than new data centers on land.

Despite these advantages, some experts are wary of underwater data centers because they could flood. Since cooling involves drawing in cool water and releasing warmer water, these data centers could also warm the surrounding sea and affect local marine life. And although underwater data centers such as Project Natick are designed to operate without human intervention, they can be difficult to repair if problems arise.

In the future, data centers could also contribute to clean energy and efficiency by reusing the waste heat they produce. Several projects are already exploring heat reuse. Nordic data center operator DigiPlex is partnering with heating and cooling supplier Stockholm Exergi on a heat recovery system: excess heat from the data center is collected and sent into the local district heating system, potentially providing heat for up to 10,000 people in Stockholm.

Buying Clean Energy

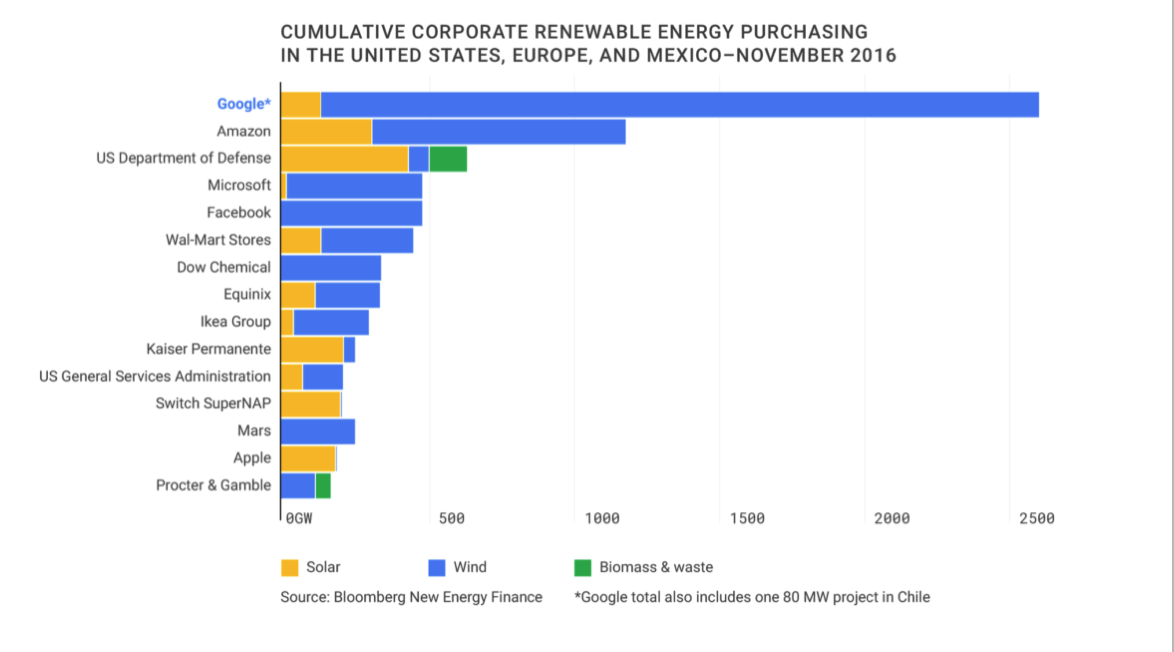

According to the IEA, the ICT industry has made some progress on clean energy and currently accounts for almost half of all corporate power purchase agreements for renewable electricity.

As of 2016, Google was the world's largest corporate buyer of renewable energy.

Source: Google Sustainability

In December 2018, Facebook acquired 200 MW of power from a solar power company. In Singapore, where land area is limited, Google has announced plans to buy 60 MW of rooftop solar.

However, while large companies like Google and Facebook have the resources to buy renewable energy in bulk, this can be difficult for smaller companies that may not need large amounts of energy.

Another, more expensive option is a microgrid: an independent power source installed at the data center itself.

One example of this strategy is fuel cells, such as those sold by recently listed Bloom Energy. The cells use hydrogen as fuel to generate electricity. Although such alternative sources are often used for backup power, a data center can rely on them entirely, though doing so is expensive.

Another way data centers are pursuing clean energy is through renewable energy certificates (RECs). Each REC represents a certain amount of clean energy and is typically used to offset the “dirty” energy a company consumes: for a given amount of dirty energy used, RECs represent an equivalent amount of clean energy produced elsewhere. The money paid for RECs then flows back into the renewable energy market.

The REC model has its problems, however. For one, RECs only offset dirty energy; they do not mean the data center actually runs on clean energy. The REC approach is generally easier than finding sources that can supply enough clean energy to meet a data center's needs, but it is usually out of reach for small companies, which don't always have the capital to bet on the fluctuating output of a solar or wind farm.
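The gap between offsetting and actually running on clean power can be made concrete with a little arithmetic. In this minimal sketch all figures are hypothetical, the 1 MWh-per-certificate convention is the common US one, and pricing and trading of certificates are ignored entirely.

```python
MWH_PER_REC = 1.0  # assumption: US convention, one REC = 1 MWh of renewable generation


def rec_coverage(consumed_mwh: float, recs_retired: int) -> float:
    """Share of consumption offset on paper by retired RECs (capped at 100%)."""
    if consumed_mwh <= 0:
        raise ValueError("consumption must be positive")
    return min(1.0, recs_retired * MWH_PER_REC / consumed_mwh)


# Hypothetical facility: 50,000 MWh/year drawn from a grid that is 20% renewable.
consumed = 50_000.0
grid_renewable_share = 0.20
recs = 50_000

print(f"Offset on paper by RECs:          {rec_coverage(consumed, recs):.0%}")
print(f"Physically renewable electricity: {grid_renewable_share:.0%}")
```

The facility can report full coverage while the electricity it physically draws is still mostly from fossil sources, which is exactly the limitation described above.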

In the future, technology may make it easier for small producers and buyers to work together. Market models and portfolios of renewable energy sources, similar to mutual funds, are emerging that make it possible to assess and quantify fluctuations in renewable output.
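The mutual-fund analogy rests on diversification: sources whose output rises and falls at different times smooth each other out. The sketch below illustrates this with invented solar and wind profiles (the assumption that wind is stronger at night is for illustration only) and uses the coefficient of variation as a simple volatility measure; real portfolio models are far more sophisticated.

```python
from statistics import mean, pstdev
from typing import Sequence


def coefficient_of_variation(series: Sequence[float]) -> float:
    """Population standard deviation divided by the mean: a unitless volatility measure."""
    return pstdev(series) / mean(series)


# Hypothetical output (MWh) over six 4-hour blocks of one day.
solar = [0.0, 2.0, 8.0, 9.0, 3.0, 0.0]  # peaks around midday
wind = [7.0, 6.0, 2.0, 1.0, 5.0, 7.0]   # assumed stronger at night

portfolio = [s + w for s, w in zip(solar, wind)]

for name, series in [("solar", solar), ("wind", wind), ("solar + wind", portfolio)]:
    print(f"{name:>12}: CV = {coefficient_of_variation(series):.2f}")
```

Because the two invented profiles peak at different times, the combined output is far steadier than either source alone; with correlated sources the benefit would shrink.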

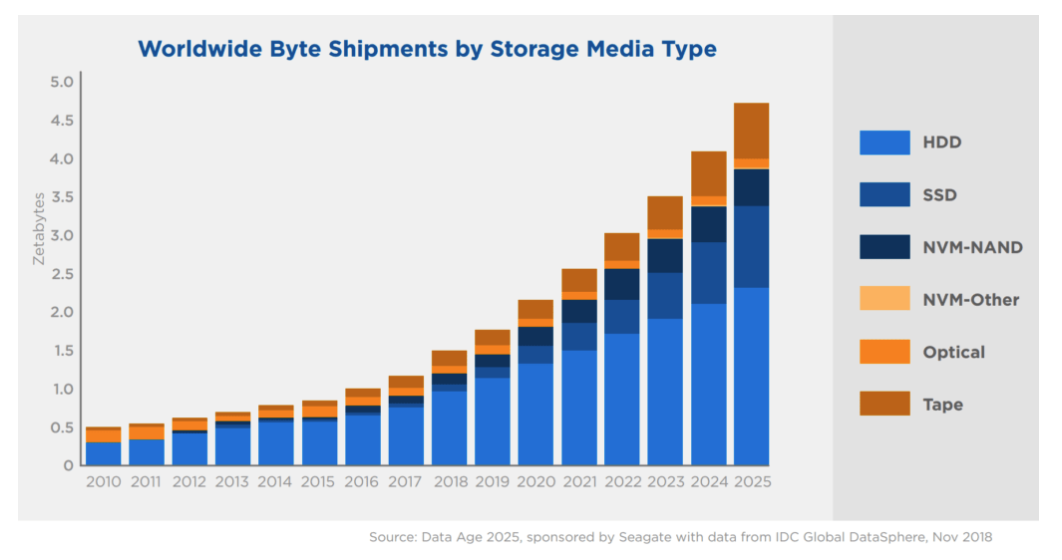

Solid state drives (SSD)

Solid state drives, or SSDs, are storage devices that can read and write data. They retain data without power, which is called persistent storage. This differs from temporary storage such as random access memory (RAM), which holds information only while the device is running.

SSDs compete with hard disk drives (HDDs), another form of mass storage. The main difference is that SSDs have no moving parts, which makes them more durable and lighter.

However, SSDs remain more expensive than traditional HDDs - a trend that is likely to continue without a breakthrough in the production of solid state drives.

Source: Silicon Power Blog

Although SSDs have become the standard for laptops, smartphones, and other thin-profile devices, they are less practical and therefore less common in large data centers due to their higher price tag.

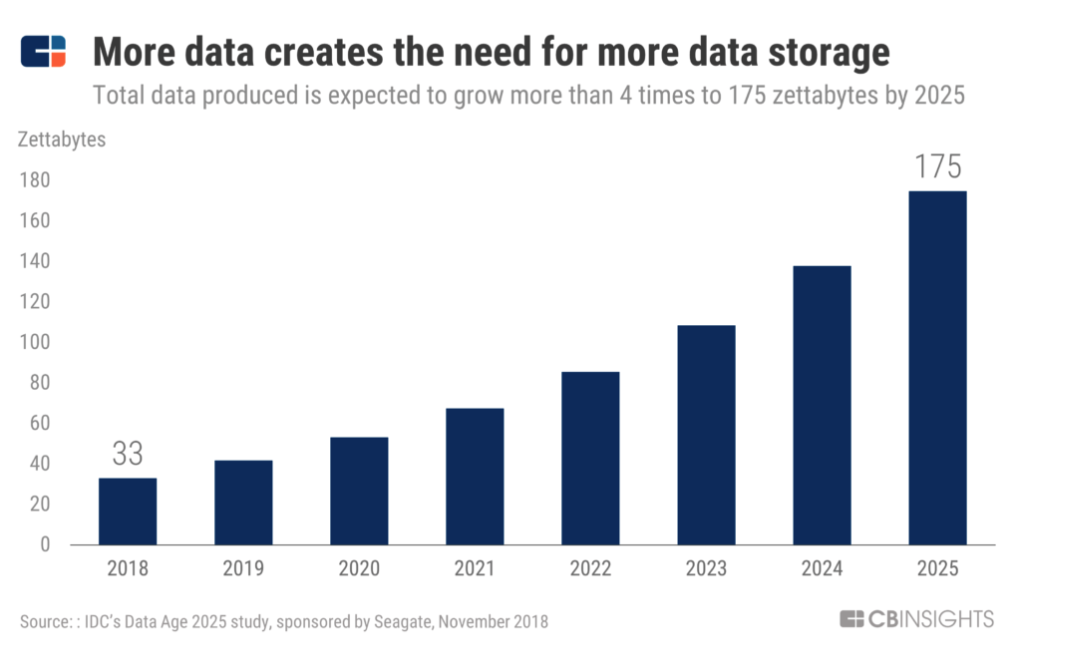

Source: Seagate & IDC

According to IDC, by the end of 2025 more than 80% of corporate storage capacity will remain in the form of hard drives. But after 2025, SSDs may become the storage medium of choice for businesses and their data centers.

With the widespread adoption of solid state drives in consumer electronics and IoT devices, increased demand can lead to increased supply and, ultimately, lower costs.

Cold storage

Unlike newer SSDs, cold storage relies on many older technologies, such as CD-Rs and magnetic tape, to store data using as little power as possible.

Accessing data in cold storage takes much longer than in hot storage (for example, SSDs), so such systems should hold only infrequently used data.

"Hot" clouds run on SSDs and hybrid drives and provide the fastest access to information. Cold storage solutions are cheaper and slower: cold clouds store information that does not need to be available instantly, and retrieving it on request can take anywhere from a few minutes to tens of hours.

Literally speaking, the names also reflect the temperature inside data centers: the faster the cloud, the more heat its equipment generates.
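Deciding what belongs in hot versus cold storage often comes down to how recently data was accessed. Here is a minimal sketch of an age-based tiering rule; the 30-day threshold, the object names, and the two-tier model are hypothetical, and real services use richer policies (access counts, object size, retrieval costs).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical policy threshold: objects untouched for 30+ days go cold.
COLD_AFTER = timedelta(days=30)


@dataclass
class StoredObject:
    key: str
    last_accessed: datetime


def choose_tier(obj: StoredObject, now: datetime) -> str:
    """Return "hot" for recently used data and "cold" for data idle past the threshold."""
    return "cold" if now - obj.last_accessed >= COLD_AFTER else "hot"


now = datetime(2019, 6, 1)
objects = [
    StoredObject("profile-photo.jpg", now - timedelta(hours=3)),
    StoredObject("2016-backup.tar", now - timedelta(days=900)),
]
for obj in objects:
    print(obj.key, "->", choose_tier(obj, now))
```

A rule this simple trades occasional slow retrievals of old data for much cheaper storage of the bulk that is rarely touched.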

Even so, the world's largest technology companies, such as Facebook, Google, and Amazon, use cold storage to keep even the most granular user data. Cloud providers like Amazon, Microsoft, and Google also offer these services to customers who want to store data at low cost.

According to the CB Insights Market Sizing tool, the cold storage market is expected to reach nearly $213B by 2025.

![image](habrastorage.org/webt/cr/cc/t-/crcct-u3vtckequtnd0eazlwkqk.png)

Source: IBM

No business wants to fall behind the curve on data collection. Most organizations reason that it is best to collect as much data as possible today, even if they have not yet decided how it will be used tomorrow.

This kind of unused data is called dark data: data that is collected, processed, and stored but usually not used for any specific purpose. IBM estimates that roughly 90% of sensor data collected from the Internet of Things is never used.

In the future, there may be more effective ways to reduce the amount of dark data collected and stored. For now, even with advances in artificial intelligence and machine learning, enterprises still want to collect and store as much data as possible so they can use it later.

For the foreseeable future, then, cold storage remains the best way to store data at the lowest cost. This trend will continue as users generate more data and organizations collect it.

Other forms of data storage

In addition to solid state drives, hard drives, CDs, and magnetic tapes, a number of new storage technologies are emerging that promise to increase capacity per storage unit.

One promising technology is heat-assisted magnetic recording, or HAMR. HAMR dramatically increases the amount of data that can be stored on devices such as hard drives by heating the disk surface with a high-precision laser during writing. This makes data recording more precise and stable, which increases storage capacity.

This technology is expected to enable 3.5-inch hard drives of up to 20 TB by 2020, with capacity then growing 30% to 40% a year. Seagate has already built a 16 TB 3.5-inch drive, which it announced in December 2018.
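A 30% to 40% annual growth rate compounds quickly. This small sketch simply applies that stated range to the 20 TB starting point; it is arithmetic on the figures above, not a forecast, and it assumes the rate holds constant year after year.

```python
def projected_capacity_tb(base_tb: float, annual_growth: float, years: int) -> float:
    """Capacity after compounding a constant annual growth rate for `years` years."""
    return base_tb * (1 + annual_growth) ** years


# Start from the 20 TB figure expected around 2020 and apply both ends of the range.
for rate in (0.30, 0.40):
    path = [round(projected_capacity_tb(20, rate, y), 1) for y in range(4)]
    print(f"{rate:.0%}/year:", path)
```

At either end of the range, capacity roughly doubles within two to three years.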

Seagate has actively patented HAMR technology over the past decade, and in 2017 it filed a record number of HAMR-related patents.

(Note: There is a 12- to 18-month lag between patent filing and publication, so 2018 may turn out to have an even greater number of HAMR patent filings.)
