The spread of Industrial Internet of Things technologies, unmanned vehicles and other cyber-physical systems that affect human safety makes it increasingly important for programmable electronic devices to comply with international functional safety standards, in particular IEC 61508 and ISO 26262.

Hardware and software developers face many practical questions. Answering them requires a comprehensive understanding that lets you quickly grasp the principles for solving many particular questions and problems that seem minor but are an important part of the puzzle.

To understand in depth how critical hardware and software systems are developed and certified, you need to know the "three pillars" of functional safety:

- Safety Culture;

- Functional Safety Management (FSM);

- Safety Proof.

This article focuses on the first of these, Safety Culture. More precisely, it looks at the characteristic features of different types of safety culture and at the specifics of safety culture in companies that develop electrical, electronic and software components of safety systems.

The term “safety culture” is considered in detail in [1].

Introduction

Since the tragedies at the Chernobyl nuclear power plant and on the Piper Alpha oil platform, safety culture has, one might say, "soaked into the DNA" of people working in hazardous sectors of the economy. But hazardous production is one thing, and developing hardware or software for critical systems is another. The work of circuit designers and programmers obviously poses no risk to life, either for the developers themselves or for people living near the office. Safety issues concern the product's area of application, because failures caused by errors and miscalculations may occur, first, not immediately and, second, somewhere else.

And that place may turn out to be very unsafe, and the failure may happen at a very bad time...

Safety culture is part of organizational culture. Jim Collins covers this topic excellently in his bestselling book [2]. Here is a short quote:

"All companies have some kind of culture, some have discipline, but few have a culture of discipline. If employees are disciplined, no hierarchy is needed. If there is discipline in thinking, there is no need for bureaucracy. If there is discipline in action, you don't need extra control. When a culture of discipline is combined with ethical business conduct, it is a magic potion for outstanding achievement."

In this passage, the author is talking about the personal culture of employees, which he calls a culture of discipline. Other authors use other terms: Alexander Kirillovich Dianin-Havard speaks of virtuous leadership [3], and Guy Kawasaki, quoting Steve Jobs, speaks of "A players" [4]. These authors show clearly that an organization's activity is the activity of its employees, with all the variety of their personal motivations and interpersonal relationships.

One more thought before moving on to the substance. "Safety" and "safety culture" can, of course, carry different qualifiers: industrial, aviation, transport, medical. But since the underlying essence is the same, methodological and regulatory literature is gradually abandoning the "industry adjectives" used with the word "safety" or in place of it. For example, the IAEA glossary [5] no longer uses the word "nuclear" in the term "safety culture".

Many methods have been developed for auditing and analyzing safety culture in industry, healthcare and transport worldwide. For example, a review by The Health Foundation (UK) [6] lists more than 20 such methods and gives references to 125 studies in this area. Similar reviews have been published by other organizations [7]. In practice, the following methods of analyzing organizational safety culture are the most common:

- Hearts and Minds;

- Safety Culture Maturity Model (SCMM);

- Safety Culture Indicator Scale Measurement System (SCISMS).

The Hearts and Minds program for analyzing and transforming safety culture is perhaps the best known of these methods. Shell developed it for its own use. It has since become the de facto standard in the global oil and gas industry and has also spread to the energy, mining, chemical, pharmaceutical, defense and other hazardous industries. The program is now administered by the UK Energy Institute, which accredits consulting companies to support implementation, train in-house trainers, and so on. In Russia and the CIS, the Hearts and Minds program is officially represented by Yamnaska.

And finally, before moving on to the types of culture, one cannot fail to mention the detailed work [8] prepared under the auspices of Neftegazstroyprofsoyuz of Russia.

Westrum model

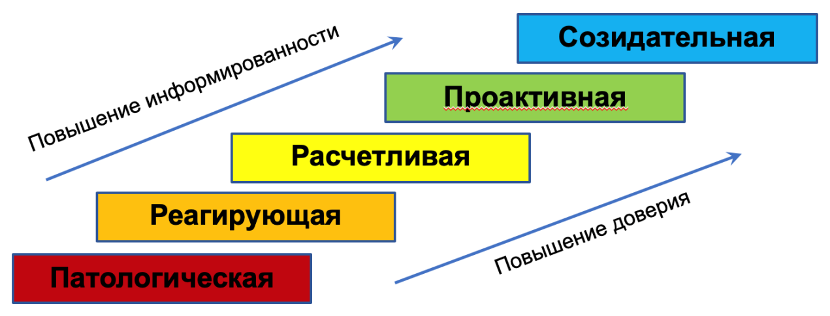

Among other things, Hearts and Minds is based on an evolutionary safety culture model known as the Westrum model, which defines five types of safety culture (Figure 1).

Fig. 1. Westrum's evolutionary model of safety culture

The Westrum model assumes that safety culture evolves. Of course, the reverse process, degradation, can also take place in an organization. The stages of declining safety effectiveness are covered in the work mentioned above [8], and we will not discuss them here: let's think positively. After all, our organizations are evolving, aren't they?

Why else would we waste time working for them?

Of course, pathological and reactive cultures can hardly be called cultures in the full sense of the word. There is even a special name for them: negative cultures. This terminology falls into the "no haircut is also a haircut" category. In such organizations, formal and superficial structures that never penetrate real processes may well exist. For example, there may be a quality/safety management system, and dedicated personnel may even be assigned to quality and/or safety control functions. In other words, the organization does appear to allocate some resources, but their real purpose is to formally fulfill (or even merely imitate fulfilling) the requirements of regulators.

However, let's take a closer look at each type of safety culture.

1) Pathological "culture" of safety

The leadership of such an organization treats safety as an external requirement, a kind of hindrance to work. Complying only with the mandatory requirements of regulatory documents is considered sufficient, and there is no willingness to study safety aspects independently.

The management of this type of organization is convinced that all troubles are caused by their subordinates.

In Appendix A we have included a list of some of the signs that the culture of an organization is at the pathological level.

2) Responsive (reactive) safety culture

In the English-language literature this level is called "reactive", and Russian texts often render it with a direct calque, which I do not find very apt.

The management of an organization at this level considers safety an important element of product quality even without pressure from regulators, but believes that all problems lie at the lower levels of the corporate hierarchy. Safety is one of the organization's goals, alongside other performance indicators. The organization begins to apply methods and tools that bring safety up to a certain level, and seeks to learn from the experience of other organizations. When an incident occurs, active measures are taken.

Appendix B lists some signs that an organization's culture is at the reactive level.

3) Calculative safety culture

The management of a calculative organization believes that safety performance must be managed systematically, uses various methods and tools for this, and trains its personnel. An organization with a calculative culture does broadly the right things, but does them mechanically, sometimes following procedures blindly.

Appendix C lists some signs that an organization's culture is at the calculative level.

In the first version of the Westrum model, this type was called bureaucratic.

4) Proactive safety culture

The leadership of a proactive organization regards safety as a fundamental value. Executives at all levels sincerely care about product quality and safety. All employees are fully involved in safety management and consider it their duty to work effectively. Fundamental safety processes are well established, understood and used throughout the organization. Incidents are reported in full. Investigations of problems eliminate systemic defects. Potentially hazardous product defects are treated as critical indicators of product quality.

5) Creative (generative) safety culture

The organization does not need pressure from regulators to ensure safety. It seeks a complete understanding of the conditions and environment in which its products are used. All employees of the organization, as well as contractors, take part in the continuous improvement of safety. Employees are unconsciously competent. People understand how their actions affect safety, and every employee can contribute to the development of the organization. An environment has been created that allows for improvement, with constant knowledge sharing and continuous development of the safety culture. Safety and quality are integrated into everything the organization does.

There is one subtle but fundamental difference between proactive and creative organizations. The bureaucratic, mechanistic style of work at the calculative level is very comfortable for many employees, especially when it is accompanied by success. The temptation to "rest on our laurels" is very strong. As Westrum's colleague Professor Patrick Hudson writes in [11], proactive organizations easily slip back to the calculative level. This is not typical of creative organizations because, as Hudson writes, they have anti-bureaucratic properties, and their speed of action breaks down hierarchical structures.

Development of hardware and software

In discussing organizational culture in general and safety culture levels in particular, we have tried to present the material with the specifics of developing hardware and software for safety systems in mind. Appendix B of GOST R ISO 26262-2 can also serve as a good methodological aid for assessing and self-assessing the safety culture of organizations that develop such components. Here is Table B.1 from this appendix:

When building your own safety culture development program, you can devise measures to eliminate the signs of a poor culture and to cultivate the signs of a good one.

Appendix B of GOST R ISO 26262-2 contains a reference to INSAG-4 [9], a document that has largely laid the foundation for the dissemination of safety culture around the world.

The context of this document is described in detail in [10].

Conclusions

- The key to the safe development of hardware and software components is a strong personal and collective safety culture. A safety culture is part of an organizational culture.

- Culture derives from the competence and discipline of all the organization's personnel, starting with senior management, and from their attitude to their duties.

- A natural sign of a strong culture is a set of well-thought-out, well-understood, actually followed and continuously measured work processes that make up the (quality or safety) management system.

Appendix A. Signs of pathological safety culture

Some signs that the organization is at this level are:

- Nobody deals with safety issues except designated personnel, whose function is to simulate activity for external auditors.

- The management and staff are concerned not so much with safety as with not getting "caught" committing violations.

- Employees hide information about problems, and the leadership does not collect information about the true state of affairs ("the messenger who brings bad news loses his head").

- The staff are unconsciously incompetent, and employees shy away from responsibility ("Just tell me exactly what to do, boss, and I'll do it").

Appendix B. Signs of a reactive safety culture

Some signs that the organization is at this level are:

- Safety activities focus on incidents that have already occurred;

- Most employees are not involved in quality and safety assurance: these tasks are assigned to a separate department or to specific employees;

- Decisions are often made on the basis of cost and technical feasibility;

- Management's response to employee errors is expressed in increased control using administrative procedures and training, and not in finding the culprit;

- The organization is open to learning from other organizations, especially on technical matters and through the transfer of experience;

- Only some of the safety-related processes have been established, or there are many processes, but they are formal or superficial.

- Relations between the organization and inspection bodies, customers, suppliers and contractors are more distant than close.

- Employees are rewarded for achieving short-term goals and for meeting or exceeding the plan, without regard to delayed results and consequences.

- Relations between employees and management are hostile, and trust and respect are only for show.

Appendix C. Signs of a calculative safety culture

Signs that an organization is at this level include the following:

- Safety is not only the responsibility of designated personnel but also of the organization's management. The leadership is "strict but fair."

- The importance and value of safety is well understood by staff.

- Basic processes such as risk assessment and incident analysis are in place and working.

- People are aware of the organization's production and economic issues and help management address them.

- Relations between management and employees are supportive and respectful. People are respected and valued for their contribution to the development of the organization.

However, there is work to do:

- Some information about the state of affairs may be ignored, although "messengers bringing bad news" are tolerated fairly well.

- Responsibility for safety is compartmentalized ("Any complaints about the buttons?"). Interaction between those responsible for different aspects of safety is not prohibited, but it is not encouraged either.

- New ideas create inconvenience and problems.

List of sources used

[1] Bezopasnost i okhrana truda, No. 2, 2017. URL: https://biota.ru/publishing/magazine/bezopasnost-i-oxrana-truda-№2,2017/kultura-bezopasnosti-kak-neotemlemyij-element.html (accessed 20.05.2020)

[2] Jim Collins. Good to Great (Russian edition), 2017.

[3] Alexander Dianin-Havard, 2019. URL: http://hvli.org/upload/files/-2019.pdf (accessed 21.05.2020)

[4] Guy Kawasaki (Russian edition), 2012.

[5] IAEA Safety Glossary, 2007 Edition (Russian version). URL: https://pub.iaea.org/MTCD/publications/PDF/IAEASafetyGlossary2007/Glossary/SafetyGlossary_2007r.pdf (accessed 20.05.2020)

[6] Evidence scan: Measuring safety culture. The Health Foundation, 2011. URL: https://www.health.org.uk/sites/default/files/MeasuringSafetyCulture.pdf (accessed 20.05.2020)

[7] Occupational Safety and Health culture assessment – A review of main approaches and selected tools. European Agency for Safety and Health at Work, 2011. URL: osha.europa.eu/en/publications/occupational-safety-and-health-culture-assessment-review-main-approaches-and-selected (accessed 20.05.2020)

[8] Neftegazstroyprofsoyuz of Russia. URL: https://www.rogwu.ru/content/bl_files_docs/%2004.04.19%20%2014.40%20.pdf (accessed 20.05.2020)

[9] Safety Culture. Safety Series No. 75-INSAG-4, IAEA, Vienna, 1991 (Russian edition). URL: www-pub.iaea.org/MTCD/Publications/PDF/Pub882r_web.pdf (accessed 25.05.2020)

[10] A. V. Mashin. URL: www.helicopter.su/assets/media_sources/ehest-ihts/2016/Safety%20Culture/Article_Rosatom/1%20-%20Safety%20Culture%20Article%20-%20ROSATOM%20-%20Mashin_AV_PSY42.pdf (accessed 25.05.2020)

[11] Patrick Hudson. Safety Management and Safety Culture: The Long, Hard and Winding Road. URL: www.caa.lv/upload/userfiles/files/SMS/Read%20first%20quick%20overview/Hudson%20Long%20Hard%20Winding%20Road.pdf (accessed 21.05.2020)