Almost every laptop and phone now has a camera, so head and eye positions can be tracked with TensorFlow models. In addition, a new SIGGRAPH 2020 paper shows how to build photogrammetric datasets that are well suited to the parallax effect.

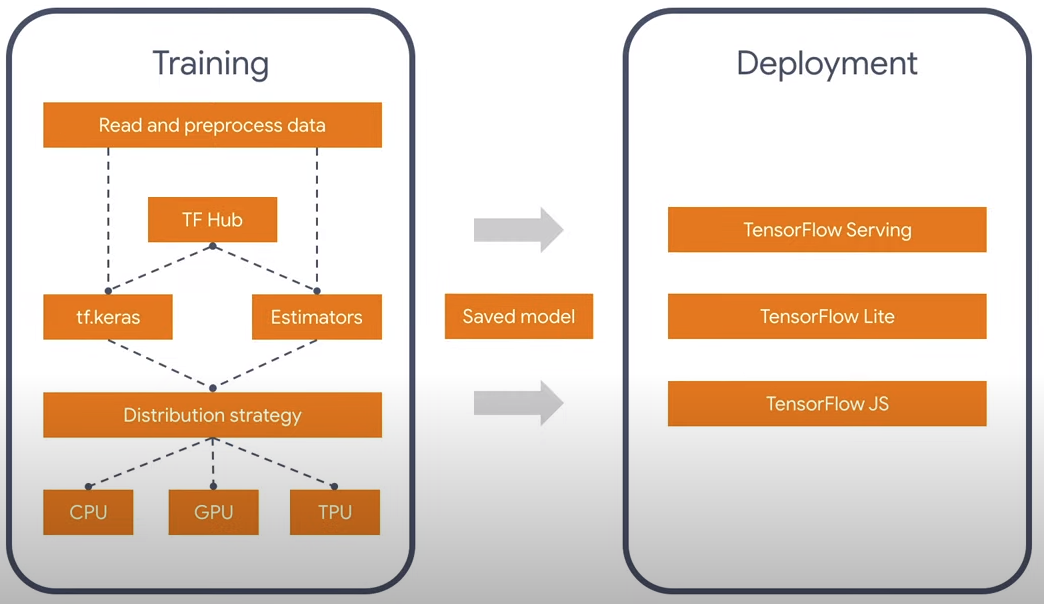

Probably everyone knows TensorFlow, the neural network library that runs in Python and JavaScript. Convolution in neural networks is a fairly heavy computation that parallelizes well, so besides the CPU there are builds for CUDA in Python and for WebGL / WebGPU in JavaScript. Funnily enough, if you don't have an NVIDIA card, the official JavaScript build of TensorFlow will run faster on a PC than the Python one, since there is no official Python build with OpenGL support. Fortunately, TF 2.0 has a modular architecture that lets you focus on the model itself rather than on the language it runs in. There are also converters from 1.0 to 2.0.

There are currently two official face detection models: facemesh and blazeface. The first is better suited to facial expressions and masks; the second is faster and simply returns the face bounding box and key points such as the eyes, ears and mouth. So I took the lightweight one, blazeface: why process information I don't need? In fact, it may be possible to shrink the model even further, since I need nothing but the eye positions.
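A minimal sketch of pulling just the eyes out of blazeface's output. It assumes the output shape described in the blazeface README: `model.estimateFaces(video, false)` resolves to an array of predictions whose `landmarks` field holds six `[x, y]` points, the first two being the right and left eye. The helper name `eyesFromPrediction` and the flat `[xRight, yRight, xLeft, yLeft]` layout are hypothetical choices for illustration.

```javascript
// Hypothetical helper: extract the two eye landmarks from one blazeface
// prediction (as returned by model.estimateFaces(video, false)).
// Assumes landmarks[0] is the right eye and landmarks[1] the left eye.
function eyesFromPrediction(prediction) {
  const [rightEye, leftEye] = prediction.landmarks;
  // Flat layout [xRight, yRight, xLeft, yLeft], in video pixels.
  const eyes = [rightEye[0], rightEye[1], leftEye[0], leftEye[1]];
  // Euclidean distance between the eyes, in video pixels.
  const dist = Math.hypot(leftEye[0] - rightEye[0], leftEye[1] - rightEye[1]);
  return { eyes, dist };
}

// Browser usage (assumes tfjs and blazeface are already loaded):
// const model = await blazeface.load();
// const predictions = await model.estimateFaces(video, false);
// if (predictions.length > 0) {
//   const { eyes, dist } = eyesFromPrediction(predictions[0]);
// }
```

Everything except the two eye points is ignored, which matches the idea of keeping only what the parallax effect needs.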

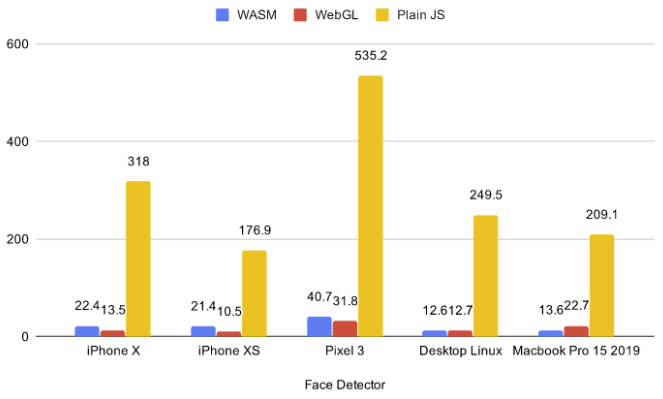

There are currently five backends in the browser: cpu, wasm, webgl, wasm-simd and webgpu. The cpu backend is too slow to be worth using in any case, and the last two are too new: they are still at the proposal stage and only work behind flags, so end users effectively can't use them. That left two options: WebGL and WASM.

Existing benchmarks show that for small models WASM is sometimes faster than WebGL. Parallax can also be combined with 3D scenes, and after running the WASM backend alongside one, I found that WASM works much better there, since discrete laptop graphics cards can't handle the neural network and the 3D scene at the same time. For this test I took a simple scene with 75 globes in .glb format; the demo at the link runs on the WASM backend.
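One way to act on this choice while still degrading gracefully is to try the backends in order of preference. This is a sketch, not the author's code: it relies only on `tf.setBackend(name)`, which resolves to `true` when the backend initializes and `false` otherwise. The function name `pickBackend` is hypothetical, and a backend's script (for example tfjs-backend-wasm) must already be loaded for it to be registered.

```javascript
// Sketch: try backends in order of preference and keep the first one that
// initializes. `tfLib` is the global `tf` object in the browser.
async function pickBackend(tfLib, candidates = ['wasm', 'webgl']) {
  for (const name of candidates) {
    try {
      // tf.setBackend resolves to true on success, false on failure.
      if (await tfLib.setBackend(name)) {
        return name;
      }
    } catch (err) {
      // Treat an exception like a failed initialization and try the next one.
    }
  }
  throw new Error('No TensorFlow.js backend could be initialized');
}

// Browser usage: const backend = await pickBackend(tf);
```

Passing `tf` in as a parameter keeps the function testable without a browser.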

If you followed the link, you probably noticed that the browser asked for permission to use the camera. What happens if the user says no? The sensible thing is to load nothing in that case and fall back to mouse / gyroscope control. I couldn't find ESM builds of tfjs-core and tfjs-converter, so instead of a dynamic import() I settled on a rather creepy construct with the fetchInject library, where the order in which the modules load matters.
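The permission check can be done before any TensorFlow.js code is downloaded. A sketch, assuming the standard `navigator.mediaDevices.getUserMedia` API; `initInput`, `startTracking` and `useFallbackControls` are hypothetical names, and the media devices object is passed in so the logic can run without a browser.

```javascript
// Sketch: ask for the camera first; load the tracking code only on success,
// otherwise switch to mouse / gyroscope control.
async function initInput(mediaDevices, startTracking, useFallbackControls) {
  // mediaDevices is undefined in insecure (non-HTTPS) contexts and old browsers.
  if (!mediaDevices || typeof mediaDevices.getUserMedia !== 'function') {
    useFallbackControls(new Error('getUserMedia is not available'));
    return 'fallback';
  }
  let stream;
  try {
    stream = await mediaDevices.getUserMedia({ video: true });
  } catch (err) {
    // e.g. NotAllowedError when the user declines, NotFoundError with no camera.
    useFallbackControls(err);
    return 'fallback';
  }
  // Only now download tfjs + blazeface and start tracking.
  await startTracking(stream);
  return 'camera';
}
```

In the page this would be called as `initInput(navigator.mediaDevices, startTracking, useFallbackControls)`, where `startTracking` would attach the stream to a `<video>` element (`video.srcObject = stream`) and then load the scripts.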


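Files inside one array are fetched in parallel (Promise.all), and each nested fetchInject call has to finish before the outer one injects its files, so tfjs-core is loaded first, then the converter and the WASM backend, and blazeface last.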

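```javascript
// The innermost call runs first: tfjs-core, then the converter and the
// WASM backend script, then blazeface. Files in one array load in parallel.
fetchInject([
  'https://cdn.jsdelivr.net/npm/@tensorflow-models/blazeface@0.0.5/dist/blazeface.min.js'
], fetchInject([
  'https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-converter@2.0.1/dist/tf-converter.min.js',
  // Inject the backend's JS; the .wasm binary is fetched by the backend itself.
  'https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-backend-wasm@2.0.1/dist/tf-backend-wasm.min.js'
], fetchInject([
  'https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-core@2.0.1/dist/tf-core.min.js'
]))).then(async () => {
  // setWasmPath expects the location of the .wasm binary, not the JS file.
  tf.wasm.setWasmPath('https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-backend-wasm@2.0.1/dist/tfjs-backend-wasm.wasm');
  await tf.setBackend('wasm');
  // some other code
});
```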
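The ordering that the nested fetchInject calls enforce boils down to: load groups one after another, and the files inside a group in parallel. Below is a simplified stand-in written with plain promises, not fetchInject's actual implementation; `loadInOrder` and `injectScript` are hypothetical names.

```javascript
// Hypothetical loader: inject one <script> tag and resolve once it has run.
function injectScript(url) {
  return new Promise((resolve, reject) => {
    const script = document.createElement('script');
    script.src = url;
    script.onload = () => resolve(url);
    script.onerror = () => reject(new Error(`Failed to load ${url}`));
    document.head.appendChild(script);
  });
}

// Load groups strictly one after another; files inside a group are loaded
// in parallel with Promise.all, so they must not depend on each other.
async function loadInOrder(groups, loadOne = injectScript) {
  for (const group of groups) {
    await Promise.all(group.map((url) => loadOne(url)));
  }
}

// Same order as the fetchInject chain above:
// loadInOrder([
//   ['https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-core@2.0.1/dist/tf-core.min.js'],
//   ['https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-converter@2.0.1/dist/tf-converter.min.js',
//    'https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-backend-wasm@2.0.1/dist/tf-backend-wasm.min.js'],
//   ['https://cdn.jsdelivr.net/npm/@tensorflow-models/blazeface@0.0.5/dist/blazeface.min.js'],
// ]);
```

Listing the groups top-down in dependency order reads more naturally than the inside-out nesting, at the cost of not starting later downloads early.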

To estimate the viewer's position, I take the midpoint between the two eyes. By similar triangles, the distance to the head is inversely proportional to the distance between the eyes in the video frame: the farther away the head, the closer together the eyes appear. The eye positions coming from the model are quite noisy, so before doing any calculations I smooth them with an exponential moving average (EMA):

```javascript
pos = (1 - smooth) * pos + smooth * nextPos;
```

Or, equivalently, as two in-place updates:

```javascript
pos *= 1 - smooth;
pos += nextPos * smooth;
```
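The same update applied to every coordinate of the eye array might look like the sketch below. The closure-based `createEma` is an illustrative name, and seeding the average with the first sample (instead of starting from zero) is an assumption the original does not specify. A `smooth` closer to 1 follows the face faster; closer to 0 suppresses more jitter.

```javascript
// Sketch: exponential moving average over an array of coordinates,
// e.g. the flat eye array [xRight, yRight, xLeft, yLeft].
function createEma(smooth) {
  let state = null;
  return function update(next) {
    if (state === null) {
      // Seed with the first sample so the output doesn't start at zero.
      state = next.slice();
    } else {
      for (let i = 0; i < state.length; i++) {
        state[i] *= 1 - smooth;
        state[i] += next[i] * smooth;
      }
    }
    // Return a copy so callers can't mutate the internal state.
    return state.slice();
  };
}
```

For example, with `smooth = 0.5`, feeding `[0, 0]` and then `[10, 20]` twice yields `[0, 0]`, `[5, 10]` and `[7.5, 15]`.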

This gives the coordinates x, y, z in a kind of spherical coordinate system centered on the camera: x and y are bounded by the camera's field of view, and z is the approximate distance from the head to the camera. For small angles, x and y can be treated as the angles themselves.
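To see what the small-angle shortcut costs, compare it with the exact pinhole-camera mapping for the horizontal coordinate. This sketch assumes a horizontal field of view `hfov` in radians, which the original does not give; both function names are hypothetical.

```javascript
// Sketch: exact horizontal angle for a normalized image coordinate
// x in [-1, 1], assuming a pinhole camera with horizontal field of view hfov.
function normalizedToAngle(x, hfov) {
  return Math.atan(x * Math.tan(hfov / 2));
}

// Linear approximation: treat x as proportional to the angle.
function normalizedToAngleApprox(x, hfov) {
  return (x * hfov) / 2;
}
```

With `hfov = Math.PI / 3` (60°), both functions agree at the center and at the edges of the frame, and at `x = 0.5` they differ by roughly one degree (about 16.1° vs 15°).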

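```javascript
// eyes = [xRight, yRight, xLeft, yLeft] in video pixels (smoothed);
// dist = distance between the eyes in pixels;
// defaultDist = eye distance in pixels that maps to z = 1.
pushUpdate({
  x: (eyes[0] + eyes[2]) / video.width - 1,  // midpoint x mapped to [-1, 1]
  y: 1 - (eyes[1] + eyes[3]) / video.width,  // midpoint y, flipped so up is positive
  z: defaultDist / dist                      // distance is inversely proportional to dist
});
```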
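Putting these formulas into a pure function makes the inverse proportionality explicit. Under a pinhole camera model, eyes a real distance E apart at distance Z from a camera with focal length f (in pixels) appear d = f·E / Z pixels apart, so Z = f·E / d. Relative to a reference distance Z0 at which they appear d0 pixels apart, Z / Z0 = d0 / d, which is exactly `defaultDist / dist` with `defaultDist` = d0. A sketch under those assumptions; `eyesToViewpoint` is a hypothetical name and the flat eye layout is assumed.

```javascript
// Sketch: turn (smoothed) eye positions into the normalized x, y, z
// used for the parallax update. defaultDist is a calibration value:
// the eye distance in pixels that should map to z = 1.
function eyesToViewpoint(eyes, videoWidth, defaultDist) {
  const [xr, yr, xl, yl] = eyes;
  // Pinhole camera: pixel distance d = f * E / Z, so Z is proportional to 1 / d.
  const dist = Math.hypot(xl - xr, yl - yr);
  return {
    x: (xr + xl) / videoWidth - 1,
    y: 1 - (yr + yl) / videoWidth,
    z: defaultDist / dist,
  };
}
```

For example, on a 640-pixel-wide frame with `defaultDist = 80`, eyes at `[280, 240, 360, 240]` give `z = 1`, and eyes at `[300, 240, 340, 240]` (half as far apart) give `z = 2`, i.e. twice the reference distance.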

Photogrammetry

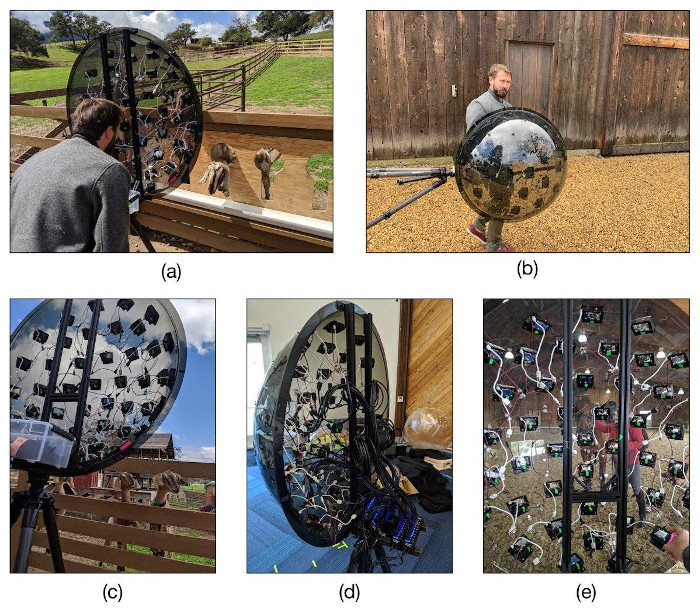

Quite a fun dataset comes from the SIGGRAPH 2020 paper "Immersive light field video with a layered mesh representation". It was captured specifically so that the viewpoint can move within a certain range, which fits the idea of parallax perfectly. See the parallax example.

Here the 3D scene is built from layers, each with its own texture. The rig used to capture the data is just as fun. The authors bought 47 cheap Yi 4K action cameras at 10k rubles each and arranged them on a hemisphere following an icosahedron whose triangular mesh is subdivided threefold. The whole rig was then mounted on a tripod, and the cameras were synchronized for capture.

References

- My GitHub
- Immersive light field video with a layered mesh representation (SIGGRAPH 2020)
- WebGL community on Telegram